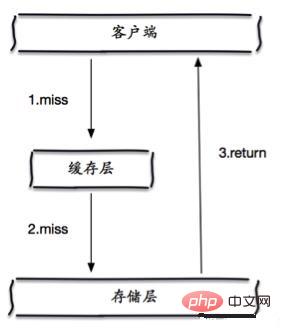

Cache penetration

Cache penetration refers to querying data that definitely does not exist. Because the cache never hits, the query goes to the database. The database finds nothing, so no result is written to the cache, and every later request for that data reaches the database again. This is cache penetration.
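The mechanism can be reproduced with a naive cache-aside read. This is a minimal sketch, assuming Python, with plain dictionaries as hypothetical stand-ins for Redis (`cache`) and the storage layer (`database`); `get` and `query_db` are illustrative names, not a real client API. An existing key costs one database call, while a nonexistent key costs one call per request.

```python
# Hypothetical in-memory stand-ins: `cache` plays the role of Redis and
# `database` the role of the storage layer. Neither is a real client API.
cache = {}
database = {"user:1": "Alice"}
db_calls = 0


def query_db(key):
    """Simulated storage-layer lookup that counts every call."""
    global db_calls
    db_calls += 1
    return database.get(key)


def get(key):
    """Naive cache-aside read: only non-None results are written back."""
    if key in cache:
        return cache[key]
    value = query_db(key)
    if value is not None:  # a miss on a nonexistent key is never cached
        cache[key] = value
    return value


# An existing key hits the database once, then is served from the cache.
for _ in range(3):
    get("user:1")
# A nonexistent key goes to the database on every single request.
for _ in range(3):
    get("user:999")

print(db_calls)  # 4: one call for "user:1", three for "user:999"
```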

Solution

Bloom filter

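Store all possible query parameters in a Bloom filter in hashed form. Each request is checked against the filter at the control layer first; if it does not match, the request is discarded, which spares the underlying storage system the query load. A Bloom filter can return false positives but never false negatives, so a key that really exists is never discarded, while a small fraction of nonexistent keys may still get through.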
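A minimal sketch of the control-layer check, assuming Python, an in-process filter, and illustrative sizes (1024 bits, 3 hash functions). `BloomFilter`, `handle_request`, and the `user:N` keys are hypothetical names; a production system would normally use a dedicated, tuned implementation rather than this one.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter sketch: a bit array plus k hash positions per item.

    The sizes below are illustrative assumptions, not tuned values.
    """

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for clarity

    def _positions(self, item):
        # Derive k positions by salting SHA-256 with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item):
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[pos] for pos in self._positions(item))


# Load every key that can legitimately exist (here: hypothetical user IDs).
existing_keys = BloomFilter()
for user_id in range(1, 101):
    existing_keys.add(f"user:{user_id}")


def handle_request(key):
    """Control-layer check: reject keys the filter says cannot exist."""
    if not existing_keys.might_contain(key):
        return "rejected"  # never reaches the cache or the database
    return "forward to cache/database"


print(handle_request("user:42"))  # an added key is never rejected
```

Because the filter has no false negatives, every key that was added is forwarded; the filter only needs to be rebuilt or extended when new keys become valid.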

Cache empty objects

When the storage layer misses, the empty result is still cached, with an expiration time set. Later accesses to that data are served from the cache, protecting the back-end data source.

But there are two problems with this method:

If null values are cached, the cache needs more space to store more keys, because there may be many keys with null values;

Even if an expiration time is set for null values, the cache layer and the storage layer will still be inconsistent for a period of time (for example, when the data is added to the storage layer while the null value is still cached), which affects businesses that need to maintain consistency.

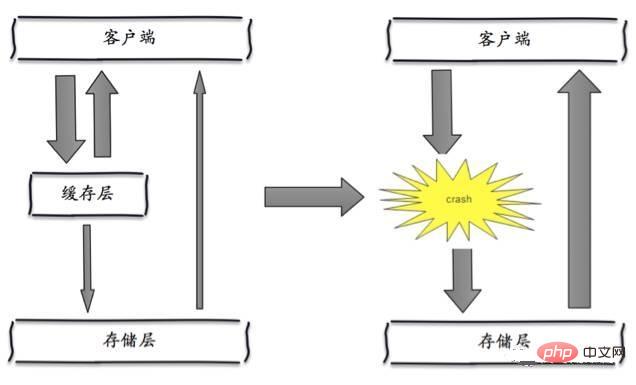

Cache avalanche

Cache avalanche works as follows: because the cache layer carries a large number of requests, it effectively protects the storage layer. However, if the cache layer as a whole fails to provide service for some reason, all requests reach the storage layer, the number of calls to the storage layer increases dramatically, and the storage layer goes down as well.

Solution

Ensure high availability of cache layer services

Even if individual nodes, individual machines, or even an entire data center go down, service can still be provided. For example, Redis Sentinel and Redis Cluster both provide high availability.

Rely on isolation components to rate-limit and degrade back-end traffic

After the cache is invalidated, control the number of threads that read the database and write the cache by locking or queuing. For example, allow only one thread to query the data and write the cache for a given key, while other threads wait.
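A minimal sketch of per-key locking, assuming Python threads in a single process and a dictionary standing in for Redis; `get`, `lock_for`, and `query_db` are hypothetical names. Each key has its own lock, and the cache is re-checked after the lock is acquired, so only the first thread queries the database. Across multiple application servers, a distributed lock (for example one built on Redis `SET` with the `NX` and `PX` options) would be needed instead of `threading.Lock`.

```python
import threading
import time
from collections import defaultdict

cache = {}  # stands in for Redis
database = {"hot:key": "value"}
db_calls = 0
db_calls_lock = threading.Lock()

# One lock per key; the defaultdict itself is guarded by registry_lock.
key_locks = defaultdict(threading.Lock)
registry_lock = threading.Lock()


def query_db(key):
    """Simulated slow storage-layer lookup that counts every call."""
    global db_calls
    with db_calls_lock:
        db_calls += 1
    time.sleep(0.05)  # simulate a slow query
    return database.get(key)


def lock_for(key):
    with registry_lock:
        return key_locks[key]


def get(key):
    value = cache.get(key)
    if value is not None:
        return value
    with lock_for(key):          # only one thread rebuilds this key
        value = cache.get(key)   # re-check: another thread may have filled it
        if value is None:
            value = query_db(key)
            if value is not None:
                cache[key] = value
    return value


# Twenty concurrent requests for the same expired key.
threads = [threading.Thread(target=get, args=("hot:key",)) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(db_calls)  # 1
```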

Data preheating

You can use the cache reload mechanism to update the cache in advance: before heavy concurrent access occurs, manually trigger loading of the different keys into the cache and give them different expiration times, so that cache invalidation is spread out as evenly as possible.
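A minimal sketch of preheating with randomized expiration times, assuming Python and a dictionary standing in for Redis. `BASE_TTL`, `MAX_JITTER`, `load_from_db`, and `preheat` are hypothetical, and the one-hour base with up to ten minutes of jitter is an illustrative choice. In Redis, each key would typically be written with `SET` and the computed TTL via the `EX` option.

```python
import random

BASE_TTL = 3600      # illustrative base expiration, in seconds (assumption)
MAX_JITTER = 600     # illustrative spread, in seconds (assumption)

cache = {}  # key -> (value, ttl_seconds); stands in for Redis


def load_from_db(key):
    # Hypothetical loader standing in for the real storage layer.
    return f"value-of-{key}"


def preheat(keys, rng=random):
    """Load keys before peak traffic, each with a randomized TTL."""
    for key in keys:
        # randint is inclusive on both ends: TTL in [BASE_TTL, BASE_TTL + MAX_JITTER]
        ttl = BASE_TTL + rng.randint(0, MAX_JITTER)
        cache[key] = (load_from_db(key), ttl)


preheat([f"product:{i}" for i in range(1000)], rng=random.Random(42))
ttls = [ttl for _, ttl in cache.values()]
print(min(ttls), max(ttls))
```

The jitter keeps keys loaded at the same moment from all expiring at the same moment, which is exactly the burst that would otherwise hit the storage layer at once.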


The above is the detailed content of "How to solve Redis avalanche and penetration" from the PHP Chinese website.