The scrapy workflow

The process can be described as follows:

● The scheduler sends a request --> engine --> downloader middleware --> downloader

● The downloader sends the request and gets a response --> downloader middleware --> engine --> spider middleware --> spider

● The spider extracts url addresses and assembles them into request objects --> spider middleware --> engine --> scheduler

● The spider extracts data --> engine --> pipeline

● The pipeline processes and saves the data
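The flow above can be sketched in plain Python. This is a toy model under stated assumptions, not scrapy's actual implementation: both middleware layers are left out, a request is just a url string, and downloader, spider_parse, pipeline and engine are hypothetical names chosen for illustration.

```python
from collections import deque


def downloader(request):
    # Stand-in for the real downloader: fabricate a response for the url.
    return {"url": request, "body": f"<page {request}>"}


def spider_parse(response):
    # The spider yields extracted data (a dict) and follow-up requests (a str).
    yield {"source": response["url"], "length": len(response["body"])}
    if response["url"] == "page/1":
        yield "page/2"


def pipeline(item, saved):
    # Process and save the data; here "saving" is appending to a list.
    saved.append(item)
    return item


def engine(start_requests):
    scheduler = deque(start_requests)
    saved = []
    while scheduler:
        request = scheduler.popleft()      # scheduler -> engine -> downloader
        response = downloader(request)     # downloader -> engine -> spider
        for result in spider_parse(response):
            if isinstance(result, str):
                scheduler.append(result)   # request -> engine -> scheduler
            else:
                pipeline(result, saved)    # data -> engine -> pipeline
    return saved
```

Calling engine(["page/1"]) processes page/1, follows the request for page/2 that the spider yields, and returns the two saved items. Every hand-off goes through the engine function, which mirrors the point that the modules only talk to the engine.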


Note:

The green lines in the picture show the flow of data

Pay attention to where each middleware sits in the picture; its position determines its role

Pay attention to the position of the engine: the modules are independent of one another and interact only with the engine

The specific role of each module in scrapy

1. Implementation process of a scrapy project

Create a scrapy project: scrapy startproject <project name>

Generate a spider: scrapy genspider <spider name> <allowed domain>

Extract data: improve the spider, using xpath and other methods

Save data: save the data in a pipeline

2. Create a scrapy project

Command: scrapy startproject <project name>

Example: scrapy startproject myspider

The generated directories and files are as follows:

Key settings in settings.py and their meanings

● USER_AGENT Sets the User-Agent string sent with requests

● ROBOTSTXT_OBEY Whether to comply with the robots protocol, the default is

● CONCURRENT_REQUESTS Sets the maximum number of concurrent requests, the default is 16

● DOWNLOAD_DELAY Delay in seconds between consecutive requests to the same website, the default is 0 (no delay)

● COOKIES_ENABLED Whether to enable cookies, that is, whether each request carries the cookies received earlier, enabled by default

● DEFAULT_REQUEST_HEADERS Sets the default request headers

● SPIDER_MIDDLEWARES Spider middleware, enabled in the same way as pipelines

● DOWNLOADER_MIDDLEWARES Downloader middleware
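As a rough illustration, a settings.py fragment using these fields might look like the sketch below. The values are examples chosen for this sketch rather than recommendations, the User-Agent string is made up, and the middleware paths assume a project named myspider whose middlewares.py defines classes with these names.

```python
# settings.py (fragment) for a hypothetical project named "myspider"

USER_AGENT = "myspider (+https://example.com)"  # made-up UA string

ROBOTSTXT_OBEY = True        # value chosen for this sketch
CONCURRENT_REQUESTS = 16     # maximum number of concurrent requests
DOWNLOAD_DELAY = 0           # seconds between requests to the same site
COOKIES_ENABLED = True

DEFAULT_REQUEST_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en",
}

# Keys are import paths (assumed class names), values are order numbers.
SPIDER_MIDDLEWARES = {
    "myspider.middlewares.MyspiderSpiderMiddleware": 543,
}
DOWNLOADER_MIDDLEWARES = {
    "myspider.middlewares.MyspiderDownloaderMiddleware": 543,
}
```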

Create a spider

Command: scrapy genspider <spider name> <allowed domain>

The generated directories and files are as follows:

Improve the spider

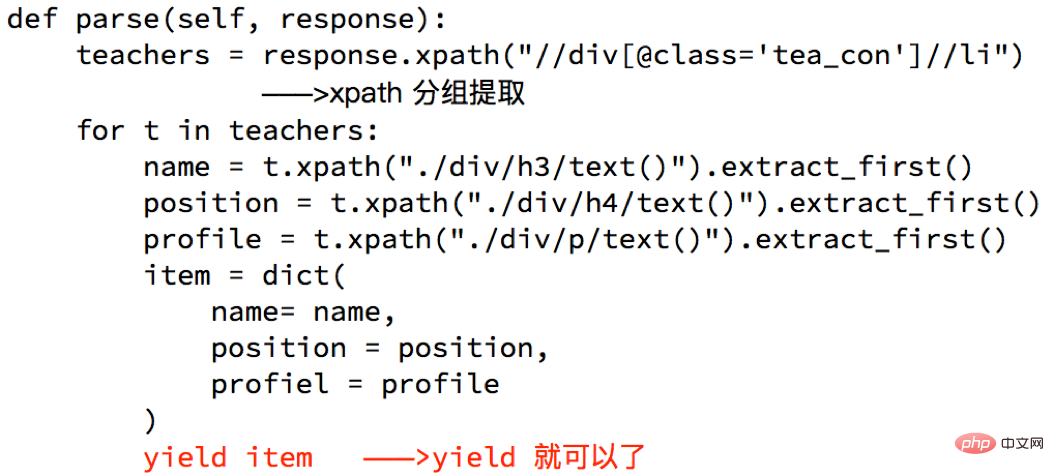

Improving the spider means extracting data with xpath and other methods:

Note:

● response.xpath() returns a list-like object that contains selector objects. It behaves like a list but has some additional methods

● extract() returns a list of strings

● extract_first() returns the first string in the list, or None if the list is empty

● The spider must define a parse method; it is the default callback for the responses to start_urls

● Every url address to be crawled must belong to allowed_domains, but the url addresses in start_urls are not subject to this restriction

● When starting the spider, pay attention to where you start it: it must be run from the project path

Pass the data to the pipeline

Why use yield?

● What is the benefit of turning the whole function into a generator?

● When the return value of the function is traversed, the data is read into memory one item at a time, so memory usage does not spike all at once

● range in python3 behaves like xrange in python2: both produce values lazily instead of building the whole list first
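The memory argument can be seen in a small, scrapy-independent sketch. The two function names are made up for illustration; the first builds the whole result up front, the second hands out one value per iteration.

```python
def squares_as_list(n):
    # Builds every value first; the whole list sits in memory at once.
    return [i * i for i in range(n)]


def squares_as_generator(n):
    # Computes one value per iteration; nothing runs until a value is requested.
    for i in range(n):
        yield i * i


gen = squares_as_generator(1_000_000)
first = next(gen)    # only the first value has been computed so far
second = next(gen)   # the second value is computed on demand
```

A parse method that uses yield works the same way: the engine pulls items and requests from it one at a time instead of waiting for a complete list.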

Note:

A spider callback can only yield objects of these types: BaseItem, Request, dict, None

6. Improve the pipeline

Pipelines are enabled in settings. Why would you enable multiple pipelines?

● Different pipelines can process data from different crawlers

● Different pipelines can perform different data processing operations, such as one for data cleaning and one for data storage

Points to note when using pipelines

● A pipeline must be enabled in settings before it can be used

● In the pipeline setting, the key is the location of the pipeline class (its import path, so the pipeline can be placed anywhere in the project), and the value is its distance from the engine: the smaller the value, the earlier the data passes through that pipeline

● When there are multiple pipelines, the process_item method must return the item, otherwise the next pipeline receives None

● Each pipeline must define a process_item method, otherwise it cannot receive and process items

● The process_item method accepts item and spider, where spider is the spider that passed the item
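The points above can be put together in a short sketch. It shows a settings.py fragment and a pipelines.py fragment in one block, assuming a hypothetical project named myspider; CleanPipeline, SavePipeline, the name field and the run_pipelines helper are invented for illustration, and run_pipelines only imitates the order in which scrapy would call the pipelines.

```python
# settings.py (fragment): key = import path, value = order (lower runs first)
ITEM_PIPELINES = {
    "myspider.pipelines.CleanPipeline": 300,
    "myspider.pipelines.SavePipeline": 400,
}


# pipelines.py (fragment)
class CleanPipeline:
    def process_item(self, item, spider):
        item["name"] = item["name"].strip()   # data cleaning
        return item                           # pass the item to the next pipeline


class SavePipeline:
    def __init__(self):
        self.saved = []                       # stand-in for a file or database

    def process_item(self, item, spider):
        self.saved.append(dict(item))         # data storage
        return item


def run_pipelines(item, pipelines, spider=None):
    # Hypothetical helper: feed the item through each pipeline in order.
    for pipeline in pipelines:
        item = pipeline.process_item(item, spider)
    return item
```

If CleanPipeline forgot its return statement, SavePipeline would receive None instead of the item, which is the failure described in the notes above.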
