word2vec principle

Mapping a word into a new space and representing it as a multi-dimensional vector of continuous real numbers is called "word representation" or "word embedding".

Since the beginning of the 21st century, word vectors have gradually shifted from the original sparse representations to today's dense representations in a low-dimensional space.

Sparse representations often run into the curse of dimensionality in practical problems. They also cannot encode semantic information or reveal latent relationships between words.

Low-dimensional dense representations not only avoid the curse of dimensionality, but also capture relationships between words, which makes the vectors more semantically accurate.
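The contrast between the two representations can be illustrated with a small sketch. The three-word vocabulary and the hand-picked 2-dimensional dense vectors below are assumptions made for illustration only; they are not trained embeddings.

```python
import math

def one_hot(index, size):
    """Return a sparse one-hot vector with a single 1 at position `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Toy vocabulary (assumption): one dimension per word in the sparse case.
vocab = ["search", "engine", "meeting"]
sparse = {w: one_hot(i, len(vocab)) for i, w in enumerate(vocab)}

# Hypothetical dense vectors, chosen by hand so that "search" and "engine"
# point in similar directions. Real embeddings would be learned from data.
dense = {
    "search": [0.9, 0.1],
    "engine": [0.8, 0.3],
    "meeting": [-0.2, 0.9],
}

# Any two distinct one-hot vectors are orthogonal, so similarity is always 0.
sparse_sim = cosine(sparse["search"], sparse["engine"])

# Dense vectors can place related words closer together than unrelated ones.
dense_related = cosine(dense["search"], dense["engine"])
dense_unrelated = cosine(dense["search"], dense["meeting"])

print(sparse_sim, dense_related, dense_unrelated)
```

With one-hot vectors, every pair of different words looks equally unrelated, and the vector length grows with the vocabulary. Dense vectors keep the dimension fixed and let similarity vary from pair to pair.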

word2vec learning tasks

Suppose we have the following sentence: Today at 2 o'clock in the afternoon, the search engine group will hold a group meeting.

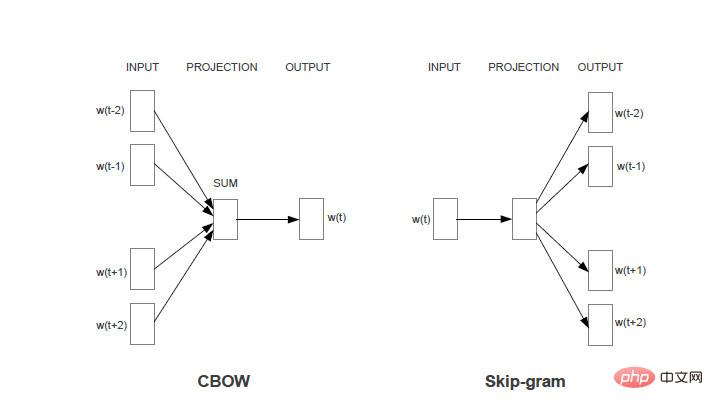

Task 1: For each word, use the surrounding words to predict the probability of generating the current word. For example, use "today, afternoon, search, engine, group" to generate "2 o'clock".

Task 2: For each word, use the word itself to predict the probability of generating each of the surrounding words. For example, use "2 o'clock" to generate each word in "today, afternoon, search, engine, group".
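The two tasks differ only in which side is the input and which is the target. The sketch below builds training examples for both. The token list and the window size of 3 are assumptions, chosen so that the context of "2 o'clock" matches the example above. The function names are hypothetical.

```python
def context_of(tokens, i, window):
    """Words within `window` positions of index i, excluding tokens[i]."""
    left = tokens[max(0, i - window):i]
    right = tokens[i + 1:i + 1 + window]
    return left + right

def task1_pairs(tokens, window):
    """Task 1: (surrounding words, current word) examples."""
    return [(context_of(tokens, i, window), tokens[i])
            for i in range(len(tokens))]

def task2_pairs(tokens, window):
    """Task 2: (current word, one surrounding word) examples."""
    return [(tokens[i], c)
            for i in range(len(tokens))
            for c in context_of(tokens, i, window)]

# Assumed segmentation of the example sentence (illustrative only).
tokens = ["today", "afternoon", "2 o'clock", "search",
          "engine", "group", "hold", "meeting"]

task1 = task1_pairs(tokens, window=3)
task2 = task2_pairs(tokens, window=3)

# Example for the word "2 o'clock" (index 2 in the token list).
print(task1[2])
print([pair for pair in task2 if pair[0] == "2 o'clock"])
```

Task 1 produces one example per position with several input words, while Task 2 produces one example per (word, neighbour) pair.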

Both tasks share one constraint: for a given input, the probabilities of all possible output words sum to 1.
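One common way to satisfy this constraint is to turn a raw score for each vocabulary word into a probability with the softmax function. The text above does not name the function, so treat the choice of softmax and the example scores below as assumptions.

```python
import math

def softmax(scores):
    """Map arbitrary real scores to positive values that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores, one per word in a 4-word vocabulary.
scores = [2.0, 0.5, -1.0, 0.0]
probs = softmax(scores)

print(probs)
print(sum(probs))
```

Higher scores receive higher probabilities, and the normalization by the total keeps the outputs a valid distribution for any input.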

The word2vec model uses machine learning to improve the accuracy of the tasks above. The two tasks correspond to two models: CBOW for Task 1 and skip-gram for Task 2. Unless otherwise specified, the analysis below uses CBOW.

The skip-gram model can be analyzed in the same way.
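As a rough sketch of what a CBOW-style model computes for Task 1, the code below averages the input vectors of the context words, scores every vocabulary word against that average, and normalizes the scores with softmax. The vocabulary, the dimension of 4, the random untrained weights, and all names are assumptions for illustration. Training, which would adjust the weights to reduce the loss, is omitted.

```python
import math
import random

random.seed(0)  # fixed seed so the untrained weights are reproducible

vocab = ["today", "afternoon", "2 o'clock", "search", "engine", "group"]
word_to_id = {w: i for i, w in enumerate(vocab)}
dim = 4  # embedding dimension (assumption)

# Input and output vectors for every word, randomly initialised (untrained).
W_in = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]
W_out = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]

def cbow_probs(context_words):
    """Probability of each vocabulary word given the context words."""
    ids = [word_to_id[w] for w in context_words]
    # Average the input vectors of the context words.
    hidden = [sum(W_in[i][d] for i in ids) / len(ids) for d in range(dim)]
    # Score each candidate word by a dot product with its output vector.
    scores = [sum(h * o for h, o in zip(hidden, row)) for row in W_out]
    # Softmax so the probabilities over the vocabulary sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = cbow_probs(["today", "afternoon", "search", "engine", "group"])

# Negative log-likelihood of the true current word; training would lower it.
loss = -math.log(probs[word_to_id["2 o'clock"]])
print(probs, loss)
```

A skip-gram version would take the vector of "2 o'clock" as `hidden` directly and compute the loss against each surrounding word in turn.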
