Database

Database

Mysql Tutorial

Mysql Tutorial

How MySQL uses sharding to solve the storage problem of 50 billion data

How MySQL uses sharding to solve the storage problem of 50 billion data

How MySQL uses sharding to solve the storage problem of 50 billion data

This is a technical study of how we split data across multiple MySQL servers. We completed this sharding approach in early 2012, and it's still the system we use to store core data today.

Before we discuss how to split the data, let’s first understand our data. Mood lighting, chocolate covered strawberries, Star Trek quotes...

Pinteres is the discovery engine for everything that interests you. From a data perspective, Pinterest is the largest collection of human interest images in the world. There are over 50 billion Pins saved by Pinners across 1 billion boards. Users Pin again, like other people's Pins (roughly speaking, a shallow copy), follow other Pinners, boards, and interests, and then view all of the subscribed Pinners' posts on the Home Page. Very good! Now let it scale up!

Growing Pains

In 2011 we had success. In some evaluation reports, we are growing much faster than other startups. In September 2011, every one of our basic equipment was exceeded. We applied a number of NoSQL technologies, all of which resulted in disastrous results. At the same time, the large number of MySQL slave servers used for reading created a lot of annoying bugs, especially caching. We restructured the entire data storage model. To make this effective, we carefully formulate our requirements.

Business Requirements

All our systems need to be very stable, easy to operate and easy to expand. We hope that the supported database can start with a small storage capacity and be able to expand as the business develops.

All Pin-generated content must be readily accessible on the site.

Supports requesting access to N Pins to be displayed in the artboard in a certain order (such as according to the time of creation, or in a user-specific order). Liked Pin friends and the Pin list of Pin friends can also be displayed in a specific order.

For the sake of simplicity, updates generally ensure the best results. To get eventual consistency, you need something extra like a distributed transaction log. This is an interesting and (not) simple thing.

Solution ideas and key points

The solution requires distributing massive data slices to multiple database instances, and cannot use connections, foreign keys, or connections of relational databases. Indexing and other methods integrate the entire data. If you think about it, correlated subqueries cannot span different database instances.

Our solution requires load balancing data access. We hate data migration, especially record-by-record migration, which is very error-prone and adds unnecessary complexity to the system due to the complexity of relationships. If data must be migrated, it is best to migrate the entire set of logical nodes.

In order to achieve reliable and rapid implementation of the solution, we need to use the easiest to implement and most robust technical solution on our distributed data platform.

All data on each instance will be completely copied to a slave instance as a data backup. We are using S3, a highly available MapReduce (distributed computing environment). Our front-end business logic accesses background data and only accesses the main instance of the database. Never give your front-end business read and write access to slave instances. Because there is a delay in data synchronization with the master instance, it will cause inexplicable errors. Once the data is sliced and distributed, there is no reason for your front-end business to read and write data from the slave instance.

Finally, we need to carefully design an excellent solution to generate and parse the globally unique identifier (UUID) of all our data objects.

Our slicing solution

No matter what, we need to design a data distribution solution that meets our needs, is robust, has good performance and is maintainable. In other words, it cannot be naive (without extensive validation). Therefore, our basic design is built on MySQL, see we chose a mature technology. At the beginning of the design, we would naturally shy away from those database products that claim to have the new capabilities of auto-scaling, such as MongoDB, Cassandra and Membase, because they seem to be simple to implement but have poor applicability (often An inexplicable error occurred causing a crash).

Narration: It is strongly recommended to start with the basics and avoid the trendy and new stuff - learn and use MySQL well in a down-to-earth manner. Believe me, every word is filled with tears.

MySQL is a mature, stable and easy-to-use relational database product. Not only do we use it, but many well-known large companies also use it as back-end data support to store massive amounts of data. (Annotation: About a few years ago, MySQL was acquired by Oracle along with SUN and came under the name of Oracle. Many companies, such as Google, Facebook, etc., were worried about MySQL’s open source issues and switched to another one developed by the original author of MySQL. The open source database MariaDB (under) MySQL supports our technical requirements for sequential data requests to the database, querying specified range of data and transaction processing at the row (record) level. MySQL has a bunch of features, but we don't need those. Since MySQL itself is a monolithic solution, we have to slice our data. (Annotation: The meaning here is that a single instance manages massive amounts of data, which will inevitably cause performance problems. Now slicing a massive overall data into individual data sets requires a powerful technical solution to separate each individual data set. The monoliths are integrated into a whole, improving performance without going wrong) The following is our design plan:

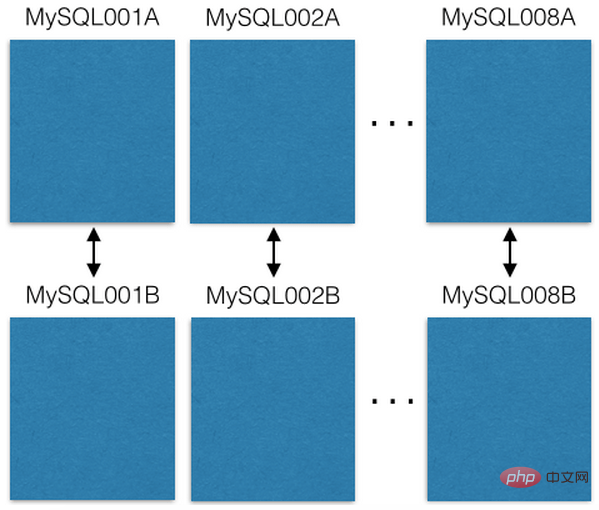

We started with 8 EC2 servers, each server running a MySQL instance:

Each MySQL server uses master-master replicated to a redundant host for disaster recovery. Our front-end business only reads/writes data from the main service instance. I recommend you do the same, it simplifies many things and avoids delayed glitches. (Annotation: Master-master replicated is a function provided by the MySQL database itself. It refers to a mode in which two machines backup each other. Compared with other modes, such as master-slave backup, the data of the two machines are completely consistent. Background synchronization, each machine has its own independent IP and can be accessed concurrently for read/write access. However, the author of the original article has repeatedly emphasized that although the two machines are mutually redundant and use primary-primary backup, both can be accessed. But you logically Distinguish between master and slave, always read/write from one of them. For example, as shown in the figure, there is a master-master backup between MySQL001A and MySQL001B, but you only have read/write access from MySQL001A. Another: They used 16 machines , the other 8 slave machines may not be EC2 or not)

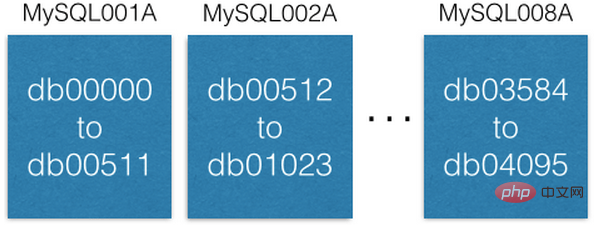

Each MySQL instance can have multiple databases:

Note that each database is How to uniquely name db00000, db00001, until dbNNNN. Each database is a shard of our database. We made a design so that once a piece of data is allocated to a shard, it will not be moved out of that shard. However, you can get more capacity by moving shards to other machines (we'll discuss this later).

We maintain a configuration database table, which records which machine the slice database is on:

[

{“range”: (0,511), “master”: “MySQL001A”, “slave”: “MySQL001B”},

{“range”: (512, 1023), “master”: “MySQL002A”, “slave”: “MySQL002B”},

...

{“range”: (3584, 4095), “master”: “MySQL008A”, “slave”: “MySQL008B”}

]This configuration table is only modified when the slice database is migrated or the host is replaced. For example, if a master instance host goes down, we will promote its slave instance host to the master instance and then replace it with a new machine as the slave instance host as soon as possible. The configuration script is retained on ZooKeeper. When the above modifications occur, the script is sent to the machine that maintains the slicing service for configuration changes. (Annotation: You can find the advantage that the original author has always emphasized that the front-end business only reads and writes data from the logical master instance).

Each slice database maintains the same database table and table structure, such as pins, boards, users_has_pins, users_likes_pins, pin_liked_by_user and other database tables. Build synchronously at deployment time.

Design plan for distributing data to slice servers

We combine the slice ID (shard ID), data type identifier and local ID (local ID) to form a 64-bit globally unique Identification (ID). The slice ID (shard ID) occupies 16 bits (bit), the data type identifier occupies 10 bits (bit), and the local ID (local ID) occupies 36 bits (bit). Anyone with a discerning eye will immediately notice that this is only 62 bits. My past experience in distributing and integrating data tells me that retaining a few for expansion is priceless. Therefore, I kept 2 bits (set to 0). (Annotation: Let me explain here. According to the following operations and explanations, the unique identification ID of any object is 64 bits, the highest 2 bits are always 0, followed by a 36-bit local identification, followed by a 10-bit type identification, and finally a 16-bit The slice identifier. The local identifier can represent 2^36 up to more than 60 billion IDs. The data type can represent 2^10 up to 1024 object types, and the slice identifier can be subdivided into 2^16 up to 65536 slice databases. The solution mentioned above Cut 4096 slice database)

ID = (shard ID << 46) | (type ID << 36) | (local ID<<0) 以 Pin: https://www.pinterest.com/pin/241294492511... 为例,让我们解构这个 Pin 对象的 全局 ID 标识 241294492511762325 : Shard ID = (241294492511762325 >> 46) & 0xFFFF = 3429 Type ID = (241294492511762325 >> 36) & 0x3FF = 1 Local ID = (241294492511762325 >> 0) & 0xFFFFFFFFF = 7075733

It can be seen that this Pin object is in 3429 slice database. Assume that the Pin object data type identifier is 1, and its record is in the 7075733 record row in the pin data table in the 3429 slice database. For example, assuming that the slice 3429 database is in MySQL012A, we can use the following statement to get its data record: (Annotation: The original author here is giving a general example. According to the previous example, 3429 should be on MySQL007A)

conn = MySQLdb.connect(host=”MySQL012A”) conn.execute(“SELECT data FROM db03429.pins where local_id=7075733”)

有两种类型的数据:对象或关系。对象包含对象本身细节。 如 Pin 。

存储对象的数据库表

对象库表中的每个记录,表示我们前端业务中的一个对象,诸如:Pins(钉便签), users(用户),boards(白板)和 comments(注释),每个这样的记录在数据库表中设计一个标识 ID 字段(这个字段在表中作为记录的 自增主键「auto-incrementing primary key」 ,也就是我们前面提到的 局部 ID「 local ID」 ),和一个 blob 数据字段 -- 使用 JSON 保存对象的具体数据 --。

CREATE TABLE pins ( local_id INT PRIMARY KEY AUTO_INCREMENT, data TEXT, ts TIMESTAMP DEFAULT CURRENT_TIMESTAMP ) ENGINE=InnoDB;

举例,一个 Pin 对象形状如下:

{“details”: “New Star Wars character”, “link”: “http://webpage.com/asdf”, “user_id”: 241294629943640797, “board_id”: 241294561224164665, …}创建一个 Pin 对象,收集所有的数据构成 JSON blob 数据。然后,确定它的 切片 ID「 shard ID」 (我们更乐意把 Pin 对象的切片数据放到跟其所在 白板「 board」 对象相同的切片数据库里,这不是强制设计规则)。Pin 对象的数据类型标识为 1。连接到 切片 ID 指示的切片数据库,插入(insert)Pin 对象的 JOSON 数据到 Pin 对象数据库表中,MySQL 操作成功后将会返回 自增主键「auto-incrementing primary key」 给你,这个作为此 Pin 对象的 局部 ID「 local ID」。现在,我们有了 shard 、类型值、local ID 这些必要信息,就可以构建出此 Pin 对象的 64 位 ID 。(译注:原作者提到的,他们的前端业务所用到的每种对象都保存在一个对象数据库表里,每个对象记录都通过一个全局唯一 ID 去找到它,但这个全局唯一 ID 并不是数据库表中的 局部 ID,由于切片的缘故。原作者一直在讲这个设计及其原理。这样设计的目的为了海量数据切片提高性能,还要易用,可维护,可扩展。后面,作者会依次讲解到)

编辑一个 Pin 对象,使用 MySQL 事务「transaction」 在 Pin 对象的数据记录上 读出 -- 修改 -- 写回「read-modify-write」 Pin 对象的 JOSON 数据字段:

> BEGIN > SELECT blob FROM db03429.pins WHERE local_id=7075733 FOR UPDATE [修改 json blob] > UPDATE db03429.pins SET blob=’<修改后的 blob>’ WHERE local_id=7075733 > COMMIT

编辑一个 Pin 对象,您当然可以直接删除这个对象在 MySQL 数据库表中的数据记录。但是,请仔细想一下,是否在对象的 JSON 数据上加个叫做「 active」的域,把剔除工作交由前端中间业务逻辑去处理或许会更好呢。

(译注:学过关系数据库的应知道,自增主键在记录表中是固实,在里面删除记录,会造成孔洞。当多了,势必造成数据库性能下降。数据库只负责保存数据和高性能地查询、读写数据,其数据间的关系完全靠设计精良的对象全局 ID 通过中间件逻辑去维护 这样的设计理念一直贯穿在作者的行文中。只有理解了这点您才能抓住这篇文章的核心)

关系映射数据库表

关系映射表表示的是前端业务对象间的关系。诸如:一个白板(board)上有哪些钉便签(Pin), 一个钉便签(Pin)在哪些白板(board)上等等。表示这种关系的 MySQL 数据库表包括 3 个字段:一个 64 位的「from」ID, 一个 64 位的「to」ID 和一个顺序号。每个字段上都做索引方便快速查询。其记录保存在根据「from」字段 ID 解构出来的切片 ID 指示出的切片数据库上。

CREATE TABLE board_has_pins ( board_id INT, pin_id INT, sequence INT, INDEX(board_id, pin_id, sequence) ) ENGINE=InnoDB;

(译注:这里的关系映射指前端业务对象间的关系用数据库表来运维,并不指我上节注释中说到的关系数据库的关系映射。作者开篇就讲到,由于切片,不能做关系数据库表间的关系映射的,如一对一,一对多,多对多等关系关联)

关系映射表是单向的,如 board_has_pins(板含便签)表方便根据 board (白板)ID 查询其上有多少 Pin(钉便签)。若您需要根据 Pin(钉便签)ID 查询其都在哪些 board(白板)上,您可另建个表 pin_owned_by_board(便签属于哪些白板)表,其中 sequence 字段表示 Pin 在 board 上的顺序号。(由于数据分布在切片数据库上,我们的 ID 本身无法表示其顺序)我们通常将一个新的 Pin 对象加到 board 上时,将其 sequence 设为当时的系统时间。sequence 可被设为任意整数,设为当时的系统时间,保证新建的对象的 sequence 总是大于旧对象的。这是个方便易行的方法。您可通过下面的语句从关系映射表中查询对象数据集:

SELECT pin_id FROM board_has_pins WHERE board_id=241294561224164665 ORDER BY sequence LIMIT 50 OFFSET 150

语句会查出 50 个 pin_ids(便签 ID ), 随后可用这些对象 ID 查询其具体信息。

We only map these relationships at the business application layer, such as board_id -> pin_ids -> pin objects (from whiteboard ID -> Note IDs -> Note objects). A great feature of this design is that you can cache these relational map pairs separately. For example, we cache the pin_id -> pin object (note ID -> note object) relationship mapping on the memcache (memory cache) cluster server, and the board_id -> pin_ids (whiteboard ID -> note IDs) relationship mapping cache on redis on the cluster server. In this way, it can be very suitable for our optimization caching technology strategy.

Increase service capabilities

In our system, there are three main ways to improve service processing capabilities. The easiest thing is to upgrade the machine (larger space, faster hard disk speed, more memory, whatever upgrade can solve the system bottleneck)

Another way is to expand the slicing range. Initially, we designed only to slice 4096 databases. Compared with the 16-bit slice ID we designed, there is still a lot of space, because 16 bits can represent 65536 numbers. At some point in time, if we provide 8 more machines to run 8 MySQL database instances and provide slicing databases from 4096 to 8192, then new data will only be stored in the slicing databases in this range. There are 16 parallel computing databases, and service capabilities will inevitably improve.

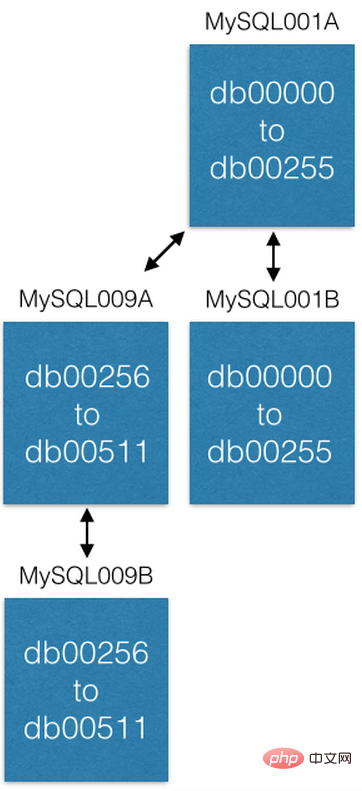

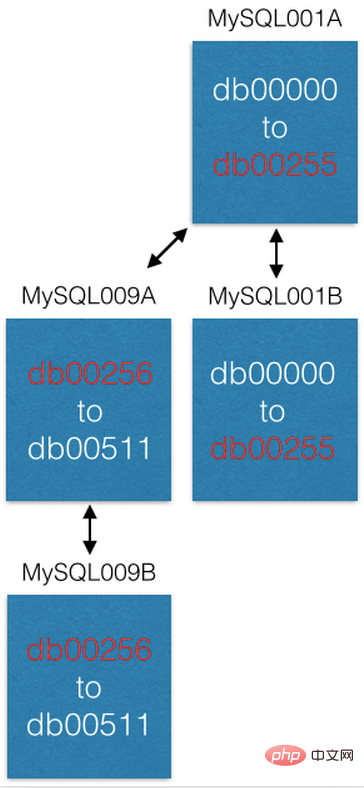

The last way is to migrate the slicing database host to a new slicing host (local slicing expansion) to improve capabilities. For example, we want to expand and distribute the MySQL001A slicing host in the previous example (on which are the slicing databases numbered from 0 to 511) to 2 slicing hosts. As we designed, we create a new master-master mutual backup host pair as the new slice hosts (named MySQL009A and B) and fully copy the data from MySQL001A.

After the data copy is completed, we modify the slicing configuration. MySQL001A is only responsible for the slicing database from 0 to 255, and MySQL009A is only responsible for the slicing database from 256 to 511. Now each of the two hosts is only responsible for half of the tasks that the previous host was responsible for, and the service capability has been improved.

Some feature descriptions

For the business object data generated by the old system, they must be generated for the business objects according to the design With UUIDs in the new system, you should realize that where they go (which tile database) you decide is up to you. (Annotation: You can plan the distribution of old data on the slice database) However, when it is put into the slice database, only when inserting a record, the database will return the local ID of the inserted object. With this, the UUID of the object can be constructed. .

(Annotation: When migrating, you must consider the establishment of relationships between business objects through UUID)

For those database tables that already have a large amount of data, I have used the table structure modification command. (ALTERs) -- like adding a field -- you know that's a very long and painful process. Our design is to never use ALTERs level commands on MySQL (when data is already available). On our business system, Pinterest, the last ALTER statement we used was about 3 years ago. For objects in the object table, if you need to add an object attribute field, you add it to the JOSON blob field of the object data. You can set a default value for the new object's properties. When accessing the data of the old object, if the old object does not have new properties, you can add a new property default value to it. For relational mapping tables, simply create a new relational mapping table to meet your needs. You know all this! Let your system take off!

Slicing of mod database

The name of mod shard is just like Mod Squad, but in fact it is completely different.

Some business objects need to be queried and accessed through non-ID (non-ID) methods. (Translation: This ID refers to the 64-bit UUID in the previous design description) For example, if a Pinner registers and logs in to our business platform with his or her Facebook registered account. We need to map their facebook ID with our Pinner’s ID. For our system, the facebook ID is just a string of binary digits. (Annotation: It implies that we cannot deconstruct the IDs of other platforms like the design of our system platform, nor can we talk about how to design slices. We just save them and design them to map with our IDs) Therefore, we need to save them , they also need to be saved in the slice database respectively. We call this a mod shard. Other examples include IP addresses, usernames, and user emails.

模转数据切片(mod shard)类似前述我们业务系统的数据切片设计。但是,你需要按照其输入的原样进行查询。如何确定其切片位置,需要用到哈希和模数运算。哈希函数将任意字串转换成定长数值,而模数设为系统已有切片数据库的切片数量,取模后,其必然落在某个切片数据库上。结果是其数据将保存在已有切片数据库上。举例:

shard = md5(“1.2.3.4") % 4096

(译注:mod shard 这个词,我网上找遍了,试图找到一个较准确权威的中文翻译!无果,因为 mod 这个词有几种意思,最近的是 module 模块、模组,同时它也是模运算符(%)。我根据原文意思,翻译为 模转 。或可翻译为 模式,但个人感觉意思模糊。不当之处,请指正。另,原作者举的例子是以 IP 地址举例的,哈希使用的是 md5,相比其它,虽老但性能最好)

在这个例子中分片是 1524。 我们维护一个类似于 ID 分片的配置文件:

[{“range”: (0, 511), “master”: “msdb001a”, “slave”: “msdb001b”},

{“range”: (512, 1023), “master”: “msdb002a”, “slave”: “msdb002b”},

{“range”: (1024, 1535), “master”: “msdb003a”, “slave”: “msdb003b”},

…]因此,为了找到 IP 为 1.2.3.4 的数据,我们将这样做:

conn = MySQLdb.connect(host=”msdb003a”) conn.execute(“SELECT data FROM msdb001a.ip_data WHERE ip='1.2.3.4'”)

你失去了一些分片好的属性,例如空间位置。你必须从一开始就设置分片的密钥(它不会为你制作密钥)。最好使用不变的 id 来表示系统中的对象。这样,当用户更改其用户名时,您就不必更新许多引用。

最后的提醒

这个系统作为 Pinterest 的数据支撑已良好运行了 3.5 年,现在看来还会继续运行下去。设计实现这样的系统是直观、容易的。但是让它运行起来,尤其迁移旧数据却太不易了。若您的业务平台面临着急速增长的痛苦且您想切片自己的数据库。建议您考虑建立一个后端集群服务器(优先建议 pyres)脚本化您迁移旧数据到切片数据库的逻辑,自动化处理。我保证无论您想得多周到,多努力,您一定会丢数据或丢失数据之间的关联。我真的恨死隐藏在复杂数据关系中的那些捣蛋鬼。因此,您需要不断地迁移,修正,再迁移... 你需要极大的耐心和努力。直到您确定您不再需要为了旧数据迁移而往您的切片数据库中再操作数据为止。

这个系统的设计为了数据的分布切片,已尽最大的努力做到最好。它本身不能提供给你数据库事务 ACID 四要素中的 Atomicity(原子性)、Consistency(一致性)、Isolation(隔离性)哇呕!听起来很坏呀,不用担心。您可能不能利用数据库本身提供的功能很好地保证这些。但是,我提醒您,一切尽在您的掌握中,您只是让它运行起来,满足您的需要就好。设计简单直接就是王道,(译注:也许需要您做许多底层工作,但一切都在您的控制之中)主要是它运行起来超快! 如果您担心 A(原子性)、I(隔离性)和 C(一致性),写信给我,我有成堆的经验让您克服这些问题。

还有最后的问题,如何灾难恢复,啊哈? 我们创建另外的服务去维护着切片数据库,我们保存切片配置在 ZooKeeper 上。当单点主服务器宕掉时,我们有脚本自动地提升主服务器对应的从服务器立即顶上。之后,以最快的速度运行新机器顶上从服务器的缺。直至今日,我们从未使用过类似自动灾难恢复的服务。

推荐教程:《MySQL教程》

The above is the detailed content of How MySQL uses sharding to solve the storage problem of 50 billion data. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

You can open phpMyAdmin through the following steps: 1. Log in to the website control panel; 2. Find and click the phpMyAdmin icon; 3. Enter MySQL credentials; 4. Click "Login".

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL is an open source relational database management system, mainly used to store and retrieve data quickly and reliably. Its working principle includes client requests, query resolution, execution of queries and return results. Examples of usage include creating tables, inserting and querying data, and advanced features such as JOIN operations. Common errors involve SQL syntax, data types, and permissions, and optimization suggestions include the use of indexes, optimized queries, and partitioning of tables.

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

Redis uses a single threaded architecture to provide high performance, simplicity, and consistency. It utilizes I/O multiplexing, event loops, non-blocking I/O, and shared memory to improve concurrency, but with limitations of concurrency limitations, single point of failure, and unsuitable for write-intensive workloads.

MySQL's Place: Databases and Programming

Apr 13, 2025 am 12:18 AM

MySQL's Place: Databases and Programming

Apr 13, 2025 am 12:18 AM

MySQL's position in databases and programming is very important. It is an open source relational database management system that is widely used in various application scenarios. 1) MySQL provides efficient data storage, organization and retrieval functions, supporting Web, mobile and enterprise-level systems. 2) It uses a client-server architecture, supports multiple storage engines and index optimization. 3) Basic usages include creating tables and inserting data, and advanced usages involve multi-table JOINs and complex queries. 4) Frequently asked questions such as SQL syntax errors and performance issues can be debugged through the EXPLAIN command and slow query log. 5) Performance optimization methods include rational use of indexes, optimized query and use of caches. Best practices include using transactions and PreparedStatemen

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

MySQL is chosen for its performance, reliability, ease of use, and community support. 1.MySQL provides efficient data storage and retrieval functions, supporting multiple data types and advanced query operations. 2. Adopt client-server architecture and multiple storage engines to support transaction and query optimization. 3. Easy to use, supports a variety of operating systems and programming languages. 4. Have strong community support and provide rich resources and solutions.

Monitor Redis Droplet with Redis Exporter Service

Apr 10, 2025 pm 01:36 PM

Monitor Redis Droplet with Redis Exporter Service

Apr 10, 2025 pm 01:36 PM

Effective monitoring of Redis databases is critical to maintaining optimal performance, identifying potential bottlenecks, and ensuring overall system reliability. Redis Exporter Service is a powerful utility designed to monitor Redis databases using Prometheus. This tutorial will guide you through the complete setup and configuration of Redis Exporter Service, ensuring you seamlessly build monitoring solutions. By studying this tutorial, you will achieve fully operational monitoring settings

How to view sql database error

Apr 10, 2025 pm 12:09 PM

How to view sql database error

Apr 10, 2025 pm 12:09 PM

The methods for viewing SQL database errors are: 1. View error messages directly; 2. Use SHOW ERRORS and SHOW WARNINGS commands; 3. Access the error log; 4. Use error codes to find the cause of the error; 5. Check the database connection and query syntax; 6. Use debugging tools.

How to connect to the database of apache

Apr 13, 2025 pm 01:03 PM

How to connect to the database of apache

Apr 13, 2025 pm 01:03 PM

Apache connects to a database requires the following steps: Install the database driver. Configure the web.xml file to create a connection pool. Create a JDBC data source and specify the connection settings. Use the JDBC API to access the database from Java code, including getting connections, creating statements, binding parameters, executing queries or updates, and processing results.