The most efficient Python crawler framework in history (recommended)

Web crawlers (also known as web spiders or web robots, and in the FOAF community more often as web page chasers) are programs or scripts that automatically fetch information from the World Wide Web according to a set of rules. Let's look at some Python frameworks and libraries for building them.
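To make "automatically follows certain rules" concrete, here is a minimal sketch of the core crawl loop using only the standard library. The `FAKE_SITE` dictionary, the `example.test` URLs, and the `crawl` and `LinkExtractor` names are assumptions made up for illustration; a real crawler would fetch pages over HTTP and respect robots.txt and rate limits.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page URL.
                    self.links.append(urljoin(self.base_url, value))


# Hypothetical in-memory "website" so the sketch runs without network access.
FAKE_SITE = {
    "http://example.test/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.test/a": '<a href="/b">B</a> <a href="/">home</a>',
    "http://example.test/b": '<a href="/c">C</a>',
    "http://example.test/c": "",
}


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl: fetch a page, extract links, queue unseen ones."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched
            continue
        visited.append(url)
        parser = LinkExtractor(url)
        parser.feed(html)
        for link in parser.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


order = crawl("http://example.test/", FAKE_SITE.get)
print(order)
```

The frameworks below handle this loop for you, along with scheduling, retries, and storage.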

1.Scrapy

Scrapy is an application framework for crawling websites and extracting structured data. It can be used in a range of applications, including data mining, information processing, and archiving historical data. With this framework you can easily crawl data such as Amazon product information.

Project address: https://scrapy.org/

2.PySpider

pyspider is a powerful web crawler system implemented in Python. It lets you write scripts, schedule tasks, and view crawl results in real time from a browser interface. The backend stores crawl results in commonly used databases, and you can also set up scheduled tasks, task priorities, and more.

Project address: https://github.com/binux/pyspider

3.Crawley

Crawley can crawl website content at high speed, supports both relational and non-relational databases, and can export data to JSON, XML, and other formats.

Project address: http://project.crawley-cloud.com/

4.Portia

Portia is an open source visual crawler tool that allows you to crawl websites without any programming knowledge! Simply annotate the pages that interest you and Portia will create a spider to extract data from similar pages.

Project address: https://github.com/scrapinghub/portia

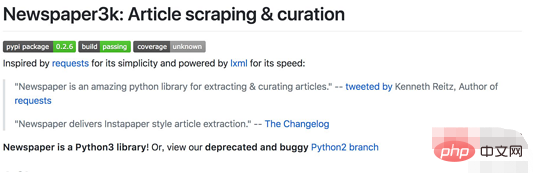

5.Newspaper

Newspaper can be used to extract news and articles and to analyze their content. It uses multi-threading and supports more than 10 languages.

Project address: https://github.com/codelucas/newspaper

6.Beautiful Soup

Beautiful Soup is a Python library for extracting data from HTML or XML files. It works with your favorite parser to provide idiomatic ways of navigating, searching, and modifying the document. Beautiful Soup can save you hours or even days of work.

Project address: https://www.crummy.com/software/BeautifulSoup/bs4/doc/
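The navigation, search, and modification that Beautiful Soup offers can be sketched as follows. The HTML snippet is made up for illustration, and the built-in `html.parser` is used here only so that no extra parser needs to be installed.

```python
from bs4 import BeautifulSoup

# Made-up sample document for illustration.
HTML = """
<html><head><title>Sample page</title></head>
<body>
  <h1 id="headline">Crawler frameworks</h1>
  <ul>
    <li><a href="https://scrapy.org/">Scrapy</a></li>
    <li><a href="https://github.com/binux/pyspider">pyspider</a></li>
  </ul>
</body></html>
"""

soup = BeautifulSoup(HTML, "html.parser")

# Navigation: walk the tree by tag name.
title = soup.title.string

# Search: find every <a> tag and read its text and href attribute.
links = [(a.get_text(), a["href"]) for a in soup.find_all("a")]

# Modification: replace the text of the element with id="headline".
soup.find(id="headline").string = "Python crawler frameworks"
headline = soup.h1.get_text()

print(title)
print(links)
print(headline)
```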

7.Grab

Grab is a Python framework for building web scrapers. With Grab, you can build web scrapers of varying complexity, from simple 5-line scripts to complex asynchronous website scrapers that handle millions of web pages. Grab provides an API for performing network requests and processing received content, such as interacting with the DOM tree of an HTML document.

Project address: http://docs.grablib.org/en/latest/#grab-spider-user-manual

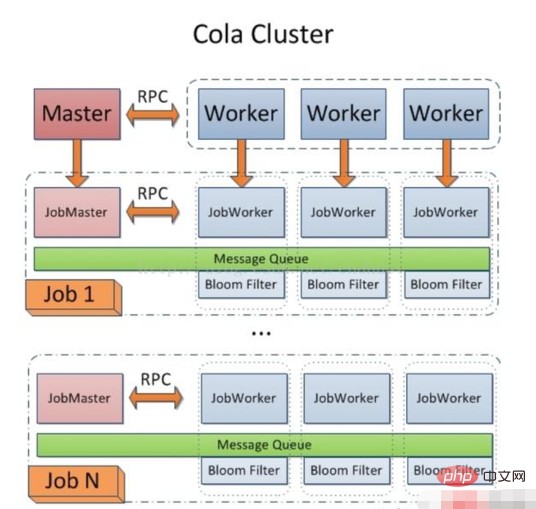

8.Cola

Cola is a distributed crawler framework. Users only need to write a few specific functions and do not have to deal with the details of distributed operation. Tasks are automatically distributed across multiple machines, and the entire process is transparent to the user.

Project address: https://github.com/chineking/cola

Thank you for reading. I hope you find this useful.

Reprinted from: https://www.toutiao.com/i6560240315519730190/
