Big data operation and maintenance

1. HDFS distributed file system operation and maintenance

1. In the root directory of the HDFS file system, recursively create the directory "1daoyun/file", upload the attached BigDataSkills.txt file to that directory, and use the relevant command to list the files in 1daoyun/file.

hadoop fs -mkdir -p /1daoyun/file

hadoop fs -put BigDataSkills.txt /1daoyun/file

hadoop fs -ls /1daoyun/file
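The three commands for this task can be chained so that a failed step stops the sequence. The following is a minimal sketch rather than part of the original answer: the function name `hdfs_upload` is hypothetical, and it assumes the `hadoop` client is on the PATH.

```shell
#!/usr/bin/env bash
# hdfs_upload is a hypothetical helper, not a Hadoop command.
# Usage: hdfs_upload <local-file> <hdfs-directory>
hdfs_upload() {
  local src="$1" dest_dir="$2"
  # -p creates missing parent directories and does not fail if the directory exists.
  hadoop fs -mkdir -p "$dest_dir" || return 1
  # -put fails if the target file already exists; add -f to overwrite.
  hadoop fs -put "$src" "$dest_dir" || return 1
  # List the directory to confirm the upload.
  hadoop fs -ls "$dest_dir"
}
```

For this task the call would be `hdfs_upload BigDataSkills.txt /1daoyun/file`.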

2. In the root directory of the HDFS file system, recursively create the directory "1daoyun/file", upload the attached BigDataSkills.txt file to that directory, and use the HDFS file system check tool to verify whether the file is damaged.

hadoop fs -mkdir -p /1daoyun/file

hadoop fs -put BigDataSkills.txt /1daoyun/file

hadoop fsck /1daoyun/file/BigDataSkills.txt
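The fsck report is long, so a script usually only needs the final verdict. The sketch below assumes that the report contains a status line of the form "The filesystem under path '...' is HEALTHY" (or "is CORRUPT"), as stock Hadoop prints; check this against the output of your own version. The function name `fsck_is_healthy` is hypothetical.

```shell
#!/usr/bin/env bash
# fsck_is_healthy is a hypothetical helper: it reads fsck output on stdin
# and succeeds only if the report contains the HEALTHY status line.
fsck_is_healthy() {
  grep -q "is HEALTHY"
}
```

A possible use is `hadoop fsck /1daoyun/file/BigDataSkills.txt | fsck_is_healthy && echo "file is intact"`.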

3.

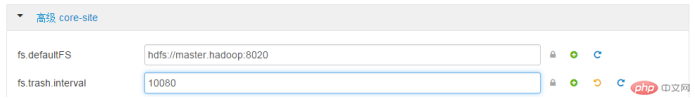

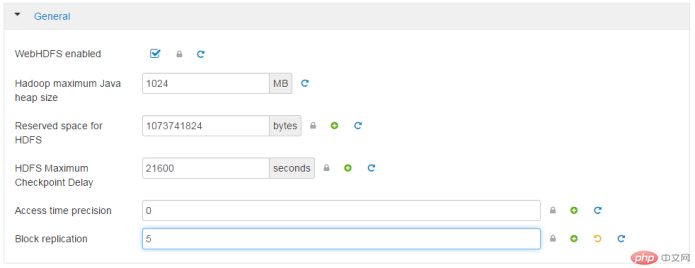

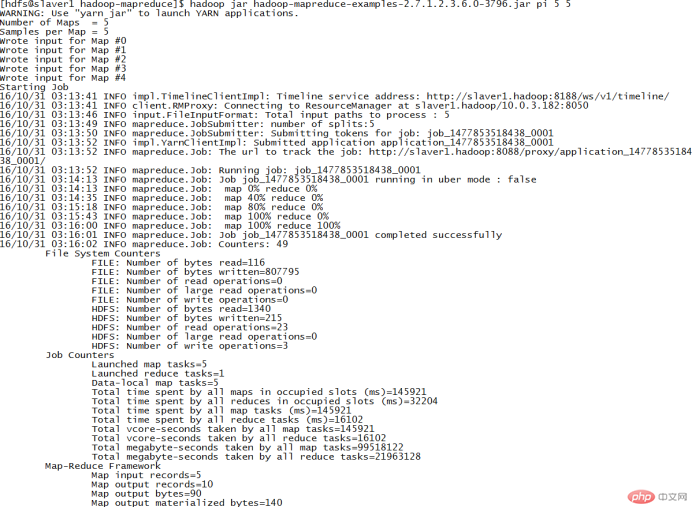

at HDFS Create a recursive directory in the root directory of the file system "1daoyun/file", and add in the attachment BigDataSkills.txt file, upload to the 1daoyun/file directory, specify BigDataSkills.txt # during the upload process The ## file has a replication factor of #HDFS file system of 2 and uses fsck ToolTool checks the number of copies of storage blocks. hadoop fs -mkdir -p /1daoyun/file ##hadoop fs -D dfs.replication=2 -put BigDataSkills.txt /1daoyun/file hadoop fsck /1daoyun/file/BigDataSkills.txt 4.HDFS There is one in the root directory of the file system /apps file directory, it is required to enable the snapshot creation function of the directory and create a snapshot for the directory file , the snapshot name is apps_1daoyun, so use related commands to view the list information of the snapshot file. hadoop dfsadmin -allowSnapshot /apps hadoop fs -createSnapshot /apps apps_1daoyun hadoop fs -ls /apps/.snapshot 5.when Hadoop When the cluster starts, it will first enter the safe mode state, which will exit after 30 seconds by default. When the system is in safe mode, the HDFS file system can only be read, and cannot be written, modified, deleted, etc. It is assumed that the Hadoop cluster needs to be maintained. It is necessary to put the cluster into safe mode and check its status. hdfs dfsadmin -safemode enter 6. In order to prevent operators from accidentally deleting files, HDFS The file system provides the recycle bin function, but Many junk files will take up a lot of storage space. It is required that the WEB interface of the Xiandian big data platform completely delete the files in the HDFS file system recycle bin The time interval is 7 days. Advancedcore-sitefs.trash.interval: 10080 7.In order to prevent operators from accidentally deleting files, the HDFS file system provides a recycle bin function, but too many junk files will take up a lot of storage space. 
Use the "vi" command in a Linux shell to modify the corresponding configuration file and parameter so that the recycle bin function is turned off. After completion, restart the corresponding service.

Advanced core-site, fs.trash.interval: 0

vi /etc/hadoop/2.4.3.0-227/0/core-site.xml

In core-site.xml, set the value of the fs.trash.interval property to 0.

sbin/stop-dfs.sh

sbin/start-dfs.sh

8. The hosts in a Hadoop cluster may go down or suffer system damage under certain circumstances. Once this happens, the data files in the HDFS file system will inevitably be damaged or lost. To ensure the reliability of the HDFS file system, change the redundancy replication factor of the cluster to 5 in the web interface of the Xiandian big data platform.

General, Block replication: 5

9. The hosts in a Hadoop cluster may go down or suffer system damage under certain circumstances. Once this happens, the data files in the HDFS file system will inevitably be damaged or lost. To ensure the reliability of the HDFS file system, the redundancy replication factor of the cluster needs to be changed to 5. Use the "vi" command in a Linux shell to modify the corresponding configuration file and parameter. After completion, restart the corresponding service.

vi /etc/hadoop/2.4.3.0-227/0/hdfs-site.xml

In hdfs-site.xml, set the value of the dfs.replication property to 5.

/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf stop {namenode/datanode}

/usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start {namenode/datanode}

10. Use a command to view the number of directories, the number of files, and the total file size under the /tmp directory of the HDFS file system.

hadoop fs -count /tmp

2. MapReduce case questions

1. On the cluster node, the directory /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ contains the example JAR package hadoop-mapreduce-examples.jar.
Run the pi program in the JAR package to calculate an approximate value of π, using 5 map tasks with 5 throws (samples) per map task.

cd /usr/hdp/2.4.3.0-227/hadoop-mapreduce/

hadoop jar hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar pi 5 5

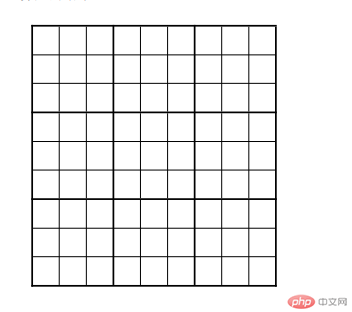
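With 5 map tasks and 5 throws each, the job samples only 5 × 5 = 25 points, so the estimate is coarse. The job prints a lot of log output around the result; the sketch below pulls out just the number. It assumes the result line begins with "Estimated value of Pi is", as the stock example prints, and the function name `pi_estimate` is hypothetical.

```shell
#!/usr/bin/env bash
# pi_estimate is a hypothetical helper: it reads the job's console output on
# stdin and prints only the number that follows "Estimated value of Pi is".
pi_estimate() {
  sed -n 's/^Estimated value of Pi is //p'
}
```

A possible use is `hadoop jar hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar pi 5 5 | pi_estimate`.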

2. On the cluster node, the directory /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ contains the example JAR package hadoop-mapreduce-examples.jar. Run the wordcount program in the JAR package to count the words in the /1daoyun/file/BigDataSkills.txt file, write the results to the /1daoyun/output directory, and use the relevant command to query the word count results.

hadoop jar /usr/hdp/2.4.3.0-227/hadoop-mapreduce/hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar wordcount /1daoyun/file/BigDataSkills.txt /1daoyun/output

3. On the cluster node, the directory /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ contains the example JAR package hadoop-mapreduce-examples.jar. Run the sudoku program in the JAR package to solve the given Sudoku puzzle.

cat puzzle1.dta

4. On the cluster node, the directory /usr/hdp/2.4.3.0-227/hadoop-mapreduce/ contains the example JAR package hadoop-mapreduce-examples.jar. Run the grep program in the JAR package to count the number of times "Hadoop" appears in the /1daoyun/file/BigDataSkills.txt file in the file system. After the job completes, query the result.

hadoop jar hadoop-mapreduce-examples-2.7.1.2.4.3.0-227.jar grep /1daoyun/file/BigDataSkills.txt /output hadoop
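Both the wordcount and grep tasks ask for the results to be queried, but no query command is listed. One way to do it is to print the reducer output files from the job's output directory. This is a sketch under the assumption that the output files follow the usual part-r-NNNNN naming; the function name `show_job_output` is hypothetical.

```shell
#!/usr/bin/env bash
# show_job_output is a hypothetical helper, not a Hadoop command.
# It prints every reducer output file (part-r-00000, part-r-00001, ...)
# found in the given HDFS output directory.
show_job_output() {
  local out_dir="$1"
  # The glob is quoted so that HDFS, not the local shell, expands it.
  hadoop fs -cat "${out_dir%/}/part-r-*"
}
```

For the tasks above this would be `show_job_output /1daoyun/output` for wordcount and `show_job_output /output` for grep.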
