How to configure PHP to block website crawlers

To block crawlers in PHP: first read the UA information from $_SERVER['HTTP_USER_AGENT']; then store the malicious USER_AGENT strings in an array; finally reject requests whose USER_AGENT is empty (as sent by mainstream collection programs) or matches an entry in the array.


There are many crawlers on the Internet. Some help a website get indexed, such as Baidu Spider, but others are useless: they ignore robots rules, put pressure on the server, and bring no traffic to the site, such as Yisou Spider. (Update: Yisou Spider has been acquired by UC Shenma Search, so this article no longer bans it.) Recently, Zhang Ge found a large number of crawl records from Yisou and other junk spiders in the nginx log, so he collected and organized the various methods found online for blocking junk spiders. They were used to configure his own website and are offered here as a reference for other webmasters.

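1. Apache

①. By modifying the .htaccess file

Modify the .htaccess file in the website directory and add the blocking rules there (two variants of the code exist; either one can be used).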

2. Nginx code

Go to the conf directory under the nginx installation directory and save the following code as agent_deny.conf:

cd /usr/local/nginx/conf

vim agent_deny.conf

# Block scraping by tools such as Scrapy
if ($http_user_agent ~* (Scrapy|Curl|HttpClient)) {
    return 403;
}

# Block the listed UAs and requests with an empty UA
if ($http_user_agent ~* "FeedDemon|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|HttpClient|MJ12bot|heritrix|EasouSpider|Ezooms|^$" ) {
    return 403;
}

# Block request methods other than GET, HEAD and POST
if ($request_method !~ ^(GET|HEAD|POST)$) {
    return 403;
}

Then, insert the following line after location / { in the website's configuration:

include agent_deny.conf;

For example, the configuration of Zhang Ge's blog:

[marsge@Mars_Server ~]$ cat /usr/local/nginx/conf/zhangge.conf

location / {
    try_files $uri $uri/ /index.php?$args;
    # Add one line at this position:
    include agent_deny.conf;
    rewrite ^/sitemap_360_sp.txt$ /sitemap_360_sp.php last;
    rewrite ^/sitemap_baidu_sp.xml$ /sitemap_baidu_sp.php last;
    rewrite ^/sitemap_m.xml$ /sitemap_m.php last;

After saving, run the following command to reload nginx gracefully:

/usr/local/nginx/sbin/nginx -s reload

3. PHP code

Place the following code after the first opening <?php tag of the site's entry script:

// Get the UA information (empty string if the header is missing)
$ua = $_SERVER['HTTP_USER_AGENT'] ?? '';

// Store the malicious USER_AGENT strings in an array
$now_ua = array('FeedDemon','BOT/0.1 (BOT for JCE)','CrawlDaddy','Java','Feedly','UniversalFeedParser','ApacheBench','Swiftbot','ZmEu','Indy Library','oBot','jaunty','YandexBot','AhrefsBot','MJ12bot','WinHttp','EasouSpider','HttpClient','Microsoft URL Control','YYSpider','Python-urllib','lightDeckReports Bot');

// Reject an empty USER_AGENT: dedecms and other mainstream collection programs
// send an empty USER_AGENT, as do some SQL injection tools
if (!$ua) {
    header("Content-type: text/html; charset=utf-8");
    die('Please do not scrape this site!');
} else {
    foreach ($now_ua as $value) {
        // Check whether the UA contains an entry from the array.
        // eregi() was removed in PHP 7, so use a case-insensitive substring search.
        if (stripos($ua, $value) !== false) {
            header("Content-type: text/html; charset=utf-8");
            die('Please do not scrape this site!');
        }
    }
}

4. Testing the result

On a VPS this is very simple: use curl -A to simulate a crawl, for example:

Simulate Yisou Spider crawling:

curl -I -A 'YisouSpider' zhang.ge

Simulate crawling when UA is empty:

curl -I -A '' zhang.ge

Simulate the crawling of Baidu Spider:

curl -I -A 'Baiduspider' zhang.ge

The screenshots of the three crawl results are as follows:

As can be seen, Yisou Spider and the empty UA both receive a 403 Forbidden response, while Baidu Spider successfully receives 200, which shows that the rules are working.
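The same three cases can also be checked without a live server. The following is a minimal sketch rather than the author's code: the function name is_blocked_user_agent and the shortened block list are hypothetical, and YisouSpider is listed only so that the sketch mirrors the curl test. It maps a blocked UA to 403 and anything else to 200, purely for illustration.

```php
<?php
// Hypothetical helper: true when the UA is empty or contains a blocked substring.
function is_blocked_user_agent(string $ua, array $blocked): bool
{
    // Treat a missing or whitespace-only UA as empty.
    if (trim($ua) === '') {
        return true;
    }
    foreach ($blocked as $needle) {
        // stripos() performs a case-insensitive literal substring search.
        if (stripos($ua, $needle) !== false) {
            return true;
        }
    }
    return false;
}

// Shortened, illustrative block list (an assumption, not the full list from the article).
$blocked = ['YisouSpider', 'EasouSpider', 'AhrefsBot', 'MJ12bot', 'Python-urllib'];

// The three user agents used in the curl tests.
foreach (['YisouSpider', '', 'Baiduspider'] as $ua) {
    $status = is_blocked_user_agent($ua, $blocked) ? 403 : 200;
    echo ($ua === '' ? '(empty)' : $ua), ' => ', $status, PHP_EOL;
}
```

Because the check is a plain substring match, a short entry such as Java would also block any UA that merely contains that word, so it is worth keeping entries as specific as possible.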

Supplement: the next day, the nginx log showed the effect (screenshots):

①. Scraping attempts with an empty UA were intercepted:

②. Banned UAs were intercepted:

Therefore, to deal with junk spiders, we can analyze the website's access logs to find spiders that have not been seen before. Once a spider's name has been verified, it can be added to the block list in the code above to stop it from crawling.
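One way to do this kind of log analysis is to tally the user agents that appear in the access log. The sketch below is only an illustration under stated assumptions: the function name count_user_agents is hypothetical, the sample log lines are made up, and the UA is assumed to be the last double-quoted field on each line, as in the common nginx "combined" log format.

```php
<?php
// Hypothetical helper: tally User-Agent strings from access-log lines.
// Assumes the UA is the last double-quoted field on each line.
function count_user_agents(array $lines): array
{
    $counts = [];
    foreach ($lines as $line) {
        // Capture the final quoted field; skip lines that do not end with one.
        if (preg_match('/"([^"]*)"\s*$/', $line, $m) !== 1) {
            continue;
        }
        // An empty UA is typically logged as "-".
        $ua = ($m[1] === '' || $m[1] === '-') ? '(empty)' : $m[1];
        $counts[$ua] = ($counts[$ua] ?? 0) + 1;
    }
    arsort($counts); // most frequent first, keys preserved
    return $counts;
}

// Made-up sample lines for illustration only.
$sample = [
    '203.0.113.5 - - [01/Jan/2015:00:00:01 +0800] "GET / HTTP/1.1" 403 162 "-" "EasouSpider"',
    '203.0.113.5 - - [01/Jan/2015:00:00:02 +0800] "GET /a HTTP/1.1" 403 162 "-" "EasouSpider"',
    '198.51.100.7 - - [01/Jan/2015:00:00:03 +0800] "GET / HTTP/1.1" 403 162 "-" "-"',
];

foreach (count_user_agents($sample) as $ua => $n) {
    echo $n, "\t", $ua, PHP_EOL;
}
```

For a real log, the lines could be loaded with file() and the FILE_IGNORE_NEW_LINES flag. Names that appear often and do not belong to a search engine you care about are the candidates to verify before adding them to the list.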

5. Appendix: UA Collection

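The following is a list of common junk UAs seen on the Internet. It is for reference only, and additions are welcome.

FeedDemon: content scraping
BOT/0.1 (BOT for JCE): SQL injection
CrawlDaddy: SQL injection
Java: content scraping
Jullo: content scraping
Feedly: content scraping
UniversalFeedParser: content scraping
ApacheBench: CC attack tool
Swiftbot: useless crawler
YandexBot: useless crawler
AhrefsBot: useless crawler
YisouSpider: useless crawler (acquired by UC Shenma Search; this spider can now be allowed)
MJ12bot: useless crawler
ZmEu: phpMyAdmin vulnerability scanning
WinHttp: scraping, CC attack
EasouSpider: useless crawler
HttpClient: TCP attack
Microsoft URL Control: scanning
YYSpider: useless crawler
jaunty: WordPress brute-force scanner
oBot: useless crawler
Python-urllib: content scraping
Indy Library: scanning
FlightDeckReports Bot: useless crawler
Linguee Bot: useless crawler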

The above is the detailed content of How to set php to prohibit crawling websites. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

If you are an experienced PHP developer, you might have the feeling that you’ve been there and done that already.You have developed a significant number of applications, debugged millions of lines of code, and tweaked a bunch of scripts to achieve op

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

JWT is an open standard based on JSON, used to securely transmit information between parties, mainly for identity authentication and information exchange. 1. JWT consists of three parts: Header, Payload and Signature. 2. The working principle of JWT includes three steps: generating JWT, verifying JWT and parsing Payload. 3. When using JWT for authentication in PHP, JWT can be generated and verified, and user role and permission information can be included in advanced usage. 4. Common errors include signature verification failure, token expiration, and payload oversized. Debugging skills include using debugging tools and logging. 5. Performance optimization and best practices include using appropriate signature algorithms, setting validity periods reasonably,

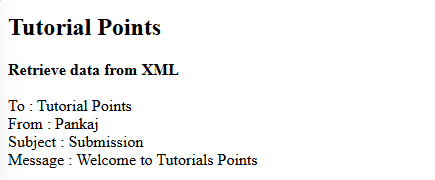

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

A string is a sequence of characters, including letters, numbers, and symbols. This tutorial will learn how to calculate the number of vowels in a given string in PHP using different methods. The vowels in English are a, e, i, o, u, and they can be uppercase or lowercase. What is a vowel? Vowels are alphabetic characters that represent a specific pronunciation. There are five vowels in English, including uppercase and lowercase: a, e, i, o, u Example 1 Input: String = "Tutorialspoint" Output: 6 explain The vowels in the string "Tutorialspoint" are u, o, i, a, o, i. There are 6 yuan in total

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Static binding (static::) implements late static binding (LSB) in PHP, allowing calling classes to be referenced in static contexts rather than defining classes. 1) The parsing process is performed at runtime, 2) Look up the call class in the inheritance relationship, 3) It may bring performance overhead.

What are PHP magic methods (__construct, __destruct, __call, __get, __set, etc.) and provide use cases?

Apr 03, 2025 am 12:03 AM

What are PHP magic methods (__construct, __destruct, __call, __get, __set, etc.) and provide use cases?

Apr 03, 2025 am 12:03 AM

What are the magic methods of PHP? PHP's magic methods include: 1.\_\_construct, used to initialize objects; 2.\_\_destruct, used to clean up resources; 3.\_\_call, handle non-existent method calls; 4.\_\_get, implement dynamic attribute access; 5.\_\_set, implement dynamic attribute settings. These methods are automatically called in certain situations, improving code flexibility and efficiency.