What does computer word length mean?

The word length of a computer is the number of binary digits (bits) that the CPU processes as a single unit at one time. It is a major indicator of the computer's performance and determines its computing precision: the longer the word length, the higher the precision.

Word length is easiest to understand alongside the basic units of information:

1. Bit (abbreviated as a lowercase b): a single binary digit, "0" or "1". It is the smallest unit a computer uses to represent information.

2. Byte (abbreviated as an uppercase B): 8 bits of binary information make up one byte. Typical sizes:

An English letter occupies one byte (in ASCII).

A Chinese character occupies two bytes (in encodings such as GB2312 or GBK).

An integer occupies two bytes (a 16-bit integer; many systems use four bytes).

A real number occupies four bytes (a single-precision floating-point number).

3. Word length: the total number of binary digits in a word.

In computing, a "word" is a group of binary digits that the computer processes in parallel as a single unit, and the number of bits in that group is called the word length. A word can hold a computer instruction or a piece of data. For example, if a computer system handles instructions and data in units of 32 bits, its word length is said to be 32 bits.
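The sizes listed above can be checked with a short Python sketch. It is only an illustration under stated assumptions: the function names `byte_sizes` and `pointer_width_bits` are hypothetical, GBK and UTF-8 are chosen as example encodings, and the native pointer width is used as a common proxy for word length rather than a formal definition of it.

```python
import struct


def byte_sizes():
    """Return the storage size in bytes of the example items from the list."""
    chinese_char = "\u4e2d"  # the character "zhong" (middle), used as a sample
    return {
        "english_letter_ascii": len("A".encode("ascii")),
        "chinese_char_gbk": len(chinese_char.encode("gbk")),
        "chinese_char_utf8": len(chinese_char.encode("utf-8")),
        "int16": struct.calcsize("<h"),    # 16-bit integer
        "float32": struct.calcsize("<f"),  # single-precision real number
    }


def pointer_width_bits():
    """Width of a native pointer in bits (assumed proxy for word length)."""
    return struct.calcsize("P") * 8


if __name__ == "__main__":
    for name, size in byte_sizes().items():
        print(f"{name}: {size} byte(s)")
    print(f"pointer width: {pointer_width_bits()} bits")
```

Note that the same Chinese character takes two bytes in GBK but three in UTF-8, so the "two bytes" figure depends on the encoding in use.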

Word length is therefore a major indicator of computer performance. It determines the computing precision: the longer the word length, the larger the range of values and the higher the precision the computer can handle in a single operation. Microcomputers generally have a word length of 8, 16, 32, or 64 bits.
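To make the link between word length and precision concrete, the following Python sketch computes the range of integers that fits in one word for each of the common word lengths. The helper names (`unsigned_max`, `signed_range`, `add_wrapped`) are hypothetical, and two's-complement representation is assumed for the signed range.

```python
def unsigned_max(bits):
    """Largest unsigned integer that fits in a word of `bits` bits."""
    return (1 << bits) - 1


def signed_range(bits):
    """(min, max) of a two's-complement signed integer of `bits` bits."""
    half = 1 << (bits - 1)
    return -half, half - 1


def add_wrapped(a, b, bits):
    """Add two unsigned values as a `bits`-wide register would, dropping overflow."""
    return (a + b) & unsigned_max(bits)


if __name__ == "__main__":
    for bits in (8, 16, 32, 64):
        low, high = signed_range(bits)
        print(f"{bits:2d}-bit: unsigned 0..{unsigned_max(bits)}, signed {low}..{high}")
    # 200 + 100 overflows an 8-bit word but fits in a 16-bit word
    print(add_wrapped(200, 100, 8), add_wrapped(200, 100, 16))
```

With an 8-bit word the sum 200 + 100 wraps around because 300 does not fit in 8 bits, while a 16-bit word holds it exactly. A machine with a short word can still handle larger values, but it needs several operations to do so.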

The above is the detailed content of What does computer word length mean?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

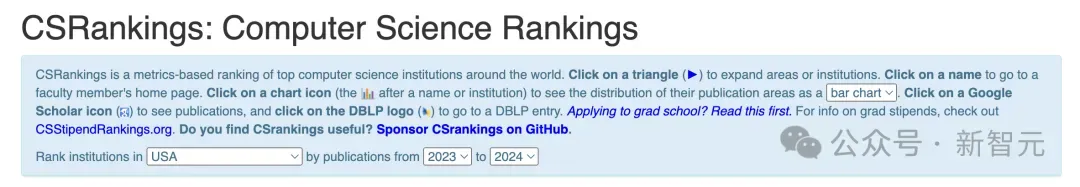

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

The 2024CSRankings National Computer Science Major Rankings have just been released! This year, in the ranking of the best CS universities in the United States, Carnegie Mellon University (CMU) ranks among the best in the country and in the field of CS, while the University of Illinois at Urbana-Champaign (UIUC) has been ranked second for six consecutive years. Georgia Tech ranked third. Then, Stanford University, University of California at San Diego, University of Michigan, and University of Washington tied for fourth place in the world. It is worth noting that MIT's ranking fell and fell out of the top five. CSRankings is a global university ranking project in the field of computer science initiated by Professor Emery Berger of the School of Computer and Information Sciences at the University of Massachusetts Amherst. The ranking is based on objective

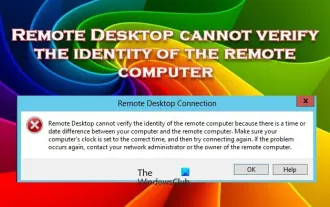

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Windows Remote Desktop Service allows users to access computers remotely, which is very convenient for people who need to work remotely. However, problems can be encountered when users cannot connect to the remote computer or when Remote Desktop cannot authenticate the computer's identity. This may be caused by network connection issues or certificate verification failure. In this case, the user may need to check the network connection, ensure that the remote computer is online, and try to reconnect. Also, ensuring that the remote computer's authentication options are configured correctly is key to resolving the issue. Such problems with Windows Remote Desktop Services can usually be resolved by carefully checking and adjusting settings. Remote Desktop cannot verify the identity of the remote computer due to a time or date difference. Please make sure your calculations

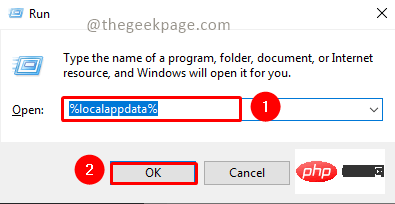

Fix: Microsoft Teams error code 80090016 Your computer's Trusted Platform module has failed

Apr 19, 2023 pm 09:28 PM

Fix: Microsoft Teams error code 80090016 Your computer's Trusted Platform module has failed

Apr 19, 2023 pm 09:28 PM

<p>MSTeams is the trusted platform to communicate, chat or call with teammates and colleagues. Error code 80090016 on MSTeams and the message <strong>Your computer's Trusted Platform Module has failed</strong> may cause difficulty logging in. The app will not allow you to log in until the error code is resolved. If you encounter such messages while opening MS Teams or any other Microsoft application, then this article can guide you to resolve the issue. </p><h2&

What is e in computer

Aug 31, 2023 am 09:36 AM

What is e in computer

Aug 31, 2023 am 09:36 AM

The "e" of computer is the scientific notation symbol. The letter "e" is used as the exponent separator in scientific notation, which means "multiplied to the power of 10". In scientific notation, a number is usually written as M × 10^E, where M is a number between 1 and 10 and E represents the exponent.

What does computer cu mean?

Aug 15, 2023 am 09:58 AM

What does computer cu mean?

Aug 15, 2023 am 09:58 AM

The meaning of cu in a computer depends on the context: 1. Control Unit, in the central processor of a computer, CU is the component responsible for coordinating and controlling the entire computing process; 2. Compute Unit, in a graphics processor or other accelerated processor, CU is the basic unit for processing parallel computing tasks.

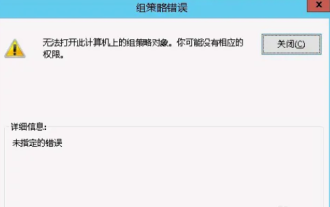

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Occasionally, the operating system may malfunction when using a computer. The problem I encountered today was that when accessing gpedit.msc, the system prompted that the Group Policy object could not be opened because the correct permissions may be lacking. The Group Policy object on this computer could not be opened. Solution: 1. When accessing gpedit.msc, the system prompts that the Group Policy object on this computer cannot be opened because of lack of permissions. Details: The system cannot locate the path specified. 2. After the user clicks the close button, the following error window pops up. 3. Check the log records immediately and combine the recorded information to find that the problem lies in the C:\Windows\System32\GroupPolicy\Machine\registry.pol file

What should I do if steam cannot connect to the remote computer?

Mar 01, 2023 pm 02:20 PM

What should I do if steam cannot connect to the remote computer?

Mar 01, 2023 pm 02:20 PM

Solution to the problem that steam cannot connect to the remote computer: 1. In the game platform, click the "steam" option in the upper left corner; 2. Open the menu and select the "Settings" option; 3. Select the "Remote Play" option; 4. Check Activate the "Remote Play" function and click the "OK" button.

Unable to copy data from remote desktop to local computer

Feb 19, 2024 pm 04:12 PM

Unable to copy data from remote desktop to local computer

Feb 19, 2024 pm 04:12 PM

If you have problems copying data from a remote desktop to your local computer, this article can help you resolve it. Remote desktop technology allows multiple users to access virtual desktops on a central server, providing data protection and application management. This helps ensure data security and enables companies to manage their applications more efficiently. Users may face challenges while using Remote Desktop, one of which is the inability to copy data from the Remote Desktop to the local computer. This may be caused by different factors. Therefore, this article will provide guidance on resolving this issue. Why can't I copy from the remote desktop to my local computer? When you copy a file on your computer, it is temporarily stored in a location called the clipboard. If you cannot use this method to copy data from the remote desktop to your local computer