Why does Redis6.0 introduce multi-threading?

The following column will introduce to you why Redis6.0 introduces multi-threading in the Redis Tutorial column? , I hope it will be helpful to friends in need!

About the author: He once worked at Alibaba, Daily Fresh and other Internet companies as technical director. 15 years of e-commerce Internet experience.

One hundred days ago, Redis author antirez released a piece of news on his blog (antirez.com), and Redis 6.0 was officially released. One of the most eye-catching changes is that Redis 6.0 introduces multi-threading.

This article is mainly divided into two parts. First, let’s talk about why Redis adopted the single-threaded model before 6.0. Then I will explain the multi-threading of Redis6.0 in detail.

Why did Redis use a single-threaded model before 6.0?

Strictly speaking, after Redis 4.0 it is not a single-threaded model. thread. In addition to the main thread, there are also some background threads that handle some slower operations, such as the release of useless connections, the deletion of large keys, etc.

Single-threaded model, why is the performance so high?

The author of Redis has considered many aspects from the beginning of the design. Ultimately it was chosen to use a single-threaded model for command processing. There are several important reasons why we choose the single-threaded model:

Redis operations are based on memory, and the performance bottleneck of most operations is not in the CPU

-

Single-threaded model avoids the performance overhead caused by switching between threads

Using the single-threaded model can also process client requests concurrently (multiplexed I/O)

Using a single-threaded model has higher maintainability and lower development, debugging and maintenance costs

The third reason above is The decisive factor for Redis to finally adopt the single-threaded model. The other two reasons are the additional benefits of using the single-threaded model. Here we will introduce the above reasons in order.

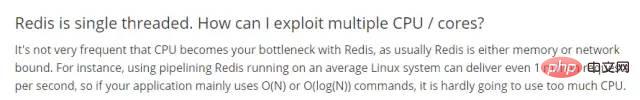

The performance bottleneck is not in the CPU

The following figure is the description of the single-thread model from the Redis official website. The general meaning is: the bottleneck of Redis is not the CPU, its main bottleneck is the memory and network. In a Linux environment, Redis can even submit 1 million requests per second.

#Why is it said that the bottleneck of Redis is not the CPU?

First of all, most operations of Redis are based on memory and are pure kv (key-value) operations, so the command execution speed is very fast. We can roughly understand that the data in redis is stored in a large HashMap. The advantage of HashMap is that the time complexity of searching and writing is O(1). Redis uses this structure to store data internally, which lays the foundation for Redis's high performance. According to the description on the Redis official website, under ideal circumstances Redis can submit one million requests per second, and the time required to submit each request is on the order of nanoseconds. Since every Redis operation is so fast and can be completely handled by a single thread, why bother using multi-threads!

Thread context switching problem

In addition, thread context switching will occur in multi-thread scenarios. Threads are scheduled by the CPU. One core of the CPU can only execute one thread at the same time within a time slice. A series of operations will occur when the CPU switches from thread A to thread B. The main process includes saving the execution of thread A. scene, and then load the execution scene of thread B. This process is "thread context switching". This involves saving and restoring thread-related instructions.

Frequent thread context switching may cause a sharp decline in performance, which will result in us not only failing to improve the speed of processing requests, but also reducing performance. This is one of the reasons why Redis is cautious about multi-threading technology.

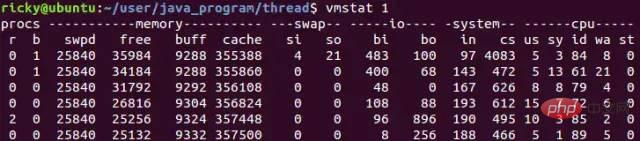

In Linux systems, you can use the vmstat command to check the number of context switches. The following is an example of using vmstat to check the number of context switches:

vmstat 1 means counting once per second, where cs The column refers to the number of context switches. Generally, the context switches of an idle system are less than 1500 per second.

vmstat 1 means counting once per second, where cs The column refers to the number of context switches. Generally, the context switches of an idle system are less than 1500 per second.

Processing client requests in parallel (I/O multiplexing)

As mentioned above: the bottleneck of Redis is not CPU, its main bottlenecks are memory and network. The so-called memory bottleneck is easy to understand. When Redis is used as a cache, many scenarios need to cache a large amount of data, so a large amount of memory space is required. This can be solved through cluster sharding, such as Redis's own centerless cluster sharding solution and Codis-based Cluster sharding scheme for agents.

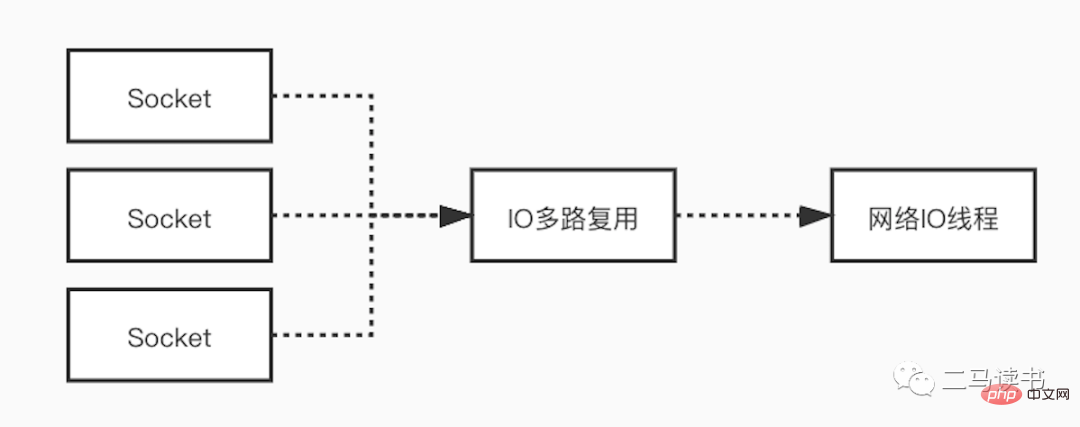

For network bottlenecks, Redis uses multiplexing technology in the network I/O model to reduce the impact of network bottlenecks. The use of a single-threaded model in many scenarios does not mean that the program cannot process tasks concurrently. Although Redis uses a single-threaded model to process user requests, it uses I/O multiplexing technology to "parallel" process multiple connections from the client, while waiting for requests sent by multiple connections. Using I/O multiplexing technology can greatly reduce system overhead. The system no longer needs to create a dedicated listening thread for each connection, avoiding the huge performance overhead caused by the creation of a large number of threads.

Let’s explain the multiplexed I/O model in detail. In order to understand it more fully, we first understand a few basic concepts.

Socket (Socket): Socket can be understood as the communication endpoints in the two applications when the two applications communicate over the network. During communication, one application writes data to the Socket, and then sends the data to the Socket of another application through the network card. The remote communication we usually call HTTP and TCP protocols is implemented based on Socket at the bottom layer. The five network IO models also all implement network communication based on Socket.

Blocking and non-blocking: The so-called blocking means that a request cannot be returned immediately and a response cannot be returned until all the logic has been processed. Non-blocking, on the contrary, sends a request and returns a response immediately without waiting for all logic to be processed.

Kernel space and user space: In Linux, the stability of application programs is far inferior to that of operating system programs. In order to ensure the stability of the operating system, Linux distinguishes between kernel space and user space. It can be understood that the kernel space runs operating system programs and drivers, and the user space runs applications. In this way, Linux isolates operating system programs and applications, preventing applications from affecting the stability of the operating system itself. This is also the main reason why Linux systems are super stable. All system resource operations are performed in the kernel space, such as reading and writing disk files, memory allocation and recycling, network interface calls, etc. Therefore, during a network IO reading process, the data is not read directly from the network card to the application buffer in user space, but is first copied from the network card to the kernel space buffer, and then copied from the kernel to the user space. application buffer. For the network IO writing process, the process is the opposite. The data is first copied from the application buffer in user space to the kernel buffer, and then the data is sent from the kernel buffer through the network card.

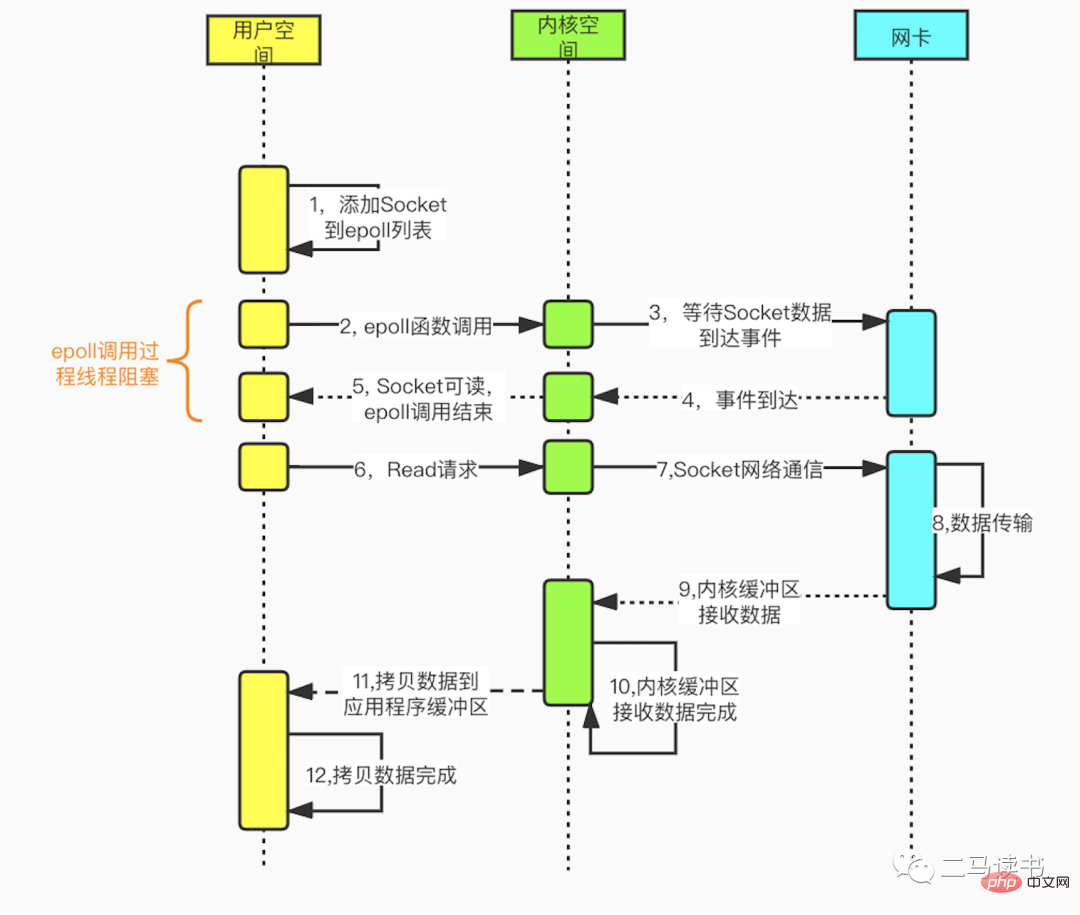

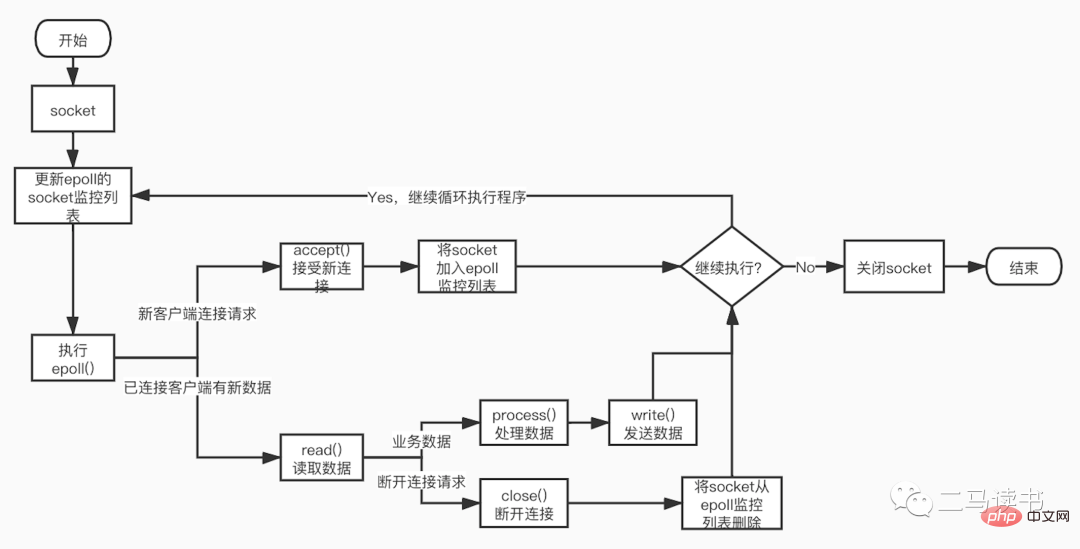

The multiplexed I/O model is built on the multi-channel event separation functions select, poll, and epoll. Taking epoll used by Redis as an example, before initiating a read request, the socket monitoring list of epoll is updated first, and then waits for the epoll function to return (this process is blocking, so multiplexed IO is essentially a blocking IO model). When data arrives from a certain socket, the epoll function returns. At this time, the user thread officially initiates a read request to read and process the data. This mode uses a dedicated monitoring thread to check multiple sockets. If data arrives in a certain socket, it is handed over to the worker thread for processing. Since the process of waiting for Socket data to arrive is very time-consuming, this method solves the problem that one Socket connection in the blocking IO model requires one thread, and there is no problem of CPU performance loss caused by busy polling in the non-blocking IO model. There are many practical application scenarios for the multiplexed IO model. The familiar Redis, Java NIO, and Netty, the communication framework used by Dubbo, all use this model.

#The following figure is the detailed process of Socket programming based on the epoll function.

Maintainability

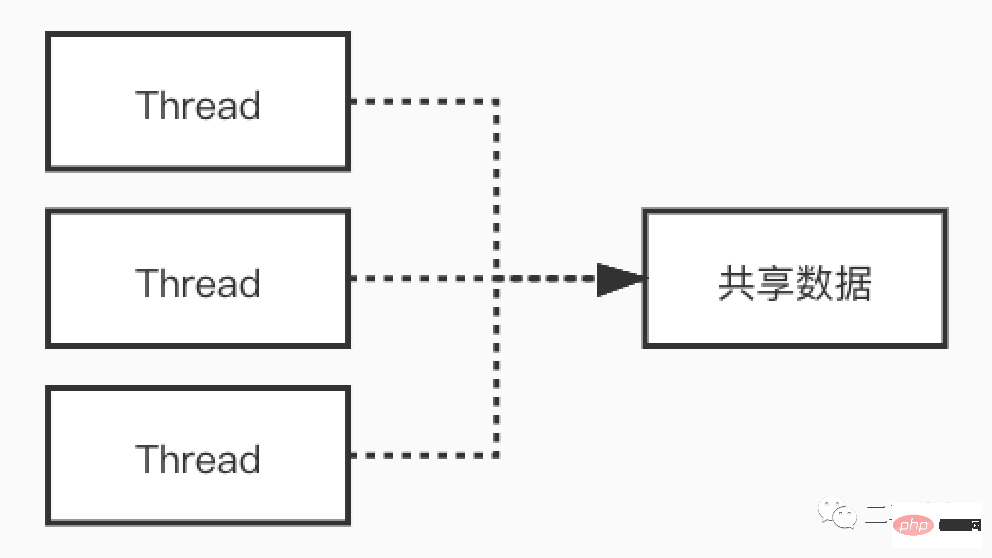

We know that multi-threading can make full use of multi-core CPUs and achieve high concurrency In this scenario, it can reduce the CPU loss caused by I/O waiting and bring good performance. However, multi-threading is a double-edged sword. While it brings benefits, it also brings difficulties in code maintenance, difficulty in locating and debugging online problems, deadlocks and other problems. The execution process of code in the multi-threading model is no longer serial, and shared variables accessed by multiple threads at the same time will also cause strange problems if not handled properly.

# Let’s use an example to take a look at the strange phenomena that occur in multi-thread scenarios. Look at the code below: When

class MemoryReordering {

int num = 0;

boolean flag = false;

public void set() {

num = 1; //语句1

flag = true; //语句2

}

public int cal() {

if( flag == true) { //语句3

return num + num; //语句4

}

return -1;

}

}flag is true, what is the return value of the cal() method? Many people will say: Do you even need to ask? Definitely returns 2

结果可能会让你大吃一惊!上面的这段代码,由于语句1和语句2没有数据依赖性,可能会发生指令重排序,有可能编译器会把flag=true放到num=1的前面。此时set和cal方法分别在不同线程中执行,没有先后关系。cal方法,只要flag为true,就会进入if的代码块执行相加的操作。可能的顺序是:

语句1先于语句2执行,这时的执行顺序可能是:语句1->语句2->语句3->语句4。执行语句4前,num = 1,所以cal的返回值是2

语句2先于语句1执行,这时的执行顺序可能是:语句2->语句3->语句4->语句1。执行语句4前,num = 0,所以cal的返回值是0

我们可以看到,在多线程环境下如果发生了指令重排序,会对结果造成严重影响。

当然可以在第三行处,给flag加上关键字volatile来避免指令重排。即在flag处加上了内存栅栏,来阻隔flag(栅栏)前后的代码的重排序。当然多线程还会带来可见性问题,死锁问题以及共享资源安全等问题。

boolean volatile flag = false;

Redis6.0为何引入多线程?

Redis6.0引入的多线程部分,实际上只是用来处理网络数据的读写和协议解析,执行命令仍然是单一工作线程。

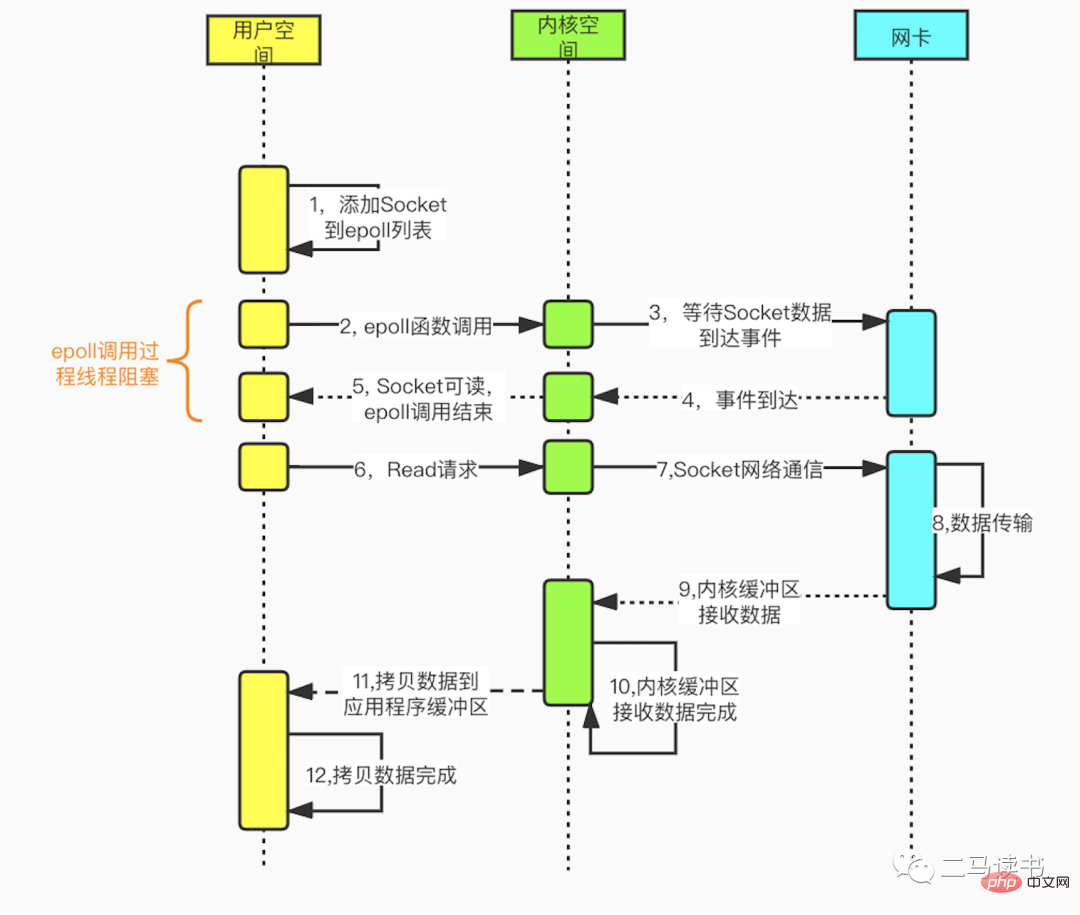

从上图我们可以看到Redis在处理网络数据时,调用epoll的过程是阻塞的,也就是说这个过程会阻塞线程,如果并发量很高,达到几万的QPS,此处可能会成为瓶颈。一般我们遇到此类网络IO瓶颈的问题,可以增加线程数来解决。开启多线程除了可以减少由于网络I/O等待造成的影响,还可以充分利用CPU的多核优势。Redis6.0也不例外,在此处增加了多线程来处理网络数据,以此来提高Redis的吞吐量。当然相关的命令处理还是单线程运行,不存在多线程下并发访问带来的种种问题。

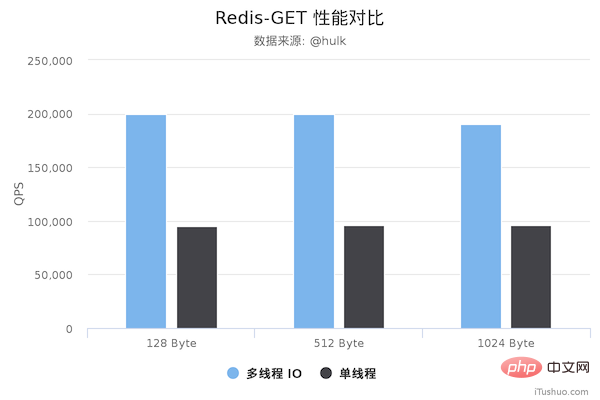

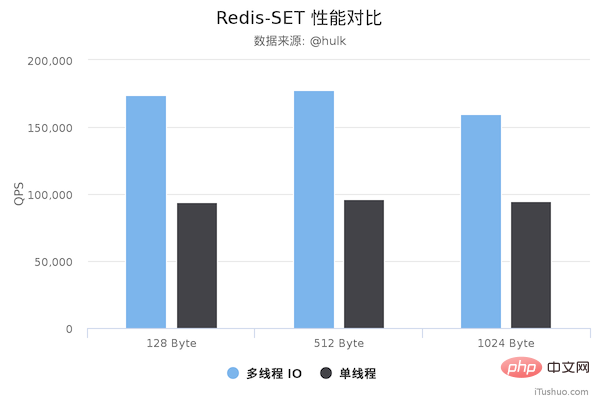

性能对比

压测配置:

Redis Server: 阿里云 Ubuntu 18.04,8 CPU 2.5 GHZ, 8G 内存,主机型号 ecs.ic5.2xlarge Redis Benchmark Client: 阿里云 Ubuntu 18.04,8 2.5 GHZ CPU, 8G 内存,主机型号 ecs.ic5.2xlarge

多线程版本Redis 6.0,单线程版本是 Redis 5.0.5。多线程版本需要新增以下配置:

io-threads 4 # 开启 4 个 IO 线程 io-threads-do-reads yes # 请求解析也是用 IO 线程

压测命令: redis-benchmark -h 192.168.0.49 -a foobared -t set,get -n 1000000 -r 100000000 --threads 4 -d ${datasize} -c 256

图片来源于网络

图片来源于网络

从上面可以看到 GET/SET 命令在多线程版本中性能相比单线程几乎翻了一倍。另外,这些数据只是为了简单验证多线程 I/O 是否真正带来性能优化,并没有针对具体的场景进行压测,数据仅供参考。本次性能测试基于 unstble 分支,不排除后续发布的正式版本的性能会更好。

最后

可见单线程有单线程的好处,多线程有多线程的优势,只有充分理解其中的本质原理,才能灵活运用于生产实践当中。

The above is the detailed content of Why does Redis6.0 introduce multi-threading?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

How do I choose a shard key in Redis Cluster?

Mar 17, 2025 pm 06:55 PM

How do I choose a shard key in Redis Cluster?

Mar 17, 2025 pm 06:55 PM

The article discusses choosing shard keys in Redis Cluster, emphasizing their impact on performance, scalability, and data distribution. Key issues include ensuring even data distribution, aligning with access patterns, and avoiding common mistakes l

How do I implement authentication and authorization in Redis?

Mar 17, 2025 pm 06:57 PM

How do I implement authentication and authorization in Redis?

Mar 17, 2025 pm 06:57 PM

The article discusses implementing authentication and authorization in Redis, focusing on enabling authentication, using ACLs, and best practices for securing Redis. It also covers managing user permissions and tools to enhance Redis security.

How do I use Redis for job queues and background processing?

Mar 17, 2025 pm 06:51 PM

How do I use Redis for job queues and background processing?

Mar 17, 2025 pm 06:51 PM

The article discusses using Redis for job queues and background processing, detailing setup, job definition, and execution. It covers best practices like atomic operations and job prioritization, and explains how Redis enhances processing efficiency.

How do I implement cache invalidation strategies in Redis?

Mar 17, 2025 pm 06:46 PM

How do I implement cache invalidation strategies in Redis?

Mar 17, 2025 pm 06:46 PM

The article discusses strategies for implementing and managing cache invalidation in Redis, including time-based expiration, event-driven methods, and versioning. It also covers best practices for cache expiration and tools for monitoring and automat

How do I monitor the performance of a Redis Cluster?

Mar 17, 2025 pm 06:56 PM

How do I monitor the performance of a Redis Cluster?

Mar 17, 2025 pm 06:56 PM

Article discusses monitoring Redis Cluster performance and health using tools like Redis CLI, Redis Insight, and third-party solutions like Datadog and Prometheus.

How do I use Redis for pub/sub messaging?

Mar 17, 2025 pm 06:48 PM

How do I use Redis for pub/sub messaging?

Mar 17, 2025 pm 06:48 PM

The article explains how to use Redis for pub/sub messaging, covering setup, best practices, ensuring message reliability, and monitoring performance.

How do I use Redis for session management in web applications?

Mar 17, 2025 pm 06:47 PM

How do I use Redis for session management in web applications?

Mar 17, 2025 pm 06:47 PM

The article discusses using Redis for session management in web applications, detailing setup, benefits like scalability and performance, and security measures.

How do I secure Redis against common vulnerabilities?

Mar 17, 2025 pm 06:57 PM

How do I secure Redis against common vulnerabilities?

Mar 17, 2025 pm 06:57 PM

Article discusses securing Redis against vulnerabilities, focusing on strong passwords, network binding, command disabling, authentication, encryption, updates, and monitoring.