What HBase relies on to store its underlying data

HBase relies on HDFS (the Hadoop Distributed File System) to store its underlying data. HBase uses HDFS as its file storage system, which gives it highly reliable underlying storage; HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware.

HBase (Hadoop Database) is a highly reliable, high-performance, column-oriented, scalable distributed storage system. With HBase, a large-scale structured storage cluster can be built on inexpensive PC servers.
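To make "column-oriented" more concrete: like Bigtable, HBase addresses each cell by row key, column (written `family:qualifier`) and timestamp, keeps rows sorted by row key, and can retain several timestamped versions of a value. The sketch below is a toy, in-memory model of that logical layout under those assumptions; `ToyTable` and its methods are hypothetical names, not the HBase client API, and real HBase persists its cells as files on HDFS rather than in a Python dict.

```python
from collections import defaultdict


class ToyTable:
    """Toy in-memory model of the HBase/Bigtable logical data model.

    Hypothetical illustration only, not the HBase client API. A cell is
    addressed by (row key, "family:qualifier", timestamp); each column can
    keep several timestamped versions of its value.
    """

    def __init__(self, families):
        # Column families are declared up front; qualifiers can be added freely.
        self.families = set(families)
        # row key -> column ("family:qualifier") -> {timestamp: value}
        self.rows = defaultdict(lambda: defaultdict(dict))

    def put(self, row, column, value, ts):
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        self.rows[row][column][ts] = value

    def get(self, row, column, ts=None):
        """Return the newest version at or before `ts` (newest overall if ts is None)."""
        versions = self.rows.get(row, {}).get(column, {})
        eligible = [t for t in versions if ts is None or t <= ts]
        return versions[max(eligible)] if eligible else None

    def scan(self, start_row=None, stop_row=None):
        """Yield (row, latest values) in sorted row-key order; stop_row is exclusive."""
        for row in sorted(self.rows):
            if start_row is not None and row < start_row:
                continue
            if stop_row is not None and row >= stop_row:
                break
            yield row, {col: self.get(row, col) for col in sorted(self.rows[row])}


# Usage: two versions of the same cell, and rows returned in row-key order.
table = ToyTable(["info"])
table.put("user#002", "info:name", "Bob", ts=1)
table.put("user#001", "info:name", "Alice", ts=1)
table.put("user#001", "info:name", "Alicia", ts=2)
print(table.get("user#001", "info:name"))        # Alicia
print(table.get("user#001", "info:name", ts=1))  # Alice
print([row for row, _ in table.scan()])          # ['user#001', 'user#002']
```

Keeping rows sorted by key is what makes range scans cheap in this model: a scan simply walks keys in order between a start and stop row.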

HBase is an open-source implementation of Google Bigtable. Just as Bigtable uses GFS as its file storage system, HBase uses Hadoop HDFS; just as Google runs MapReduce to process the massive data in Bigtable, HBase uses Hadoop MapReduce to process the massive data it stores; and just as Bigtable uses Chubby as its coordination service, HBase uses ZooKeeper as its counterpart.

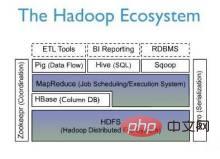

The figure above shows the layers of the Hadoop ecosystem. HBase sits in the structured storage layer: Hadoop HDFS provides it with highly reliable underlying storage, Hadoop MapReduce provides high-performance computing capabilities, and ZooKeeper provides stable coordination services and a failover mechanism.

HDFS

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware. It has much in common with existing distributed file systems, but the differences are also significant: HDFS is highly fault-tolerant and is designed to be deployed on low-cost machines. It provides high-throughput access to application data and is well suited to applications with large data sets. HDFS relaxes some POSIX requirements to enable streaming access to file system data. HDFS was originally built as infrastructure for the Apache Nutch search engine project and is part of the Apache Hadoop Core project.
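Much of HDFS's fault tolerance comes from replication: each file is split into fixed-size blocks, and each block is stored on several DataNodes. The back-of-the-envelope sketch below works through what that means for a single 300 MB file. `block_layout` is a hypothetical helper, and it assumes the common Hadoop defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); a given cluster or file may use different values.

```python
MB = 1024 * 1024


def block_layout(file_size, block_size=128 * MB, replication=3):
    """Back-of-the-envelope HDFS block math for one file (hypothetical helper).

    The defaults mirror common Hadoop settings (dfs.blocksize = 128 MB,
    dfs.replication = 3); both are configurable per cluster and per file.
    """
    num_blocks = (file_size + block_size - 1) // block_size  # ceiling division
    # Every block is full-size except possibly the last, which holds only the remaining bytes.
    sizes = [block_size] * max(num_blocks - 1, 0)
    if num_blocks:
        sizes.append(file_size - block_size * (num_blocks - 1))
    return {
        "block_sizes": sizes,
        "replicas": num_blocks * replication,  # block copies spread across DataNodes
        "raw_bytes": file_size * replication,  # total disk space consumed
    }


layout = block_layout(300 * MB)
print([size // MB for size in layout["block_sizes"]])  # [128, 128, 44]
print(layout["replicas"])                              # 9
print(layout["raw_bytes"] // MB)                       # 900
```

The trade-off shows in the last number: if each block's three replicas sit on different DataNodes, losing any single DataNode still leaves at least two copies of every block, at the cost of three times the raw disk space.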

HDFS uses a master/slave architecture. An HDFS cluster consists of one NameNode and a number of DataNodes. The NameNode is the master server: it manages the file system namespace and regulates clients' access to files. The DataNodes in the cluster manage the data stored on the nodes they run on.
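The division of labor between the NameNode and the DataNodes can be illustrated with a toy, single-process model: the NameNode holds only metadata (which blocks make up a file and where their replicas live), the DataNodes hold the bytes, and a client asks the NameNode for metadata but reads the data directly from DataNodes. All class and function names below are hypothetical, the 4-byte block size and round-robin placement are simplifications chosen for readability, and none of this is the real HDFS client API.

```python
class DataNode:
    """Toy DataNode: stores raw block contents keyed by block ID."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}
        self.alive = True

    def read(self, block_id):
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        return self.blocks[block_id]


class NameNode:
    """Toy NameNode: keeps only metadata (the namespace and block locations), never file data."""

    def __init__(self, datanodes, replication=2):
        self.datanodes = datanodes
        self.replication = replication
        self.namespace = {}   # file path -> ordered list of block IDs
        self.locations = {}   # block ID -> DataNodes holding a replica
        self._next_id = 0

    def create(self, path):
        self.namespace[path] = []

    def add_block(self, path):
        """Allocate a block for `path` and pick the DataNodes that will store it."""
        block_id = self._next_id
        self._next_id += 1
        # Simple round-robin placement; real HDFS uses a rack-aware placement policy.
        n = len(self.datanodes)
        targets = [self.datanodes[(block_id + i) % n] for i in range(self.replication)]
        self.namespace[path].append(block_id)
        self.locations[block_id] = targets
        return block_id, targets

    def get_block_locations(self, path):
        return [(block_id, self.locations[block_id]) for block_id in self.namespace[path]]


def client_write(namenode, path, data, block_size=4):
    """Client asks the NameNode where to put each block, then sends the bytes to DataNodes."""
    namenode.create(path)
    for offset in range(0, len(data), block_size):
        block_id, targets = namenode.add_block(path)
        for dn in targets:  # real HDFS pipelines the write from one DataNode to the next
            dn.blocks[block_id] = data[offset:offset + block_size]


def client_read(namenode, path):
    """Client gets block locations from the NameNode, then reads each block from a live replica."""
    chunks = []
    for block_id, replicas in namenode.get_block_locations(path):
        for dn in replicas:
            try:
                chunks.append(dn.read(block_id))
                break
            except ConnectionError:
                continue  # this DataNode failed; try the next replica
        else:
            raise OSError(f"all replicas of block {block_id} are unavailable")
    return b"".join(chunks)


# Usage: write a file, take one DataNode down, and read it back anyway.
nodes = [DataNode(f"dn{i}") for i in range(3)]
nn = NameNode(nodes)
client_write(nn, "/hbase/demo", b"hello hdfs!")
nodes[0].alive = False
print(client_read(nn, "/hbase/demo"))  # b'hello hdfs!'
```

Because the read path needs only one live replica per block, taking `dn0` down does not affect the read. In miniature, this is the kind of reliability that HBase depends on HDFS to provide.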

The above is the detailed content of What hbase relies on to store underlying data. For more information, please follow other related articles on the PHP Chinese website!
