Detailed explanation of performance optimization of MySQL batch SQL insertion


For systems with large amounts of data, the database faces not only low query efficiency but also long data-load times. For reporting systems in particular, the daily data import may take several hours, or even more than ten. Optimizing database insert performance is therefore worthwhile.

Insert multiple rows with one SQL statement

Typical single-row statements:

INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('0', 'userid_0', 'content_0', 0);
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('1', 'userid_1', 'content_1', 1);

Merged into one statement:

INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('0', 'userid_0', 'content_0', 0), ('1', 'userid_1', 'content_1', 1);

- The main reason the merged statement executes faster is that it reduces the volume of logs (MySQL's binlog and InnoDB's transaction log), which lowers both the amount of data flushed and the frequency of log flushing.

- Merging SQL statements also reduces the number of times SQL has to be parsed and cuts network transmission I/O.
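The merge described above can be generated from application code. The following is a minimal sketch, not a definitive implementation: `build_multi_row_insert` is a hypothetical helper name, and the `%s` placeholder style is an assumption based on what common Python MySQL drivers accept. It builds one statement with a value group per row and returns the values separately so the driver can escape them.

```python
def build_multi_row_insert(table, columns, rows):
    """Return (sql, params) for one INSERT statement that carries every row."""
    if not rows:
        raise ValueError("rows must not be empty")
    if any(len(row) != len(columns) for row in rows):
        raise ValueError("every row needs one value per column")
    column_list = ", ".join(f"`{name}`" for name in columns)
    # One "(%s, %s, ...)" group per row; the values travel as parameters.
    # The %s placeholder style is an assumption about the driver in use.
    row_group = "(" + ", ".join(["%s"] * len(columns)) + ")"
    sql = (
        f"INSERT INTO `{table}` ({column_list}) VALUES "
        + ", ".join([row_group] * len(rows))
    )
    # Flatten the rows so parameter order matches placeholder order.
    params = [value for row in rows for value in row]
    return sql, params


rows = [("0", "userid_0", "content_0", 0), ("1", "userid_1", "content_1", 1)]
sql, params = build_multi_row_insert(
    "insert_table", ["datetime", "uid", "content", "type"], rows
)
print(sql)
```

The table and column names are interpolated directly, so they should come from trusted code rather than user input.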

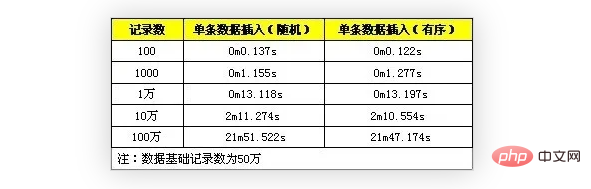

The test compares importing rows one statement at a time against importing them merged into a single SQL statement.

Insertion processing in transactions

START TRANSACTION;
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('0', 'userid_0', 'content_0', 0);
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('1', 'userid_1', 'content_1', 1);
...
COMMIT;

- Using a transaction improves insert efficiency. When an INSERT runs on its own, MySQL creates a transaction internally and performs the actual insert inside it.

- Wrapping the inserts in one explicit transaction avoids the cost of creating a transaction per statement: all inserts are executed first and committed together at the end.
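The same pattern can be sketched from application code through Python's DB-API. This is an illustration under stated assumptions: `insert_in_one_transaction` is a hypothetical helper, and the standard-library `sqlite3` module stands in for a MySQL connection only so the sketch runs without a server (which is also why the placeholder here is `?`). With a MySQL driver, autocommit would need to be off for the statements to share one transaction.

```python
import sqlite3


def insert_in_one_transaction(conn, sql, rows):
    """Execute every insert, then commit once; roll back if any insert fails."""
    cur = conn.cursor()
    try:
        for row in rows:
            cur.execute(sql, row)
        conn.commit()  # a single COMMIT covers the whole batch
    except Exception:
        conn.rollback()
        raise
    finally:
        cur.close()
    return len(rows)


# sqlite3 is a stand-in here (assumption); the article's target is MySQL.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE insert_table (datetime TEXT, uid TEXT, content TEXT, type INTEGER)"
)
rows = [(str(i), f"userid_{i}", f"content_{i}", i) for i in range(3)]
inserted = insert_in_one_transaction(
    conn,
    "INSERT INTO insert_table (datetime, uid, content, type) VALUES (?, ?, ?, ?)",
    rows,
)
```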

The test compares inserts performed without a transaction against inserts wrapped in a transaction.

Orderly insertion of data

Ordered insertion means the inserted records are sorted by primary key.

Unordered:

INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('1', 'userid_1', 'content_1', 1);
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('0', 'userid_0', 'content_0', 0);
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('2', 'userid_2', 'content_2', 2);

Ordered:

INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('0', 'userid_0', 'content_0', 0);
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('1', 'userid_1', 'content_1', 1);
INSERT INTO `insert_table` (`datetime`, `uid`, `content`, `type`) VALUES ('2', 'userid_2', 'content_2', 2);

- Since the database must maintain index data when inserting, unordered records increase the cost of maintaining the index.

Consider the B+ tree index used by InnoDB. If each inserted record lands at the end of the index, positioning is very efficient and the index needs little adjustment. If the inserted record lands in the middle of the index, B+ tree nodes must be split and merged, which consumes more computing resources and slows down positioning of the inserted records. When the data volume is large, this leads to frequent disk operations.
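When the rows are assembled in application code, they can be sorted before they are sent. A minimal sketch, assuming the first column of each row is the primary key (the article does not say which column is); `sort_by_primary_key` is a hypothetical helper name.

```python
from operator import itemgetter


def sort_by_primary_key(rows, key_index=0):
    """Return the rows ordered by the column at key_index (assumed primary key)."""
    # The sort key must order values the same way the column does in MySQL;
    # for example, numeric keys stored as strings should be converted first.
    return sorted(rows, key=itemgetter(key_index))


unordered = [
    ("1", "userid_1", "content_1", 1),
    ("0", "userid_0", "content_0", 0),
    ("2", "userid_2", "content_2", 2),
]
ordered = sort_by_primary_key(unordered)
```

Sorting in memory only helps within one batch; rows that arrive across several batches still need to be roughly ordered relative to each other for new records to land near the end of the index.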

The test compares insert performance for randomly ordered data against sequentially ordered data.

Drop the indexes first, then rebuild them after the insert completes.

Comprehensive performance test

- Merged data + transaction: when the data volume is small, this method improves performance noticeably. When the data volume is large, performance drops sharply, because the data exceeds the capacity of the InnoDB buffer pool. Each index lookup then involves more disk reads and writes, and performance declines quickly.

- Merged data + transaction + ordered data: this method still performs well when the data volume reaches tens of millions of rows. With ordered data, index positioning is cheaper and does not require frequent disk reads and writes, so performance stays high.

Notes

- SQL statements have a length limit, so a merged statement must stay within it. The limit is set by the max_allowed_packet configuration item. The default is 1M in older MySQL versions (newer versions ship with a larger default), and it was raised to 8M for the tests.

- Transactions need to be kept to a controlled size; a transaction that is too large may hurt execution efficiency. MySQL has the innodb_log_buffer_size configuration item: once this value is exceeded, InnoDB flushes the log data to disk, and efficiency drops. A better approach is to commit the transaction before the data reaches this value.
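One way to respect both limits is to split the rows into batches by estimated size before building each merged statement or transaction. The sketch below is a rough illustration: `chunk_rows_by_size` and `per_row_overhead` are hypothetical names, and the byte estimate ignores escaping and the statement prefix, so the budget should sit well below the configured max_allowed_packet or innodb_log_buffer_size.

```python
def chunk_rows_by_size(rows, max_bytes, per_row_overhead=16):
    """Yield lists of rows whose estimated encoded size stays within max_bytes."""
    batch, batch_bytes = [], 0
    for row in rows:
        # Rough estimate: UTF-8 length of each value plus a fixed allowance
        # for quotes, commas, and parentheses (an assumption, not a measurement).
        row_bytes = per_row_overhead + sum(
            len(str(value).encode("utf-8")) for value in row
        )
        if row_bytes > max_bytes:
            raise ValueError("a single row exceeds the size budget")
        if batch and batch_bytes + row_bytes > max_bytes:
            yield batch
            batch, batch_bytes = [], 0
        batch.append(row)
        batch_bytes += row_bytes
    if batch:
        yield batch


rows = [("x" * 10,) for _ in range(5)]
batches = list(chunk_rows_by_size(rows, max_bytes=25, per_row_overhead=0))
```

Each yielded batch can then become one merged INSERT, or one transaction that is committed before the next batch starts.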
