Ensuring double-write consistency between cache and database in Java


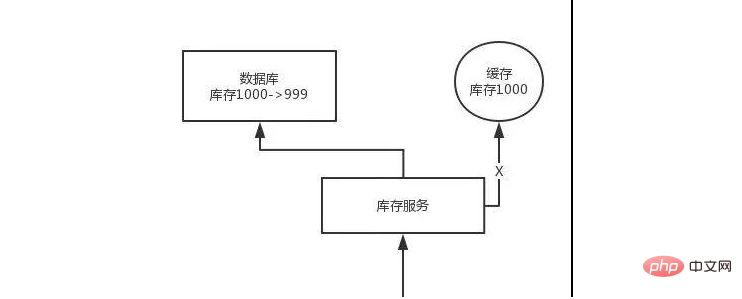

A distributed cache is an indispensable component in many distributed applications. However, once you use one, you may end up storing and writing the same data twice, in both the cache and the database. As soon as you double-write, data consistency problems are bound to appear. So how do you solve them?

Cache Aside Pattern

The classic read/write pattern for a cache plus a database is the Cache Aside Pattern.

When reading, read the cache first. On a cache miss, read the database, put the result into the cache, and return the response.

When updating, update the database first and then delete the cache.
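The read and update paths described above can be sketched as follows. This is a minimal illustration, not a production implementation: the class name `CacheAsideStore` is hypothetical, and both the cache and the database are plain in-memory maps standing in for a real cache server and a real database.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Cache Aside sketch. Both stores are in-memory stand-ins (an assumption for illustration). */
class CacheAsideStore {
    private final Map<String, String> cache = new ConcurrentHashMap<>();    // stand-in for a cache server
    private final Map<String, String> database = new ConcurrentHashMap<>(); // stand-in for a database

    /** Read path: cache first; on a miss, load from the database and populate the cache. */
    Optional<String> read(String key) {
        String cached = cache.get(key);
        if (cached != null) {
            return Optional.of(cached);
        }
        String fromDb = database.get(key);
        if (fromDb != null) {
            cache.put(key, fromDb);
        }
        return Optional.ofNullable(fromDb);
    }

    /** Update path: write the database first, then delete (not update) the cache entry. */
    void update(String key, String value) {
        database.put(key, value);
        cache.remove(key);
    }

    /** Exposed only so the behaviour can be observed from outside. */
    boolean isCached(String key) {
        return cache.containsKey(key);
    }
}
```

After `update("k", "v1")` the entry is absent from the cache; the first `read("k")` loads it from the database and caches it, and a later `update` removes it again.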

Why delete the cache instead of updating the cache?

The reason is simple. In complex caching scenarios, the cached value is often not just a value taken directly from the database.

For example, when a field of one table is updated, the corresponding cache entry may need data from two other tables, plus some computation, to produce its latest value.

In addition, updating the cache can be expensive. Must the cache be updated every time the database is modified? In some scenarios, yes, but not when the cached data is costly to compute. If the tables behind a cache entry are modified frequently, the entry is also updated frequently. But the question is: will that entry be read frequently?

For example, if a field of a table behind a cache entry is modified 20 or 100 times in 1 minute, the entry is updated 20 or 100 times; yet it may be read only once in that minute, which makes it largely cold data. If you simply delete the entry instead, it is recomputed at most once in that minute, and the overhead drops sharply. The cache is computed only when it is actually used.

Deleting the cache instead of updating it is really lazy computation: don't redo a complex calculation on every change regardless of whether the result will be used; recompute it only when it is needed. MyBatis and Hibernate apply the same idea with lazy loading. When you query a department that holds a list of employees, there is no need to load all 1,000 employees every time you query the department. In 80% of cases you only need the department's own information. So query the department first, and query the database for the 1,000 employees only when you actually access them.
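To make the cost difference concrete, the sketch below counts how often an expensive value is recomputed under the two strategies. The class `LazyCacheEntry` and its method names are hypothetical, and the `Supplier` stands in for whatever multi-table calculation produces the cached value.

```java
import java.util.function.Supplier;

/** Counts recomputations of a derived value under eager update versus lazy delete. */
class LazyCacheEntry {
    private final Supplier<String> expensiveCompute; // hypothetical multi-table calculation
    private String cached;                           // null means "deleted / not cached"
    int computeCount = 0;

    LazyCacheEntry(Supplier<String> expensiveCompute) {
        this.expensiveCompute = expensiveCompute;
    }

    /** Eager strategy: recompute the cached value on every database write. */
    void onWriteEager() {
        cached = compute();
    }

    /** Lazy strategy: only drop the cached value on a database write. */
    void onWriteLazy() {
        cached = null;
    }

    /** Read: recompute only if the value is missing. */
    String read() {
        if (cached == null) {
            cached = compute();
        }
        return cached;
    }

    private String compute() {
        computeCount++;
        return expensiveCompute.get();
    }
}
```

With 100 writes followed by a single read, the eager strategy runs the calculation 100 times, while the lazy strategy runs it once, at read time.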

The most basic cache inconsistency problem and solution

Problem: If you modify the database first and then delete the cache, and the deletion fails, the database holds new data while the cache holds old data, so the two are inconsistent.

Solution: Delete the cache first, then modify the database. If the database modification fails, the database still holds old data and the cache is empty, so there is no inconsistency: a read misses the cache, reads the old data from the database, and writes it back to the cache.

Analysis of more complex data inconsistency issues

Data changes: the cache is deleted first, and the database is about to be modified but has not been yet. A read request arrives, finds the cache empty, queries the database, gets the old pre-modification data, and puts it into the cache. Then the writer completes the database modification.

Uh-oh: the database and the cache now hold different data...
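The interleaving is easier to see when replayed step by step. The sketch below runs the writer's and reader's steps on a single thread in the problematic order, so the outcome is deterministic; the class name, the key `stock`, and the values `old` and `new` are made up for illustration.

```java
import java.util.HashMap;
import java.util.Map;

/** Replays the "delete cache, concurrent read, then update database" interleaving. */
class StaleCacheRace {
    /** Returns {value left in the cache, value in the database} after the interleaving. */
    static String[] replay() {
        Map<String, String> cache = new HashMap<>();
        Map<String, String> database = new HashMap<>();
        database.put("stock", "old");
        cache.put("stock", "old");

        // Writer, step 1: delete the cache entry.
        cache.remove("stock");

        // Reader: cache miss, so read the database (still old) and repopulate the cache.
        String seen = cache.get("stock");
        if (seen == null) {
            seen = database.get("stock");
            cache.put("stock", seen);
        }

        // Writer, step 2: the database update finally completes.
        database.put("stock", "new");

        return new String[] { cache.get("stock"), database.get("stock") };
    }
}
```

The cache ends up holding `old` while the database holds `new`, and nothing corrects the cache until the entry is deleted or expires.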

Why does this problem appear in high-concurrency scenarios with hundreds of millions of requests?

This problem can occur only when the same piece of data is read and written concurrently. If your concurrency is very low, especially read concurrency, say 10,000 visits per day, the inconsistent scenario just described will occur only rarely. But with hundreds of millions of requests per day and tens of thousands of concurrent reads per second, as long as there are update requests every second, the database-cache inconsistency described above can occur.

The solution is as follows:

When updating data, route the operation by the data's unique identifier and send it to a JVM-internal queue. When a read finds that the data is not in the cache, it issues a "re-read the data and update the cache" operation, which is routed by the same unique identifier and sent to the same JVM-internal queue.

Each queue has one worker thread, which takes the operations serially and executes them one by one. For a data change, the cache is deleted first and then the database is updated. If a read request arrives before the update completes and finds the cache empty, it sends a cache update request to the queue, where it sits behind the pending write; the read then waits synchronously for the cache update to complete.

There is one optimization here. Stringing several cache update requests for the same data together in a queue is pointless, so they can be filtered: if the queue already contains a cache update request for that data, don't enqueue another one; just wait for the existing request to complete.

Once the worker thread for that queue finishes the database modification of the previous operation, it executes the next operation, the cache update, which reads the latest value from the database and writes it to the cache.

If the read request, polling within its wait time, finds that the value has become available, it returns it directly; if it has waited longer than a set timeout, it reads the current, possibly old, value directly from the database.
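One way the queue-based scheme above might look in code is sketched below. It is a simplified illustration under several assumptions: single-thread executors play the role of the per-queue worker threads, in-memory maps stand in for the cache and the database, a `Future` with a timeout replaces explicit polling, and the class and method names (`SerializedCacheService`, `queueFor`, `pendingRefresh`) are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.*;

/** Serializes writes and cache refreshes for the same key on one single-threaded queue. */
class SerializedCacheService {
    private final Map<String, String> cache = new ConcurrentHashMap<>();
    private final Map<String, String> database = new ConcurrentHashMap<>();
    // At most one queued cache refresh per key (the "filtering" optimization).
    private final Map<String, Future<String>> pendingRefresh = new ConcurrentHashMap<>();
    private final ExecutorService[] queues;

    SerializedCacheService(int queueCount) {
        queues = new ExecutorService[queueCount];
        for (int i = 0; i < queueCount; i++) {
            queues[i] = Executors.newSingleThreadExecutor(); // one worker thread per queue
        }
    }

    /** Routes a key to its queue by hashing the unique identifier. */
    private ExecutorService queueFor(String key) {
        return queues[Math.floorMod(key.hashCode(), queues.length)];
    }

    /** Write: delete the cache entry, then update the database, on the key's queue. */
    Future<?> write(String key, String value) {
        return queueFor(key).submit(() -> {
            cache.remove(key);
            database.put(key, value);
        });
    }

    /** Read: on a miss, enqueue (or join) a refresh and wait up to timeoutMillis for it. */
    String read(String key, long timeoutMillis) throws InterruptedException {
        String cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        Future<String> refresh = pendingRefresh.computeIfAbsent(key, k ->
            queueFor(k).submit(() -> {
                try {
                    String fresh = database.get(k); // runs after earlier writes on this queue
                    if (fresh != null) {
                        cache.put(k, fresh);
                    }
                    return fresh;
                } finally {
                    pendingRefresh.remove(k);
                }
            }));
        try {
            return refresh.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException | ExecutionException e) {
            return database.get(key); // timed out: fall back to the current database value
        }
    }

    void shutdown() {
        for (ExecutorService q : queues) {
            q.shutdown();
        }
    }
}
```

Because a refresh is queued behind any write already submitted for the same key, it reads the database only after that write has finished. A caller that times out still gets an answer, at the cost of possibly seeing the old value.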

In high concurrency scenarios, issues that need to be paid attention to with this solution:

1. Read requests are blocked for a long time

Because read requests become slightly asynchronous in this scheme, be sure to watch the read timeout: every read request must return within the timeout.

The biggest risk of this solution is that data may be updated so frequently that update operations pile up in the queue, read requests then time out in large numbers, and a large number of requests end up going directly to the database. Be sure to run tests that simulate real traffic to see how frequently data is updated.

Also, because one queue may hold a backlog of update operations for many data items, you need to test against your own business conditions. You may need to deploy multiple service instances, with each instance handling the update operations for a share of the data. If a memory queue has a backlog of inventory modifications for 100 products and each modification takes 10 ms, the read request for the last product may wait 10 × 100 = 1000 ms = 1 s before it gets its data, which means read requests are blocked for a long time.

Be sure to run stress tests that simulate the online environment, based on how the business system actually behaves, to see how many update operations a memory queue may accumulate at the busiest time, and therefore how long the read request behind the last update operation will hang. If read requests must return within 200 ms, and your calculation shows that even at the busiest time the backlog is 10 update operations with a maximum wait of 200 ms, then that is acceptable.

If a memory queue may accumulate too many update operations, add machines so that the service instance on each machine handles less data; the backlog in each memory queue then shrinks.

In practice, based on previous project experience, data is written at a low frequency, so the backlog of update operations in the queues should normally be very small. Projects like this, with a caching architecture built for high read concurrency, generally see very few write requests; a write QPS of a few hundred is already on the high side.

A rough calculation

Suppose there are 500 write operations per second. Split each second into 5 time slices and you get 100 write operations every 200 ms. Spread over 20 memory queues, each queue may have a backlog of 5 write operations. Performance tests typically show each write operation completing in about 20 ms, so a read request for data in any queue hangs only briefly and returns well within 200 ms.

From this simple calculation, a single machine can support a write QPS in the hundreds without trouble. If the write QPS grows 10 times, scale out the machines 10 times as well, with 20 queues per machine.
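The arithmetic can be written down as a small helper, which makes it easy to plug in your own measurements. The class and method names are hypothetical, and the estimate assumes that writes are spread evenly across the queues, which real traffic may not do.

```java
/** Back-of-the-envelope backlog and wait estimates; assumes writes spread evenly over queues. */
class QueueBacklogEstimate {
    /** Writes that land in one queue during a window of windowMillis (rounded up). */
    static long backlogPerQueue(long writesPerSecond, long windowMillis, int queueCount) {
        long writesInWindow = writesPerSecond * windowMillis / 1000;
        return (writesInWindow + queueCount - 1) / queueCount; // ceiling division
    }

    /** Worst-case wait for a read queued behind the whole backlog. */
    static long worstCaseWaitMillis(long backlog, long millisPerWrite) {
        return backlog * millisPerWrite;
    }
}
```

With the article's numbers, `backlogPerQueue(500, 200, 20)` gives 5 and `worstCaseWaitMillis(5, 20)` gives 100 ms, inside the 200 ms budget. The earlier inventory example, `worstCaseWaitMillis(100, 10)`, gives 1000 ms.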

2. The number of concurrent read requests is too high

You must also stress test here, because there is another risk when the situation above occurs: a large number of read requests suddenly hang on the service, each delayed by tens of milliseconds. Check whether the service can handle that, and how many machines are needed to handle the peak of the most extreme case.

But since not all data is updated at the same time, the cache entries do not all expire at once. Only the cache of a small amount of data is invalid at any one time, so the concurrent read requests arriving for that data should not be particularly numerous.

3. Request routing for multi-service instance deployment

If the service is deployed as multiple instances, you must ensure that the data update requests and the cache update requests for the same data are routed, through the Nginx server, to the same service instance.

For example, all read and write requests for the same product are routed to the same machine. You can implement your own hash routing between services based on a request parameter, or use Nginx's hash routing feature.
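A do-it-yourself version of hash routing could look like the sketch below. The class name `InstanceRouter` and the instance addresses are made up for illustration. Note that this simple modulo scheme remaps most keys whenever the number of instances changes, which a production setup would need to account for.

```java
import java.util.ArrayList;
import java.util.List;

/** Routes every request for the same product ID to the same service instance. */
class InstanceRouter {
    private final List<String> instances;

    InstanceRouter(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("at least one instance is required");
        }
        this.instances = new ArrayList<>(instances); // defensive copy
    }

    /** Same productId always maps to the same instance while the instance list is unchanged. */
    String route(String productId) {
        int index = Math.floorMod(productId.hashCode(), instances.size());
        return instances.get(index);
    }
}
```

Both the read path and the write path would call `route(productId)` before forwarding the request, so operations on one product always meet in the same instance and therefore the same in-memory queue.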

4. Routing of hot products leads to request skew

If the read and write requests for a particular product are especially numerous and all land in the same queue on the same machine, that machine may come under too much pressure. However, the cache is cleared only when the product data is updated, and only then do concurrent reads and writes pile up, so the impact depends on the business system: if the update frequency is not too high, the problem is not particularly serious, although some machines may indeed carry a higher load.
