How to install the requests library

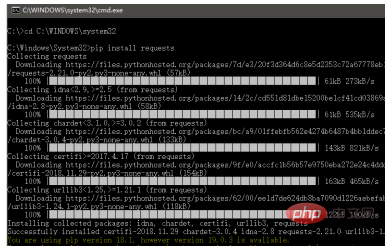

To install the requests library on Windows, open "Run", type "cmd" to open a Command Prompt, switch directories with the command "cd C:\Windows\System32", and finally run the command "pip install requests".

Environment used in this article: Windows 10, Python 2.7.14, Dell G3 computer.

First, right-click the Start button (the leftmost icon on the taskbar).

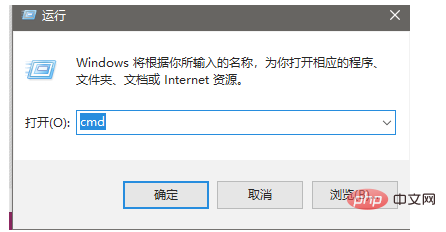

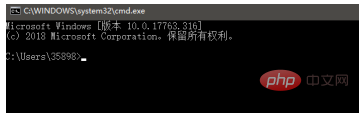

Click "Run", type "cmd" in the input box, and press Enter to open a Command Prompt.

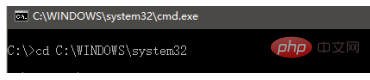

The Command Prompt shows the current directory. Switch directories with the command cd C:\Windows\System32. This step is optional: pip can be run from any directory as long as it is on the PATH.

After the switch, the prompt shows the new directory.

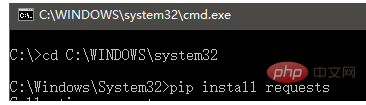

Enter the command "pip install requests" to start the installation.
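The same installation can also be started from a Python script. The following is a minimal sketch, assuming pip is installed for the interpreter running the script (pip ships with Python 2.7.9+ and 3.4+). The helper names `pip_install_command` and `install` are hypothetical, chosen for illustration.

```python
import subprocess
import sys


def pip_install_command(package):
    """Build the command that installs `package` with the running interpreter's pip.

    Hypothetical helper for illustration.
    """
    # sys.executable is the path of the current Python; "-m pip" runs the pip
    # module that belongs to it, so the package is installed for this interpreter.
    return [sys.executable, "-m", "pip", "install", package]


def install(package):
    """Run pip; check_call raises CalledProcessError if pip exits with an error."""
    subprocess.check_call(pip_install_command(package))
```

Calling `install("requests")` is equivalent to typing `python -m pip install requests` in the Command Prompt. Going through `sys.executable` avoids installing into a different Python when several versions are present on the machine.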

When pip finishes without errors and reports that requests was installed successfully, the library is ready to use.
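To confirm the installation from Python itself, a short script can try to import the library and print its version. This is a minimal sketch that runs on both Python 2.7 and Python 3. The helper `installed_version` is hypothetical, and the script should be run with the same interpreter whose pip performed the installation.

```python
import importlib


def installed_version(module_name):
    """Return the module's __version__, "unknown" if it has none, or None if it cannot be imported.

    Hypothetical helper for illustration.
    """
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # Not installed for this interpreter.
        return None
    return getattr(module, "__version__", "unknown")


version = installed_version("requests")
if version is None:
    print("requests is not installed; run: pip install requests")
else:
    print("requests " + version + " is installed")
```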

The above is the detailed content of How to install requests library. For more information, please follow other related articles on the PHP Chinese website!
