Redis key points analysis

1. Introduction

Redis (Remote Dictionary Server) is an open-source key-value database written in ANSI C. It is network-accessible, keeps its data in memory with optional persistence to disk (including a log-based mode), and provides client APIs for many languages.


Because it is easy to get started with, executes efficiently, offers a variety of data structures, and supports features such as persistence and clustering, Redis is used by many Internet companies. However, if it is used or operated improperly, it can cause serious consequences such as wasted memory or even system downtime.

2. Key points analysis

2.1 Use the correct data type

Among Redis's five basic data types (string, hash, list, set, and sorted set), the string type is the simplest and most commonly used. However, being able to solve a problem with a type does not mean it is the right type for that problem.

For example, to store a user's information (name, age, city) in Redis, there are three options:

Option 1: Use the string type, storing each attribute as a separate key

    set user:1:name laowang
    set user:1:age 40
    set user:1:city shanghai

Advantages: simple and intuitive; each attribute can be updated on its own

Disadvantages: uses many keys and therefore a lot of memory; the user's information is scattered across keys, which makes management and maintenance troublesome

Option 2: Use the string type, serializing the user information into a single string

    // Serialize the user information (serialize() is a placeholder for any serializer, e.g. JSON)
    String userInfo = serialize(user);
    set user:1 userInfo

Advantages: simplifies the storage steps

Disadvantages: serialization and deserialization add overhead, and updating a single attribute means rewriting the whole value

Option 3: Use the hash type: a single key, with one field-value pair per attribute

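    hmset user:1 name laowang age 40 city shanghai

Since Redis 4.0, HSET also accepts multiple field-value pairs (hset user:1 name laowang age 40 city shanghai), and HMSET is considered deprecated.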

Advantages: simple and intuitive; used appropriately, it can reduce memory usage

Summary: Try to reduce the number of keys in Redis.
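The trade-off between the three options can be seen by listing the commands each one would send. The Python sketch below only builds those command lists and does not connect to Redis. The user record and the use of JSON as the Option 2 serializer are assumptions for illustration.

```python
import json

# Hypothetical user record used throughout this sketch.
user = {"name": "laowang", "age": 40, "city": "shanghai"}


def option1_commands(uid, user):
    """Option 1: one string key per attribute."""
    return [("SET", f"user:{uid}:{field}", str(value)) for field, value in user.items()]


def option2_commands(uid, user):
    """Option 2: serialize the whole record (JSON here) into one string key."""
    return [("SET", f"user:{uid}", json.dumps(user, sort_keys=True))]


def option3_commands(uid, user):
    """Option 3: one hash key with one field-value pair per attribute."""
    args = []
    for field, value in user.items():
        args += [field, str(value)]
    return [("HSET", f"user:{uid}", *args)]


def distinct_keys(commands):
    # In every command tuple built above, the key is the second element.
    return {cmd[1] for cmd in commands}


for build in (option1_commands, option2_commands, option3_commands):
    cmds = build(1, user)
    print(build.__name__, "uses", len(distinct_keys(cmds)), "key(s):", cmds)
```

Option 1 needs one key per attribute, while Options 2 and 3 need one key per user. With the redis-py client, Option 3 corresponds to `r.hset("user:1", mapping=user)`.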

2.2 Be wary of big keys

A big key generally refers to a key whose string value is very large (more than 10 KB), or whose hash, list, set, or sorted set holds a large number of elements (more than 5,000).

Big keys have several negative effects on Redis:

Imbalanced memory: in a cluster, a big key lives entirely on whichever node its hash slot is assigned to, and which node that will be is not known in advance. That node ends up with a disproportionately large memory footprint, which makes it hard to manage memory uniformly across the cluster

Timeout blocking: because Redis executes commands on a single thread, operations on big keys take a long time and can easily block other requests

Expiration and deletion: big keys are slow not only to read and write but also to delete, so deleting an expired big key is time-consuming as well

Difficult migration: because of the large data volume, backup and restore can easily cause congestion and operation failures

Knowing the dangers of big keys, how do we find them? redis-cli provides the --bigkeys option: running redis-cli --bigkeys scans the keyspace and reports the biggest key it finds for each data type.

Once a big key is found, we usually split it into several small keys. This seems to contradict the summary in 2.1, but every solution has pros and cons, and which trade-off to make depends on the actual situation.
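A common way to split a big hash is to route each field to one of N smaller hashes by hashing the field name. The sketch below assumes a hypothetical key naming scheme `<base>:<bucket>`, uses `zlib.crc32` as the hash, and works on made-up data. It only computes the split in memory; writing the result would take one HSET per small key.

```python
import zlib
from collections import defaultdict


def bucket_key(base_key, field, buckets):
    """Map a field of a logical big hash to one of `buckets` smaller hash keys.

    crc32 is used because it gives the same result in every process
    (Python's built-in hash() of str is randomized per process).
    """
    index = zlib.crc32(field.encode("utf-8")) % buckets
    return f"{base_key}:{index}"


def split_big_hash(base_key, fields, buckets):
    """Return {small_key: {field: value}} for a dict of field -> value."""
    shards = defaultdict(dict)
    for field, value in fields.items():
        shards[bucket_key(base_key, field, buckets)][field] = value
    return dict(shards)


# Hypothetical big hash: 20,000 followers of one user.
big = {f"follower:{i}": "1" for i in range(20_000)}
shards = split_big_hash("user:1:followers", big, buckets=8)
print({key: len(fields) for key, fields in sorted(shards.items())})
```

Reads use the same function. To fetch a field, compute `bucket_key(base, field, N)` and run HGET on that key. Changing N later means re-sharding, so it pays to pick a bucket count that keeps each shard below the thresholds given earlier.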

Summary: Try to reduce big keys in Redis.

Supplement: To check how much memory a particular key occupies, use the MEMORY USAGE command (for example, MEMORY USAGE user:1). Note that this command was introduced in Redis 4.0, so older versions must be upgraded to use it.

2.3 Memory consumption

Even if we use the correct data types and split big keys into small keys, memory consumption problems can still occur. So where does Redis memory consumption come from? It is generally caused by the following three situations:

The business keeps growing, and the amount of stored data keeps increasing (unavoidable)

Invalid or expired data is not cleaned up in time (can be optimized)

Cold data is not downgraded or offloaded (can be optimized)

Before optimizing case 2, we need to understand why expired data is not cleaned up in time. This comes down to how expired keys are deleted. There are three common expiration deletion strategies:

Scheduled deletion: create a timer for each key that has an expiration time, and delete the key as soon as it expires

Lazy deletion: when a key is accessed, check whether it has expired, and delete it if so

Periodic deletion: at regular intervals, scan the dictionary of keys that have an expiration time and remove some of the expired ones

Because scheduled deletion requires a timer per key, it would occupy a lot of memory, and deleting large numbers of keys precisely on time would also consume a lot of CPU. Redis therefore uses lazy deletion together with periodic deletion. If no client requests an expired key and the periodic task does not happen to scan and remove it, the key keeps occupying memory, which wastes memory.
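The toy store below (not Redis's actual code) shows why lazy plus periodic deletion can leave expired keys in memory. The clock is injected so the example is deterministic. The fixed sample of 20 keys per sweep is an assumption for illustration; Redis's real active-expire cycle is time-bounded and adaptive.

```python
import random


class ExpiringStore:
    """Toy key-value store illustrating lazy and periodic expiry (not Redis's code)."""

    def __init__(self, clock):
        self.clock = clock  # callable returning the current time in seconds
        self.data = {}      # key -> value
        self.expires = {}   # key -> absolute expiry time, only for keys with a TTL

    def set(self, key, value, ttl=None):
        self.data[key] = value
        if ttl is None:
            self.expires.pop(key, None)
        else:
            self.expires[key] = self.clock() + ttl

    def _expired(self, key):
        deadline = self.expires.get(key)
        return deadline is not None and deadline <= self.clock()

    def _delete(self, key):
        self.data.pop(key, None)
        self.expires.pop(key, None)

    def get(self, key):
        # Lazy deletion: expiry is checked only when the key is accessed.
        if self._expired(key):
            self._delete(key)
            return None
        return self.data.get(key)

    def periodic_sweep(self, sample_size=20, rng=random):
        # Periodic deletion: check a random sample of keys that have a TTL and
        # delete the expired ones; expired keys outside the sample stay in memory.
        candidates = list(self.expires)
        sample = rng.sample(candidates, min(sample_size, len(candidates)))
        removed = 0
        for key in sample:
            if self._expired(key):
                self._delete(key)
                removed += 1
        return removed


now = [0.0]
store = ExpiringStore(clock=lambda: now[0])
for i in range(100):
    store.set(f"k{i}", i, ttl=10)
now[0] = 11.0                  # every key has now expired
print(len(store.data))         # 100: nothing has been reclaimed yet
print(store.get("k0"))         # None: lazy deletion removes k0 on access
print(store.periodic_sweep())  # 20: one sweep reclaims only its sample
print(len(store.data))         # 79: the rest still occupy memory
```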

After knowing the cause of memory consumption, we can quickly come up with an optimization solution: manual deletion.

Even if cached data has an expiration time, once we have finished using it we should delete it manually with the DEL command (or the client's equivalent method). If it cannot be deleted right away, we can start a timer in our code that periodically deletes such keys. Compared with Redis's two deletion strategies, clearing data manually is much more timely.
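The manual clean-up described above requires the application to remember when each key should be removed. This is a minimal sketch built on a hypothetical `ExpiryTracker` helper. It keeps a min-heap of deadlines and calls a delete function you supply; in practice that function would wrap the client's DEL (for example, redis-py's `r.delete`), and a timer would call `sweep` periodically.

```python
import heapq


class ExpiryTracker:
    """Hypothetical application-side helper that deletes cache keys once their deadline passes."""

    def __init__(self, delete):
        self.delete = delete  # callable(key), e.g. a wrapper around the client's DEL
        self.heap = []        # (deadline, key) pairs, smallest deadline first
        self.deadlines = {}   # key -> latest deadline, used to skip stale heap entries

    def track(self, key, deadline):
        self.deadlines[key] = deadline
        heapq.heappush(self.heap, (deadline, key))

    def sweep(self, now):
        """Delete every tracked key whose deadline has passed; return the deleted keys."""
        deleted = []
        while self.heap and self.heap[0][0] <= now:
            deadline, key = heapq.heappop(self.heap)
            if self.deadlines.get(key) != deadline:
                continue  # the key was re-tracked with a newer deadline
            del self.deadlines[key]
            self.delete(key)
            deleted.append(key)
        return deleted


removed = []
tracker = ExpiryTracker(delete=removed.append)
tracker.track("session:1", deadline=5)
tracker.track("session:2", deadline=15)
tracker.track("session:1", deadline=20)  # extended, so the deadline-5 entry is stale
print(tracker.sweep(now=10))  # []
print(tracker.sweep(now=30))  # ['session:2', 'session:1']
```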

Case 3 is a smaller problem. It can be handled by adjusting Redis's eviction policy through the maxmemory-policy setting (for example, allkeys-lru).
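To show what an LRU eviction policy does, here is a toy exact-LRU cache. It is a simplified model. Redis's `allkeys-lru` only approximates LRU by sampling keys instead of tracking exact recency, and it is triggered by the `maxmemory` limit rather than by a key count.

```python
from collections import OrderedDict


class LRUCache:
    """Toy exact-LRU cache that evicts the least recently used key beyond `capacity`."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # recency order: least recently used first

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def set(self, key, value):
        """Store a value and return the keys evicted to stay within capacity."""
        self.items[key] = value
        self.items.move_to_end(key)
        evicted = []
        while len(self.items) > self.capacity:
            old_key, _ = self.items.popitem(last=False)  # least recently used first
            evicted.append(old_key)
        return evicted


cache = LRUCache(capacity=2)
cache.set("hot", 1)
cache.set("cold", 2)
cache.get("hot")            # "hot" becomes the most recently used key
print(cache.set("new", 3))  # ['cold']
print(list(cache.items))    # ['hot', 'new']
```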

2.4 Execution of multiple commands

Redis serves clients over a request/response protocol: a client sends a command and waits for the reply. Redis executes commands one at a time, so when several clients send commands at once, the server processes one client's command while the others wait until it has finished and replied.

Redis handles a command in three stages: receiving the command, executing it, and returning the result. Because the data is in memory, execution is usually extremely fast (big keys excepted), so most of the time goes to the network, that is, to receiving commands and sending back results. When a client sends many commands one by one, each one pays a full round trip, and if one command takes a long time to process, the others can only wait, which hurts overall performance.

To address this, Redis supports pipelining. The client puts multiple commands into a pipeline and sends them to the server in one go; after the server has processed them, all the results are returned to the client together. This reduces the number of round trips between client and server, which lowers total latency and improves performance.
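The saving from a pipeline is visible on the wire. Several commands are encoded back to back in the Redis protocol (RESP) and sent in a single write, so the client makes one round trip instead of one per command. The sketch below only builds that byte buffer and does not open a connection.

```python
def encode_command(*args):
    """Encode one command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode("utf-8")
        # Bulk string: $<byte length>\r\n<bytes>\r\n
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


def encode_pipeline(commands):
    """Concatenate several encoded commands into one buffer, i.e. one network write."""
    return b"".join(encode_command(*cmd) for cmd in commands)


commands = [("SET", "a", "1"), ("INCR", "a"), ("GET", "a")]
payload = encode_pipeline(commands)
print(payload)
```

With redis-py, the equivalent is `pipe = r.pipeline(transaction=False)`, then queueing the commands on `pipe`, then `pipe.execute()`, which returns the replies as a list. Passing `transaction=False` skips the MULTI/EXEC wrapper that redis-py adds by default.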

Additional:

Comparison between Redis pipelines and native batch commands (such as MSET and MGET):

Native batch commands are atomic; a pipeline is not

A native batch command is a single command applied to many keys, whereas a pipeline can carry many different commands

Native batch commands are implemented entirely on the server, whereas pipelining requires support from both the server and the client

Notes on using Redis pipelines:

Do not put too many commands into a single pipeline, because the server has to queue all of the replies in memory until they are sent

The commands in a pipeline are executed in the order they were sent, but commands from other clients may be interleaved between them, so the sequence is not isolated

If one command in the pipeline fails, the remaining commands are still executed, so atomicity is not guaranteed
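Following the first note, large workloads are usually split into several moderately sized pipelines. This is a minimal sketch in which `send_batch` is a hypothetical callable that sends one pipeline and returns its replies. The batch size is an arbitrary example value; tune it for your workload.

```python
from itertools import islice


def batches(commands, size):
    """Yield successive lists of at most `size` commands."""
    if size <= 0:
        raise ValueError("size must be positive")
    it = iter(commands)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def run_in_batches(commands, size, send_batch):
    """Send `commands` as several pipelines of at most `size` commands each.

    send_batch(chunk) is assumed to send one pipeline and return its replies.
    With redis-py it could look like this (assuming `r` is a redis.Redis client):
        def send_batch(chunk):
            pipe = r.pipeline(transaction=False)
            for cmd in chunk:
                pipe.execute_command(*cmd)
            return pipe.execute()
    """
    replies = []
    for chunk in batches(commands, size):
        replies.extend(send_batch(chunk))
    return replies


# Usage with a fake sender that only records the size of each round trip.
round_trips = []


def fake_send(chunk):
    round_trips.append(len(chunk))
    return ["OK"] * len(chunk)


cmds = [("SET", f"key:{i}", str(i)) for i in range(2500)]
replies = run_in_batches(cmds, size=1000, send_batch=fake_send)
print(round_trips)  # [1000, 1000, 500]
```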

2.5 Cache penetration

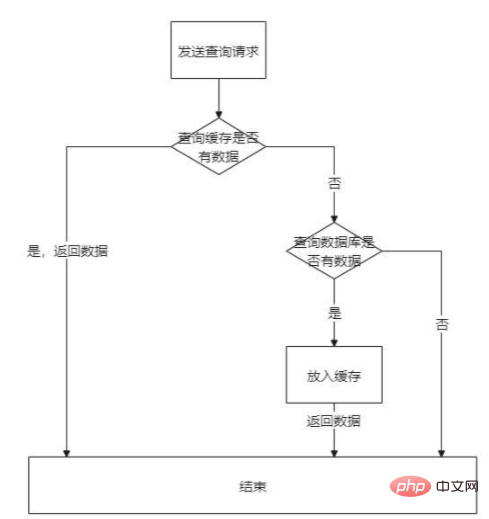

When a project uses a cache, the usual design is as follows:

A request queries the cache first. If the data is not in the cache, it queries the database, stores the result in the cache, and finally returns the data to the client. If the requested data does not exist at all, nothing is ever cached for it, so every such request goes through to the database. This is cache penetration.
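The read path just described, often called cache-aside, can be sketched with plain dictionaries standing in for Redis and the database (both are assumptions for illustration). Counting database queries shows the penetration problem. A key that exists reaches the database only once, while a missing key reaches it every time.

```python
class CacheAside:
    """Cache-aside read path: check the cache, fall back to the database, fill the cache."""

    def __init__(self, db):
        self.db = db          # hypothetical data source: dict of key -> value
        self.cache = {}       # stands in for Redis
        self.db_queries = 0   # how many requests reached the database

    def get(self, key):
        if key in self.cache:
            return self.cache[key]
        self.db_queries += 1
        value = self.db.get(key)
        if value is not None:
            self.cache[key] = value  # only data that exists gets cached
        return value


svc = CacheAside(db={"user:1": "laowang"})
for _ in range(3):
    svc.get("user:1")    # the first call hits the database, later calls hit the cache
for _ in range(3):
    svc.get("user:999")  # missing key: every call hits the database
print(svc.db_queries)    # 4
```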

Cache penetration is a serious security risk. If someone uses a tool to send a large number of requests for data that does not exist, all of those requests flow into the database. The extra load could bring the database down, which would disrupt the entire application and could paralyze the system.

To solve this type of problem, the focus is on reducing access to the database. There are usually the following solutions:

Cache preheating: after the system goes live, load the relevant data into the cache in advance.

Cache a default value: if a request reaches the database and no data is found, store a default (empty) value under that cache key. Because this value carries no real data, give it an expiration time to limit memory usage.

Bloom filter: hash every key that may exist into a sufficiently large bitmap. A key that exists is never rejected. A request for data that does not exist is rejected with high probability, although an occasional false positive still gets through.
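The last two measures can be combined. Below is a minimal Bloom filter that uses double hashing over one SHA-256 digest; the sizes are illustrative, not tuned. It is paired with a hypothetical `guarded_get` that rejects keys the filter has never seen and caches a short-lived placeholder when the database has no data. Plain dicts stand in for Redis and the database.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: each item sets k bits in an m-bit array."""

    def __init__(self, m_bits, k_hashes):
        self.m = m_bits
        self.k = k_hashes
        self.bits = bytearray((m_bits + 7) // 8)

    def _positions(self, item):
        # Double hashing: derive k bit positions from two 64-bit halves of one digest.
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, item):
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, item):
        # False means "definitely never added"; True means "probably added".
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


NULL = object()  # placeholder cached for keys the database does not have


def guarded_get(key, bloom, cache, db, now, null_ttl=60):
    """Hypothetical read path: Bloom filter check, then cache, then database."""
    if not bloom.might_contain(key):
        return None                            # rejected without touching cache or DB
    entry = cache.get(key)
    if entry is not None:
        value, expires_at = entry
        if expires_at is None or expires_at > now():
            return None if value is NULL else value
    value = db.get(key)
    if value is None:
        cache[key] = (NULL, now() + null_ttl)  # short-lived "no data" marker
    else:
        cache[key] = (value, None)             # real data (no TTL in this sketch)
    return value


db = {"user:1": "laowang"}
bloom = BloomFilter(m_bits=8192, k_hashes=4)
for key in db:
    bloom.add(key)
cache = {}
clock = lambda: 0.0
print(guarded_get("user:1", bloom, cache, db, clock))  # laowang
```

In production, you would more likely use an existing library or the RedisBloom module's BF.ADD and BF.EXISTS commands than a hand-rolled filter.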

2.6 Cache avalanche

Cache avalanche: simply put, a large number of requests cannot find their data in the cache and all go to the database instead. This raises the database's load and degrades its performance, and the database may go down under the pressure, affecting the normal operation of the whole system or even paralyzing it.

For example, suppose a system consists of three subsystems, A, B, and C, with the request chain System A -> System B -> System C -> Database. If the data is not in the cache and the database goes down, System C cannot query the data or respond and is stuck retrying and waiting, which in turn affects Systems B and A. A fault in one node causes a chain of problems, just as a gust of wind across a snow-capped mountain can set off an avalanche.

At this point, some readers may wonder: what is the difference between cache penetration and cache avalanche?

Cache penetration is about requests for data that is not in the cache passing straight through it to the database.

Cache avalanche is about request volume: because the data cannot be found in the cache, a flood of requests hits the database, overloading it and triggering a chain of failures.

To solve the cache avalanche problem, we first need to know its causes:

Redis itself fails (for example, the Redis server goes down)

A large amount of hot data expires at the same time

For cause 1, deploy Redis with master-replica replication or as a cluster, so that requests can still be served from the cache and access to the database stays low

For cause 2, stagger expiration times when setting them (for example, add or subtract a random value from a base time) so that cache entries do not all expire at once. We can also add a local cache (such as Ehcache) and apply rate limiting or service degradation to the interface, which likewise reduces the load on the database.
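Staggering expiration times amounts to adding random jitter to a base TTL. This is a minimal sketch; the one-hour base and five-minute jitter are example values.

```python
import random


def jittered_ttl(base_seconds, jitter_seconds, rng=random):
    """Return base_seconds plus or minus a random offset of at most jitter_seconds."""
    if jitter_seconds < 0 or jitter_seconds >= base_seconds:
        raise ValueError("need 0 <= jitter_seconds < base_seconds")
    # randint is inclusive on both ends, so the result lies in [base - jitter, base + jitter].
    return base_seconds + rng.randint(-jitter_seconds, jitter_seconds)


rng = random.Random(42)  # seeded only so the example is repeatable
ttls = [jittered_ttl(3600, 300, rng) for _ in range(5)]
print(ttls)
```

The result would be passed as the expiry when writing, for example `SET key value EX <ttl>` (in redis-py, `r.set(key, value, ex=ttl)`).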

