Nginx is known for its high performance, stability, rich feature set, simple configuration, and low resource consumption. So why is nginx so fast? Let's analyze it from the underlying principles.

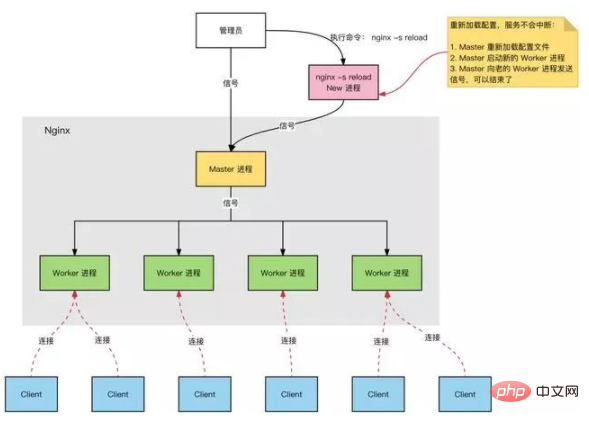

Nginx process model

#Nginx server, during normal operation:

Multiple processes: one Master process and multiple Worker processes.

Master process: manages the Worker processes.

External interface: receives external operations (signals).

Internal forwarding: manages the Workers through signals, according to the external operation received.

Monitoring: monitors the running state of the Worker processes; if a Worker terminates abnormally, the Master automatically restarts it.

Worker process: all Worker processes are equal.

Actual processing: network requests are handled by the Worker processes.

Number of Worker processes: configured in nginx.conf, generally set to the number of CPU cores to make full use of CPU resources. Setting it higher makes processes compete for the CPU and increases context-switching overhead.
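The Master's monitoring role can be sketched in a few lines of Python. This is only an illustration of the idea, not how Nginx itself is implemented: the names spawn_worker, supervise, and crash are hypothetical, the max_restarts cap exists only so the demo terminates, and the sketch assumes a Unix-like system where os.fork is available.

```python
import os
import signal


def spawn_worker(worker_main):
    """Fork one child that runs worker_main() and exits; return the child's pid."""
    pid = os.fork()
    if pid == 0:
        # Child (the "Worker"): exit 0 on success, 1 if worker_main raised.
        code = 1
        try:
            worker_main()
            code = 0
        finally:
            os._exit(code)
    return pid


def supervise(worker_main, num_workers, max_restarts):
    """Hypothetical Master loop: start workers, restart those that die abnormally.

    Returns the number of restarts performed. max_restarts is a demo-only cap.
    """
    workers = {spawn_worker(worker_main) for _ in range(num_workers)}
    restarts = 0
    while workers:
        pid, status = os.wait()          # block until some child exits
        if pid not in workers:
            continue                     # not one of our workers
        workers.discard(pid)
        abnormal = os.WIFSIGNALED(status) or (
            os.WIFEXITED(status) and os.WEXITSTATUS(status) != 0
        )
        if abnormal and restarts < max_restarts:
            workers.add(spawn_worker(worker_main))
            restarts += 1
    return restarts


def crash():
    """Worker body that kills itself, standing in for an abnormal termination."""
    os.kill(os.getpid(), signal.SIGKILL)
```

With a worker body that returns normally, supervise performs no restarts; with crash, every worker dies by signal and is replaced until the cap is reached. A worker count matching the core count could be obtained with os.cpu_count().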

Thinking:

When a request reaches Nginx, is the Master process responsible for handling and forwarding it?

How is the Worker process that handles a request selected?

Does the result of processing a request still have to pass through the Master process?

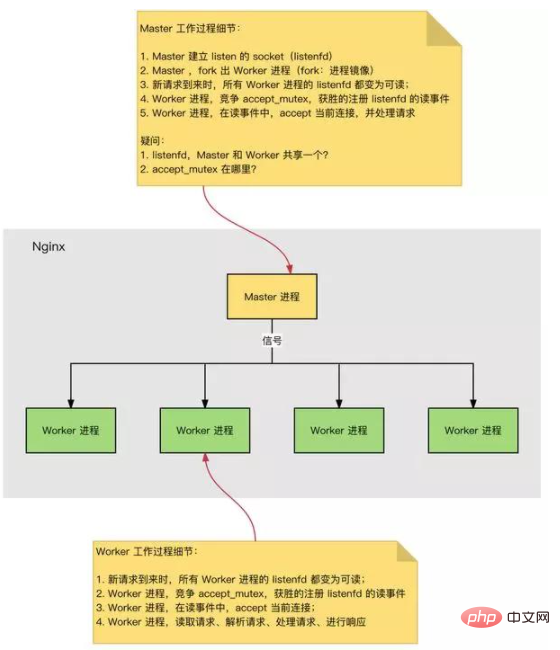

#HTTP connection establishment and request processing proceed as follows:

1. When Nginx starts, the Master process loads the configuration file.
2. The Master process initializes the listening Socket.
3. The Master process forks multiple Worker processes.
4. The Worker processes compete for new connections.
5. The winning Worker obtains the Socket connection, established through the TCP three-way handshake, and processes the request.
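The startup sequence above can be imitated with a small Python sketch. It assumes a Unix-like system with os.fork; run_demo and its parameters are hypothetical names, and for convenience the parent process also plays the role of the clients. The point it illustrates is that the listening socket is created once, inherited by every forked worker, and that only workers ever call accept().

```python
import os
import signal
import socket


def run_demo(num_workers=2, num_clients=4):
    """Return (worker_pids, answered): the worker pid that served each client."""
    # "Master": create the listening socket before forking.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))      # port 0: the OS picks a free port
    listener.listen(128)
    port = listener.getsockname()[1]

    worker_pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # "Worker": inherits the listening socket and competes in accept().
            try:
                while True:
                    conn, _addr = listener.accept()
                    with conn:
                        conn.sendall(str(os.getpid()).encode())
            finally:
                os._exit(0)
        worker_pids.append(pid)

    # Demo only: the parent acts as the clients and records who answered.
    answered = []
    for _ in range(num_clients):
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            data = b""
            while True:
                chunk = client.recv(64)
                if not chunk:
                    break
                data += chunk
            answered.append(int(data))

    # Demo-only teardown: stop the workers and reap them.
    for pid in worker_pids:
        os.kill(pid, signal.SIGKILL)
        os.waitpid(pid, 0)
    listener.close()
    return worker_pids, answered
```

Every pid in answered belongs to a worker, never to the parent, which matches the description: the process that wins the connection handles the request and replies directly.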

Nginx High Performance, High Concurrency

Why does Nginx have high performance and support high concurrency?

Nginx uses a multi-process, asynchronous, non-blocking model (IO multiplexing with epoll). The full lifecycle of a request is: establish the connection → read the request → parse the request → process the request → send the response. At the lowest level, this lifecycle corresponds to read and write events on a Socket.

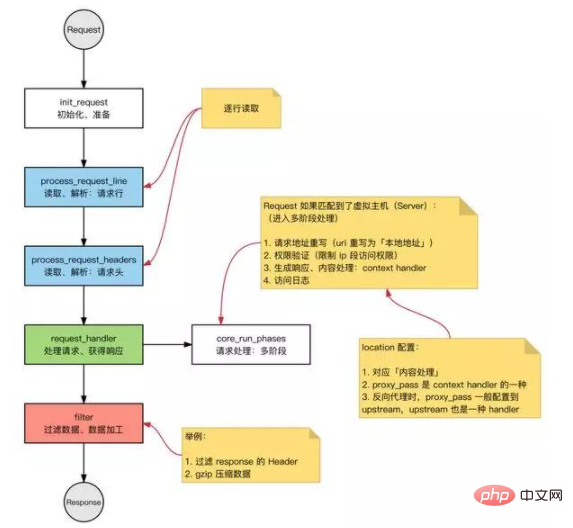

Nginx event processing model

Request: HTTP request in Nginx.

Basic HTTP Web Server working mode:

1. Receive the request: read the request line and request headers line by line; if the headers indicate that there is a request body, read the body.
2. Process the request.
3. Return the response: based on the processing result, generate the corresponding HTTP response (response line, response headers, response body).
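As a rough illustration of this receive, process, respond cycle, here is a minimal Python sketch. It is not Nginx's parser: read_request, handle, and SAMPLE are hypothetical names, the body length is taken only from Content-Length (chunked encoding is ignored), and the "processing" step simply describes the request.

```python
import io


def read_request(stream):
    """Read one HTTP/1.x request from a binary file-like object."""
    # Request line: method, URI, HTTP version.
    request_line = stream.readline().decode("latin-1").rstrip("\r\n")
    method, uri, version = request_line.split(" ", 2)

    # Headers: one per line, terminated by an empty line.
    headers = {}
    while True:
        line = stream.readline().decode("latin-1").rstrip("\r\n")
        if not line:
            break
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()

    # Body: read it only if the headers say there is one.
    length = int(headers.get("content-length", "0"))
    body = stream.read(length) if length else b""
    return method, uri, version, headers, body


def handle(stream):
    """Receive a request, process it, and return the raw response bytes."""
    method, uri, _version, _headers, body = read_request(stream)
    payload = f"{method} {uri} ({len(body)} body bytes)\n".encode()
    head = (
        "HTTP/1.1 200 OK\r\n"
        f"Content-Length: {len(payload)}\r\n"
        "Content-Type: text/plain\r\n"
        "\r\n"
    ).encode("latin-1")
    return head + payload


SAMPLE = (
    b"POST /submit HTTP/1.1\r\n"
    b"Host: example.com\r\n"
    b"Content-Length: 5\r\n"
    b"\r\n"
    b"hello"
)

if __name__ == "__main__":
    print(handle(io.BytesIO(SAMPLE)).decode())
```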

Nginx follows the same pattern, and the overall flow is the same:

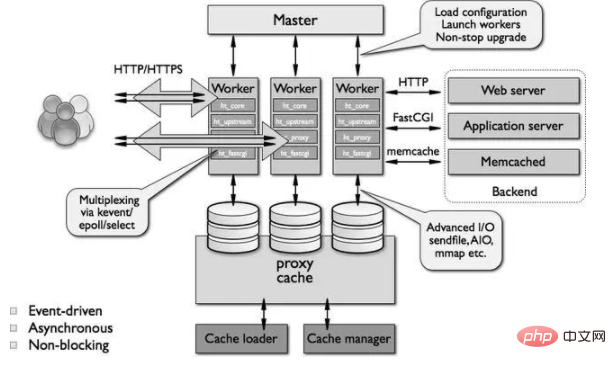

Modular architecture

Nginx uses a multi-process model in which the Worker processes handle requests. The number of connections (file descriptors, fd) a single process can hold has an upper limit (nofile), reported by ulimit -n. Two Nginx settings follow from this: worker_connections sets the maximum number of connections for a single Worker process and cannot exceed nofile, and worker_processes sets the number of Worker processes.

Therefore, the maximum number of connections for Nginx is: number of Worker processes x maximum number of connections for a single Worker process.

That is the maximum when Nginx is used as a general server. When Nginx serves as a reverse proxy, the maximum number of clients it can serve is: (number of Worker processes x maximum number of connections for a single Worker process) / 2, because each proxied request holds one connection to the Client and one connection to the back-end Web Server, occupying 2 connections.
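The two formulas can be written down directly. The function names below are hypothetical, and the values 4 and 1024 are example settings chosen only for the arithmetic, not recommendations.

```python
def max_connections(worker_processes, worker_connections):
    """Upper bound when Nginx serves clients directly."""
    return worker_processes * worker_connections


def max_proxied_clients(worker_processes, worker_connections):
    """Upper bound as a reverse proxy: each client also needs a back-end connection."""
    return max_connections(worker_processes, worker_connections) // 2


if __name__ == "__main__":
    # Example settings: 4 Worker processes, 1024 connections each.
    print(max_connections(4, 1024))      # 4096
    print(max_proxied_clients(4, 1024))  # 2048
```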

Thinking:

Does every open Socket occupy one fd? Why is there a limit on the number of fds a process can open?
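One way to explore the first question is to look at the descriptors from inside a process. The sketch below assumes a Unix-like system (Python's resource module is not available elsewhere); nofile_limit and open_socket_fds are hypothetical helper names. It shows that each socket reports its own fd number and reads the per-process limit that ulimit -n displays.

```python
import resource
import socket


def nofile_limit():
    """Return (soft, hard) for RLIMIT_NOFILE; `ulimit -n` shows the soft value."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def open_socket_fds(count):
    """Open `count` sockets, return their fd numbers, then close them again."""
    socks = [socket.socket(socket.AF_INET, socket.SOCK_STREAM) for _ in range(count)]
    try:
        return [s.fileno() for s in socks]
    finally:
        for s in socks:
            s.close()


if __name__ == "__main__":
    print("nofile (soft, hard):", nofile_limit())
    print("fds of three sockets:", open_socket_fds(3))
```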

HTTP request and response

HTTP request:

Request line (method, URI, HTTP version); request headers; request body

HTTP response:

Response line (HTTP version, status code); response headers; response body

IO model

When handling multiple requests, you can use either IO multiplexing or blocking IO with multiple threads:

IO multiplexing: one thread tracks the state of multiple Sockets and reads or writes whichever one is ready.

Blocking IO with multiple threads: a new service thread is created for each request.

What are the applicable scenarios for IO multiplexing and multi-threading?

IO multiplexing: offers no advantage in the processing speed of a single connection. Its strength is high concurrency: a single thread handles a large number of concurrent requests, which reduces context-switching overhead, avoids thread-synchronization issues, and lets it handle relatively more requests. It consumes fewer system resources (no thread scheduling overhead) and suits long-lived connections (in multi-thread mode, long-lived connections easily lead to too many threads and frequent scheduling).

Blocking IO with multiple threads: simple to implement and does not depend on a multiplexing system call. Each thread costs time and memory, and thread scheduling overhead grows rapidly as the number of threads increases.
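To make the IO multiplexing side concrete, here is a minimal single-threaded echo loop built on Python's selectors module, which picks epoll on Linux. It is a sketch under simplifying assumptions: run_echo_loop is a hypothetical name, the two stop conditions (expected_messages and idle_timeout) exist only so the demo ends, and payloads are assumed small enough that one sendall on a non-blocking socket succeeds.

```python
import selectors
import socket


def run_echo_loop(listener, expected_messages, idle_timeout=1.0):
    """Accept connections and echo data from a single thread.

    Returns the number of messages echoed. Stops after expected_messages
    echoes or when nothing happens for idle_timeout seconds (demo only).
    """
    sel = selectors.DefaultSelector()
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data="listener")
    echoed = 0
    try:
        while echoed < expected_messages:
            events = sel.select(timeout=idle_timeout)
            if not events:
                break                      # idle: end the demo
            for key, _mask in events:
                if key.data == "listener":
                    # New connection is ready to be accepted.
                    conn, _addr = listener.accept()
                    conn.setblocking(False)
                    sel.register(conn, selectors.EVENT_READ, data="client")
                else:
                    # An existing connection has data (or was closed).
                    conn = key.fileobj
                    chunk = conn.recv(4096)
                    if chunk:
                        conn.sendall(chunk)
                        echoed += 1
                    else:
                        sel.unregister(conn)
                        conn.close()
    finally:
        # Close whatever client connections are still registered.
        for key in list(sel.get_map().values()):
            if key.data == "client":
                sel.unregister(key.fileobj)
                key.fileobj.close()
        sel.close()
    return echoed
```

Several clients can connect and send before the loop even starts; the one thread then serves them in whatever order their sockets become ready, with no thread per connection.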

The comparison between select/poll and epoll is as follows:

select/poll system calls:

    // select system call
    int select(int maxfdp, fd_set *readfds, fd_set *writefds, fd_set *errorfds, struct timeval *timeout);
    // poll system call
    int poll(struct pollfd fds[], nfds_t nfds, int timeout);

select:

Queries the fd_set for ready fds; a timeout can be set, and the call returns when an fd (file descriptor) becomes ready or the timeout expires. fd_set is a bit set whose size is a compile-time constant (FD_SETSIZE), 1024 by default. Characteristics: limited number of connections (the number of fds an fd_set can represent is too small); linear scan (to find out which fds are ready, the whole fd_set must be traversed); data copying (the connection readiness information is copied between user space and kernel space).

poll:

Removes the connection limit: poll replaces select's fd_set with a pollfd array, which solves the problem of too few fds. Linear scan remains: the pollfd array is still traversed to find the ready fds. Data copying: the connection readiness information is still copied between user space and kernel space.

epoll, event-driven:

Event mechanism: avoids the linear scan. A listening event is registered for each fd, and when an fd becomes ready it is added to the ready list. Number of fds: no limit imposed by epoll itself (only the OS-level limit on how many fds a single process can open).
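The difference in calling style shows up even through Python's thin wrappers around these system calls. In the sketch below (helper names are hypothetical, and select.epoll exists only on Linux), select receives the whole list of sockets on every call, while epoll has each fd registered once and then reports only the ready ones.

```python
import select
import socket


def ready_with_select(socks, timeout=0.1):
    """select: the full list of sockets is handed over on every call."""
    readable, _, _ = select.select(socks, [], [], timeout)
    return {s.fileno() for s in readable}


def make_epoll(socks):
    """epoll: register each fd once, up front (Linux only)."""
    ep = select.epoll()
    for s in socks:
        ep.register(s.fileno(), select.EPOLLIN)
    return ep


def ready_with_epoll(ep, timeout=0.1):
    """epoll: poll() returns only the fds that are ready."""
    return {fd for fd, _events in ep.poll(timeout)}


if __name__ == "__main__":
    pairs = [socket.socketpair() for _ in range(3)]
    watched = [a for a, _b in pairs]
    pairs[1][1].sendall(b"x")            # make exactly one watched socket readable
    ep = make_epoll(watched)
    print(ready_with_select(watched), ready_with_epoll(ep))
    ep.close()
```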

select, poll, epoll:

All three are I/O multiplexing mechanisms: a single mechanism monitors multiple file descriptors, and once a descriptor is ready (usually ready to read or ready to write), the program is notified so that it can perform the corresponding read or write. However, select, poll, and epoll are all essentially synchronous I/O: the user process itself performs the read or write (copying data between kernel space and user space) and is blocked while doing so. With asynchronous I/O, the user process does not perform the read or write itself; the asynchronous I/O facility takes care of copying the data from kernel space to user space.

Concurrency processing capability of Nginx

About Nginx's concurrent processing capability: after tuning, the peak number of concurrent connections can generally be sustained at around 10,000 to 30,000. (Depending on the amount of memory and the number of CPU cores, there may be room for further optimization.)
