This article summarizes 21 key points to know when using Redis.

1. Redis usage guidelines

1.1. Key guidelines

When designing Redis keys, pay attention to the following points:

- Prefix the key with the business name and separate the parts with colons to prevent key collisions and accidental overwrites. For example, live:rank:1

- Keep the key as short as possible while its meaning stays clear, preferably under 30 characters.

- Keys must not contain special characters such as spaces, line breaks, single or double quotation marks, or other escape characters.

- Set a TTL on keys wherever possible so that unused keys are cleared or evicted in time.
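
The key rules above can be enforced in one small helper. The following is a minimal sketch, not a standard API: the name build_key, the allowed character set, and treating 30 characters as a hard limit are all assumptions made for illustration.

```python
import re

MAX_KEY_LENGTH = 30  # guideline above: keep keys under 30 characters
# Assumption: each part may contain only letters, digits, "_", "." and "-".
_SAFE_PART = re.compile(r"[A-Za-z0-9_.\-]+")


def build_key(business: str, *parts: object) -> str:
    """Join a business prefix and sub-parts with colons, rejecting unsafe parts."""
    pieces = [business, *(str(p) for p in parts)]
    for piece in pieces:
        # fullmatch rejects spaces, quotes, line breaks and empty parts
        if not _SAFE_PART.fullmatch(piece):
            raise ValueError(f"unsafe key part: {piece!r}")
    key = ":".join(pieces)
    if len(key) >= MAX_KEY_LENGTH:
        raise ValueError(f"key is not under {MAX_KEY_LENGTH} characters: {key!r}")
    return key
```

Calling build_key("live", "rank", 1) yields live:rank:1, while a part containing a space or a quotation mark raises ValueError before the key ever reaches Redis.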

1.2. Value guidelines

Redis values should not be set arbitrarily.

First, storing a large number of big keys causes problems such as slow queries and rapid memory growth.

- For the String type, keep a single value within 10 KB.

- For the hash, list, set, and zset types, the number of elements should generally not exceed 5000.

Second, choose the appropriate data type. Many developers use only the String type, mainly with set and get. Redis actually provides a rich set of data structures, and some business scenarios are better served by hash, zset, and other data structures.

Counterexample:

set user:666:name jay
set user:666:age 18

Positive example:

hmset user:666 name jay age 18

1.3. Set expiration times on keys, and spread out the expiration times of different businesses' keys

If a large number of keys expire at the same moment, Redis may stall while expiring them, and a cache avalanche may even occur. The expiration times of keys belonging to different businesses should therefore be spread out. Within a single business, you can also add a random value to the expiration time to spread it out.
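
Adding a random value to the expiration time can be sketched as follows. The function name ttl_with_jitter and its parameters are hypothetical; the returned number of seconds would be passed to whichever expire call your client provides.

```python
import random


def ttl_with_jitter(base_ttl_seconds: int, max_jitter_seconds: int, rng=random) -> int:
    """Return the base TTL plus a random offset in [0, max_jitter_seconds]."""
    if base_ttl_seconds <= 0 or max_jitter_seconds < 0:
        raise ValueError("base TTL must be positive and jitter non-negative")
    return base_ttl_seconds + rng.randint(0, max_jitter_seconds)
```

With a base of 3600 seconds and up to 300 seconds of jitter, keys written in the same instant expire somewhere within a five-minute window instead of all at once.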

1.4. Use batch operations to improve efficiency

Anyone who writes SQL regularly knows that batch operations are more efficient: updating 50 rows in one statement is faster than looping 50 times and updating one row each time. The same principle applies to Redis commands.

A Redis client executes a command in four steps: 1. send the command -> 2. the command is queued -> 3. the command is executed -> 4. the result is returned. Steps 1 and 4 together are called the RTT (round-trip time). Redis provides batch commands such as mget and mset that effectively save RTTs. However, most commands have no batch version; hgetall, for example, has no mhgetall counterpart. Pipeline solves this problem.

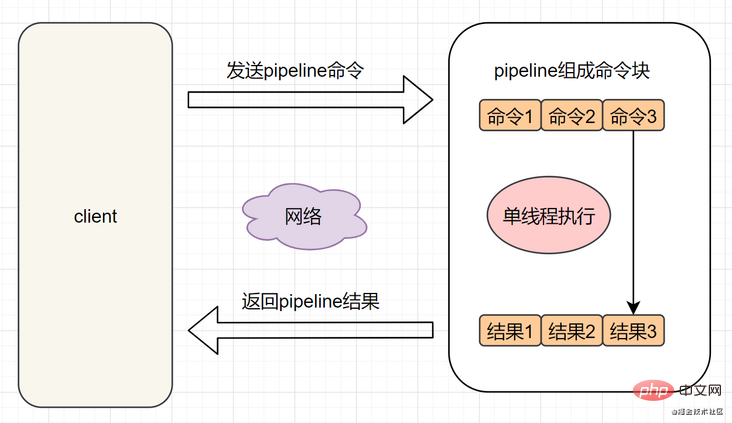

What is Pipeline? It assembles a group of Redis commands, sends them to Redis in a single RTT, and returns their results to the client in order.

Without Pipeline, executing n commands takes n RTTs.

With Pipeline, executing n commands takes only 1 RTT.
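
The difference can be put into numbers with a rough cost model. This is a simplified sketch that assumes a constant RTT and a constant per-command execution time, and ignores queuing; the function name and parameters are hypothetical.

```python
def total_time_ms(n_commands: int, rtt_ms: float, exec_ms: float, pipelined: bool) -> float:
    """Estimate the total time for n commands under a simple additive model."""
    # One round trip per command without Pipeline, one round trip in total with it.
    round_trips = 1 if pipelined else n_commands
    return round_trips * rtt_ms + n_commands * exec_ms
```

Under this model, 50 commands with a 1 ms RTT and 0.01 ms of execution each cost about 50.5 ms one by one, but about 1.5 ms when pipelined: the network round trips dominate, not the command execution.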

2. Commands with pitfalls in Redis

2.1. Use O(n) commands such as hgetall, smembers, and lrange with caution

Redis executes commands in a single thread, and commands such as hgetall and smembers have O(n) time complexity. As n grows, they drive up Redis CPU usage and block the execution of other commands.

Commands such as hgetall, smembers, and lrange are not forbidden outright; assess the data volume and determine the value of n before deciding. For hgetall, for example, if the hash has many elements, prefer hscan.

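2.2 Use the Redis monitor command with caution

The Redis MONITOR command prints, in real time, the commands received by the Redis server. If you want to know which commands clients are sending to the server, you can check with MONITOR. It is meant for debugging only, though, so avoid using it in production, because it can cause Redis memory usage to keep climbing.

MONITOR works like this: it outputs every command executed on the Redis server. A Redis server usually handles a very high QPS, so once MONITOR is running, a large backlog builds up in the output buffer of the MONITOR client, which takes up a large amount of Redis memory.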

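2.3 Do not use the keys command in production

The Redis KEYS command finds all keys matching a given pattern. To see how many keys of a certain kind exist, many developers reach for the keys command, like this:

keys key_prefix*

However, keys matches by traversing the keyspace, so its complexity is O(n): the more data in the database, the slower it gets. Redis is single-threaded, so with a large data set the keys command blocks the Redis thread, and online services stall until the command finishes. In general, therefore, do not use keys in production. The official documentation also warns: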

Warning: consider KEYS as a command that should only be used in production environments with extreme care. It may ruin performance when it is executed against large databases. This command is intended for debugging and special operations, such as changing your keyspace layout. Don't use KEYS in your regular application code. If you're looking for a way to find keys in a subset of your keyspace, consider using sets.

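Instead, you can use the scan command, which provides the same pattern matching as keys. Its overall complexity is also O(n), but it proceeds step by step with a cursor and does not block the Redis thread. It may return some keys more than once, though, so the client needs to deduplicate.

scan is an incremental iteration command, and incremental iteration has its own drawback. For example, SMEMBERS returns all the elements a set currently contains, but with incremental iteration commands such as SCAN, the key may be modified while the iteration is in progress, so they can offer only limited guarantees about the elements returned.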
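
The cursor loop and the client-side deduplication can be sketched as follows. To stay independent of any client library, scan_all takes a hypothetical scan_step callable that maps a cursor to a (next_cursor, keys) pair; this mirrors the shape of a SCAN reply but is an assumption of this example, not a specific client API.

```python
from typing import Callable, Iterable, List, Set, Tuple

# A scan step takes a cursor and returns (next_cursor, batch_of_keys).
ScanStep = Callable[[int], Tuple[int, Iterable[str]]]


def scan_all(scan_step: ScanStep) -> List[str]:
    """Drive a cursor-based scan to completion and drop duplicate keys."""
    seen: Set[str] = set()
    ordered: List[str] = []
    cursor = 0  # iteration starts at cursor 0
    while True:
        cursor, batch = scan_step(cursor)
        for key in batch:
            if key not in seen:  # SCAN may return the same key more than once
                seen.add(key)
                ordered.append(key)
        if cursor == 0:  # a returned cursor of 0 marks the end of the iteration
            return ordered
```

With a real client, scan_step would wrap that client's scan call together with the match pattern and count hint you want to use. Each step is a short command, so other clients' commands can run between steps.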

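2.4 Do not use flushall or flushdb

- The FLUSHALL command clears all data on the Redis server (it deletes every key in every database).

- The FLUSHDB command clears all keys in the current database.

Both commands are atomic and cannot be interrupted. Once started, they do not fail partway.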

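2.5 Be careful with the del command

Which command do you usually use to delete a key? Plain del? For deleting a single key, del is of course fine. But have you considered what the time complexity of del is? Let's look at the cases:

- Deleting a String key is O(1), so you can use del directly.

- Deleting a List/Hash/Set/ZSet key is O(n), where n is the number of elements.

So when you delete a List/Hash/Set/ZSet key, the more elements it has, the slower the deletion. Take particular care when n is large, because the deletion blocks the main thread. How should such keys be deleted, if not with del?

- For a List, run lpop or rpop repeatedly until all elements are removed.

- For a Hash/Set/ZSet, first query with hscan/sscan/zscan, then delete the elements one by one with hdel/srem/zrem.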
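
For a hash, the scan-then-delete approach can be sketched like this. FakeHashClient is a hypothetical in-memory stand-in so the example runs without a server, and its cursor handling is deliberately simplified. delete_big_hash assumes only that the client offers hscan, hdel, and delete methods of this shape.

```python
class FakeHashClient:
    """In-memory stand-in for a Redis client (hypothetical, for illustration only)."""

    def __init__(self):
        self.hashes = {}

    def hscan(self, name, cursor=0, count=100):
        fields = list(self.hashes.get(name, {}).items())
        batch = dict(fields[:count])
        # Simplified cursor: 0 means nothing is left after this batch.
        next_cursor = 0 if len(fields) <= count else 1
        return next_cursor, batch

    def hdel(self, name, *fields):
        stored = self.hashes.get(name, {})
        removed = 0
        for field in fields:
            if field in stored:
                del stored[field]
                removed += 1
        return removed

    def delete(self, name):
        return 1 if self.hashes.pop(name, None) is not None else 0


def delete_big_hash(client, name, batch_size=100):
    """Remove a large hash in small batches, then delete the now small key."""
    total = 0
    cursor = 0
    while True:
        cursor, batch = client.hscan(name, cursor=cursor, count=batch_size)
        if batch:
            total += client.hdel(name, *batch.keys())
        if cursor == 0:  # iteration finished
            break
    client.delete(name)
    return total
```

Each hscan/hdel pair touches at most batch_size fields, so no single command holds the main thread for long.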

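2.6 Avoid overly complex commands such as SORT and SINTER

Commands with high complexity consume more CPU and block the main thread. Avoid aggregation commands such as SORT, SINTER, SINTERSTORE, ZUNIONSTORE, and ZINTERSTORE; it is generally better to perform the aggregation on the client side.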

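3. Practical pitfalls to avoid in projects

3.1 Points to note when using distributed locks

A distributed lock is a lock implementation that controls how different processes in a distributed system access a shared resource. Business scenarios such as flash-sale ordering and grabbing red envelopes all need distributed locks. Redis is often used for distributed locks, and the main points to note are as follows:

3.1.1 Writing SETNX and EXPIRE as two separate commands (a typical incorrect implementation)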

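if (jedis.setnx(key_resource_id, lock_value) == 1) { // acquire the lock
    jedis.expire(key_resource_id, 100); // set the expiration time
    try {
        // do something (business request)
    } catch (Exception e) {
        // handle the exception
    } finally {
        jedis.del(key_resource_id); // release the lock
    }
}

If the process crashes or is restarted for maintenance right after setnx succeeds but before expire runs, the lock never expires and no other thread can ever acquire it. A distributed lock should therefore not be implemented this way.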

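3.1.2 SETNX with the expiration time as the value (some developers implement it this way; it has pitfalls)

long expires = System.currentTimeMillis() + expireTime; // current system time + configured expiration time
String expiresStr = String.valueOf(expires);

// If the lock does not exist yet, locking succeeds
if (jedis.setnx(key_resource_id, expiresStr) == 1) {
    return true;
}

// If the lock already exists, read its expiration time
String currentValueStr = jedis.get(key_resource_id);

// If the stored expiration time is earlier than the current system time, the lock has expired
if (currentValueStr != null && Long.parseLong(currentValueStr) < System.currentTimeMillis()) {
    // Assumed continuation (the source is truncated here): take over the
    // expired lock with getSet(), as the drawbacks below describe
    String oldValueStr = jedis.getSet(key_resource_id, expiresStr);
    if (oldValueStr != null && oldValueStr.equals(currentValueStr)) {
        // Only the client whose getSet() saw the old value takes the lock
        return true;
    }
}

// In all other cases, locking fails
return false;

Drawbacks of this approach:

- The expiration time is generated by the client itself, so in a distributed environment the clocks of all clients must be synchronized.

- The holder's unique identifier is not stored, so the lock may be released by another client.

- When the lock expires and several clients request it concurrently, they all execute jedis.getSet(). Only one client ends up acquiring the lock, but the expiration time of that client's lock may be overwritten by another client.

3.1.3 The extended SET command (SET EX PX NX) (note the problems that may remain)

// String-flag overload of set() as found in older Jedis versions; it returns "OK" on success
if ("OK".equals(jedis.set(key_resource_id, lock_value, "NX", "EX", 100))) { // acquire the lock, expiring after 100 s
    try {
        // do something (business logic)
    } catch (Exception e) {
        // handle the exception
    } finally {
        jedis.del(key_resource_id); // release the lock
    }
}

This approach can still have problems: the lock may expire and be released before the business logic has finished, and the lock may be deleted by another thread by mistake.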

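3.1.4 SET EX PX NX plus checking a unique random value before deleting (solves the mistaken-deletion problem, but the lock can still expire before the business logic finishes)

// String-flag overload of set() as found in older Jedis versions; it returns "OK" on success
if ("OK".equals(jedis.set(key_resource_id, uni_request_id, "NX", "EX", 100))) { // acquire the lock
    try {
        // do something (business logic)
    } catch (Exception e) {
        // handle the exception
    } finally {
        // Release the lock only if the current thread holds it
        if (uni_request_id.equals(jedis.get(key_resource_id))) {
            jedis.del(key_resource_id); // release the lock
        }
    }
}

Here, checking whether the current thread holds the lock and releasing the lock are not one atomic operation. By the time jedis.del() is called, the lock may no longer belong to the current client, in which case the call releases a lock held by someone else.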

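A Lua script is generally used instead. The script is as follows:

if redis.call('get', KEYS[1]) == ARGV[1] then
    return redis.call('del', KEYS[1])
else
    return 0
end;

3.1.5 Redisson framework + Redlock algorithm (solves the lock expiring before the business logic finishes, plus the single-node problem)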

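Redisson uses a watchdog to solve the problem of the lock expiring and being released before the business logic finishes. As long as the thread holding the lock is still running, the watchdog keeps extending the lock's expiration time.

The distributed locks above still have a single-node problem:

Suppose thread one acquires the lock on the Redis master node, but the lock key has not yet been replicated to the slave node. If the master fails at exactly that moment, a slave is promoted to master. Thread two can then acquire the lock for the same key even though thread one already holds it, so the lock is no longer safe.

The Redlock algorithm addresses the single-node problem. If you are interested, see my article "Seven Solutions! Exploring the Correct Way to Use Redis Distributed Locks".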

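3.2 Points to note about cache consistency

If you are interested, see my article "In a Concurrent Environment, Should You Operate on the Database or the Cache First?"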

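3.3 Size Redis capacity sensibly, and avoid frequent set overwrites that invalidate a previously set expiration time

All Redis data structure types can have an expiration time. Suppose a string already has an expiration time; setting the string again removes that expiration time.

The Redis setKey source code is as follows:

void setKey(redisDb *db, robj *key, robj *val) {
    if (lookupKeyWrite(db, key) == NULL) {
        dbAdd(db, key, val);
    } else {
        dbOverwrite(db, key, val);
    }
    incrRefCount(val);
    removeExpire(db, key); // remove the expiration time
    signalModifiedKey(db, key);
}

In real business development, size Redis capacity sensibly and avoid frequent set overwrites, which cause keys that had an expiration time to lose it. Beginners often make this mistake.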

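3.4 Cache penetration problem

First, consider a common way of using a cache: when a read request arrives, check the cache first. On a hit, return the cached value directly. On a miss, query the database, write the database value into the cache, and then return it.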
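
That read path can be sketched in a few lines. Here a plain dict stands in for Redis and load_from_db is a hypothetical callable standing in for the database query; both are assumptions made so the example is self-contained.

```python
from typing import Callable, Dict, Optional


def read_through(key: str, cache: Dict[str, str],
                 load_from_db: Callable[[str], Optional[str]]) -> Optional[str]:
    """Return the cached value, falling back to the database on a miss."""
    value = cache.get(key)
    if value is not None:  # cache hit: return directly
        return value
    value = load_from_db(key)  # cache miss: query the database
    if value is not None:
        cache[key] = value  # write the database value back to the cache
    return value
```

Note that a key found in neither the cache nor the database is never cached, so every request for it reaches load_from_db again.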

Cache penetration: a query for data that does not exist. Because the cache misses, the database is queried; because the database finds nothing, nothing is written to the cache. As a result, every request for this non-existent data goes to the database, which puts pressure on it.

In plain terms, when a read request asks for a value that is in neither the cache nor the database, every query for that value passes straight through to the database. This is cache penetration.

Cache penetration is generally caused by the following situations:

How to avoid cache penetration? Generally there are three methods.

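Bloom filter principle: a Bloom filter consists of a bit array whose bits are initially 0 and N hash functions. To add a key, apply the N hash functions to it to obtain N values, map each value to a position in the bit array, and set those positions to 1. To check a key, compute the same N positions: if they are all 1, the Bloom filter judges that the key exists; if any of them is 0, the key definitely does not exist. Because different keys can share positions, a positive result may be a false positive.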
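
The principle can be sketched as a small class. This is an illustrative implementation, not the one any particular library uses: deriving the N hash functions by salting SHA-256 with an index, and the default sizes, are assumptions of this example.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: a bit array plus N salted hash functions."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)  # all bits start at 0

    def _positions(self, key: str):
        # Derive N positions by salting SHA-256 with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        # True may be a false positive; False is always correct.
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key))
```

A request whose key fails might_contain can be rejected before it touches the cache or the database.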

3.5 Cache avalanche problem

Cache avalanche: a large batch of cached data expires at the same time while query volume is huge, so all requests go straight to the database, putting it under excessive pressure or even bringing it down.

3.6 Cache breakdown problem

Cache breakdown: a hot key expires at some point in time while a large number of concurrent requests are arriving for it, so those requests all hit the database.

Cache breakdown looks similar to cache avalanche. One view of the difference is that an avalanche means the database is under excessive pressure or even goes down, whereas a breakdown is only a large number of concurrent requests reaching the database, so breakdown can be considered a subset of avalanche. Some articles instead hold that the difference is that breakdown concerns a single hot key, while avalanche concerns many keys.

There are two solutions:

3.7. Cache hot key problem

In Redis, keys that are accessed very frequently are called hot keys. When requests for a hot key reach the server host, the sheer request volume may exhaust the host's resources or even bring it down, affecting normal service.

How do hot keys arise? There are two main causes:

- Users consume far more data than they produce, as in flash sales, breaking news, and other read-heavy, write-light scenarios.

- Requests concentrate on one shard and exceed the capacity of a single Redis server. For example, a key with a fixed name always hashes to the same server; when access spikes, the load exceeds the machine's limit and a hot key problem results.

So how can hot keys be identified in day-to-day development?

- Judge from experience which keys are hot;

- Collect statistics on the client and report them;

- Collect and report them at the service proxy layer.

How can the hot key problem be solved?

- Scale out the Redis cluster: add shard replicas to balance read traffic;

- Spread the hot key: back up a key as key1, key2, ..., keyN so that N copies of the same data exist and are distributed across different shards. On access, pick one of the N copies at random to further share the read traffic;

- Use a second-level cache, that is, a JVM local cache, to reduce read requests to Redis.
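
The key1...keyN backup scheme can be sketched as two small helpers. The function names are hypothetical, and the sketch assumes the copies are named by appending an index to the original key, as in the description above.

```python
import random


def replica_keys(hot_key: str, n_copies: int):
    """Names of the N backup copies: key1, key2, ..., keyN."""
    return [f"{hot_key}{i}" for i in range(1, n_copies + 1)]


def pick_replica(hot_key: str, n_copies: int, rng=random) -> str:
    """Choose one of the N copies at random for a read."""
    return f"{hot_key}{rng.randint(1, n_copies)}"
```

Reads call pick_replica, so traffic is spread across the N copies. Writes have to update every name returned by replica_keys, which is the cost of this approach.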

4. Redis configuration and operation

4.1 Use long-lived connections instead of short-lived ones, and configure the client's connection pool properly

4.2 Only use db0

In a standalone Redis architecture, do not use databases other than db0. There are two reasons:

4.3 Set maxmemory and an appropriate eviction policy

This prevents memory usage from growing without bound. For example, when business volume grows and more and more Redis keys are used, memory may simply run out, and the operations team may forget to add more. Should Redis just go down in that case? To avoid this, choose a maxmemory-policy (the eviction policy applied when maxmemory is reached) and set expiration times according to the actual business. There are eight eviction policies in total: volatile-lru, allkeys-lru, volatile-lfu, allkeys-lfu, volatile-random, allkeys-random, volatile-ttl, and noeviction.

4.4 Turn on the lazy-free mechanism

Redis 4.0 and later support the lazy-free mechanism. If your Redis still holds big keys, it is recommended to turn lazy-free on. With it enabled, when Redis deletes a big key, the time-consuming work of releasing memory runs in a background thread, reducing the blocking impact on the main thread.
