A different look at the Seventh National Census, from a technical perspective

After reading the bulletin of the Seventh National Census, you will find that the whole process resembles the data analysis process in an enterprise. This article first looks at what the two have in common, and then at which findings of the Seventh Census deserve attention from Internet practitioners.

We use the "Seventh National Census Bulletin" as the reference for this discussion.

Innovate the census content and census methods. Electronic data collection is fully adopted: enumerators use electronic devices to collect the data and report it directly in real time.

Collection and reporting correspond, in an enterprise, to buried point reporting (event tracking).

How should we understand buried points? A "point" is a specific location in an app or on a website. A buried point (tracking point) is placed at such a location: after the user takes a certain action there, the user's current information is recorded. The action can be browsing, clicking, swiping, and so on. For example, in an e-commerce app, when a user clicks the order button, the order time, amount, product ID, mobile network status, mobile operating system and other information are recorded. This is a buried point. A buried point can record any information, but three pieces are essential: time, place, and person. Time is when the behavior occurred and is used to analyze the sequence of user actions; place is the specific location on the current page where the behavior occurred; person is the user identifier, which is generally generated from the device information of the mobile phone or PC. Other information is collected selectively, according to the needs of data analysis.
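The record described above can be sketched in Python as follows. This is only an illustration: the class name `TrackingEvent`, the helper `make_order_click_event`, and all field names and sample values are assumptions, not a format used by any particular company.

```python
import json
import time
from dataclasses import dataclass, field, asdict


@dataclass
class TrackingEvent:
    """One buried-point record: the three essentials plus optional extras."""
    timestamp: float   # time: when the behavior occurred (Unix seconds)
    location: str      # place: page and element where it occurred
    user_id: str       # person: identifier derived from device information
    action: str        # e.g. "browse", "click", "swipe"
    extra: dict = field(default_factory=dict)  # collected only as analysis requires

    def to_json(self) -> str:
        """Serialize the record so it can be reported to a server."""
        return json.dumps(asdict(self), sort_keys=True)


def make_order_click_event(user_id: str, amount: float, product_id: str) -> TrackingEvent:
    """Build the record for a click on the order button (hypothetical values)."""
    return TrackingEvent(
        timestamp=time.time(),
        location="order_page/submit_button",
        user_id=user_id,
        action="click",
        extra={"amount": amount, "product_id": product_id,
               "network": "wifi", "os": "android"},
    )


event = make_order_click_event("device-3f2a", 59.9, "sku-1001")
payload = event.to_json()  # the string that would be sent to the server
```

Keeping the three essential fields as required attributes and everything else in `extra` mirrors the distinction between essential and selectively collected information.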

After the information is collected, it is usually reported in real time to the enterprise's servers for subsequent analysis. Based on these buried points, we can analyze what content the user browsed and when, what content the user finally clicked on, how long the user viewed it, what the user finally purchased, how much the user spent, and so on. From this we can further infer what content the user prefers and what the user's spending power is, and then make personalized recommendations.

Make full use of Internet cloud technology, cloud services and cloud applications to complete data processing work

Because the volume of buried-point data is large and the data must be stored for a long time, an enterprise's reported buried points are generally kept in distributed storage, and most subsequent analysis is done with a distributed computing framework. Distributed storage and computing frameworks can be open source, such as Hadoop, Hive and Spark, or self-developed by enterprises, such as Alibaba Cloud's MaxCompute. Today, distributed storage and computing are mostly consumed as cloud services. A company I worked for originally bought its own servers to build distributed services. The operation and maintenance costs proved unbearably high, so it eventually moved to Alibaba Cloud, which saved a large part of those costs.

Carry out secure management of census data collection, transmission, and storage in accordance with the national network security level three protection standards to ensure the security of citizens’ personal information

This part is about personal information protection. In an enterprise, confidential user information such as ID numbers is desensitized: the ID number is encoded into a unique identifier, so that the data remains usable without leaking private information.

In addition to desensitization, data must also be classified by confidentiality level, with a corresponding permission review mechanism. Anyone who uses data of a given confidentiality level must apply for the corresponding permission, and the access is recorded so that any information leak can be traced.
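As a rough illustration of the desensitization step, the sketch below maps an ID number to a stable identifier with a keyed hash (HMAC-SHA256). The article does not say which encoding enterprises actually use, so the keyed hash, the function name `desensitize`, the key, and the sample IDs are all assumptions.

```python
import hashlib
import hmac

# Assumption: in practice the key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def desensitize(id_number: str, key: bytes = SECRET_KEY) -> str:
    """Map an ID number to a stable pseudonymous identifier using HMAC-SHA256."""
    digest = hmac.new(key, id_number.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Placeholder IDs, not real ID numbers.
token_a = desensitize("EXAMPLE-ID-0001")
token_b = desensitize("EXAMPLE-ID-0001")
token_c = desensitize("EXAMPLE-ID-0002")
```

The same input always yields the same token, so records can still be joined per user, while the original number does not appear in the analysis data.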

Census agencies at all levels strictly implement quality control requirements and carefully carry out quality inspection to ensure the quality of work at all stages of the census

This part is about data quality monitoring. In an enterprise, monitoring the quality of buried points is also a key task. If the reported buried points are all wrong and unusable, they are obviously meaningless.

Enterprises generally monitor the quality of buried points in two ways. First, they validate individual buried points: checking whether each field of a reported buried point has the correct format, monitoring the null rate of core fields, and so on. Second, they monitor traffic, using year-on-year comparison to determine whether the volume of reported buried points is abnormal.
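The two kinds of checks can be sketched as below. The required fields, the function names, and the 30% tolerance are illustrative assumptions; real thresholds depend on the business.

```python
# Assumed core fields and their expected types.
REQUIRED_FIELDS = {"timestamp": (int, float), "location": str, "user_id": str}


def validate_event(event: dict) -> list:
    """Return a list of problems found in a single reported buried point."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        value = event.get(name)
        if value is None or value == "":
            problems.append(f"{name}: missing")
        elif not isinstance(value, expected_type):
            problems.append(f"{name}: wrong type {type(value).__name__}")
    return problems


def null_rate(events: list, field_name: str) -> float:
    """Share of events whose core field is missing or empty."""
    if not events:
        return 0.0
    empty = sum(1 for e in events if e.get(field_name) in (None, ""))
    return empty / len(events)


def traffic_is_abnormal(current: int, baseline: int, tolerance: float = 0.3) -> bool:
    """Flag the volume if it deviates from the baseline period by more than `tolerance`."""
    if baseline == 0:
        return current > 0
    return abs(current - baseline) / baseline > tolerance


events = [
    {"timestamp": 1620000000.0, "location": "home/banner", "user_id": "u1"},
    {"timestamp": "yesterday", "location": "home/banner", "user_id": ""},
]
report = [validate_event(e) for e in events]
user_null_rate = null_rate(events, "user_id")
alarm = traffic_is_abnormal(current=5200, baseline=10000)
```

Here `baseline` stands for the volume in the comparison period, for example the same day a year earlier.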

The seventh national census comprehensively surveyed the size, structure, distribution and other aspects of China's population and captured the trends in population change. It provides accurate statistical information to support improving China's population development strategy and policy system, formulating economic and social development plans, and promoting high-quality economic development.

This part is the data analysis we are familiar with. In an enterprise, it means analyzing user behavior, drawing valuable conclusions, and providing decision support for iterating on the app or website.

Data analysis is generally divided into two parts. One part is numerical analysis, which can be simple numerical statistics or can use machine learning in Python for fitting, classification, and so on. When the amount of data is large, distributed computing frameworks such as Hadoop and Spark are used. The other part is text analysis, which relies more on machine learning and deep learning methods to uncover what numerical analysis cannot reveal.
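For the "simple numerical statistics" case, a minimal sketch using only the Python standard library might look like this. The order records and the function name `spend_per_user` are made up for illustration; at larger scale the same aggregation would run on a distributed framework instead.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical order events, shaped like the buried-point records described earlier.
orders = [
    {"user_id": "u1", "amount": 30.0},
    {"user_id": "u2", "amount": 120.0},
    {"user_id": "u1", "amount": 50.0},
]


def spend_per_user(events: list) -> dict:
    """Sum the order amount for each user."""
    totals = defaultdict(float)
    for e in events:
        totals[e["user_id"]] += e["amount"]
    return dict(totals)


totals = spend_per_user(orders)                     # total spend per user
average_order = mean(e["amount"] for e in orders)   # average order value
```

Per-user totals like these are one simple input for estimating a user's spending power.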

One more point. The age, gender, education and other information we see in the census is generally called a user portrait (user profile) in companies. This information cannot be collected through buried points, but it is very important data for an enterprise. It often has to be predicted from user behavior using machine learning and deep learning algorithms.

This is the end of the first part, in which we used the census as an example to introduce the enterprise data analysis process and the technologies involved. Next, let's briefly discuss which aspects we should pay attention to as Internet practitioners.

The quality of the population continues to improve, and new advantages in talent dividends will gradually emerge. At the same time, the employment pressure of college students is increasing, and the pace of industrial transformation and upgrading needs to be accelerated.

White-collar workers have been in oversupply for a long time, and the intense "996" involution will continue. As a result, talent costs for high-tech enterprises fall, which is how "new advantages in talent dividends gradually emerge."

As the saying goes, to forge iron you must be strong yourself: you need to keep improving your real skills and knowledge.

The accelerated population agglomeration not only reflects the trend changes in urbanization and economic agglomeration, but also puts forward new requirements for improving the quality of urbanization and promoting coordinated regional development.

The inflow of population into big cities is accelerating, while the loss of rural population is accelerating.

China’s urbanization process has not yet been completed. For students who have not yet graduated, choosing first-tier and new first-tier cities is a wise choice. For migrant workers who are already in big cities, buying a house in a central location is a wise choice.

The proportion of the elderly population is rising rapidly, and aging will be a basic national condition of China for some time to come. At the same time, the increase in the elderly population will also bring wisdom, inheritance, performance and expansion of demand.

Be prepared for a later retirement. It seems we have to worry not only about the mid-life crisis but also about an old-age crisis.

No company sits idle analyzing a pile of useless data all day, and the same goes for the census. What everyone should do is find the information that is useful to them and work out where to go next.

Related recommendations:

php past life, present life and future prospects

For beginners, how to quickly learn php from scratch? (To you who are confused)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Read CSV files and perform data analysis using pandas

Jan 09, 2024 am 09:26 AM

Pandas is a powerful data analysis tool that can easily read and process various types of data files. Among them, CSV files are one of the most common and commonly used data file formats. This article will introduce how to use Pandas to read CSV files and perform data analysis, and provide specific code examples. 1. Import the necessary libraries First, we need to import the Pandas library and other related libraries that may be needed, as shown below: importpandasaspd 2. Read the CSV file using Pan

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Introduction to data analysis methods

Jan 08, 2024 am 10:22 AM

Common data analysis methods: 1. Comparative analysis method; 2. Structural analysis method; 3. Cross analysis method; 4. Trend analysis method; 5. Cause and effect analysis method; 6. Association analysis method; 7. Cluster analysis method; 8 , Principal component analysis method; 9. Scatter analysis method; 10. Matrix analysis method. Detailed introduction: 1. Comparative analysis method: Comparative analysis of two or more data to find the differences and patterns; 2. Structural analysis method: A method of comparative analysis between each part of the whole and the whole. ; 3. Cross analysis method, etc.

How to build a fast data analysis application using React and Google BigQuery

Sep 26, 2023 pm 06:12 PM

How to build a fast data analysis application using React and Google BigQuery

Sep 26, 2023 pm 06:12 PM

How to use React and Google BigQuery to build fast data analysis applications Introduction: In today's era of information explosion, data analysis has become an indispensable link in various industries. Among them, building fast and efficient data analysis applications has become the goal pursued by many companies and individuals. This article will introduce how to use React and Google BigQuery to build a fast data analysis application, and provide detailed code examples. 1. Overview React is a tool for building

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

11 basic distributions that data scientists use 95% of the time

Dec 15, 2023 am 08:21 AM

Following the last inventory of "11 Basic Charts Data Scientists Use 95% of the Time", today we will bring you 11 basic distributions that data scientists use 95% of the time. Mastering these distributions helps us understand the nature of the data more deeply and make more accurate inferences and predictions during data analysis and decision-making. 1. Normal Distribution Normal Distribution, also known as Gaussian Distribution, is a continuous probability distribution. It has a symmetrical bell-shaped curve with the mean (μ) as the center and the standard deviation (σ) as the width. The normal distribution has important application value in many fields such as statistics, probability theory, and engineering.

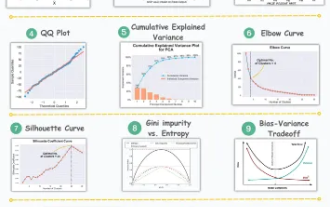

11 Advanced Visualizations for Data Analysis and Machine Learning

Oct 25, 2023 am 08:13 AM

11 Advanced Visualizations for Data Analysis and Machine Learning

Oct 25, 2023 am 08:13 AM

Visualization is a powerful tool for communicating complex data patterns and relationships in an intuitive and understandable way. They play a vital role in data analysis, providing insights that are often difficult to discern from raw data or traditional numerical representations. Visualization is crucial for understanding complex data patterns and relationships, and we will introduce the 11 most important and must-know charts that help reveal the information in the data and make complex data more understandable and meaningful. 1. KSPlotKSPlot is used to evaluate distribution differences. The core idea is to measure the maximum distance between the cumulative distribution functions (CDF) of two distributions. The smaller the maximum distance, the more likely they belong to the same distribution. Therefore, it is mainly interpreted as a "system" for determining distribution differences.

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

Machine learning and data analysis using Go language

Nov 30, 2023 am 08:44 AM

In today's intelligent society, machine learning and data analysis are indispensable tools that can help people better understand and utilize large amounts of data. In these fields, Go language has also become a programming language that has attracted much attention. Its speed and efficiency make it the choice of many programmers. This article introduces how to use Go language for machine learning and data analysis. 1. The ecosystem of machine learning Go language is not as rich as Python and R. However, as more and more people start to use it, some machine learning libraries and frameworks

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and php interfaces to implement data analysis and prediction of statistical charts

Dec 17, 2023 am 10:26 AM

How to use ECharts and PHP interfaces to implement data analysis and prediction of statistical charts. Data analysis and prediction play an important role in various fields. They can help us understand the trends and patterns of data and provide references for future decisions. ECharts is an open source data visualization library that provides rich and flexible chart components that can dynamically load and process data by using the PHP interface. This article will introduce the implementation method of statistical chart data analysis and prediction based on ECharts and php interface, and provide

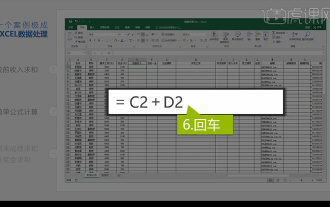

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

Integrated Excel data analysis

Mar 21, 2024 am 08:21 AM

1. In this lesson, we will explain integrated Excel data analysis. We will complete it through a case. Open the course material and click on cell E2 to enter the formula. 2. We then select cell E53 to calculate all the following data. 3. Then we click on cell F2, and then we enter the formula to calculate it. Similarly, dragging down can calculate the value we want. 4. We select cell G2, click the Data tab, click Data Validation, select and confirm. 5. Let’s use the same method to automatically fill in the cells below that need to be calculated. 6. Next, we calculate the actual wages and select cell H2 to enter the formula. 7. Then we click on the value drop-down menu to click on other numbers.