What are the three address mapping methods between main memory and cache?

There are three mapping methods: fully associative, direct, and set associative. Direct mapping places a main memory block in exactly one Cache line; fully associative mapping can place a main memory block in any Cache line; set associative mapping places a main memory block in exactly one Cache set, but in any line within that set.

The operating environment of this tutorial: Windows 10 system, Dell G3 computer.

The cache is a small, high-speed buffer memory and an important technique for bridging the speed mismatch between the CPU and main memory.

The CPU usually reads or writes one word at a time. When a CPU access misses in the cache, the requested word is transferred from main memory into the cache together with the neighboring words of its block. Programs tend to access nearby addresses next, so this makes it likely that subsequent memory accesses hit in the cache.

Therefore, the unit of data exchanged between main memory and the Cache is a data block. A block has a fixed size of several words, and the block size is the same in main memory and in the Cache.
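The block idea can be illustrated with a minimal sketch. It assumes word addressing and a block size of 4 words; both are illustrative choices, not values given in this article, and the helper names `split_address` and `block_words` are hypothetical.

```python
BLOCK_SIZE_WORDS = 4  # assumed block size in words; the article does not fix one


def split_address(word_address, block_size=BLOCK_SIZE_WORDS):
    """Return (block number, word offset within the block) for a word address."""
    return divmod(word_address, block_size)


def block_words(word_address, block_size=BLOCK_SIZE_WORDS):
    """Return every word address in the block that contains word_address."""
    block_number, _ = split_address(word_address, block_size)
    start = block_number * block_size
    return list(range(start, start + block_size))


print(split_address(13))  # (3, 1)
print(block_words(13))    # [12, 13, 14, 15]
```

On a miss for word 13, the whole block (words 12 to 15) would be brought into the cache, so a later access to word 14 or 15 can hit.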

The Cache-main memory level has two goals: on the one hand, the CPU's memory access speed should be close to the Cache access speed; on the other hand, the address space available to user programs should remain as large as the main memory capacity.

In a system that uses the Cache-main memory hierarchy, the Cache is transparent to the user program, that is to say, the user program does not need to know the existence of the Cache. Therefore, every time the CPU accesses memory, it still gives a main memory address just like the case when the Cache is not used. But in the Cache-main memory hierarchy, the first thing the CPU accesses is the Cache, not the main memory.

To this end, a mechanism is needed to convert the main memory address issued by the CPU into a Cache address. This conversion is closely tied to the mapping between main memory blocks and Cache blocks: when a CPU access misses in the Cache, the main memory block containing the requested word must be transferred into the Cache, and the placement strategy used directly determines the correspondence between main memory addresses and Cache addresses. This is the address mapping problem between main memory and the Cache that this section addresses.

There are three address mapping methods between main memory and cache: fully associative mapping, direct mapping, and set associative mapping.

-

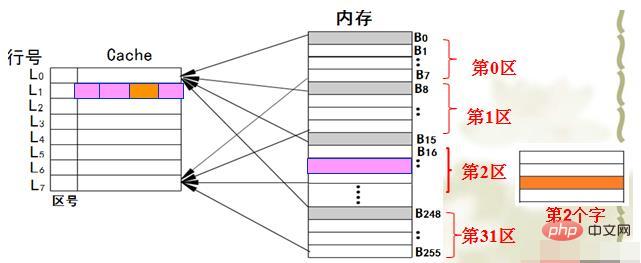

Direct mapping

Store a main memory block to a unique Cache line.

-

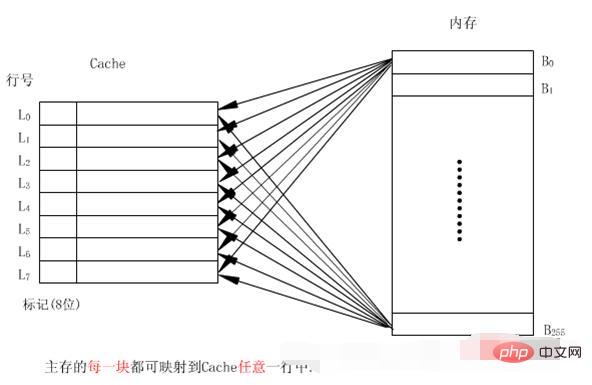

Fully associative mapping

You can store a main memory block to any Cache line.

-

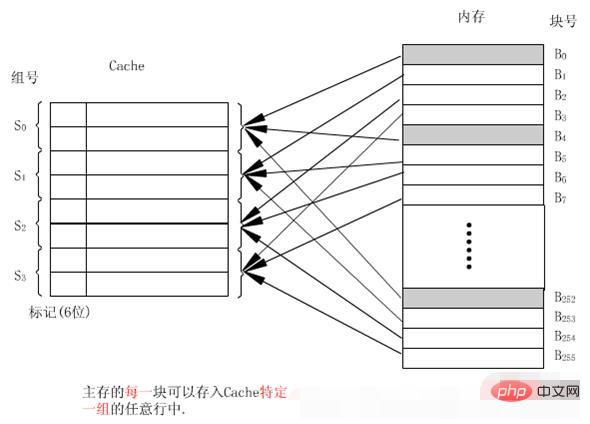

Set associative mapping

You can store a main memory block in any line of a unique Cache set.

## Direct mapping

This is a many-to-one mapping: many main memory blocks share one cache line, but each main memory block can be copied only to one specific line in the cache.

The cache line number i and the main memory block number j are related by i = j mod m, where m is the total number of lines in the cache.

Advantages: simple hardware, easy to implement

Disadvantages: low hit rate and low Cache storage space utilization, because blocks that map to the same line evict each other even when other lines are free
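The relationship i = j mod m and the conflict problem can be seen in a small simulation. This is a sketch under stated assumptions: the cache has m = 8 lines, each line remembers a tag computed as j // m to tell apart the blocks that share it (tags are standard practice but are not described in this article), and the class and function names are hypothetical.

```python
def direct_mapped_line(block_number, num_lines):
    """Cache line i for main memory block j: i = j mod m."""
    return block_number % num_lines


class DirectMappedCache:
    """Toy direct-mapped cache that tracks only which block each line holds."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.tags = [None] * num_lines  # None marks an empty (invalid) line

    def access(self, block_number):
        """Return True on a hit; on a miss, load the block into its line."""
        line = direct_mapped_line(block_number, self.num_lines)
        tag = block_number // self.num_lines  # assumed tag: j // m
        hit = self.tags[line] == tag
        if not hit:
            self.tags[line] = tag  # evicts whatever block was there
        return hit


cache = DirectMappedCache(num_lines=8)
print(direct_mapped_line(1, 8), direct_mapped_line(9, 8))  # 1 1
print([cache.access(b) for b in [1, 9, 1, 9]])  # [False, False, False, False]
```

Blocks 1 and 9 both map to line 1, so alternating between them misses every time even though the other seven lines stay empty.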

## Fully associative mapping

A main memory block can be copied to any line in the cache.

Advantages: high hit rate, high cache storage space utilization

Disadvantages: complex wiring, high cost, low speed
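A fully associative cache can be sketched as follows. The article does not say which block to evict when the cache is full, so this sketch assumes a least-recently-used (LRU) policy; the class name is hypothetical, and the dictionary lookup stands in for the hardware comparing the tags of all lines at once.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy fully associative cache: any block may occupy any line."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.lines = OrderedDict()  # block number -> None, least recent first

    def access(self, block_number):
        """Return True on a hit; on a miss, load the block (LRU eviction, assumed)."""
        if block_number in self.lines:
            self.lines.move_to_end(block_number)  # mark as most recently used
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # evict the least recently used block
        self.lines[block_number] = None
        return False


cache = FullyAssociativeCache(num_lines=8)
print([cache.access(b) for b in [1, 9, 1, 9]])  # [False, False, True, True]
```

With free placement, blocks 1 and 9 coexist in the cache, so only their first accesses miss.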

## Set associative mapping

Divide the cache into u sets of v lines each. The set in which a main memory block is stored is fixed, but the line within that set is flexible. The following relationships hold: the total number of cache lines is m = u × v, and the set number is q = j mod u, where j is the main memory block number.

Direct mapping is used between sets, and fully associative mapping is used within a set.

Advantages: simpler and faster hardware than fully associative mapping, and a higher hit rate than direct mapping
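The set number q = j mod u and the free choice of line within a set can be sketched in a small simulation. The assumptions here are u = 4 sets of v = 2 lines (so m = 8), a tag of j // u to identify blocks inside a set, and LRU replacement within each set; none of these values or policies come from this article, and the class name is hypothetical.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy set associative cache: fixed set, free choice of line within it."""

    def __init__(self, num_sets, lines_per_set):
        self.num_sets = num_sets            # u
        self.lines_per_set = lines_per_set  # v
        # One ordered dict per set: tag -> None, least recently used first.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, block_number):
        """Return True on a hit; on a miss, load the block into its set."""
        q = block_number % self.num_sets     # set number: q = j mod u
        tag = block_number // self.num_sets  # assumed tag: j // u
        lines = self.sets[q]
        if tag in lines:
            lines.move_to_end(tag)  # mark as most recently used
            return True
        if len(lines) >= self.lines_per_set:
            lines.popitem(last=False)  # assumed LRU eviction within the set
        lines[tag] = None
        return False


cache = SetAssociativeCache(num_sets=4, lines_per_set=2)
print([cache.access(b) for b in [1, 9, 1, 9]])  # [False, False, True, True]
```

Blocks 1 and 9 fall in the same set (q = 1) but occupy its two lines side by side, so the pattern that always missed under direct mapping now hits. A third block from the same set, such as 17, would still force an eviction.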
