5 rows in set (0.01 sec)

If you find that the lock contention is serious, such as the values of InnoDB_row_lock_waits and InnoDB_row_lock_time_avg are relatively high, you can also set InnoDB Monitors to further observe the tables and data where lock conflicts occur. Wait, and analyze the cause of lock contention.

The specific method is as follows:

mysql> CREATE TABLE innodb_monitor(a INT) ENGINE=INNODB;

Query OK, 0 rows affected (0.14 sec)

Then you can use the following statement to view:

mysql> Show innodb status\G;

The monitor can stop viewing by issuing the following statement:

mysql> ; DROP TABLE innodb_monitor;

Query OK, 0 rows affected (0.05 sec)

After setting up the monitor, in the display content of SHOW INNODB STATUS, there will be detailed information about the current lock waiting , including table name, lock type, lock record status, etc., to facilitate further analysis and problem determination. After opening the monitor, by default, the monitoring content will be recorded in the log every 15 seconds. If it is opened for a long time, the .err file will become very large. Therefore, after confirming the cause of the problem, the user must remember to delete the monitoring table to close it. monitor, or by starting the server using the "--console" option to turn off writing the log file.

5. InnoDB’s row lock mode and locking method

InnoDB implements the following two types of row locks.

Shared lock (S): allows one transaction to read a row and prevents other transactions from obtaining an exclusive lock on the same data set.

Exclusive lock (X): Allows transactions that obtain exclusive locks to update data, and prevents other transactions from obtaining shared read locks and exclusive write locks on the same data set.

In addition, in order to allow row locks and table locks to coexist and implement a multi-granularity locking mechanism, InnoDB also has two internally used intention locks (Intention Locks).

Both intention locks are It's a table lock.

Intention shared lock (IS): The transaction intends to add a row shared lock to the data row. The transaction must first obtain the IS lock of the table before adding a shared lock to a data row.

Intention exclusive lock (IX): The transaction intends to add a row exclusive lock to the data row. The transaction must first obtain the IX lock of the table before adding an exclusive lock to a data row.

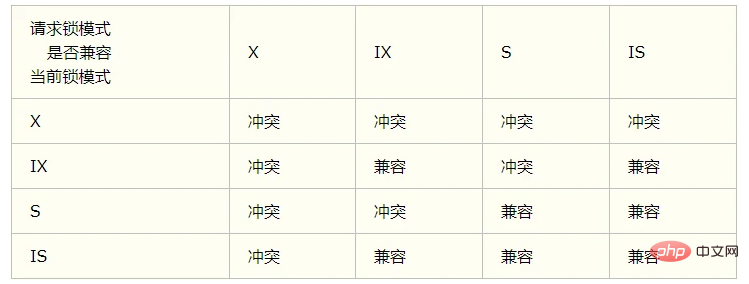

If the lock mode requested by a transaction is compatible with the current lock, InnoDB will grant the requested lock to the transaction; otherwise, if the two are not Compatible, the transaction will wait for the lock to be released.

Intention locks are automatically added by InnoDB and do not require user intervention.

The summary is as follows:

1. For UPDATE, DELETE and INSERT statements, InnoDB will automatically add exclusive locks (X) to the involved data sets;

2. For ordinary SELECT statement, InnoDB will not add any locks;

3. Transactions can add shared locks or exclusive locks to the recordset through the following statements.

Shared lock(S): SELECT * FROM table_name WHERE ... LOCK IN SHARE MODE.

Exclusive lock (X): SELECT * FROM table_name WHERE ... FOR UPDATE.

Use SELECT ... IN SHARE MODE to obtain a shared lock, which is mainly used to confirm whether a certain row of records exists when data dependencies are required, and to ensure that no one performs UPDATE or DELETE operations on this record.

However, if the current transaction also needs to update the record, it is likely to cause a deadlock. For applications that need to update the row record after locking it, you should use the SELECT... FOR UPDATE method to obtain exclusive Lock.

6. InnoDB row lock implementation method

InnoDB row lock is implemented by locking the index items on the index. This is different from MySQL and Oracle. The latter is implemented by locking the index items in the data block. This is achieved by locking the corresponding data rows.

InnoDB’s row lock implementation feature means that InnoDB will use row-level locks only when data is retrieved through index conditions. Otherwise, InnoDB will use table locks!

In practical applications, special attention should be paid to this feature of InnoDB row locks, otherwise it may cause a large number of lock conflicts, thus affecting concurrency performance.

(1) When querying without index conditions, InnoDB does use table locks instead of row locks.

(2) Since MySQL's row lock is a lock for the index, not for the record, although records of different rows are accessed,

but if the same index is used key, there will be a lock conflict. Please pay attention to this when designing your application.

(3) When the table has multiple indexes, different transactions can use different indexes to lock different rows.

In addition, whether using primary key index, unique index or ordinary index , InnoDB will use row locks to lock data.

(4) Even if an index field is used in the condition, whether to use the index to retrieve data is determined by MySQL by judging the cost of different execution plans. If MySQL believes that a full table scan is more efficient, such as For some very small tables, it will not use indexes. In this case, InnoDB will use table locks instead of row locks. Therefore, when analyzing lock conflicts, don't forget to check the SQL execution plan to confirm whether the index is actually used. For a detailed discussion of the circumstances under which MySQL does not use indexes, see the introduction in the "Index Issues" section of this chapter.

7. Gap lock (Next-Key lock)

When we use range conditions instead of equality conditions to retrieve data and request shared or exclusive locks, InnoDB will lock the index entries of existing data records that meet the conditions; for key values that are within the condition range but do not exist The record is called "GAP", and InnoDB will also lock this "gap". This locking mechanism is the so-called gap lock (Next-Key lock). If there are only 101 records in the emp table, the empid values are 1,2,...,100,101. The following SQL:

Select * from emp where empid > 100 for update;

is a range condition retrieval. InnoDB will not only lock the records with empid value 101 that meet the conditions, but also lock the "gaps" with empid greater than 101 (these records do not exist). The purpose of InnoDB using gap locks is, on the one hand, to prevent phantom reads and meet the requirements of relevant isolation levels. For the above example, if gap locks are not used, if other transactions insert any record with emid greater than 100, then if this transaction If the above statement is executed again, phantom reading will occur; on the other hand, it is to meet its recovery and replication needs. The impact of its recovery and replication on the lock mechanism, as well as InnoDB's use of gap locks under different isolation levels, will be further introduced in subsequent chapters.

Obviously, when using range conditions to retrieve and lock records, InnoDB's locking mechanism will block concurrent insertion of key values within the qualified range, which often results in serious lock waits. Therefore, in actual application development, especially applications with a lot of concurrent insertions, we should try our best to optimize the business logic, try to use equal conditions to access update data, and avoid using range conditions.

Special note is that, in addition to using gap locks when locking through range conditions, InnoDB will also use gap locks if equal conditions are used to request a lock for a non-existent record!

The need for recovery and replication, the impact on the InnoDB lock mechanism

MySQL records the successful execution of INSERT, UPDATE, DELETE and other SQL statements to update data through BINLOG, and thus realizes the MySQL database Recovery and master-slave replication. MySQL's recovery mechanism (replication is actually continuous BINLOG-based recovery on Slave Mysql) has the following characteristics.

First, MySQL recovery is at the SQL statement level, that is, re-executing the SQL statement in BINLOG. This is different from the Oracle database, which is based on database file blocks.

Second, MySQL's BINLOG is recorded in the order in which transactions are submitted, and recovery is also performed in this order. This is also different from Oracle. Oracle restores data according to the System Change Number (SCN). When each transaction starts, Oracle will allocate a globally unique SCN. The order of the SCN and the time order of the transaction start are Consistent.

It can be seen from the above two points that MySQL's recovery mechanism requires that before a transaction is submitted, other concurrent transactions cannot insert any records that meet its locking conditions, that is, phantom reads are not allowed. This has exceeded The ISO/ANSI SQL92 "repeatable read" isolation level requirement actually requires transactions to be serialized.

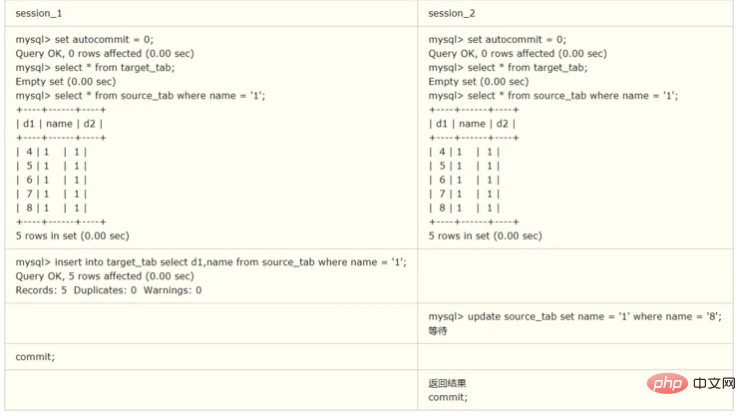

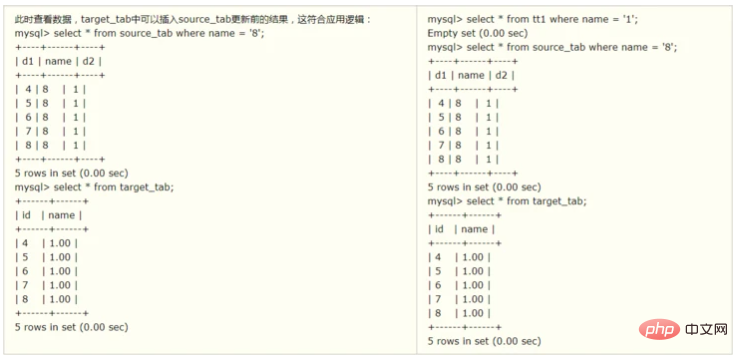

In addition, for SQL statements such as "insert into target_tab select * from source_tab where ..." and "create table new_tab ...select ... From source_tab where ...(CTAS)", users There is no update operation done on source_tab, but MySQL does special processing for this kind of SQL statement.

(Here InnoDB adds a shared lock to source_tab and does not use multi-version data consistency reading technology!)

In the above example, simply Reading the data in the source_tab table is equivalent to executing an ordinary SELECT statement. Just use consistent reading. ORACLE does exactly this. It uses multi-version data implemented by MVCC technology to achieve consistent reading without adding any locks to source_tab. We know that InnoDB also implements multi-version data, and does not require any locks for ordinary SELECT consistent reading; but here InnoDB adds a shared lock to source_tab and does not use multi-version data consistent reading technology!

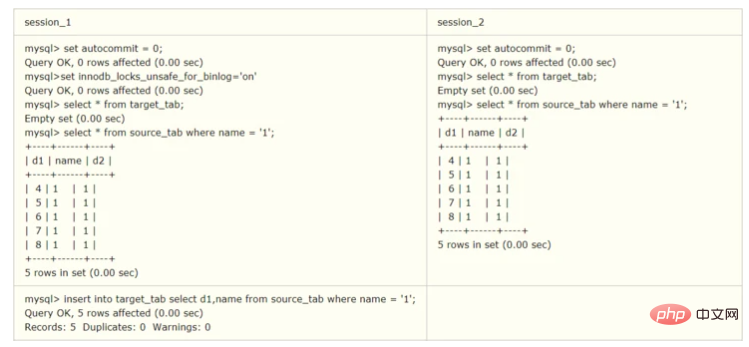

Why does MySQL do this? The reason is to ensure the correctness of recovery and replication. Because without locking, if other transactions update source_tab during the execution of the above statement, it may lead to incorrect data recovery results. To demonstrate this, let's repeat the previous example. The difference is that before session_1 executes the transaction, the value of the system variable innodb_locks_unsafe_for_binlog is set to "on" (its default value is off)

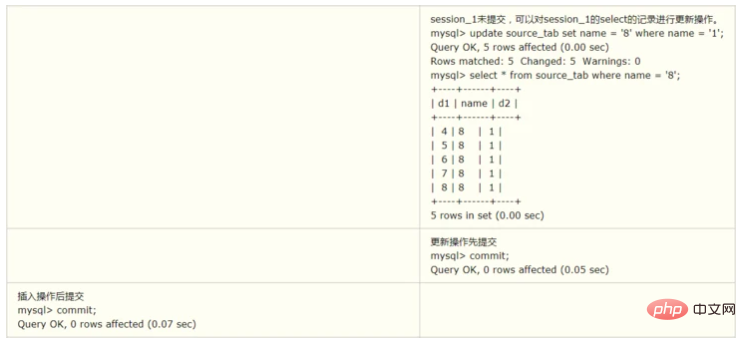

As can be seen from the above, after setting the value of the system variable innodb_locks_unsafe_for_binlog to "on", InnoDB no longer locks the source_tab, and the result is in line with the application logic. However, if the content of BINLOG is analyzed:

SET TIMESTAMP=1169175130 ;

BEGIN;

# at 274

#070119 10:51:57 server id 1 end_log_pos 105 Query thread_id=1 exec_time=0 error_code=0

SET TIMESTAMP=1169175117;

update source_tab set name = '8' where name = '1';

# at 379

#070119 10:52:10 server id 1 end_log_pos 406 error_code=0

SET TIMESTAMP=1169175134;

BEGIN;

# at 474

#070119 10:51:29 server id 1 end_log_pos 119 Query thread_id=2 exec_time=0 error_code=0

SET TIMESTAMP=1169175089;

insert into target_tab select d1,name from source_tab where name = '1';

# at 593

#070119 10:52:14 server id 1 end_log_pos 620 Xid = 7

COMMIT;

It can be found that in BINLOG, the location of the update operation is INSERT. ..Before SELECT, if you use this BINLOG for database recovery, the recovery results will not match the actual application logic; if you copy, it will lead to inconsistency between the master and slave databases!

Therefore, the INSERT...SELECT... and CREATE TABLE...SELECT... statements may prevent concurrent updates to the source table, causing waiting for the source table lock. If the query is complex, it will cause serious performance problems, and we should try to avoid using it in our applications. In fact, MySQL calls this kind of SQL non-deterministic SQL and is not recommended.

If this kind of SQL must be used to implement business logic in the application, and you do not want to affect the concurrent updates of the source table, you can take the following two measures:

First, take the above example As in, set the value of innodb_locks_unsafe_for_binlog to "on" to force MySQL to use multi-version data consistency reading. However, the price paid is that the data may not be restored or copied correctly using binlog, so this method is not recommended.

The second is to achieve it indirectly by using a combination of "select * from source_tab ... Into outfile" and "load data infile ..." statements. In this way, MySQL will not lock source_tab.

8. Differences in InnoDB’s consistent reading and locking under different isolation levels

As mentioned earlier, locks and multi-version data are the key to InnoDB’s implementation of consistent reading and ISO/ANSI SQL92 isolation levels. Therefore, under different isolation levels, the consistent read strategy and required locks used by InnoDB when processing SQL are different. At the same time, the characteristics of data recovery and replication mechanisms also have a great impact on some SQL consistent read strategies and lock strategies. These characteristics are summarized as shown in Table 20-16 for the convenience of readers.

1: When the isolation level is RC, gap locks are not used. The official documentation explains as follows:

Each consistent read, even within the same transaction, sets and reads its own fresh snapshot. For information about consistent reads, see Section 14.8.2.3, “Consistent Nonlocking Reads”.

For locking reads (SELECT with FOR UPDATE or LOCK IN SHARE MODE), UPDATE statements, and DELETE statements, InnoDB locks only index records, not the gaps before them, and thus permits the free insertion of new records next to locked records. Gap locking is only used for foreign-key constraint checking and duplicate-key checking.

Official Document The description address is: https://dev.mysql.com/doc/refman/5.5/en/innodb-transaction-isolation-levels.html

2: Under the repeatable read isolation level, if the index is Unique, and the search is also unique, no gap lock is needed, otherwise gap lock will be used. The official description is as follows:

REPEATABLE READ

This is the default isolation level for InnoDB. Consistent reads within the same transaction read the snapshot established by the first read. This means that if you issue several plain (nonlocking) SELECT statements within the same transaction, theseSELECT statements are consistent also with respect to each other. See Section 14.8.2.3, “Consistent Nonlocking Reads”.

For locking reads (SELECT with FOR UPDATE or LOCK IN SHARE MODE), UPDATE, and DELETE statements, locking depends on whether the statement uses a unique index with a unique search condition, or a range -type search condition.

For a unique index with a unique search condition, InnoDB locks only the index record found, not the gap before it.

For other search conditions, InnoDB locks the index range scanned, using gap locks or next-key locks to block insertions by other sessions into the gaps covered by the range. For information about gap locks and next-key locks, see Section 14.8.1, “InnoDB Locking”.

The official document description address is: https://dev.mysql.com/doc/refman/5.5/en/innodb-transaction-isolation-levels.html

9. When to use table locks?

For InnoDB tables, row-level locks should be used in most cases, because transactions and row locks are often the reasons why we choose InnoDB tables. However, in individual special transactions, table-level locks can also be considered.

The first situation is: the transaction needs to update most or all of the data, and the table is relatively large. If the default row lock is used, not only will the transaction execution efficiency be low, but it may also cause other transactions to wait for a long time for locks and Lock conflict, in this case, you can consider using table locks to improve the execution speed of the transaction.

The second situation is: the transaction involves multiple tables and is relatively complex, which is likely to cause deadlock and cause a large number of transaction rollbacks. In this case, you can also consider locking the tables involved in the transaction once to avoid deadlock and reduce the database overhead caused by transaction rollback.

Of course, there should not be too many of these two types of transactions in the application, otherwise, you should consider using MyISAM tables.

Under InnoDB, pay attention to the following two points when using table locks.

(1) Although you can add table-level locks to InnoDB using LOCK TABLES, it must be noted that table locks are not managed by the InnoDB storage engine layer, but by the upper layer: MySQL Server. , only when autocommit=0, innodb_table_locks=1 (default setting), the InnoDB layer can know the table lock added by MySQL, and MySQL Server can also perceive the row lock added by InnoDB. In this case, InnoDB can automatically identify the table-level lock involved. deadlock; otherwise, InnoDB will not be able to automatically detect and handle this deadlock. Regarding deadlock, we will continue to discuss it in the next section.

(2) When using LOCK TABLES to lock the InnoDB table, be careful to set AUTOCOMMIT to 0, otherwise MySQL will not lock the table; do not use UNLOCK TABLES to release the table lock before the transaction ends. Because UNLOCK TABLES will implicitly commit the transaction; COMMIT or ROLLBACK cannot release the table-level lock added with LOCK TABLES, and the table lock must be released with UNLOCK TABLES. For the correct method, see the following statement.

For example, if you need to write to table t1 and read from table t, you can do as follows:

SET AUTOCOMMIT=0;

LOCK TABLES t1 WRITE, t2 READ, ...;

[do something with tables t1 and t2 here];

COMMIT;

UNLOCK TABLES;

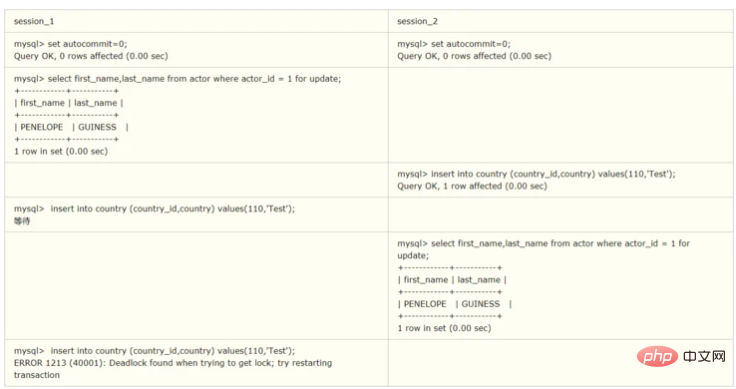

10. About deadlock

As mentioned above, MyISAM table locks are deadlock free. This is because MyISAM always obtains all the locks it needs at one time, either to satisfy them all or to wait, so there will be no deadlocks. Lock. But in InnoDB, except for transactions composed of a single SQL, locks are acquired gradually, which determines that deadlock is possible in InnoDB. Table 20-17 shows an example of a deadlock.

In the above example, both transactions need to obtain the exclusive lock held by the other party to continue completing the transaction. This kind of cyclic lock waiting is a typical deadlock.

After a deadlock occurs, InnoDB can generally automatically detect it and cause one transaction to release the lock and roll back, while another transaction obtains the lock and continues to complete the transaction. However, when external locks or table locks are involved, InnoDB cannot completely automatically detect deadlocks. This needs to be solved by setting the lock wait timeout parameter innodb_lock_wait_timeout. It should be noted that this parameter is not only used to solve the deadlock problem. When concurrent access is relatively high, if a large number of transactions are suspended because the required locks cannot be obtained immediately, it will occupy a large amount of computer resources and cause serious performance problems. The problem even drags across databases. We can avoid this situation by setting an appropriate lock wait timeout threshold.

Generally speaking, deadlocks are problems of application design. By adjusting business processes, database object design, transaction size, and SQL statements that access the database, most deadlocks can be avoided.

The following will introduce several common methods to avoid deadlock through examples.

(1) In an application, if different programs access multiple tables concurrently, try to agree to access the tables in the same order, which can greatly reduce the chance of deadlock. In the following example, because the two sessions access the two tables in different orders, the chance of deadlock is very high! But if accesses are done in the same order, deadlock can be avoided.

(2) When the program processes data in batches, if the data is sorted in advance to ensure that each thread processes records in a fixed order, it can also be greatly reduced. Deadlock is possible.

(3) In a transaction, if you want to update a record, you should directly apply for a lock of sufficient level, that is, an exclusive lock, instead of applying for a shared lock first and then update it again. Apply for an exclusive lock, because when the user applies for an exclusive lock, other transactions may have obtained the shared lock of the same record, causing lock conflicts or even deadlocks. For specific demonstration, please refer to the example in Section 20.3.3.

(4) As mentioned before, under the REPEATABLE-READ isolation level, if two threads use SELECT...FOR UPDATE to add exclusive locks to records with the same condition at the same time, if there is no record that meets the condition, the two threads will All will be locked successfully. The program discovers that the record does not yet exist and attempts to insert a new record. If both threads do this, a deadlock will occur. In this case, changing the isolation level to READ COMMITTED can avoid the problem.

(5) When the isolation level is READ COMMITTED, if both threads execute SELECT...FOR UPDATE first, determine whether there are records that meet the conditions. If not, , insert the record. At this time, only one thread can insert successfully, and the other thread will wait for a lock. When the first thread submits, the second thread will make an error due to the primary key. However, although this thread makes an error, it will obtain an exclusive lock! At this time, if a third thread applies for an exclusive lock, a deadlock will also occur.

In this case, you can perform the insertion operation directly, and then catch the primary key duplicate exception, or always execute ROLLBACK to release the acquired exclusive lock when encountering a primary key duplicate error.

Although deadlocks can be greatly reduced through the design and SQL optimization and other measures introduced above, deadlocks are difficult to completely avoid. Therefore, it is a good programming habit to always catch and handle deadlock exceptions in programming.

If a deadlock occurs, you can use the SHOW INNODB STATUS command to determine the cause of the last deadlock. The returned results include detailed information about deadlock-related transactions, such as the SQL statement that caused the deadlock, the locks that the transaction has acquired, what locks it is waiting for, and the transactions that were rolled back. Based on this, the causes of deadlock and improvement measures can be analyzed.

The following is a sample output of SHOW INNODB STATUS:

mysql> show innodb status \G

InnoDB Summary

This chapter focuses on MyISAM in MySQL The implementation characteristics of table-level locks and InnoDB row-level locks are discussed, and lock problems and solutions often encountered by the two storage engines are discussed.

For MyISAM table locks, the following points are mainly discussed:

(1) Shared read locks (S) are compatible, but shared read locks (S) are not compatible with exclusive writes The locks (X) and the exclusive write locks (X) are mutually exclusive, which means that reading and writing are serial.

(2) Under certain conditions, MyISAM allows queries and inserts to be executed concurrently. We can use this to solve the lock contention problem for queries and inserts into the same table in applications.

(3) MyISAM's default lock scheduling mechanism is write priority, which is not necessarily suitable for all applications. Users can adjust reading and writing by setting the LOW_PRIORITY_UPDATES parameter or specifying the LOW_PRIORITY option in the INSERT, UPDATE, and DELETE statements. Lock contention.

(4) Since the locking granularity of the table lock is large and the reading and writing are serial, if there are many update operations, the MyISAM table may have serious lock waits. You can consider using the InnoDB table. to reduce lock conflicts.

For InnoDB tables, this chapter mainly discusses the following contents.

InnoDB's row lock is based on lock index. If the data is not accessed through the index, InnoDB will use table lock.

Introduces the InnoDB gap lock (Next-key) mechanism and the reasons why InnoDB uses gap locks.

Under different isolation levels, InnoDB’s locking mechanism and consistent read strategy are different.

MySQL recovery and replication also have a great impact on the InnoDB lock mechanism and consistent read strategy.

Lock conflicts and even deadlocks are difficult to completely avoid.

After understanding the InnoDB lock characteristics, users can reduce lock conflicts and deadlocks through design and SQL adjustments, including:

Use a lower isolation level as much as possible;

Carefully design the index, and try to use the index to access data to make locking more accurate, thereby reducing the chance of lock conflicts.

Choose a reasonable transaction size, and the probability of lock conflicts for small transactions is smaller.

When locking the record set display, it is best to request a sufficient level of lock at one time. For example, if you want to modify data, it is best to apply for an exclusive lock directly instead of applying for a shared lock first and then request an exclusive lock when modifying, which can easily lead to deadlock.

When different programs access a group of tables, they should try to agree to access each table in the same order. For a table, try to access the rows in the table in a fixed order. This greatly reduces the chance of deadlock.

Try to use equal conditions to access data, so as to avoid the impact of gap locks on concurrent insertion.

Do not apply for a lock level that exceeds the actual need; unless necessary, do not display locking when querying.

For some specific transactions, table locks can be used to increase processing speed or reduce the possibility of deadlock.