What are the stable sorting algorithms?

Of the common sorting algorithms, bubble sort, insertion sort, merge sort, and radix sort are stable, while selection sort, quick sort, Shell sort (Hill sort), and heap sort are not.

The operating environment of this tutorial: Windows 10 system, Dell G3 computer.

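A sorting algorithm is stable if elements with equal keys keep their original relative order in the output. Below, we analyze the stability of common sorting algorithms and give a brief reason for each.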

Stability of each algorithm:

1. Bubble sort

Bubble sort moves small elements toward the front (or large elements toward the back). Every comparison is between two adjacent elements, and every swap also happens between those same two elements. If two adjacent elements are equal, there is no reason to swap them, so a correct implementation leaves them where they are.

If two equal elements are not adjacent, earlier pairwise swaps may make them adjacent, but even then they are not swapped with each other. The relative order of equal elements therefore never changes, so bubble sort is a stable sorting algorithm.
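The argument above can be checked with a short sketch. It assumes records are (key, tag) tuples sorted by key only, so the tags show whether equal keys keep their input order; the function name bubble_sort and the sample data are illustrative, not from the original article.

```python
def bubble_sort(items, key=lambda x: x):
    """Bubble sort that swaps adjacent elements only when strictly out of order."""
    a = list(items)
    n = len(a)
    for end in range(n - 1, 0, -1):
        swapped = False
        for i in range(end):
            # Strict '>' means equal neighbours are never swapped, which keeps the sort stable.
            if key(a[i]) > key(a[i + 1]):
                a[i], a[i + 1] = a[i + 1], a[i]
                swapped = True
        if not swapped:  # no swaps in this pass: already sorted
            break
    return a


records = [(3, "a"), (1, "b"), (3, "c"), (2, "d"), (1, "e")]
print(bubble_sort(records, key=lambda r: r[0]))
# [(1, 'b'), (1, 'e'), (2, 'd'), (3, 'a'), (3, 'c')]
```

Both 1s and both 3s come out in their input order (b before e, a before c).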

2. Selection sort

Selection sort picks the smallest remaining element for each position in turn: the smallest element for the first position, the smallest of the remaining elements for the second position, and so on up to the (n-1)th position. The nth element does not need to be selected, because it is the only (and largest) element left. The problem is the swap: if the smallest remaining element lies after an element equal to the one at the current position, swapping it into place moves the current element past its equal, which destroys stability.

An example makes this clearer. In the sequence 5 8 5 2 9, the first pass selects 2 and swaps it with the first 5, which moves that 5 behind the second 5. The relative order of the two 5s is destroyed, so selection sort is not a stable sorting algorithm.
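A minimal sketch of the 5 8 5 2 9 example, assuming (key, tag) tuples so the two 5s can be told apart; selection_sort and the tags are illustrative names.

```python
def selection_sort(items, key=lambda x: x):
    """Plain selection sort: swap the minimum of the unsorted suffix into position i."""
    a = list(items)
    n = len(a)
    for i in range(n - 1):
        m = i
        for j in range(i + 1, n):
            if key(a[j]) < key(a[m]):
                m = j
        # Long-distance swap: a[i] may jump over an element equal to it.
        a[i], a[m] = a[m], a[i]
    return a


seq = [(5, "a"), (8, "b"), (5, "c"), (2, "d"), (9, "e")]
print(selection_sort(seq, key=lambda r: r[0]))
# [(2, 'd'), (5, 'c'), (5, 'a'), (8, 'b'), (9, 'e')]
```

The first 5 (tag a) ends up after the second 5 (tag c), exactly the reordering described above.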

3. Insertion sort

Insertion sort inserts one element at a time into an already sorted prefix. At the beginning, this sorted prefix contains only one element, the first one. Each comparison starts from the end of the sorted prefix: the element to be inserted is first compared with the largest element already sorted. If it is larger, it is placed directly after that element; otherwise the search continues toward the front until the correct insertion position is found.

If the search encounters an element equal to the one being inserted, the new element is placed after that equal element.

Therefore the order of equal elements does not change: their order in the original unsorted sequence is also their order in the sorted output, so insertion sort is stable.
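A minimal sketch of this insertion rule, assuming (key, tag) records; insertion_sort is an illustrative name. The loop shifts only elements strictly greater than the one being inserted, so it stops at an equal element and inserts after it.

```python
def insertion_sort(items, key=lambda x: x):
    """Insertion sort into a growing sorted prefix a[0..i-1]."""
    a = list(items)
    for i in range(1, len(a)):
        current = a[i]
        j = i - 1
        # Scan from the end of the sorted prefix; shift only strictly greater elements.
        # Stopping at an equal element places `current` after it, which keeps the sort stable.
        while j >= 0 and key(a[j]) > key(current):
            a[j + 1] = a[j]
            j -= 1
        a[j + 1] = current
    return a


records = [(3, "a"), (1, "b"), (3, "c"), (2, "d"), (1, "e")]
print(insertion_sort(records, key=lambda r: r[0]))
# [(1, 'b'), (1, 'e'), (2, 'd'), (3, 'a'), (3, 'c')]
```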

4. Quick sort

Quick sort partitions the array with two indices moving toward each other. The pivot (center element) is usually taken as element 0 of the array; call its index center_index. The left index i moves right as long as a[i] <= a[center_index], and the right index j moves left as long as a[j] > a[center_index].

When neither i nor j can move and i < j, a[i] and a[j] are swapped, and the process repeats until i > j. Finally a[j] is swapped with a[center_index], which completes one partitioning pass. This final swap of the pivot with a[j] can easily break stability. For example, in the sequence 5 3 3 4 3 8 9 10 11, swapping the pivot 5 with the last 3 (the 5th element, counting from 1) moves that 3 in front of the other two 3s, so the relative order of the 3s is disrupted. Quick sort is therefore an unstable sorting algorithm; the instability arises when the pivot is swapped with a[j].
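The partition scheme above (pivot at index 0, i scanning right while a[i] <= pivot, j scanning left while a[j] > pivot) can be sketched as follows. Records are assumed to be (key, tag) tuples, and partition, quick_sort and by_key are illustrative names rather than a reference implementation.

```python
def by_key(record):
    return record[0]


def partition(a, lo, hi, key):
    """Partition a[lo..hi] around the pivot a[lo]; return the pivot's final index."""
    pivot = key(a[lo])
    i, j = lo + 1, hi
    while True:
        # Left index moves right while a[i] <= pivot.
        while i <= hi and key(a[i]) <= pivot:
            i += 1
        # Right index moves left while a[j] > pivot (it stops at lo at the latest).
        while key(a[j]) > pivot:
            j -= 1
        if i >= j:
            break
        a[i], a[j] = a[j], a[i]
    # The pivot swap that can move a[j] in front of elements equal to it.
    a[lo], a[j] = a[j], a[lo]
    return j


def quick_sort(items, key):
    a = list(items)

    def sort(lo, hi):
        if lo < hi:
            p = partition(a, lo, hi, key)
            sort(lo, p - 1)
            sort(p + 1, hi)

    sort(0, len(a) - 1)
    return a


seq = [(5, "a"), (3, "b"), (3, "c"), (4, "d"), (3, "e"),
       (8, "f"), (9, "g"), (10, "h"), (11, "i")]
first_pass = list(seq)
partition(first_pass, 0, len(first_pass) - 1, by_key)
print([tag for k, tag in first_pass if k == 3])  # ['e', 'b', 'c']

print(quick_sort([(2, "a"), (2, "b"), (1, "c")], by_key))
# [(1, 'c'), (2, 'b'), (2, 'a')]
```

After the first partition the last 3 (tag e) is in front of the other two. On this particular input the later recursive partitions happen to restore the original order of the 3s, so the second call uses a smaller input where the final output is visibly reordered.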

5. Merge sort

Merge sort recursively divides the sequence into shorter sequences. The recursion stops when a subsequence has 1 element (already sorted) or 2 elements (one comparison and, if needed, one swap). The sorted pieces are then merged into longer sorted sequences, and merging continues until the whole original sequence is sorted. With 1 element nothing is swapped, and with 2 equal elements no swap happens, so nothing deliberately reorders equal elements at this stage and stability is preserved.

Is stability destroyed while merging the short sorted sequences?

No. During a merge, whenever the two current elements are equal, we place the element from the earlier (left) sequence first in the result, which preserves stability. Therefore, merge sort is also a stable sorting algorithm.
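A minimal top-down merge sort sketch, assuming (key, tag) records; merge_sort is an illustrative name. The `<=` comparison in the merge step is the tie rule described above: on equal keys, the element from the left run goes first.

```python
def merge_sort(items, key=lambda x: x):
    """Top-down merge sort returning a new list."""
    items = list(items)
    if len(items) <= 1:
        return items
    mid = len(items) // 2
    left = merge_sort(items[:mid], key)
    right = merge_sort(items[mid:], key)
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        # '<=' takes from the left run on ties, preserving the original order.
        if key(left[i]) <= key(right[j]):
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged


records = [(3, "a"), (1, "b"), (3, "c"), (2, "d"), (1, "e")]
print(merge_sort(records, key=lambda r: r[0]))
# [(1, 'b'), (1, 'e'), (2, 'd'), (3, 'a'), (3, 'c')]
```

Changing `<=` to `<` would take the right element first on ties and make the sort unstable.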

6. Radix sort

Radix sort first distributes the elements by the lowest-order digit and collects them, then distributes them by the next higher digit and collects them again, and so on up to the highest-order digit. The same idea applies when records have keys with different priorities: sort by the low-priority key first, then by the high-priority key. The final order is by the high-priority key, and elements with the same high-priority key are ordered by the low-priority key. Because each pass distributes and collects the elements in their current order, each pass is stable, and radix sort is a stable sorting algorithm.
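A minimal least-significant-digit (LSD) radix sort sketch, assuming non-negative integer keys in base 10 and (key, tag) records; radix_sort and its base parameter are illustrative. Each pass appends elements to buckets in their current order and then concatenates the buckets, which is the "separate distribution and collection" that makes each pass stable.

```python
def radix_sort(items, key=lambda x: x, base=10):
    """LSD radix sort; assumes key(x) is a non-negative int."""
    a = list(items)
    if not a:
        return a
    max_key = max(key(x) for x in a)
    exp = 1  # weight of the digit examined in this pass (1, 10, 100, ...)
    while max_key // exp > 0:
        buckets = [[] for _ in range(base)]
        for x in a:
            # Appending in the current order keeps this pass stable.
            buckets[(key(x) // exp) % base].append(x)
        a = [x for bucket in buckets for x in bucket]  # collect bucket 0, 1, ..., base-1
        exp *= base
    return a


data = [(170, "a"), (45, "b"), (75, "c"), (90, "d"), (802, "e"),
        (24, "f"), (2, "g"), (66, "h"), (45, "i")]
print(radix_sort(data, key=lambda r: r[0]))
# [(2, 'g'), (24, 'f'), (45, 'b'), (45, 'i'), (66, 'h'), (75, 'c'), (90, 'd'), (170, 'a'), (802, 'e')]
```

The two 45s keep their input order (b before i). Later passes rely on the stability of earlier ones, which is why each pass must be stable for the result to be correct.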

7. Shell sort (Hill sort)

Shell sort performs insertion sort on elements that are a certain step size (gap) apart, using progressively smaller gaps. At the beginning, when the elements are very disordered, the gap is largest, so each insertion sort handles only a few elements and runs quickly.

Later, when the elements are nearly sorted and the gap is small, insertion sort is very efficient on the almost-ordered sequence. As a result, Shell sort's time complexity can be better than O(n^2), depending on the gap sequence. A single insertion sort is stable and does not change the relative order of equal elements. However, Shell sort runs several insertion sorts over different subsequences, and equal elements may move independently within their respective subsequences, so their relative order can end up scrambled. Shell sort is therefore unstable.
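A minimal sketch, assuming the simple gap sequence n/2, n/4, ..., 1 (Shell's original halving; other gap sequences exist) and (key, tag) records; shell_sort is an illustrative name. The small input shows two equal keys landing in different gap-2 subsequences and ending up reversed.

```python
def shell_sort(items, key=lambda x: x):
    """Shell sort with gaps len/2, len/4, ..., 1 (gapped insertion sort)."""
    a = list(items)
    gap = len(a) // 2
    while gap > 0:
        for i in range(gap, len(a)):
            current = a[i]
            j = i
            # Insertion sort within the subsequence of elements `gap` apart.
            while j >= gap and key(a[j - gap]) > key(current):
                a[j] = a[j - gap]
                j -= gap
            a[j] = current
        gap //= 2
    return a


seq = [(3, "a"), (2, "b"), (2, "c"), (1, "d")]
print(shell_sort(seq, key=lambda r: r[0]))
# [(1, 'd'), (2, 'c'), (2, 'b'), (3, 'a')]
```

With gap 2, the 2 tagged c moves to the front of its subsequence while the 2 tagged b moves to the back of the other one; the final gap-1 pass is stable but cannot undo that reversal.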

8. Heap sort

In a heap stored with 1-based indices, the children of node i are nodes 2i and 2i + 1. A max-heap requires each parent to be greater than or equal to its two children, and a min-heap requires each parent to be less than or equal to its two children. For a sequence of length n, heap sort starts at node n/2 and chooses the largest (max-heap) or smallest (min-heap) among that node and its children, 3 elements in total; choosing among these 3 elements does not by itself destroy stability. But when the same selection is repeated for nodes n/2 - 1, n/2 - 2, ..., 1, stability can be destroyed: the parent at n/2 may swap with one of its children, while the parent at n/2 - 1 does not swap with an equal element below it, and the relative order of those two equal elements is broken. Therefore, heap sort is not a stable sorting algorithm.
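A minimal max-heap sort sketch, assuming (key, tag) records. Note that it uses 0-based indices, so the children of node i are 2i + 1 and 2i + 2 (the text above uses 1-based indices). heap_sort and sift_down are illustrative names.

```python
def heap_sort(items, key=lambda x: x):
    """Ascending heap sort using a max-heap stored in a list (0-based indices)."""
    a = list(items)
    n = len(a)

    def sift_down(root, size):
        # Move a[root] down until it is >= both children within a[0..size-1].
        while True:
            child = 2 * root + 1
            if child >= size:
                return
            if child + 1 < size and key(a[child + 1]) > key(a[child]):
                child += 1
            if key(a[child]) <= key(a[root]):
                return
            a[root], a[child] = a[child], a[root]
            root = child

    # Build the heap from the last parent node down to the root.
    for start in range(n // 2 - 1, -1, -1):
        sift_down(start, n)
    # Repeatedly move the maximum to the end of the unsorted region.
    for end in range(n - 1, 0, -1):
        a[0], a[end] = a[end], a[0]
        sift_down(0, end)
    return a


seq = [(2, "a"), (2, "b"), (1, "c")]
print(heap_sort(seq, key=lambda r: r[0]))
# [(1, 'c'), (2, 'b'), (2, 'a')]
```

Here the reordering comes from the long-distance swaps between the root and the end of the array, which, like the parent/child swaps described above, can jump an element over its equals.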


The above is the detailed content of What are the stable sorting algorithms?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1392

1392

52

52

Complex experimental design issues in Kuaishou's two-sided market

Apr 15, 2023 pm 07:40 PM

Complex experimental design issues in Kuaishou's two-sided market

Apr 15, 2023 pm 07:40 PM

1. Background of the problem 1. Introduction to the two-sided market experiment The two-sided market, that is, a platform, includes two participants, producers and consumers, and both parties promote each other. For example, Kuaishou has a video producer and a video consumer, and the two identities may overlap to a certain extent. Bilateral experiment is an experimental method that combines groups on the producer and consumer sides. Bilateral experiments have the following advantages: (1) The impact of the new strategy on two aspects can be detected simultaneously, such as changes in product DAU and the number of people uploading works. Bilateral platforms often have cross-side network effects. The more readers there are, the more active the authors will be, and the more active the authors will be, the more readers will follow. (2) Effect overflow and transfer can be detected. (3) Help us better understand the mechanism of action. The AB experiment itself cannot tell us the relationship between cause and effect, only

How to filter and sort data in Vue technology development

Oct 09, 2023 pm 01:25 PM

How to filter and sort data in Vue technology development

Oct 09, 2023 pm 01:25 PM

How to filter and sort data in Vue technology development In Vue technology development, data filtering and sorting are very common and important functions. Through data filtering and sorting, we can quickly query and display the information we need, improving user experience. This article will introduce how to filter and sort data in Vue, and provide specific code examples to help readers better understand and use these functions. 1. Data filtering Data filtering refers to filtering out data that meets the requirements based on specific conditions. In Vue, we can pass comp

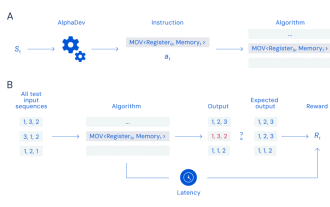

Google uses AI to break the ten-year ranking algorithm seal. It is executed trillions of times every day, but netizens say it is the most unrealistic research?

Jun 22, 2023 pm 09:18 PM

Google uses AI to break the ten-year ranking algorithm seal. It is executed trillions of times every day, but netizens say it is the most unrealistic research?

Jun 22, 2023 pm 09:18 PM

Organizing | Nuka-Cola, Chu Xingjuan Friends who have taken basic computer science courses must have personally designed a sorting algorithm - that is, using code to rearrange the items in an unordered list in ascending or descending order. It's an interesting challenge, and there are many possible ways to do it. A lot of time has been invested in figuring out how to accomplish sorting tasks more efficiently. As a basic operation, sorting algorithms are built into the standard libraries of most programming languages. There are many different sorting techniques and algorithms used in code bases around the world to organize large amounts of data online, but at least as far as the C++ libraries used with the LLVM compiler are concerned, the sorting code has not changed in more than a decade. Recently, the Google DeepMindAI team has now developed a

How to use radix sort algorithm in C++

Sep 19, 2023 pm 12:15 PM

How to use radix sort algorithm in C++

Sep 19, 2023 pm 12:15 PM

How to use the radix sort algorithm in C++ The radix sort algorithm is a non-comparative sorting algorithm that completes sorting by dividing the elements to be sorted into a limited set of digits. In C++, we can use the radix sort algorithm to sort a set of integers. Below we will discuss in detail how to implement the radix sort algorithm, with specific code examples. Algorithm idea The idea of the radix sorting algorithm is to divide the elements to be sorted into a limited set of digital bits, and then sort the elements on each bit in turn. Sorting on each bit is completed

How to implement the selection sort algorithm in C#

Sep 20, 2023 pm 01:33 PM

How to implement the selection sort algorithm in C#

Sep 20, 2023 pm 01:33 PM

How to implement the selection sort algorithm in C# Selection sort (SelectionSort) is a simple and intuitive sorting algorithm. Its basic idea is to select the smallest (or largest) element from the elements to be sorted each time and put it at the end of the sorted sequence. Repeat this process until all elements are sorted. Let's learn more about how to implement the selection sort algorithm in C#, along with specific code examples. Creating a selection sort method First, we need to create a method for implementing selection sort. This method accepts a

Swoole Advanced: How to use multi-threading to implement high-speed sorting algorithm

Jun 14, 2023 pm 09:16 PM

Swoole Advanced: How to use multi-threading to implement high-speed sorting algorithm

Jun 14, 2023 pm 09:16 PM

Swoole is a high-performance network communication framework based on PHP language. It supports the implementation of multiple asynchronous IO modes and multiple advanced network protocols. On the basis of Swoole, we can use its multi-threading function to implement efficient algorithm operations, such as high-speed sorting algorithms. The high-speed sorting algorithm (QuickSort) is a common sorting algorithm. By locating a benchmark element, the elements are divided into two subsequences. Those smaller than the benchmark element are placed on the left, and those greater than or equal to the benchmark element are placed on the right. Then the left and right subsequences are placed. subsequence recursion

What are the sorting algorithms for arrays?

Jun 02, 2024 pm 10:33 PM

What are the sorting algorithms for arrays?

Jun 02, 2024 pm 10:33 PM

Array sorting algorithms are used to arrange elements in a specific order. Common types of algorithms include: Bubble sort: swap positions by comparing adjacent elements. Selection sort: Find the smallest element and swap it to the current position. Insertion sort: Insert elements one by one to the correct position. Quick sort: divide and conquer method, select the pivot element to divide the array. Merge Sort: Divide and Conquer, Recursive Sorting and Merging Subarrays.

Discussion on application scenarios of different PHP array sorting algorithms

Apr 28, 2024 am 09:39 AM

Discussion on application scenarios of different PHP array sorting algorithms

Apr 28, 2024 am 09:39 AM

For different scenarios, it is crucial to choose the appropriate PHP array sorting algorithm. Bubble sort is suitable for small-scale arrays without stability requirements; quick sort has the lowest time complexity in most cases; merge sort has high stability and is suitable for scenarios that require stable results; selection sort is suitable for situations without stability requirements. Situation; Heap sort efficiently finds the maximum or minimum value. Through comparison of actual cases, quick sort is superior to other algorithms in terms of time efficiency, but merge sort should be chosen when stability needs to be considered.