How do you remove duplicates in Redis? This article introduces 4 methods of Redis deduplication. I hope it is helpful to you!

It first covers three methods for unique counting in Redis, based on SET, on bits, and on HyperLogLog, and then looks at Bloom filters.

Unique counting is a very common feature in website systems. For example, a website needs to count the number of unique visitors (UV) it receives each day. Counting problems are common, but they can be complicated to solve. First, the amount of data to count may be very large; a large site may be visited by millions of people every day. Second, it is usually desirable to count along more than one dimension: in addition to daily UV, you may also want weekly or monthly UV, which makes the calculation much more complicated.

In a system backed by a relational database, unique counting is done with select count(distinct

Redis solves this kind of counting problem easily. It is faster and consumes fewer resources than a relational database, and it even provides 3 different methods.

A Redis set stores a collection of unique elements. With it you can quickly determine whether an element exists in the set, quickly count the number of elements in the set, and merge sets into a new set. The commands involved are as follows:


    SISMEMBER key member   # check whether member exists in the set
    SADD key member        # add member to the set
    SCARD key              # get the number of elements in the set

The set-based method is simple and effective: counting is exact, it applies widely, and it is easy to understand. Its disadvantage is that it consumes a lot of resources (though far fewer than a relational database). If the number of elements is very large (such as hundreds of millions), the memory consumption becomes prohibitive.
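The set-based approach can be sketched in plain Python. This is only an in-memory stand-in that mirrors what SADD, SISMEMBER, SCARD, and a set union do, not a Redis client; the class name `VisitorSets` and the keys `uv:mon` and `uv:tue` are hypothetical.

```python
class VisitorSets:
    """In-memory stand-in for Redis sets (illustrative only, not a Redis client)."""

    def __init__(self):
        self._sets = {}

    def sadd(self, key, member):
        """Like SADD: return 1 if member was new, 0 if it was already present."""
        members = self._sets.setdefault(key, set())
        if member in members:
            return 0
        members.add(member)
        return 1

    def sismember(self, key, member):
        """Like SISMEMBER: report whether member is in the set stored at key."""
        return member in self._sets.get(key, set())

    def scard(self, key):
        """Like SCARD: return the number of elements in the set stored at key."""
        return len(self._sets.get(key, set()))

    def sunion(self, *keys):
        """Merge several sets into a new set, as a set union would."""
        merged = set()
        for key in keys:
            merged |= self._sets.get(key, set())
        return merged


store = VisitorSets()
for user_id in ["u1", "u2", "u1", "u3"]:   # u1 visits twice on Monday
    store.sadd("uv:mon", user_id)
for user_id in ["u2", "u4"]:
    store.sadd("uv:tue", user_id)

daily_uv = store.scard("uv:mon")                     # 3 unique visitors on Monday
two_day_uv = len(store.sunion("uv:mon", "uv:tue"))   # 4 unique visitors over both days
```

Keeping one set per day is what makes the wider dimensions cheap to express: a weekly or monthly UV is just the size of the union of the daily sets.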

Redis bit operations can implement counting that uses far less memory than a set. A single bit, 1 or 0, stores whether an element exists. For example, to count unique visitors to a website, user_id can be used as the bit offset, and the bit is set to 1 to indicate a visit. 1 MB of space can record one day of visits for more than 8 million users. The commands involved are as follows:

    SETBIT key offset value                 # set the bit at offset
    GETBIT key offset                       # get the bit at offset
    BITCOUNT key [start end]                # count the bits set to 1
    BITOP operation destkey key [key ...]   # merge bitmaps

The bit-based method consumes much less space than the set method, but it requires that elements can be mapped simply to bit offsets, so its applicable scope is much narrower. In addition, the space it consumes depends on the maximum offset, not on the count value. If the maximum offset is large, the memory consumption is also considerable.
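The bitmap idea, and the way its memory follows the largest offset rather than the count, can be illustrated with a small Python model. This is a sketch built on a `bytearray`, not Redis itself; the `Bitmap` class and `bitop_or` helper are hypothetical names that imitate SETBIT, GETBIT, BITCOUNT, and BITOP OR.

```python
class Bitmap:
    """In-memory model of a Redis string used as a bitmap (illustrative only)."""

    def __init__(self):
        self.data = bytearray()

    def setbit(self, offset, value):
        """Like SETBIT: set the bit at offset and return its previous value."""
        byte, bit = divmod(offset, 8)
        if byte >= len(self.data):
            # Grow with zero bytes up to the byte that holds this offset.
            self.data.extend(bytes(byte + 1 - len(self.data)))
        mask = 0x80 >> bit  # bit 0 is the most significant bit of the first byte
        old = 1 if self.data[byte] & mask else 0
        if value:
            self.data[byte] |= mask
        else:
            self.data[byte] &= ~mask & 0xFF
        return old

    def getbit(self, offset):
        """Like GETBIT: offsets beyond the stored data read as 0."""
        byte, bit = divmod(offset, 8)
        if byte >= len(self.data):
            return 0
        return 1 if self.data[byte] & (0x80 >> bit) else 0

    def bitcount(self):
        """Like BITCOUNT without a range: count the bits set to 1."""
        return sum(bin(b).count("1") for b in self.data)


def bitop_or(*bitmaps):
    """Like BITOP OR: merge bitmaps into a new one."""
    result = Bitmap()
    result.data = bytearray(max((len(b.data) for b in bitmaps), default=0))
    for bitmap in bitmaps:
        for i, byte in enumerate(bitmap.data):
            result.data[i] |= byte
    return result


monday, tuesday = Bitmap(), Bitmap()
for user_id in [1, 5, 5]:          # user 5 visits twice on Monday
    monday.setbit(user_id, 1)
for user_id in [5, 9]:
    tuesday.setbit(user_id, 1)

monday_uv = monday.bitcount()                       # 2
two_day_uv = bitop_or(monday, tuesday).bitcount()   # 3

# One visitor with a large id still costs about 1 MB,
# because the size follows the maximum offset, not the count.
sparse = Bitmap()
sparse.setbit(8_000_000, 1)
sparse_bytes = len(sparse.data)                     # 1_000_001
```

The last three lines show the drawback described above: a single visitor whose id is 8,000,000 occupies as much memory as 8 million visitors with consecutive ids.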

Exact unique counting over extremely large amounts of data is difficult, but if an approximation is enough, computer science offers many efficient algorithms, among which HyperLogLog counting is a well-known one. It can count hundreds of millions of unique elements using only about 12 KB of memory, with the error kept at about one percent. The commands involved are as follows:

    PFADD key element [element ...]   # add elements
    PFCOUNT key [key ...]             # count

This counting method is remarkable. It draws on statistical ideas such as uniform distribution, random probability, and the Bernoulli distribution. I have not fully understood it myself; if you are interested, you can dig into the relevant articles.
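For readers who want a feel for how such an estimate can work, here is a simplified textbook-style HyperLogLog in Python. It is a sketch under stated assumptions, not Redis's implementation: it uses SHA-1 as a stand-in hash, 2^14 = 16384 registers (the register count Redis uses, which at 6 bits each gives the roughly 12 KB mentioned above), and only the basic small-range correction. The class name `HyperLogLog` and the `user:<n>` element names are illustrative.

```python
import hashlib
import math


class HyperLogLog:
    """Simplified HyperLogLog estimator (illustrative, not Redis's implementation)."""

    def __init__(self, p=14):
        self.p = p                  # number of hash bits used to pick a register
        self.m = 1 << p             # number of registers (16384 when p = 14)
        self.registers = [0] * self.m

    def add(self, element):
        """Roughly what PFADD does for one element."""
        digest = hashlib.sha1(str(element).encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")        # treat as a 64-bit hash
        index = h >> (64 - self.p)                   # first p bits choose the register
        rest = h & ((1 << (64 - self.p)) - 1)        # remaining 64 - p bits
        # Rank: 1-based position of the leftmost 1-bit in the remaining bits.
        rank = (64 - self.p) - rest.bit_length() + 1
        if rank > self.registers[index]:
            self.registers[index] = rank

    def count(self):
        """Roughly what PFCOUNT returns: an estimate of the distinct elements."""
        m = self.m
        alpha = 0.7213 / (1 + 1.079 / m)             # bias constant for m >= 128
        raw = alpha * m * m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * m and zeros:
            # Small-range correction: fall back to linear counting.
            return round(m * math.log(m / zeros))
        return round(raw)


hll = HyperLogLog()
for i in range(100_000):
    hll.add(f"user:{i}")
for i in range(50_000):             # repeat visits should not change the estimate
    hll.add(f"user:{i}")

estimate = hll.count()
relative_error = abs(estimate - 100_000) / 100_000
```

Each register only remembers the longest run of leading zero bits it has seen, so adding the same element again never changes anything, and the memory used stays fixed no matter how many elements are added.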

The three unique counting methods provided by Redis each have their own advantages and disadvantages, and together they can meet counting requirements in different situations.

A Bloom filter stores data in a structure similar to a bitmap or bit set, using a bit array to represent a set concisely, and it can quickly determine whether an element already exists in the set. Although a Bloom filter is not 100% accurate, the error rate can be reduced by adjusting its parameters: the number of hash functions used and the size of the bit array. With suitable parameters the error rate can be brought close to 0, which is enough for most scenarios.
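To make the effect of those parameters concrete, the commonly quoted approximation for the false positive rate of a Bloom filter is (1 - e^(-kn/m))^k, where n is the number of inserted elements, m the number of bits, and k the number of hash functions. The helper names below are hypothetical, and the formula is the standard approximation rather than something taken from this article.

```python
import math


def false_positive_rate(n, m, k):
    """Approximate false positive rate for n elements, m bits, k hash functions."""
    return (1.0 - math.exp(-k * n / m)) ** k


def optimal_k(n, m):
    """Number of hash functions that roughly minimizes the rate: (m / n) * ln 2."""
    return max(1, round(m / n * math.log(2)))


n = 1_000_000                      # elements expected in the set
small_m, large_m = 10 * n, 20 * n  # 10 and 20 bits per element

k_small = optimal_k(n, small_m)    # 7
k_large = optimal_k(n, large_m)    # 14
rate_small = false_positive_rate(n, small_m, k_small)
rate_large = false_positive_rate(n, large_m, k_large)
```

With about 10 bits per element the approximate rate is already below 1%, and doubling the bit array lowers it by roughly two more orders of magnitude.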

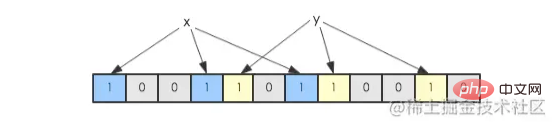

Suppose there is a set S = {x1, x2, … xn}. A Bloom filter uses k independent hash functions to map each element of the set into the range {1,…,m}. For any element, each number it is mapped to is used as an index into the bit array, and that bit is set to 1. For example, if element x1 is mapped to the number 8 by a hash function, then the 8th bit of the bit array is set to 1. In the figure, the set S has only two elements, x and y, each mapped by three hash functions. The mapped positions are (0, 3, 6) and (4, 7, 10) respectively, and the corresponding bits are set to 1.

Now, to determine whether another element is in this set, map it with the same three hash functions and check whether any of the corresponding positions holds a 0. If one does, the element is definitely not in the set; otherwise it might be.
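The add and lookup steps described above can be sketched as a minimal Python Bloom filter. This is an illustration only: salted SHA-256 digests stand in for the k independent hash functions, positions run from 0 to m - 1, and the class and method names are hypothetical rather than the API of the Redis plug-in.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter with m bits and k hash functions (illustrative only)."""

    def __init__(self, m=1024, k=3):
        self.m = m
        self.k = k
        self.bits = bytearray((m + 7) // 8)

    def _positions(self, item):
        # Derive k positions by salting one hash with the function index.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, item):
        """Set the k bits that the item maps to."""
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        """False means definitely absent; True means possibly present."""
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


bloom = BloomFilter(m=1024, k=3)
bloom.add("x")
bloom.add("y")

has_x = bloom.might_contain("x")   # True: added elements are never reported absent
has_z = bloom.might_contain("z")   # almost certainly False with so few bits set
```

A lookup can only err in one direction: an element that was added always finds all of its bits set, while an element that was never added is reported as possibly present only if every one of its positions happens to collide with bits set by other elements.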

Redis needs a plug-in installed before it can use Bloom filters: https://blog.csdn.net/u013030276/article/details/88350641.
