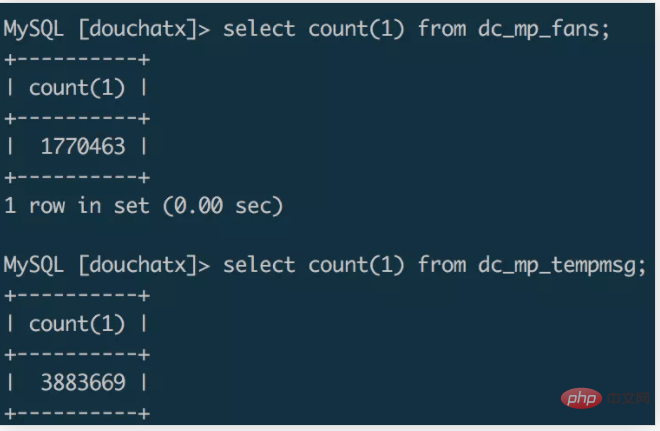

A record of migrating a million rows of MySQL data

Suppose there is a scenario like this: a mini program is developed and, riding the popularity of the Double Eleven event, quickly accumulates more than one million users in a month. We added tracking points to the mini program pages to collect formids, which are used to send template message notifications to WeChat users.

As the amount of data grew, the original server started to run short of space. I had recently written a new framework specifically for mini program backend development, so I wanted to migrate the original data to the new system's database. I bought a 4-core 8G machine and started the data migration. Here is a simple record of the migration process.

Plan selection

mysqldump migration

In normal development, the data backup and migration method we use most often is to export a sql file with the mysqldump tool and then import that sql file into the new database.

Experiments showed that exporting a million-row database to a sql file with mysqldump takes a few minutes, and the exported file is about 1 GB. Copying the 1 GB sql file to another server with the scp command may take a few more minutes. I then used the source command to import the data into the database on the new server. It ran all night without finishing the import, and the CPU was maxed out.

Script migration

Exporting and importing data by operating the database directly from the command line is convenient, but when the amount of data is large it is often time-consuming and demands more of the server. If the migration is not time-critical, you can try writing a script to migrate the data. Although I haven't actually tried it, I think there are probably two scripting solutions.

The first method is to run a migration script on the target server that connects remotely to the database on the source server, reads the source data in chunks by setting query conditions, and writes each chunk to the target database after reading it. This method may be relatively inefficient: export and import form a synchronous process, so each write has to wait until its read has finished. If the query conditions are designed well, several migration scripts can also be started in a multi-threaded manner, each handling its own range of rows, to migrate in parallel.

The second method combines redis to build a "producer and consumer" migration solution. The source server acts as the data producer: a multi-threaded script on the source server reads the database in parallel and writes the data to a redis queue. The target server acts as the consumer: a multi-threaded script on the target server connects remotely to redis, reads the data from the redis queue in parallel, and writes it to the target database. Compared with the first method, this is an asynchronous solution. Data export and data import can run at the same time, and with redis as the transfer station the efficiency improves considerably.
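The first method can be sketched roughly as follows. This is only an illustration under stated assumptions: two in-memory sqlite3 databases stand in for the source and target MySQL servers so that the sketch runs without any setup, and the `fans` table, its columns, and the helper names `make_db` and `copy_in_chunks` are hypothetical. The query condition used for chunking is `id > last_id`, so each read continues where the previous chunk ended.

```python
import sqlite3


def make_db(row_count):
    """Create an in-memory stand-in database with a hypothetical fans table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fans (id INTEGER PRIMARY KEY, openid TEXT, nickname TEXT)"
    )
    conn.executemany(
        "INSERT INTO fans (id, openid, nickname) VALUES (?, ?, ?)",
        [(i, f"openid-{i}", f"user-{i}") for i in range(1, row_count + 1)],
    )
    conn.commit()
    return conn


def copy_in_chunks(src, dst, chunk_size=1000):
    """Read the source in id-ordered chunks and write each chunk to the target."""
    last_id = 0
    total = 0
    while True:
        rows = src.execute(
            "SELECT id, openid, nickname FROM fans "
            "WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, chunk_size),
        ).fetchall()
        if not rows:
            break  # no rows left to migrate
        dst.executemany(
            "INSERT INTO fans (id, openid, nickname) VALUES (?, ?, ?)", rows
        )
        dst.commit()  # one transaction per chunk
        last_id = rows[-1][0]  # the next chunk starts after the last id read
        total += len(rows)
    return total


if __name__ == "__main__":
    source = make_db(2500)
    target = make_db(0)
    print(copy_in_chunks(source, target, chunk_size=1000))
```

Chunking on the primary key rather than on an offset keeps every read an index range scan, and it gives a natural way to split the work: parallel copies of the script could each be given a disjoint id range.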
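The shape of this producer and consumer pipeline can be sketched as below. It is a simplification under loud assumptions: Python's in-process `queue.Queue` stands in for the redis queue, a plain list stands in for the target database, there is a single producer rather than several, and all function names are hypothetical. A real version would push batches to redis from the source server and pop them on the target server.

```python
import queue
import threading

SENTINEL = None  # marker telling a consumer that no more batches will arrive


def producer(rows, q, batch_size):
    """Split the source rows into batches and put them on the queue."""
    for start in range(0, len(rows), batch_size):
        q.put(rows[start:start + batch_size])


def consumer(q, sink, lock):
    """Take batches off the queue and write them to the stand-in target."""
    while True:
        batch = q.get()
        if batch is SENTINEL:
            break
        with lock:  # the list stands in for the target database
            sink.extend(batch)


def migrate(rows, workers=4, batch_size=500):
    """Run one producer and several consumers; return the migrated rows."""
    q = queue.Queue(maxsize=16)  # bounded, so the producer cannot run far ahead
    sink = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=consumer, args=(q, sink, lock))
        for _ in range(workers)
    ]
    for t in threads:
        t.start()
    producer(rows, q, batch_size)
    for _ in threads:
        q.put(SENTINEL)  # one stop marker per consumer
    for t in threads:
        t.join()
    return sink


if __name__ == "__main__":
    data = [(i, f"openid-{i}") for i in range(1, 2001)]
    print(len(migrate(data)))
```

Because the consumers run concurrently, rows arrive at the target in no particular order, which is fine for a table migration where each row carries its own primary key.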

You can also write the migration scripts in Go here. Its native concurrency features make it straightforward to migrate data in parallel and improve migration efficiency.

File Migration

The first migration solution is too inefficient, and the second has a higher coding cost. After comparing them and analyzing the information I found online, I finally chose to use MySQL's

select data into outfile file.txt, load data infile file.txt into table

commands, which complete the migration of millions of rows by exporting and importing a file.

Migration process

Export the data file from the source database

select * from dc_mp_fans into outfile '/data/fans.txt';

Copy the data file to the target server

zip fans.zip /data/fans.txt
scp fans.zip root@ip:/data/

Import the file into the target database

unzip -j /data/fans.zip -d /data/
load data infile '/data/fans.txt' into table wxa_fans(id,appid,openid,unionid,@dummy,created_at,@dummy,nickname,gender,avatar_url,@dummy,@dummy,@dummy,@dummy,language,country,province,city,@dummy,@dummy,@dummy,@dummy,@dummy,@dummy,@dummy,@dummy,@dummy);

Following these steps, the cross-server migration of a million-row table completes in a few minutes.

Notes

- MySQL security settings

Executing the load data infile and into outfile commands in MySQL requires that the secure_file_priv option permits it. You can use show global variables like '%secure%'; to check the current setting. A value of NULL means that import and export commands are not allowed to be executed.

Edit the MySQL configuration with vim /etc/my.cnf and set the value of secure_file_priv to empty:

[mysqld]
secure_file_priv=''

After restarting MySQL so that the new setting takes effect, you can import and export data files through these commands.

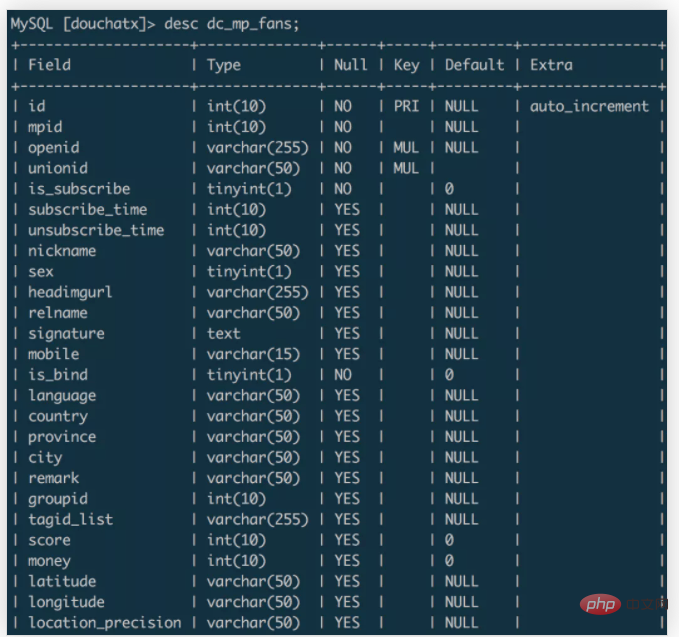

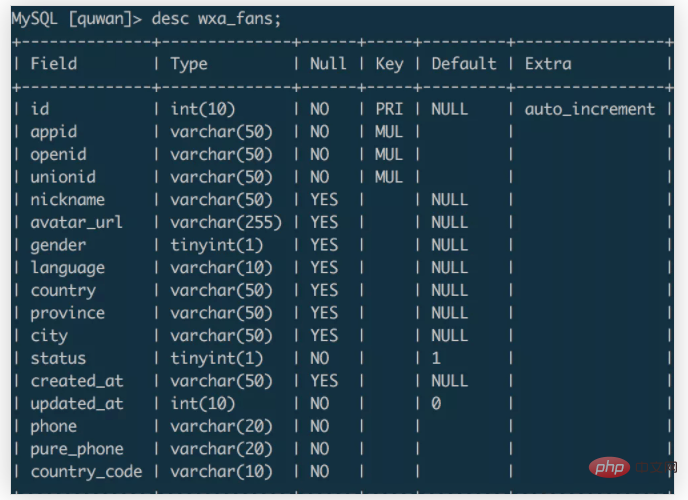
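How the three kinds of secure_file_priv value behave can be summarized in a small sketch. The function name `file_io_allowed` is hypothetical, and the directory check is a simplification: it treats any path under the configured directory as allowed and does not resolve symbolic links, so it only approximates what the server does.

```python
import os


def file_io_allowed(secure_file_priv, path):
    """Approximate whether MySQL would allow file import/export at `path`.

    secure_file_priv is None for NULL, "" for empty, or a directory path.
    """
    if secure_file_priv is None:
        return False  # NULL: import and export are disabled
    if secure_file_priv == "":
        return True  # empty: no restriction on the location
    base = os.path.normpath(secure_file_priv)
    target = os.path.normpath(path)
    # Directory: the file has to live under the configured directory.
    return os.path.commonpath([base, target]) == base


if __name__ == "__main__":
    for value in (None, "", "/var/lib/mysql-files"):
        print(repr(value), file_io_allowed(value, "/data/fans.txt"))
```

Setting the option to empty, as done in this migration, removes the restriction entirely. Pointing it at a dedicated directory is the more conservative choice if the server stays in use afterwards.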

- The fields of the source and target tables do not correspond

In the above example, the data is migrated from the dc_mp_fans table of the source database to the wxa_fans table of the target database. The fields of the two data tables are:

dc_mp_fans

wxa_fans

When importing data, you can list the target field names in the order of the columns in the data file, so that each value lands in the matching target field. Columns in the file that the target table does not need can be discarded through @dummy.
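Writing a long column list with many @dummy entries by hand is error-prone, so it can help to generate it. The sketch below builds the list from the source column order and a source-to-target name mapping. The helper names and the source column names in the example are hypothetical, since the real field lists of dc_mp_fans are not reproduced here.

```python
def build_load_columns(source_columns, column_map):
    """Return the load data column list, in the order of the exported file.

    Source columns without an entry in column_map are discarded via @dummy.
    """
    return ",".join(column_map.get(name, "@dummy") for name in source_columns)


def build_load_statement(path, table, source_columns, column_map):
    """Assemble a load data infile statement for the given file and table."""
    columns = build_load_columns(source_columns, column_map)
    return f"load data infile '{path}' into table {table}({columns});"


if __name__ == "__main__":
    # Hypothetical source column order and mapping, for illustration only.
    source_columns = ["id", "app_id", "open_id", "form_id", "create_time"]
    column_map = {
        "id": "id",
        "app_id": "appid",
        "open_id": "openid",
        "create_time": "created_at",
    }
    print(
        build_load_statement(
            "/data/fans.txt", "wxa_fans", source_columns, column_map
        )
    )
```

The position of each entry is what matters: the n-th name in the list receives the n-th column of every line in the file, regardless of what the column was called in the source table.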

Summary

Based on this data migration experience, the summary is:

- Small amounts of data can be imported and exported using the mysqldump command. This method is simple and convenient.

- When the amount of data is large and the migration is not urgent, you can write your own script and choose an appropriate parallel solution to migrate the data. This method has higher coding costs.

- When the amount of data is large and you want to complete the data migration in a short time, you can migrate it through mysql import and export files, which is more efficient.
