With this technique, git clone can be dozens of times faster!

Have you ever worked on a large project where git clone was very slow or even failed? How did you deal with it?

You might switch to a different download source or find some way to speed up your connection, but what if you have tried all of that and it is still slow?

I ran into this problem today. I needed to download the TypeScript code from GitHub, but it was very slow:

git clone https://github.com/microsoft/TypeScript ts

After a long wait the download still had not finished, so I added a parameter:

git clone https://github.com/microsoft/TypeScript --depth=1 ts

The clone became dozens of times faster and finished almost instantly.

With --depth=1, only the most recent commit is downloaded, so there is far less data to transfer and the clone is much faster.

You can still add new commits and create new branches on top of the downloaded content, so subsequent development is not affected. However, you cannot switch to historical commits or historical branches.
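To see the effect without depending on network speed, here is a minimal sketch that builds a throwaway local repository and compares a full clone with a --depth=1 clone. The directory names, the file name, and the five-commit history are made up for illustration, and the script assumes git is installed. The file:// URL is deliberate: Git ignores --depth when cloning from a plain local path.

```shell
#!/bin/sh
set -eu

# Hypothetical identity so that commits work on a machine with no git config.
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)

# Build a source repository with five commits.
git init -q "$work/src"
for i in 1 2 3 4 5; do
  echo "revision $i" > "$work/src/file.txt"
  git -C "$work/src" add file.txt
  git -C "$work/src" commit -q -m "commit $i"
done

# Clone once with full history and once with only the latest commit.
git clone -q "file://$work/src" "$work/full"
git clone -q --depth=1 "file://$work/src" "$work/shallow"

full_count=$(git -C "$work/full" rev-list --count HEAD)
shallow_count=$(git -C "$work/shallow" rev-list --count HEAD)
echo "full clone:    $full_count commits"
echo "shallow clone: $shallow_count commit"

rm -rf "$work"
```

On a tiny repository like this the time difference is negligible; the point is only that the shallow clone contains one commit where the full clone contains all five.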

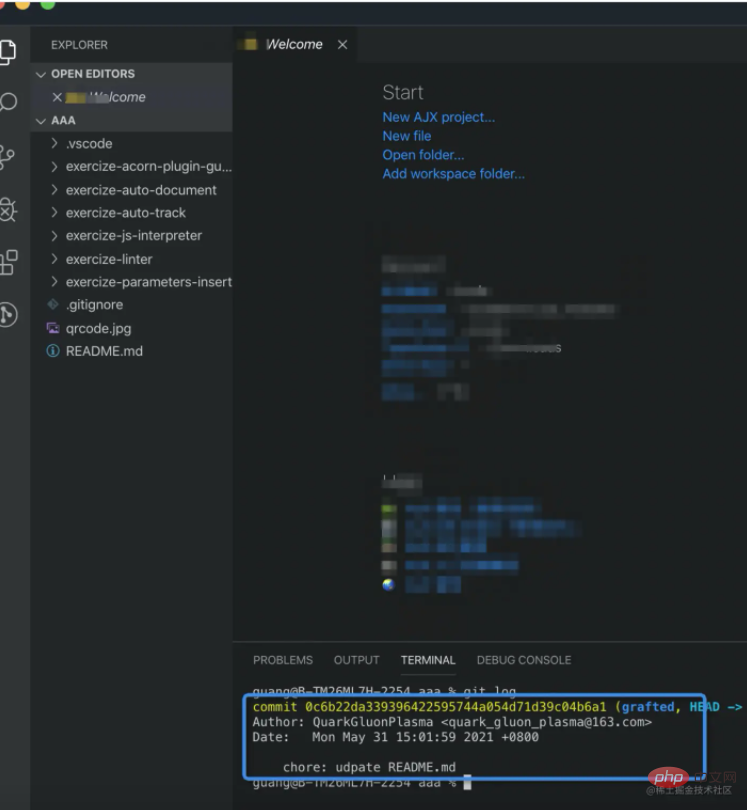

I tested this with one of my own projects. First I downloaded a single commit:

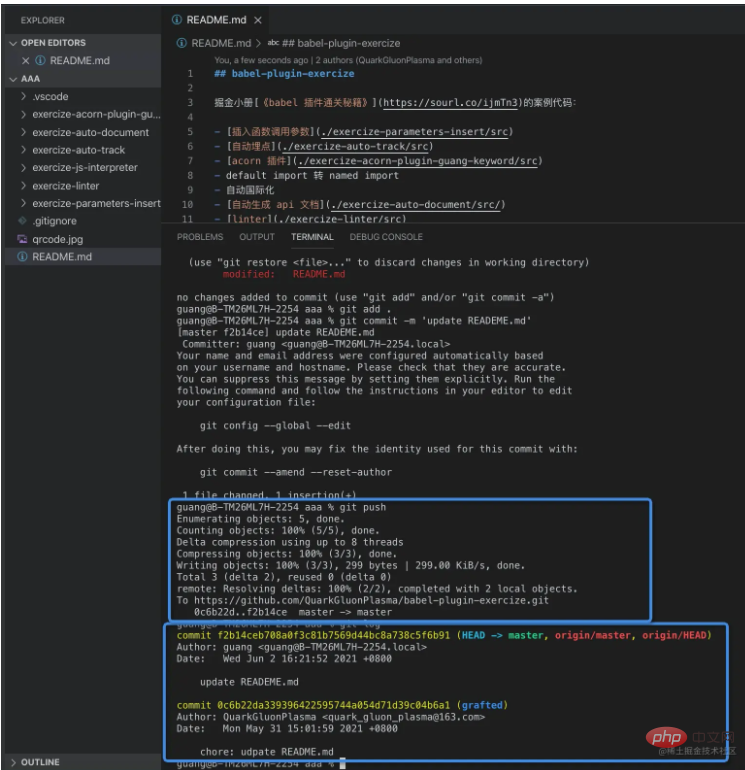

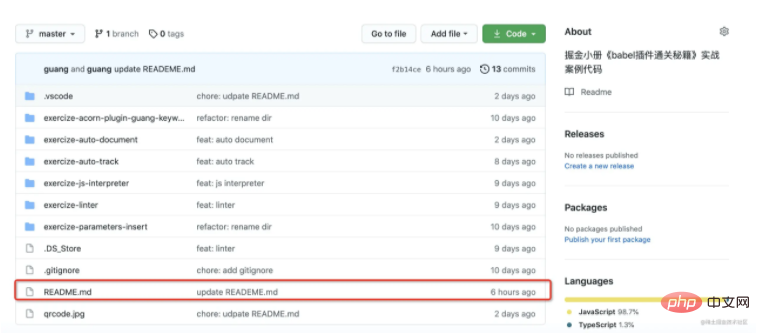

Then I made some changes and ran git add, commit, and push. Everything was submitted normally.

Creating a new branch and pushing it also works normally. The only disadvantage is that you cannot switch to historical commits and historical branches.

The technique is still useful in some of those scenarios: if you need to switch to an older commit, you can work out how many commits back it is and specify that depth, which is still faster than a full clone.
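As a rough sketch of choosing a depth, the script below clones the last two commits of a throwaway five-commit repository and then fetches more history afterwards. The repository layout is hypothetical; `git fetch --deepen` and `git fetch --unshallow` are standard Git options that the article itself does not cover, shown here as one possible way to extend a shallow clone when the first guess at the depth was too small.

```shell
#!/bin/sh
set -eu

# Hypothetical identity so that commits work on a machine with no git config.
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)

# Source repository with a linear history of five commits.
git init -q "$work/src"
for i in 1 2 3 4 5; do
  echo "revision $i" > "$work/src/file.txt"
  git -C "$work/src" add file.txt
  git -C "$work/src" commit -q -m "commit $i"
done

# Download only the two most recent commits.
git clone -q --depth=2 "file://$work/src" "$work/clone"
before=$(git -C "$work/clone" rev-list --count HEAD)

# Extend the shallow history by two more commits.
git -C "$work/clone" fetch -q --deepen=2
after=$(git -C "$work/clone" rev-list --count HEAD)

# Or give up on shallowness and fetch everything that is missing.
git -C "$work/clone" fetch -q --unshallow
all=$(git -C "$work/clone" rev-list --count HEAD)

echo "commits after clone: $before, after deepen: $after, after unshallow: $all"

rm -rf "$work"
```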

Have you ever thought about why this works?

How Git works

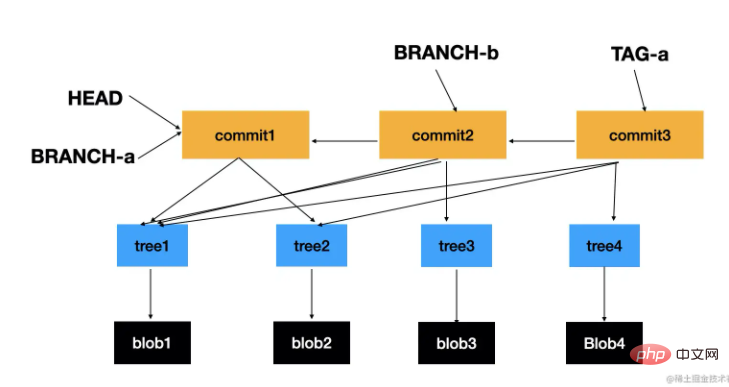

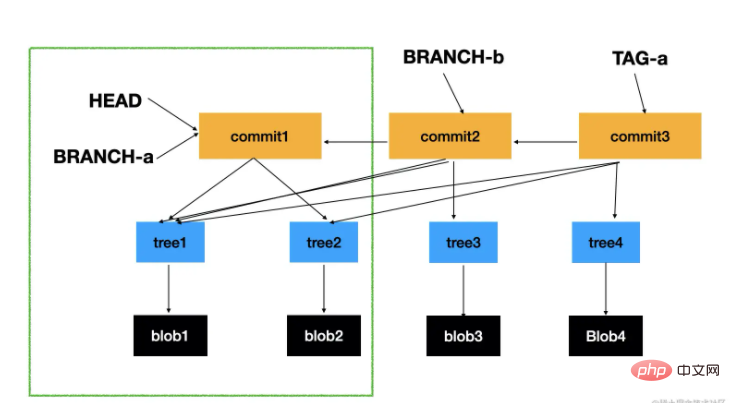

Git stores information in a few kinds of objects:

- blob objects store file content

- tree objects store file paths

- commit objects store commit information and point to a tree

Starting from one commit as the entry point, all of its associated trees and blobs make up the content of that commit.

Commits are linked to each other, and HEAD, branches, tags, and so on are pointers to specific commits. Branches and tags can be seen under .git/refs, while HEAD itself lives in .git/HEAD. In this way, concepts such as branches and tags are built on top of commits.

Git implements version management and branch switching functions through these three objects. All objects can be seen under .git/objects.

That is the principle behind Git.

The main things to understand are the three object types (blob, tree, and commit) and refs such as HEAD, tags, branches, and remotes.

Why a single commit can be downloaded

We know that Git reaches every object through a commit as the entry point, so if we don't need the history, we can naturally download just one commit.
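The object types described above can be inspected directly with `git cat-file`. The following is a minimal sketch on a throwaway repository with a single file; the file name hello.txt and its content are invented for the example.

```shell
#!/bin/sh
set -eu

# Hypothetical identity so that commits work on a machine with no git config.
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

repo=$(mktemp -d)
git init -q "$repo"
echo "hello" > "$repo/hello.txt"
git -C "$repo" add hello.txt
git -C "$repo" commit -q -m "first commit"

# Resolve the commit, the tree it points to, and the blob for the file.
commit=$(git -C "$repo" rev-parse HEAD)
tree=$(git -C "$repo" rev-parse 'HEAD^{tree}')
blob=$(git -C "$repo" rev-parse HEAD:hello.txt)

# cat-file -t prints the type of an object.
commit_type=$(git -C "$repo" cat-file -t "$commit")
tree_type=$(git -C "$repo" cat-file -t "$tree")
blob_type=$(git -C "$repo" cat-file -t "$blob")
echo "$commit_type / $tree_type / $blob_type"

# cat-file -p prints the content of an object.
git -C "$repo" cat-file -p "$commit"   # tree hash, author, committer, message
git -C "$repo" cat-file -p "$tree"     # one entry: mode, type, blob hash, file name
blob_content=$(git -C "$repo" cat-file -p "$blob")
echo "$blob_content"

# The current branch is a ref that resolves to the same commit.
branch_ref=$(git -C "$repo" symbolic-ref HEAD)
branch_target=$(git -C "$repo" rev-parse "$branch_ref")
echo "$branch_ref -> $branch_target"

rm -rf "$repo"
```

The chain is visible in the output: the branch ref resolves to the commit, the commit names a tree, and the tree names the blob that holds the file content.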

A new commit can still be created on top of that commit, with its own new blobs and trees. The historical commits, trees, and blobs, however, were never downloaded, so you cannot switch back to them, nor to the tags, branches, and other pointers that refer to them. This is why we can download a single commit and still create new branches and commits.
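The sketch below checks this on a throwaway repository: after a --depth=1 clone, the parent of the downloaded commit is missing from the object store, yet a new commit can still be added on top. The repository layout is hypothetical, and `git rev-parse --is-shallow-repository` assumes a reasonably recent Git (2.15 or later).

```shell
#!/bin/sh
set -eu

# Hypothetical identity so that commits work on a machine with no git config.
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

work=$(mktemp -d)

# Source repository with three commits.
git init -q "$work/src"
for i in 1 2 3; do
  echo "revision $i" > "$work/src/file.txt"
  git -C "$work/src" add file.txt
  git -C "$work/src" commit -q -m "commit $i"
done

git clone -q --depth=1 "file://$work/src" "$work/shallow"
is_shallow=$(git -C "$work/shallow" rev-parse --is-shallow-repository)

# The parent of the latest commit exists in the source but was never downloaded.
parent=$(git -C "$work/src" rev-parse HEAD~1)
if git -C "$work/shallow" cat-file -e "$parent" 2>/dev/null; then
  parent_present=yes
else
  parent_present=no
fi

# New work can still be committed on top of the single downloaded commit.
echo "local change" > "$work/shallow/file.txt"
git -C "$work/shallow" add file.txt
git -C "$work/shallow" commit -q -m "new commit on shallow clone"
new_count=$(git -C "$work/shallow" rev-list --count HEAD)

echo "shallow: $is_shallow, parent present: $parent_present, commits: $new_count"

rm -rf "$work"
```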

Summary

When you encounter a large Git project, adding the --depth parameter can greatly speed up the clone. The more history the project has, the bigger the improvement.

The downloaded project can still be used for subsequent development: you can create new commits, new branches, and tags, but you cannot switch to historical commits, branches, or tags.

We also went over how Git works: files and commit information are stored in three kinds of objects (tree, blob, and commit), and features such as branches and tags are built on the links between commits. A commit is the entry point that leads to all of its trees and blobs.

When we download a commit, we download all its associated trees, blobs, and some refs (including tags, branches, etc.). This is the principle of --depth.

I hope this technique helps you speed up git clone on large projects when you don't need to switch to historical commits and branches.
