What is the difference between Docker containers and traditional virtualization?

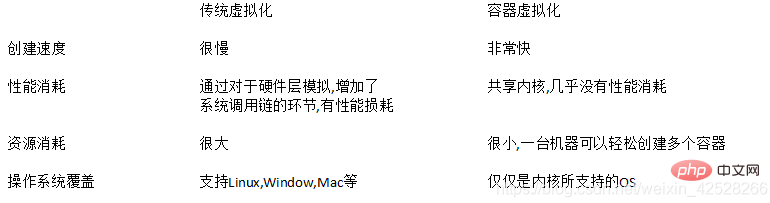

Differences: 1. Traditional virtual machines are slow to create, while containers are created very quickly; 2. Traditional virtualization adds extra layers to the system call chain, which costs performance, while containers share the host kernel and incur almost no performance loss; 3. Traditional virtualization supports multiple guest operating systems, while container virtualization only supports operating systems that the host kernel supports.

Environment used for this tutorial: linux7.3 system, Docker 1.13.1, Dell G3 computer.

Traditional virtualization technology

Virtualization means using virtualization technology to turn one physical computer into multiple logical computers. Multiple logical computers can run simultaneously on one machine. Each logical computer can run a different operating system, and applications run in independent spaces without affecting each other, which significantly improves the computer's efficiency.

As hardware has developed, many instructions in a virtual machine no longer need to pass through a virtual hardware layer to reach the real hardware. Hardware manufacturers support instructions that let the virtual machine operate the hardware directly. This technology is called hardware-assisted virtualization. Compared with software virtualization, hardware-assisted virtualization does not need to simulate all of the hardware: some instructions issued in the virtual machine run directly on the hardware, so performance and efficiency are higher than with purely software-based virtualization.
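As a small illustration of hardware-assisted virtualization, the sketch below checks x86 CPU flags for it. On Linux, Intel VT-x appears as the `vmx` flag and AMD-V as the `svm` flag in `/proc/cpuinfo`. The function name `hw_virt_support` and the sample text are made up for this example, and the flag can be absent inside a virtual machine even when the physical CPU supports it.

```python
def hw_virt_support(cpuinfo_text):
    """Return "Intel VT-x", "AMD-V", or None based on x86 CPU flags."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


# Hypothetical excerpt; on a Linux host, read the real text from /proc/cpuinfo.
SAMPLE_CPUINFO = "processor\t: 0\nflags\t\t: fpu vme de pse vmx sse2\n"

if __name__ == "__main__":
    print(hw_virt_support(SAMPLE_CPUINFO))
```

On a real host, passing the contents of `/proc/cpuinfo` to the function gives the answer for that machine.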

System-level virtualization

Features:

No need to simulate the hardware layer.

Shares the kernel of the same host.

The difference between traditional virtualization and container virtualization

Core container technologies

1. CGroup limits the resource usage of containers.

2. Namespace isolates containers from one another.

3. chroot provides file system isolation.

CGroup:

A Linux kernel mechanism for limiting, accounting for, and isolating the resources used by groups of processes. It was proposed by Google engineers and later merged into the kernel. Different subsystems control and record the usage of different resources.
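To show how subsystems attach to a process, the sketch below parses text in the format of `/proc/<pid>/cgroup`, where each line reads `hierarchy-ID:controller-list:cgroup-path`. The function name `parse_cgroup` and the sample paths are invented for illustration; this is a minimal sketch, not how Docker itself reads cgroup data.

```python
def parse_cgroup(text):
    """Map each cgroup controller (subsystem) to the cgroup path of a process.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path".
    On cgroup v2 the controller list is empty, so the key "" is used.
    """
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split at most twice: the path itself may contain colons.
        _hierarchy_id, controllers, path = line.split(":", 2)
        for controller in controllers.split(","):
            result[controller] = path
    return result


# Hypothetical sample; on a Linux host, real data comes from /proc/self/cgroup.
SAMPLE_CGROUP = """\
4:memory:/docker/abc123
3:cpu,cpuacct:/docker/abc123
0::/system.slice/docker.service
"""

if __name__ == "__main__":
    for controller, path in parse_cgroup(SAMPLE_CGROUP).items():
        print(controller or "(v2 unified)", "->", path)
```

Each key in the result is one subsystem (for example `memory` or `cpu`), which matches the idea that separate subsystems track separate resources.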

Namespace:

pid: Each container has its own independent process table, with its own process ID 1.

net: Each container has its own independent network stack.

ipc: For inter-process communication (IPC), additional information is added to identify the process.

mnt: Each container has its own set of directory mounts.

uts: Each container has its own hostname and domain name.
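On Linux, the namespaces a process belongs to are visible as symbolic links under `/proc/<pid>/ns`, with targets such as `pid:[4026531836]`. Two processes share a namespace when the number in brackets matches. The sketch below reads those links; the helper names `parse_ns_link` and `read_namespaces` are made up for this example, and the second function simply returns an empty dictionary where `/proc` is not available.

```python
import os


def parse_ns_link(target):
    """Split a link target such as "pid:[4026531836]" into (type, inode)."""
    ns_type, _, rest = target.partition(":")
    return ns_type, int(rest.strip("[]"))


def read_namespaces(pid="self"):
    """Return {entry name: inode} for a process, or {} if /proc/<pid>/ns is unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, /proc not mounted, or no permission
    for name in names:
        try:
            _ns_type, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or parsed
        namespaces[name] = inode
    return namespaces


if __name__ == "__main__":
    for name, inode in read_namespaces().items():
        print(f"{name}: {inode}")
```

Running this once on the host and once inside a container would typically show different numbers for entries such as `pid`, `net`, `ipc`, `mnt`, and `uts`.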

chroot:

A directory on the host becomes the root directory inside the container.
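The mapping described above can be modelled as plain path arithmetic. The sketch below translates a path as seen inside the container to the corresponding host path; the function name `to_host_path` and the example directory are hypothetical, and the model is simplified in that it ignores symbolic links and does not call `chroot` itself.

```python
import posixpath


def to_host_path(container_root, path_in_container):
    """Translate an absolute path seen inside the container to the host path.

    container_root is the host directory that acts as "/" in the container.
    ".." components cannot climb above "/", so the result always stays
    inside container_root.
    """
    # normpath on an absolute path collapses "..", so "/../etc" becomes "/etc".
    normalized = posixpath.normpath("/" + path_in_container.lstrip("/"))
    if normalized == "/":
        return container_root
    return posixpath.join(container_root, normalized.lstrip("/"))


if __name__ == "__main__":
    root = "/var/lib/demo/rootfs"  # hypothetical host directory
    print(to_host_path(root, "/etc/hostname"))
    print(to_host_path(root, "/../../etc/passwd"))
```

Both calls resolve to paths under the chosen root, which is the file system isolation that chroot is meant to provide.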

All applications have their own dependencies, which include both software and hardware resources. Docker is an open platform for developers that isolates dependencies by packaging each application into a container. Containers are like lightweight virtual machines that can scale to thousands of nodes, and they improve cloud portability by letting the same application run in different virtual environments. Virtual machines are widely used in cloud computing to achieve isolation and resource control. A virtual machine loads a complete operating system with its own memory management, making applications more efficient and secure while ensuring their high availability.

What is the difference between Docker containers and virtual machines?

A virtual machine has a complete operating system, with its own memory management supported by the associated virtual devices. Resources are allocated among the guest operating systems and the hypervisor, allowing multiple instances of one or more operating systems to run in parallel on a single computer (the host). Each guest operating system runs as a single entity within the host system.

Docker containers, on the other hand, are executed by the Docker engine rather than a hypervisor. Because they share the host kernel, containers are smaller than virtual machines and start faster, with better performance, weaker isolation, and better compatibility. Containers can share a kernel and application libraries, so they have lower system overhead than virtual machines. As long as users are willing to settle on a single platform that provides the shared operating system, containers are faster and use fewer resources. A virtual machine can take minutes to create and start, whereas a container can take just seconds. Applications running in containers perform better than the same applications running in virtual machines.

Isolation is one of the key areas where Docker containers are weaker than virtual machines. Intel's VT-d and VT-x technologies give virtual machines ring -1 hardware isolation, and virtual machines take full advantage of it. It helps virtual machines use resources efficiently and prevents them from interfering with each other. Docker containers, by contrast, have no form of hardware isolation, which makes them more vulnerable to attacks.

How to choose?

Whether to choose containers or virtual machines depends on how the application is designed. If the application is designed for scalability and high availability, containers are the best choice; otherwise the application can be placed in a virtual machine. For workloads with high I/O requirements, such as database services, it is recommended to deploy Docker on physical machines, because when Docker runs inside a virtual machine, I/O performance is limited by the virtual machine. For workloads that emphasize tenant permissions and security, such as virtual desktop services, virtual machines are recommended. The strong multi-tenant isolation of virtual machines ensures that even when tenants have root permissions on their own virtual machine, other tenants and the host remain safe.

A better option may be a hybrid solution, with containers running inside virtual machines. The virtual machines provide the containers with proven isolation, security properties, mobility, dynamic virtual networking, and more. To achieve both safe isolation and high resource utilization, the basic idea is to use virtual machines to isolate the workloads of different tenants, and to deploy workloads of a similar type on the same set of containers.
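The rules of thumb above can be written down as a small decision helper. This is only a sketch: the function name `recommend_deployment`, its parameters, and in particular the order in which the rules are checked are assumptions made for illustration, not something the text prescribes.

```python
def recommend_deployment(needs_scaling_and_ha, high_io, tenants_need_root):
    """Return a deployment suggestion following the rules of thumb in the text.

    The priority order of the checks is an assumption of this sketch.
    """
    if tenants_need_root:
        # Strong multi-tenant isolation matters most: use virtual machines.
        return "virtual machine"
    if high_io:
        # Avoid the I/O limits of a VM layer: run Docker on physical machines.
        return "Docker on a physical machine"
    if needs_scaling_and_ha:
        return "container"
    return "virtual machine"


if __name__ == "__main__":
    # Example: a database service with high I/O and no tenant root access.
    print(recommend_deployment(needs_scaling_and_ha=False, high_io=True,
                               tenants_need_root=False))
```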

Conclusion

Docker containers are becoming an important tool in DevOps environments, where they have many use cases. Running applications in Docker containers and deploying them anywhere (in the cloud, on-premises, or on any flavor of Linux) is now a reality.

In heterogeneous environments, virtual machines provide a high degree of flexibility, while Docker containers focus mainly on applications and their dependencies. Docker containers make it easy to port application stacks across clouds by relying on each cloud's virtual machine environment to handle the cloud-specific details. Without Docker containers, this useful capability would have to be implemented in a more complex and tedious way. The point here is not to give up virtual machines, but to use Docker containers alongside virtual machines where the situation calls for it. Docker containers are not expected to replace virtual machines entirely.
