[Painstakingly compiled] 28 interview questions about PHP core technology to help you change jobs!

This article compiles 28 interview questions about PHP core technology to give you a deeper understanding of it and help you avoid pitfalls during interviews. It is worth bookmarking and studying if you are planning to change jobs. I hope it is helpful to everyone!

![[Hematemesis] 28 interview questions about PHP core technology to help you change jobs!](https://img.php.cn/upload/article/000/000/024/620b1b39ed9c9914.jpg)

1 What is OOP?

Answer: OOP is object-oriented programming, a way of structuring computer programs. Its basic principle is that a program is composed of individual units, or objects, each of which can act like a subroutine.

OOP has three major characteristics:

1. Encapsulation: also known as information hiding. It separates the use of a class from its implementation and exposes only some interfaces and methods to the outside world. Developers therefore only need to know how to use the class, not how it is implemented. This supports division of labor (as in MVC), avoids interdependence between parts of a program, and achieves loose coupling between code modules.

2. Inheritance: subclasses automatically inherit the properties and methods of their parent class, and can add new properties and methods or override existing ones. Inheritance increases code reusability. PHP only supports single inheritance, which means that a subclass can have only one parent class.

3. Polymorphism: subclasses inherit properties and methods from the parent class and override some of the methods. So although several subclasses have a method with the same name, objects instantiated from those subclasses can produce completely different results when that method is called. This technique is polymorphism.

Polymorphism enhances the flexibility of software.
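The three characteristics above can be seen together in a small sketch. The class names `Shape`, `Rectangle` and `Circle` are illustrative assumptions, not part of the original answer: the private properties show encapsulation, `extends` shows inheritance, and the overridden `area()` method shows polymorphism.

```php
<?php
// Hypothetical classes used only to illustrate the three OOP characteristics.
abstract class Shape
{
    // Each subclass supplies its own implementation (polymorphism).
    abstract public function area(): float;

    // Inherited unchanged by every subclass (inheritance).
    public function describe(): string
    {
        return static::class . ' with area ' . number_format($this->area(), 2);
    }
}

class Rectangle extends Shape
{
    // Private state is hidden from callers (encapsulation).
    private $width;
    private $height;

    public function __construct(float $width, float $height)
    {
        $this->width = $width;
        $this->height = $height;
    }

    public function area(): float
    {
        return $this->width * $this->height;
    }
}

class Circle extends Shape
{
    private $radius;

    public function __construct(float $radius)
    {
        $this->radius = $radius;
    }

    public function area(): float
    {
        return pi() * $this->radius * $this->radius;
    }
}

// The same call, describe(), gives a different result for each subclass.
$lines = [];
foreach ([new Rectangle(2, 3), new Circle(1)] as $shape) {
    $lines[] = $shape->describe();
}
```

The calling loop never needs to know which concrete class it is dealing with, which is the flexibility the answer refers to.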

OOP has the following advantages:

1. Easy to maintain

A structure designed with object-oriented thinking is highly readable. Thanks to inheritance, even when requirements change, maintenance is confined to a local module, so it is convenient and cheap.

2. High quality

When designing, existing classes that have already been tested in previous projects can be reused, so the system meets business needs and is of high quality.

3. High efficiency

When developing software, things in the real world are abstracted into classes according to the needs of the design. Solving problems this way is close to everyday, natural thinking, which improves the efficiency and quality of software development.

4. Easy to expand

Because of inheritance, encapsulation, and polymorphism, the design naturally ends up with high cohesion and low coupling, which makes the system more flexible, easier to extend, and cheaper.

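2 There are several ways to merge two arrays. Compare their similarities and differences.

Methods:

1. array_merge()

2. The + operator

3. array_merge_recursive()

Similarities and differences:

array_merge(): a simple merge. For identical string keys the later value overwrites the earlier one; numeric keys are renumbered and the values appended.

array_merge_recursive(): merges two arrays, but when both contain the same string key the values are collected into an array (recursively) instead of being overwritten.

The + operator: a union of the two arrays. When a key exists in both, the value from the left-hand array is kept, and numeric keys are not renumbered.

array_combine() is sometimes listed alongside these, but it does not merge: it builds a new array that uses the values of the first array as keys and the values of the second array as values.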
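The differences are easiest to see by running the four operations on the same input. The sample arrays below are made up for illustration.

```php
<?php
// Two sample arrays sharing the string key 'x' and the numeric key 0.
$a = ['x' => 1, 0 => 'a'];
$b = ['x' => 2, 0 => 'b'];

// Later string key wins; numeric keys are renumbered and appended.
$merged = array_merge($a, $b);

// Union: the left-hand value is kept for every duplicate key.
$union = $a + $b;

// Duplicate string keys are collected into a nested array.
$recursive = array_merge_recursive($a, $b);

// Not a merge: the first array supplies keys, the second supplies values.
$combined = array_combine(['k1', 'k2'], [10, 20]);
```

Here `$merged` is `['x' => 2, 0 => 'a', 1 => 'b']`, `$union` is `['x' => 1, 0 => 'a']`, `$recursive` is `['x' => [1, 2], 0 => 'a', 1 => 'b']` and `$combined` is `['k1' => 10, 'k2' => 20]`.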

3 PHP's is_writeable() function has a bug: it cannot accurately determine whether a directory or file is writable. Write a function that determines whether a directory or file is really writable.

Answer: The bug shows up in two situations.

1. On Windows, is_writeable() only looks at the read-only attribute: it returns false when the file is marked read-only, but when it returns true the file is still not necessarily writable.

If the target is a directory, create a new file in it and check whether that file can be opened;

If it is a file, test whether it is writable by opening it with fopen().

2. On Unix, when safe_mode is enabled in the PHP configuration file (safe_mode=on), is_writeable() is also unreliable.

Read the configuration to see whether safe_mode is turned on.

/**
 * Tests for file writability
 *
 * is_writable() returns TRUE on Windows servers when you really can't write to
 * the file, based on the read-only attribute. is_writable() is also unreliable
 * on Unix servers if safe_mode is on.
 *
 * @param string $file path of the file or directory to test
 * @return bool
 */
if ( ! function_exists('is_really_writable'))
{
    function is_really_writable($file){
        // If we're on a Unix server with safe_mode off we call is_writable
        if (DIRECTORY_SEPARATOR == '/' && @ini_get("safe_mode") == FALSE){
            return is_writable($file);
        }
        // For windows servers and safe_mode "on" installations we'll actually
        // write a file then read it. Bah...
        if (is_dir($file)){
            $file = rtrim($file, '/').'/'.md5(mt_rand(1,100).mt_rand(1,100));
            // 'ab' (append, binary) stands in for the FOPEN_WRITE_CREATE constant,
            // which this snippet does not define
            if (($fp = @fopen($file, 'ab')) === FALSE){
                return FALSE;
            }
            fclose($fp);
            // 0777 stands in for the DIR_WRITE_MODE constant, also not defined here
            @chmod($file, 0777);
            @unlink($file);
            return TRUE;
        } elseif ( ! is_file($file) || ($fp = @fopen($file, 'ab')) === FALSE) {
            return FALSE;
        }
        fclose($fp);
        return TRUE;
    }
}

4 What is the garbage collection mechanism of PHP?

PHP manages memory automatically and clears objects that are no longer needed. Its basic garbage collection mechanism is reference counting.

Each object contains a reference counter. Every reference that points to the object increments the counter by 1. When a reference goes out of scope or is set to NULL, the counter is decremented by 1. When the counter reaches zero, PHP knows the object is no longer needed and releases the memory it occupies. Since PHP 5.3 a supplementary cycle collector also frees groups of objects that only reference each other, which pure reference counting cannot reclaim.
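A destructor makes the moment of release visible. In this minimal sketch the `Tracked` class and its static log are assumptions made for the demonstration; the object is destroyed only when the last variable referring to it is cleared.

```php
<?php
// Hypothetical class that records when its destructor runs.
class Tracked
{
    public static $log = [];
    private $name;

    public function __construct(string $name)
    {
        $this->name = $name;
    }

    public function __destruct()
    {
        self::$log[] = "destroyed {$this->name}";
    }
}

$first  = new Tracked('A'); // one reference to the object
$second = $first;           // two references to the same object

$first = null;              // one reference left: the object stays alive
$destroyedAfterFirstNull = count(Tracked::$log);

$second = null;             // no references left: the destructor runs now
$destroyedAfterSecondNull = count(Tracked::$log);
```

`$destroyedAfterFirstNull` is 0 and `$destroyedAfterSecondNull` is 1, matching the counter behaviour described above.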

5 Write a function to retrieve the file extension from a standard URL as efficiently as possible,

For example: http://www.startphp.cn /abc/de/fg.php?id=1 needs to remove php or .php

<?php

// 方案一

function getExt1($url){

$arr = parse_url($url);

//Array ( [scheme] => http [host] => www.startphp.cn [path] => /abc/de/fg.php [query] => id=1 )

$file = basename($arr['path']);

$ext = explode('.', $file);

return $ext[count($ext)-1];

}

// 方案二

function getExt2($url){

$url = basename($url);

$pos1 = strpos($url,'.');

$pos2 = strpos($url,'?');

if (strstr($url,'?')) {

return substr($url,$pos1+1,$pos2-$pos1-1);

} else {

return substr($url,$pos1);

}

}

$path = "http://www.startphp.cn/abc/de/fg.php?id=1";

echo getExt1($path);

echo "<br />";

echo getExt2($path);

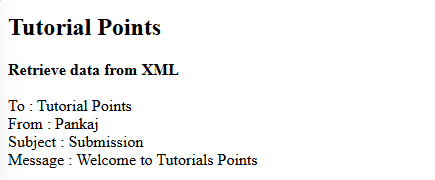

?>6 Use regular expressions to extract a piece of markup language (html or xml)

The specified attribute value of the specified tag in the code segment (it needs to be considered that the attribute value is irregular, such as case insensitivity, there is a space between the attribute name value and the equal sign, etc.). It is assumed here that the attr attribute value of the test tag needs to be extracted. Please construct a string containing the tag yourself (Tencent)

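as follows:

<?php
header("content-type:text/html;charset=utf-8");
function getAttrValue($str,$tagName,$attrName){
    // Each attribute: whitespace, name, optional spaces around "=", an optional
    // quote, the value, then the same quote again (backreference).
    $pattern1='/<'.preg_quote($tagName,'/')
        .'(\s+\w+\s*=\s*([\'"]?)([^\'"]*)(\2))*'                         // attributes before the target
        .'\s+'.preg_quote($attrName,'/').'\s*=\s*([\'"]?)([^\'"]*)(\5)'  // target attribute, value in group 6
        .'(\s+\w+\s*=\s*([\'"]?)([^\'"]*)(\9))*\s*>/i';                   // attributes after the target
    $arr=array();
    $re=preg_match($pattern1,$str,$arr);
    if($re){
        echo"<br/>\$arr[6]={$arr[6]}";
    }else{
        echo"<br/>Not found.";
    }
}
// Examples
$str1="<test attr='ddd'>";
getAttrValue($str1,"test","attr");// find the value of attr in the test tag; the result is ddd
$str2="<test2 attr='ddd' attr2='ddd2' t1=\"t1 value\" t2='t2 value'>";
getAttrValue($str2,"test2","t1");// find the value of t1 in the test2 tag; the result is t1 value
?>

7 How does web file upload work in PHP, and how do you limit the size of uploaded files?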

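The upload form uses the POST method and must add enctype='multipart/form-data' to the form element.

A hidden field is usually added: <input type=hidden name='MAX_FILE_SIZE' value=dddddd>, placed before the file field.

Its value is the client-side limit, in bytes, on the uploaded file. It spares users from waiting for a large upload only to discover that the file is too big and the upload has failed.

A file field is used to select the file to upload. After the submit button is clicked, the file is uploaded to a temporary directory on the server and is destroyed when the script ends, so before the script ends it should be moved to a directory on the server with move_uploaded_file(). Information about the temporary file is available in $_FILES.

The factors that limit upload size are:

The value of the client-side hidden field MAX_FILE_SIZE (it can be bypassed).

The server-side settings upload_max_filesize, post_max_size and memory_limit. These have to be set in the server configuration rather than by the uploading script.

Custom size-limit logic. Even if you control the server limits yourself, some cases need individual treatment, so this kind of limit is often necessary.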
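The custom limit mentioned last can be sketched as a small check on one `$_FILES` entry. The function name `checkUpload`, its return strings and the field name `doc` are assumptions for illustration; the `UPLOAD_ERR_*` constants are PHP's own.

```php
<?php
// Hypothetical helper: validates one $_FILES-style entry against a custom limit.
function checkUpload(array $file, int $maxBytes): string
{
    switch ($file['error']) {
        case UPLOAD_ERR_OK:
            break;
        case UPLOAD_ERR_INI_SIZE:  // larger than upload_max_filesize
        case UPLOAD_ERR_FORM_SIZE: // larger than the MAX_FILE_SIZE hidden field
            return 'too large';
        default:
            return 'upload failed';
    }

    // Application-specific limit, checked on the server where it cannot be bypassed.
    if ($file['size'] > $maxBytes) {
        return 'too large';
    }

    return 'ok';
}

// In a real handler (assumed field name "doc"), once checkUpload() returns 'ok':
// move_uploaded_file($_FILES['doc']['tmp_name'], $targetPath);
```

Passing the entry in as a plain array keeps the check independent of the web server, so it can be exercised with hand-built arrays.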

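8 Explain the difference between passing by value and passing by reference in PHP. When should each be used?

Pass by value: any change made to the value inside the function is ignored outside the function.

Pass by reference: any change made to the value inside the function is also visible outside the function.

Pros and cons: when passing by value, PHP has to copy the value, which can be expensive for large strings and arrays (the engine defers the copy until the value is actually modified, and objects are passed as handles, so they are not copied). Passing by reference needs no copy, which helps performance. Use pass by reference when the function must modify the caller's variable, and pass by value otherwise.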
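A short sketch of the difference, with two illustrative functions (`appendByValue` and `appendByReference` are made-up names) that differ only in the `&` on the parameter:

```php
<?php
// Receives a copy: the caller's array is untouched.
function appendByValue(array $list, string $item): void
{
    $list[] = $item;
}

// Receives a reference: the caller's array is modified.
function appendByReference(array &$list, string $item): void
{
    $list[] = $item;
}

$tags = ['php'];

appendByValue($tags, 'mysql');
$afterValueCall = $tags;        // still ['php']

appendByReference($tags, 'redis');
$afterReferenceCall = $tags;    // now ['php', 'redis']
```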

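9 What is the main difference between the varchar and char field types in MySQL?

varchar is variable length and saves storage space; char is fixed length. Lookups on char are faster: because varchar is not fixed length, the length has to be read first and the data extracted afterwards, one more step than for the fixed-length char type, so it is slightly less efficient.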

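10 How is staticization implemented? How is pseudo-static implemented?

1. Staticization means page staticization: real static files are generated, so the data can be served straight from a file without querying the database. This is "true static".

There are two main ways to implement it. One is to generate the static file when the information is added to the database, also known as template replacement. The other is to check, when a user visits a page, whether a corresponding cache file exists: if it does, read the cache; if not, read the database and generate the cache file at the same time.

2. Pseudo-static is not staticization in the true sense. It is used mainly for SEO: search engines have more difficulty crawling dynamic URLs, which hurts the promotion of the site.

It is implemented with the rewrite feature of Apache or Nginx.

There are two main ways:

One is to configure the rewrite rules directly in the virtual host configuration; the web server has to be restarted after every change.

The other is distributed configuration: create an .htaccess file in the site root and put the rewrite rules there. Changes take effect without restarting the web server, and the structure is clearer.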
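The second way of generating true static pages (serve the cache file if it exists, otherwise build the page and write the file) can be sketched as follows. The function name `renderWithFileCache`, the `$build` callback standing in for the database query plus template rendering, and the expiry time are all assumptions for illustration.

```php
<?php
// Hypothetical helper: returns cached HTML if a fresh cache file exists,
// otherwise builds the page, stores it, and returns it.
function renderWithFileCache(string $cacheFile, callable $build, int $ttl = 300): string
{
    // Cache hit: the file exists and is younger than $ttl seconds.
    if (is_file($cacheFile) && time() - filemtime($cacheFile) < $ttl) {
        return file_get_contents($cacheFile);
    }

    // Cache miss: build the page (this is where the database would be queried)
    // and write the static file for later requests.
    $html = $build();
    file_put_contents($cacheFile, $html, LOCK_EX);

    return $html;
}
```

Called twice with the same file name, the callback runs only on the first call; the second request is answered from the file without touching the database.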

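11 How do you handle load and high concurrency?

1. HTML staticization

Purely static HTML pages are the most efficient and consume the least, so use static pages for as much of the site as possible. The simplest method is also the most effective.

2. Separate image servers

Store images separately to reduce the cost of heavy traffic such as images. They can be placed on a dedicated platform such as Qiniu.

3. Database clustering, table hashing and caching

A database accepts only a limited number of concurrent connections (the figure given here is 100), so one database is far from enough. Start with read/write splitting, master-slave replication and database clustering. Also reduce database access as much as possible, for example with a cache such as memcache or redis.

4. Mirroring:

Reduce downloads as much as possible and distribute different requests to multiple mirrors.

5. Load balancing:

A single Apache server handles at most about 1,500 concurrent connections; beyond that you can only add servers. You can start with hardware, such as an F5 load balancer, but hardware is expensive, so we usually start from the software side.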

12 What are the new features of PHP 7?

Scalar type declarations: parameter type declarations in PHP 7 can now be scalar types. In PHP 5 they could only be class names, interfaces, array, or callable (since PHP 5.4; that is, a function, including anonymous functions). Now string, int, float and bool can be used as well.

Return type declarations: support for declaring return types was added. Like a parameter type declaration, a return type declaration specifies the type of the function's return value. The available types are the same as those available for parameters.

Null coalescing operator (??): because ternary expressions are so often combined with isset() in everyday code, the ?? operator was added. It returns its first operand if that exists and is not NULL; otherwise it returns its second operand.

Group use declarations: classes, functions and constants imported from the same namespace can now be imported together with a single use statement.

Anonymous classes: an anonymous class can now be instantiated with new class.
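A compact sketch of three of these features. The function `add`, the `$query` array and the anonymous greeter are illustrative assumptions.

```php
<?php
// Scalar parameter types and a return type declaration.
function add(int $a, int $b): int
{
    return $a + $b;
}

// Null coalescing operator: replaces isset($x) ? $x : $default.
$query = ['page' => '2'];
$page = $query['page'] ?? '1';   // key exists, so its value is used
$size = $query['size'] ?? '20';  // key missing, so the default is used

// Anonymous class created with "new class".
$greeter = new class {
    public function greet(string $name): string
    {
        return "Hello, $name";
    }
};

$sum = add(2, 3);
$greeting = $greeter->greet('PHP');
```

A group use declaration has the form `use App\Models\{User, Order};`, where the namespace and class names are again only placeholders.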

13 Common PHP security attacks

SQL injection:

Users enter SQL fragments into form fields to alter the SQL that is normally executed.

Prevention: use prepared statements with bound parameters. Parameterized SQL means that, wherever values or data need to be filled in when accessing the database, a parameter is supplied instead, written with a placeholder such as @ or ?. Also check manually that each piece of data has the correct data type. If values must be escaped by hand, use mysqli_real_escape_string(); the older mysql_real_escape_string() was removed in PHP 7.

XSS attack: cross-site scripting, where the user enters data into your website that includes client-side script (usually JavaScript). If you output that data to another web page without filtering, the script will be executed.

Prevention: to prevent XSS attacks, escape the data with PHP's htmlentities() (or htmlspecialchars()) function before outputting it to the browser.

CSRF: cross-site request forgery is a request that appears to come from a trusted user of the website but is actually forged.

Prevention: in general, make sure the request comes from your own form, and match every form you send out. Two things to remember: apply appropriate security measures to user sessions, such as regenerating the session ID for each session and using SSL; and generate a one-time token, embed it in the form, save it in the session (a session variable), and check it on submit. Laravel's _token field is an example.

Code injection: code injection exploits a vulnerability in the way a program processes invalid data. The problem arises when you unintentionally execute arbitrary code, usually via file inclusion. Poorly written code can allow a remote file to be included and executed. Like many PHP functions, require accepts a URL as well as a file name.

Prevention: filter user input, and disable allow_url_fopen and allow_url_include in php.ini. This prevents require/include/fopen from loading remote files.
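Two of the defences above can be sketched with core PHP functions only. The helper names `escapeHtml`, `issueCsrfToken` and `checkCsrfToken` are assumptions, and a plain array stands in for `$_SESSION` so the sketch runs outside a web request.

```php
<?php
// XSS: escape untrusted data before writing it into HTML.
function escapeHtml(string $value): string
{
    return htmlspecialchars($value, ENT_QUOTES, 'UTF-8');
}

// CSRF: create a random one-time token and remember it in the session.
// $session stands in for $_SESSION (an assumption for this sketch).
function issueCsrfToken(array &$session): string
{
    $session['_token'] = bin2hex(random_bytes(32));
    return $session['_token'];
}

// CSRF: compare the submitted token with the stored one, then discard it
// so that each token can be used only once.
function checkCsrfToken(array &$session, string $submitted): bool
{
    $expected = $session['_token'] ?? '';
    unset($session['_token']);

    // hash_equals() compares in constant time to avoid timing leaks.
    return $expected !== '' && hash_equals($expected, $submitted);
}

$session = [];
$token = issueCsrfToken($session);            // embed this in a hidden form field
$safe = escapeHtml('<script>alert(1)</script>');
```

On submit, the form handler would call `checkCsrfToken()` with the posted value and reject the request when it returns false.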

14 What are the object-oriented features?

Mainly encapsulation, inheritance, and polymorphism. If four are asked for, add abstraction.

Encapsulation:

Encapsulation is the basis for good modularity in software components. Its goal is high cohesion and low coupling, so that changes do not ripple through the program because of interdependencies.

Inheritance:

When defining and implementing a class, you can build on an existing class, use what it defines as your own, add new content, or modify the original methods to suit special needs. This is inheritance. Inheritance is a mechanism by which subclasses automatically share the data and methods of the parent class; it is a relationship between classes that improves the reusability and extensibility of software.

Polymorphism:

Polymorphism means that the concrete type a reference variable points to, and the method invoked through that variable, are not determined at programming time but only while the program runs. That is, which class's instance a reference variable points to, and which class implements the method being called, can only be decided at run time.

Abstraction:

Abstraction means finding the similarities and commonalities of some things and then grouping those things into a class. The class considers only those similarities and commonalities, ignores aspects that are irrelevant to the current topic and goal, and focuses on the aspects that are relevant. For example, if you see an ant and an elephant and can picture what they have in common, that is abstraction.

15 What are the methods for optimizing SQL statements? (Select a few)

(1) In the WHERE clause: join conditions between tables should be written before the other WHERE conditions, and the conditions that filter out the largest number of records should be written at the end of the WHERE clause. Use HAVING last.

(2) Replace IN with EXISTS and NOT IN with NOT EXISTS.

(3) Avoid using calculations on index columns

(4) Avoid using IS NULL and IS NOT NULL on index columns

(5) When optimizing queries, try to avoid full table scans; first consider creating indexes on the columns used in WHERE and ORDER BY.

(6) Try to avoid NULL checks on fields in the WHERE clause, which can cause the engine to give up the index and perform a full table scan.

(7) Try to avoid expression operations on fields in the WHERE clause, which will cause the engine to give up the index and perform a full table scan.

16 A MySQL database is used as the storage for a publishing system. It grows by more than 50,000 records per day and is expected to run for three years. How would you optimize it?

(1) Design a sound database structure, allow some data redundancy, and avoid join queries where possible to improve efficiency.

(2) Select appropriate field data types and storage engines, and add indexes where appropriate.

(3) Set up MySQL master-slave replication with read/write splitting.

(4) Split large tables to reduce the amount of data in a single table and improve query speed.

(5) Add a caching mechanism, such as redis or memcached.

(6) For pages that do not change frequently, generate static pages (for example with ob output buffering).

(7) Write efficient SQL. For example, change SELECT * FROM TABLE to SELECT field_1, field_2, field_3 FROM TABLE.

17 For a website with high traffic, what method do you use to solve the problem of statistics of page visits?

(1) Confirm whether the server can support the current traffic.

(2) Optimize database access.

(3) Prohibit hotlinking from external sites, such as hotlinking of images.

(4) Control file download.

(5) Do load balancing and use different hosts to offload traffic.

(6) Use browsing statistics software to understand the number of visits and perform targeted optimization.

18 Describe your understanding of the difference between the MyISAM and InnoDB engines in MySQL.

InnoDB and MyISAM are the two table types most commonly used with MySQL. Each has its own advantages and disadvantages, depending on the application. The basic difference is that MyISAM does not support transactions and similar advanced features, while InnoDB does. MyISAM emphasizes performance and is often faster than InnoDB, but provides no transaction support; InnoDB provides transactions and advanced database functions such as foreign keys.

The following are some details and specific implementation differences:

What is the difference between MyISAM and InnoDB?

1. Storage structure

MyISAM: Each MyISAM table is stored on disk as three files. The file names begin with the table name, and the extension indicates the file type: the .frm file stores the table definition, the data file has the extension .MYD (MYData), and the index file has the extension .MYI (MYIndex).

InnoDB: All tables are stored in the same data file (or several files, or independent tablespace files). The size of an InnoDB table is limited only by the operating system's maximum file size, which was typically 2GB on older systems.

2. Storage space

MyISAM: It can be compressed and takes less storage space. It supports three storage formats: static table (the default; note that trailing spaces in the data are removed), dynamic table, and compressed table.

InnoDB: Requires more memory and storage, it will establish its own dedicated buffer pool in main memory for caching data and indexes.

3. Portability, backup and recovery

MyISAM: Data is stored in the form of files, so it is very convenient for cross-platform data transfer. You can perform operations on a table individually during backup and recovery.

InnoDB: Free solutions include copying data files, backing up binlog, or using mysqldump, which is relatively painful when the data volume reaches dozens of gigabytes.

4. Transaction support

MyISAM: The emphasis is on performance. Each query is atomic and its execution times are faster than the InnoDB type, but it does not provide transaction support.

InnoDB: Provides transaction support, foreign keys and other advanced database functions. Its tables are transaction-safe (ACID compliant), with commit, rollback, and crash recovery capabilities.

5. AUTO_INCREMENT

MyISAM: The auto-increment column must be indexed, and it can be part of a composite index with other fields. In a composite index it does not have to be the first column; it can then increment per group of the preceding columns.

InnoDB: The auto-increment column must be indexed, and if the index is composite, the auto-increment column must be its first column.

6. Differences in table locks

MyISAM: Only table-level locks are supported. SELECT, UPDATE, DELETE and INSERT statements on a MyISAM table lock the table automatically. If the table satisfies the conditions for concurrent inserts, new rows can still be inserted at the end of the table while it is locked.

InnoDB: Support for transactions and row-level locks is InnoDB's biggest feature. Row locks greatly improve multi-user concurrency. However, InnoDB sets row locks on index records: a WHERE clause that cannot use an index has to scan and lock every row, which in effect locks the whole table.

7. Full-text index

MyISAM: supports full-text index of FULLTEXT type

InnoDB: older versions do not support FULLTEXT indexes (support was added in MySQL 5.6). InnoDB can also be combined with an external engine such as Sphinx for full-text search, which often works better.

8. Table primary key

MyISAM: Allows a table without any index or primary key to exist. The index is the address where the row is saved.

InnoDB: If no primary key or non-null unique index is defined, a 6-byte primary key (invisible to the user) is generated automatically. The data is part of the primary index, and secondary indexes store the primary index value.

9. The specific number of rows in the table

MyISAM: Saves the total number of rows in the table. If you select count(*) from table;, the value will be taken out directly.

InnoDB: The total number of rows is not stored. select count(*) from table; has to traverse the whole table, which is expensive. With a WHERE condition, however, MyISAM and InnoDB handle the query in the same way.

10. CURD operation

MyISAM: If you perform a large number of SELECTs, MyISAM is a better choice.

InnoDB: If your data sees a large number of INSERTs or UPDATEs, you should use an InnoDB table for performance reasons. DELETE also performs better on InnoDB, but DELETE FROM table does not re-create the table; it deletes row by row. To empty an InnoDB table holding a large amount of data, it is best to use the truncate table command.

11. Foreign keys

MyISAM: Not supported

InnoDB: Supported

From the analysis above, InnoDB can generally be considered as a replacement for the MyISAM engine, because it has many good features, such as transaction support, stored procedures, views, and row-level locking. Under heavy concurrency, InnoDB can be expected to perform much better than MyISAM. That said, no table type is all-purpose: MySQL's performance advantages are only fully realized when the table type suits the business. For a web application that is not very complex, or for a non-critical application, MyISAM can still be considered. Weigh this against your own situation.

19 The difference between redis and memcache

Summary 1:

1. Data types

redis has rich data types and supports set, list and other types

memcache supports simple data types and requires the client to handle complex objects itself

2. Persistence

redis supports persisting data to disk

memcache does not support persistent storage

3. Distributed storage

redis supports master-slave replication

memcache can use consistent hashing for distribution

Value sizes are different

memcache is an in-memory cache: the key length must be less than 250 characters and a single item must be smaller than 1MB. It is not suited to running on virtual machines.

4. Data consistency is different

redis uses a single-threaded model, which guarantees that data is committed in order.

memcache needs CAS to guarantee data consistency. CAS (Check and Set) is a mechanism for ensuring consistency under concurrency and belongs to the category of "optimistic locking". The principle is simple: read the version number, prepare the operation, compare the version number; if it is unchanged, perform the operation, otherwise abandon it.

5. CPU utilization

The single-threaded redis model can use only one CPU, but several redis processes can be started

Summary 2:

1. In Redis, not all data is always stored in memory. This is the biggest difference compared with Memcached.

2.Redis not only supports simple k/v type data, but also provides storage of data structures such as list, set, and hash.

3.Redis supports data backup, that is, data backup in master-slave mode.

4.Redis supports data persistence, which can keep data in memory on disk and can be loaded again for use when restarting.

I personally think that the most essential difference is that Redis has the characteristics of a database in many aspects, or is a database system, while Memcached is just a simple K/V cache

Summary 3:

The differences between redis and memcache are:

1. Storage method:

memcache keeps all data in memory. The data is lost after a power failure, and it cannot exceed the size of memory

redis stores part of its data on disk, which ensures persistence.

2. Supported data types:

redis supports many more data types than memcache.

3. The underlying model is different:

redis built its own VM mechanism (in the versions described here), because calling general system functions wastes a certain amount of time on moving data and making requests.

4. Different operating environments:

redis officially supports only Linux, which saves the effort of supporting other systems so that more energy can go into optimizing for that environment. A team at Microsoft later wrote a patch for Windows, but it was not merged into the trunk

memcache can only be used as a cache.

redis can persist its content, which makes it similar to MongoDB. redis can also be used as a cache and can be set up as master-slave

20 What should we pay attention to in the first-in-first-out redis message queue?

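Answer: A list is usually used to implement the queue. Its small limitation is that all tasks are uniformly first-in, first-out; if you want to give a certain task priority, that is not easy to handle. The queue needs a notion of priority so that high-priority tasks are processed first. There are several ways to implement this:

1) A single list: the normal operation of the queue is push on the left, pop on the right (lpush, rpop). To process high-priority tasks first, a high-priority task can jump the queue and be put directly at the end that is consumed (rpush), so the next task fetched from that end (the right side) with rpop is the high-priority one.

2) Two queues, a normal queue and a high-priority queue: tasks are put into the queue that matches their level. Fetching is also simple, because redis's BRPOP command can take values from multiple lists in order. BRPOP checks the keys in the order given and pops an element from the tail of the first non-empty list it finds: redis> BRPOP list1 list2 0

list1 is the high-priority task queue and list2 the normal task queue. High-priority tasks are thus processed first, and normal tasks are fetched only when there are no high-priority tasks. Approach 1 is the simplest, but limited in practice. Approach 3 can implement complex priorities, but is complicated to implement and hard to maintain. Approach 2 is the recommended one and the best fit in practice.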
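The two-queue approach can be illustrated without a Redis server. The sketch below is an in-memory stand-in, not Redis client code: PHP arrays play the role of the two lists, `pushTask()` behaves like LPUSH, and `popTask()` imitates the key-order rule of BRPOP (without the blocking). All function and key names are assumptions.

```php
<?php
// In-memory stand-in for Redis lists: index 0 is the head (left),
// the last element is the tail (right).

// Like LPUSH: add a task at the head of the named queue.
function pushTask(array &$queues, string $key, string $task): void
{
    array_unshift($queues[$key], $task);
}

// Like BRPOP key1 key2 (minus blocking): check the keys in the given order
// and pop from the tail of the first non-empty queue.
function popTask(array &$queues, array $keysInPriorityOrder): ?array
{
    foreach ($keysInPriorityOrder as $key) {
        if (!empty($queues[$key])) {
            return [$key, array_pop($queues[$key])];
        }
    }
    return null; // every queue is empty
}

$queues = ['high' => [], 'normal' => []];
pushTask($queues, 'normal', 'n1');
pushTask($queues, 'normal', 'n2');
pushTask($queues, 'high', 'h1');   // arrives last but is served first

$order = [];
while (($item = popTask($queues, ['high', 'normal'])) !== null) {
    $order[] = $item[1];
}
```

`$order` ends up as `['h1', 'n1', 'n2']`: the high-priority task jumps ahead, while tasks within one queue keep their first-in, first-out order.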

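21 How does Redis prevent high-concurrency problems?

Answer: Redis itself has no concurrency problem: it processes commands in a single thread, so however many commands arrive, they are executed one after another. Concurrency problems can appear in how we use it, for example with a get followed by a set. Why, then, are there high-concurrency problems around Redis? It follows from how Redis is built.

Redis is a single-threaded NoSQL database, key-value based, whose data can be persisted to disk. Being single-threaded, redis has no notion of locks, and multiple client connections do not compete with one another. Problems do appear, however, when clients such as jedis access redis concurrently: connection timeouts, data conversion errors, blocking, and connections closed by the client. All of these are caused by confusion among client connections.

Also, because of its single-threaded nature, highly concurrent operations on the same key are queued; if concurrency is very high, later requests may time out.

When redis is accessed remotely, network conditions can also delay responses under highly concurrent access.

Solutions

On the client side, pool the connections, and guard the client's Redis reads and writes with an internal lock (synchronized in Java).

On the server side, use setnx to implement a lock mechanism indirectly.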

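22 Which database do you use for flash sales, and how is it implemented?

Answer: At the moment a flash sale starts, concurrency is extremely high. If all requests hit the database at once, the pressure on it is enormous and its performance drops sharply; in the worst case the database server goes down.

In this situation the in-memory cache database redis is generally used. redis is a non-relational database and is single-threaded, so the flash sale can be carried out through a redis queue.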

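23 When should caching be used?

Answer: When a user visits an application system for the first time, they are not yet logged in and are redirected to the authentication system to log in. The authentication system verifies the login information the user provides and, if verification succeeds, returns an authentication credential to the user: a ticket.

When the user then visits another application, they carry this ticket as their credential. After receiving the request, the application system sends the ticket to the authentication system to check its validity. If it passes, the user can access application system 2 and application system 3 without logging in again.

Main technical points:

1. The two sites share one data verification system

2. Verification and session handling are done mainly through cross-domain requests.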

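24 How do you handle exceptions?

Answer: Throwing exceptions: use try...catch. Code that may raise an exception goes inside the try block. If no exception is triggered, execution continues normally; if one is triggered, an exception is thrown. The catch block catches the exception and receives an object containing the exception information; $e->getMessage() returns the error message.

Handling exceptions: use set_error_handler() to capture errors (try and catch blocks can be used as well), use set_exception_handler() to set the default exception handler, and use register_shutdown_function() to register a function to run at the end. The mechanism is that PHP loads the registered function into memory and calls it once all PHP statements of the page have finished executing.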
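A minimal sketch of the flow described above. The `divide()` function and the messages are made up for illustration; `set_exception_handler()` is PHP's own function for registering a last-resort handler.

```php
<?php
// Hypothetical function that throws when it cannot do its job.
function divide(int $a, int $b): float
{
    if ($b === 0) {
        throw new InvalidArgumentException('Division by zero');
    }
    return $a / $b;
}

$messages = [];

try {
    $messages[] = (string) divide(10, 2); // no exception: execution continues
    divide(1, 0);                         // throws, the rest of try is skipped
    $messages[] = 'not reached';
} catch (InvalidArgumentException $e) {
    $messages[] = $e->getMessage();       // the exception object carries the details
} finally {
    $messages[] = 'done';                 // runs whether or not an exception occurred
}

// Default handler for exceptions that no catch block handles.
set_exception_handler(function (Throwable $e) {
    error_log('Uncaught: ' . $e->getMessage());
});
```

`$messages` ends up as `['5', 'Division by zero', 'done']`.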

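25 How is permission management (RBAC) implemented?

1. First create a user table: id, name, auth (stored in the format: controller-method)

2. Then create a base controller in the back end, with a constructor. After the user logs in successfully, use the session function provided by the TP framework to get the session id stored on the server, instantiate the model, and fetch the auth data stored in the table by user id. Split the data with explode() and keep it in an array. Then use the constants provided by the TP framework to get the current controller and method, join them into a string, and use in_array() to check whether the array contains the current controller and method. If not, tell the user that they have no permission; if so, continue to the next step.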
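The core of that check is small enough to sketch without the framework. The function name `canAccess` and the comma used as the separator in the auth field are assumptions (the answer only specifies the controller-method format); in the real base controller the controller and method names would come from the framework's constants.

```php
<?php
// Hypothetical permission check: $authField is the user's auth column,
// assumed here to be a comma-separated list of "Controller-method" entries.
function canAccess(string $authField, string $controller, string $action): bool
{
    // Split the stored string and drop empty or padded entries.
    $allowed = array_filter(array_map('trim', explode(',', $authField)));

    // Build "Controller-method" for the current request and look it up.
    return in_array($controller . '-' . $action, $allowed, true);
}

$auth = 'Goods-index,Goods-add,Order-index';

$mayAddGoods    = canAccess($auth, 'Goods', 'add');    // listed
$mayDeleteGoods = canAccess($auth, 'Goods', 'delete'); // not listed
```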

26 How to ensure that promotional products will not be oversold?

Answer: This was a difficulty we ran into during development. Overselling happened because the number of orders placed did not match the number of products on promotion: every time, there were more orders than promoted products. Our team discussed it for a long time and came up with several solutions:

The first option: before placing an order, check whether enough promotional stock is left, and refuse the order if not. When updating the inventory, add a condition so that only products with inventory greater than 0 are updated. We stress tested this with ab: with concurrency above 500 and more than 2,000 requests, overselling still occurred, so we rejected it.

The second option: MySQL transactions and exclusive locks. We chose InnoDB as the storage engine and implemented the solution with exclusive locks. We tested shared locks first and found that overselling still occurred. Another problem was that under high-concurrency testing this put great pressure on the database and hurt its performance, so we rejected this option as well.

The third option: a file lock. When a user grabs a promotional item, the file lock is acquired first to keep other users out; once the user has the item, the lock is released and other users may proceed. This solves overselling, but causes a lot of file I/O overhead.

In the end we used a redis queue. The promotional stock is stored in redis as a queue, and every time a user grabs a product, one element is removed from the queue, which guarantees that the product cannot be oversold. This is convenient to operate and very efficient, so it is the approach we adopted.

27 How are flash sales implemented in an online mall?

Answer: Rush buying and flash sales are very common scenarios nowadays. Two main problems need to be solved:

The pressure that high concurrency puts on the database

How to decrement the inventory correctly under race conditions (the "oversold" problem)

For the first problem, it is natural to use a cache such as Redis to handle the rush and avoid operating on the database directly.

For the second problem, we can use a redis queue: put the products to be sold into a queue. Because the pop operation is atomic, even if many users arrive at the same time they are served one after another. The performance of file locks and transactions degrades quickly under high concurrency. Other aspects must be considered too, such as making the sale page static and calling the interface through Ajax. A user may also try to buy several times; to handle that, add a waiting queue, a purchase result queue and an inventory queue.

Under high concurrency, put users into the waiting queue and use a worker loop to take one user out at a time and check whether that user is already in the purchase result queue. If so, they have already purchased. Otherwise, decrement the inventory by 1, write to the database, and put the user into the result queue.
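The worker loop can be sketched with plain PHP arrays standing in for the three Redis queues. This is a single-process illustration of the logic only, not Redis client code: in production the atomic pop on the Redis list is what prevents overselling. The function name `runFlashSale` and the user ids are assumptions.

```php
<?php
// In-memory sketch of the flash-sale worker. Arrays stand in for the Redis
// waiting queue, inventory queue and purchase result queue.
function runFlashSale(array $waitingUsers, int $stock): array
{
    // Inventory queue: one element per unit that can be sold.
    $inventory = array_fill(0, $stock, 'item');

    // Purchase result "queue", keyed by user id for a quick lookup.
    $bought = [];

    // Users are taken from the waiting queue one at a time, in arrival order.
    foreach ($waitingUsers as $userId) {
        if (isset($bought[$userId])) {
            continue; // this user has already purchased
        }
        if (array_pop($inventory) === null) {
            break;    // inventory queue is empty: sold out
        }
        // Here the real system would write the order to the database.
        $bought[$userId] = true;
    }

    return array_keys($bought);
}

// Five requests (u1 tries twice) competing for two items.
$winners = runFlashSale(['u1', 'u2', 'u1', 'u3', 'u4'], 2);
```

`$winners` is `['u1', 'u2']`: the number of successful orders never exceeds the stock, and the repeated request from u1 is ignored.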

28 How to deal with load and high concurrency?

Answer: From the perspective of low cost, high performance and high scalability, there are the following solutions:

1. HTML staticization

In fact, everyone knows that the most efficient and least consumed is a purely static html page, so we try our best to use static pages for the pages on our website. This simplest method is actually the most effective method. .

2. Image server separation

Store images separately to minimize the overhead of large traffic such as images. You can place them on some related platforms, such as bull riding, etc.

3. Database clustering, table sharding, and caching

A single database accepts only a limited number of concurrent connections (MySQL's max_connections defaults to 151), so one database is far from enough for a busy site. Start with replication and read/write splitting, then move on to database clustering. In addition, to minimize database access, put a cache such as memcache or Redis in front of the database.
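To illustrate how a cache reduces database access, here is a minimal cache-aside sketch. Everything in it is an assumption made for illustration: the class `CacheAside` is hypothetical, a plain array stands in for memcache or Redis, and the `$loadFromDb` callable stands in for a query against a read replica.

```php
<?php
declare(strict_types=1);

// Cache-aside read: check the cache first, hit the database only on a miss.
final class CacheAside
{
    private array $cache = [];  // stand-in for memcache/Redis
    public int $dbReads = 0;    // counts how often the database was queried
    private $loadFromDb;        // hypothetical loader, e.g. a read-replica query

    public function __construct(callable $loadFromDb)
    {
        $this->loadFromDb = $loadFromDb;
    }

    public function get(string $key)
    {
        if (array_key_exists($key, $this->cache)) {
            return $this->cache[$key]; // cache hit: no database access
        }
        $this->dbReads++;
        $value = ($this->loadFromDb)($key);
        $this->cache[$key] = $value;   // populate the cache for later reads
        return $value;
    }

    // Call after a write to the master so the next read reloads fresh data.
    public function invalidate(string $key): void
    {
        unset($this->cache[$key]);
    }
}

$repo = new CacheAside(fn(string $key): string => "row for $key");
$first = $repo->get('user:1');   // miss: loads from the "database"
$second = $repo->get('user:1');  // hit: served from the cache
```

Only the first read reaches the database; repeated reads of the same key are served from the cache until the entry is invalidated.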

4. Mirroring

Distribute requests across multiple mirrors so that no single server carries all of the download traffic.

5. Load balancing

A single Apache server can handle only a limited number of concurrent connections (about 1,500 by the figure given here); beyond that, the only option is to add servers. You can start with hardware, such as an F5 load balancer, but hardware is relatively expensive, so we usually start with software.

Load balancing is built on top of the existing network structure. It provides a cheap, effective, and transparent way to expand the bandwidth of network devices and servers, increase throughput, strengthen network data-processing capacity, and improve network flexibility and availability. The most widely used load-balancing software today is Nginx, LVS, and HAProxy.

Let's look at the advantages and disadvantages of each of the three in turn:

The advantages of Nginx are:

It works at layer 7 of the network, so it can apply traffic-splitting strategies to HTTP applications, for example by domain name or directory structure. Its regular-expression rules are more powerful and flexible than HAProxy's, which is one of the main reasons for its popularity. On this point alone, Nginx can be used in far more situations than LVS.

Nginx depends very little on network stability. In theory, it can balance load to any backend it can ping. LVS, by contrast, depends heavily on network stability, as I have learned from experience.

Nginx is relatively simple to install and configure, and convenient to test, because it prints most errors to its logs. Configuring and testing LVS takes considerably longer, and LVS relies heavily on the network.

It withstands high load and is stable. On decent hardware it can generally support tens of thousands of concurrent connections, although its load capacity is somewhat lower than that of LVS.

Nginx can detect backend failures through the port, for example from the status code the backend returns or from a timeout, and it resubmits a request that returned an error to another node (since version 1.9.13, retrying non-idempotent requests such as POST has to be enabled explicitly in proxy_next_upstream). The disadvantage is that it cannot run health checks against a URL. For example, if a user is uploading a file and the node handling the upload fails midway, Nginx can switch the upload to another server for reprocessing, whereas LVS simply drops the connection. With LVS, a dropped upload of a large or very important file is likely to leave users dissatisfied.

Nginx is not only an excellent load balancer and reverse proxy but also a powerful web server. LNMP (Linux, Nginx, MySQL, PHP) has become a very popular web architecture in recent years, and it is stable in high-traffic environments.

Nginx is increasingly mature as a reverse-proxy cache and is faster than the traditional Squid server, so consider using it as a reverse-proxy accelerator.

Nginx can be used as a mid-level reverse proxy. At this level Nginx has almost no rivals; the only comparable option is lighttpd. However, lighttpd does not yet match Nginx's full feature set, its configuration is less clear and readable, and its community is far less active.

Nginx can also be used as a static web page and image server, and its performance in this area is unmatched. The Nginx community is also very active and there are many third-party modules.

The disadvantages of Nginx are:

Nginx was long limited to the HTTP, HTTPS, and email protocols, which gave it a narrower range of application than LVS. Generic TCP and UDP load balancing only arrived later with the stream module (Nginx 1.9 and newer).

The built-in health check of backend servers works only through the port; checking a specific URL is not supported out of the box in open-source Nginx. Session persistence is not supported directly either, but ip_hash can work around this.

LVS (Linux Virtual Server) uses Linux kernel clustering to implement a high-performance, high-availability load-balancing server with good scalability, reliability, and manageability.

The advantages of LVS are:

It has strong load capacity. It works at layer 4 of the network and only distributes requests, without generating traffic of its own. This gives it the best performance among load-balancing software and relatively low memory and CPU consumption.

Its configurability is relatively low, which is both a disadvantage and an advantage: because there is little to configure, it needs little hands-on attention, which greatly reduces the chance of human error.

It works stably, both because of its strong load capacity and because it has a complete dual-machine hot-standby solution such as LVS + Keepalived. In our projects, the combination we use most is LVS/DR + Keepalived.

No traffic passes back through it: LVS only distributes requests, and response traffic does not flow out through the balancer itself, so heavy traffic does not affect the balancer's I/O performance.

The application range is relatively wide. Because LVS works on layer 4, it can load balance almost all applications, including http, databases, online chat rooms, etc.

The disadvantages of LVS are:

The software itself does not support regular-expression processing and cannot separate dynamic from static content. Many websites now have a strong need for this, which is where Nginx/HAProxy + Keepalived has the advantage.

If the website is relatively large, LVS/DR + Keepalived is more complicated to implement, especially when Windows Server machines sit behind it; implementation, configuration, and maintenance all become more involved. Nginx/HAProxy + Keepalived is much simpler by comparison.

The characteristics of HAProxy are:

HAProxy also supports virtual hosts.

HAProxy's strengths make up for some of Nginx's shortcomings: it supports session persistence and cookie-based routing, and it can check the status of a backend server by requesting a specified URL.

Like LVS, HAProxy is purely load-balancing software. In terms of raw efficiency, HAProxy balances load faster than Nginx and also handles concurrency better.

HAProxy supports load balancing at the TCP level. It can balance MySQL reads and health-check the backend MySQL nodes. You can also use LVS + Keepalived to load balance a MySQL master and its slaves.

HAProxy offers many load-balancing strategies. The following eight algorithms are the ones usually listed:

① roundrobin: simple round-robin polling, the basis of load balancing, which every balancer provides;

② static-rr: round robin based on static weights; worth knowing;

③ leastconn: the server with the fewest connections is served first; worth knowing;

④ source: selects the server based on the source IP of the request. This is similar to Nginx's ip_hash mechanism, and we use it to solve session problems; worth knowing;

⑤ uri: selects the server based on the requested URI;

⑥ url_param: selects the server based on a URL parameter of the request; 'balance url_param' requires a URL parameter name;

⑦ hdr(name): pins each HTTP request to a server based on the named HTTP request header;

⑧ rdp-cookie(name): pins and hashes each TCP request based on the named cookie.
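The first few strategies in the list can be sketched in PHP as follows. This is an illustration of the ideas only, not HAProxy's actual implementation: the class `Balancer`, its method names, and the server names are hypothetical, weights are omitted, and the source strategy uses a simple CRC32 hash in place of HAProxy's own hashing.

```php
<?php
declare(strict_types=1);

// Hypothetical sketch of three balancing strategies over a fixed server list.
final class Balancer
{
    private array $servers;      // server names in configuration order
    private array $active = [];  // server name => open connection count
    private int $next = 0;       // round-robin cursor

    public function __construct(array $servers)
    {
        $this->servers = array_values($servers);
        $this->active = array_fill_keys($this->servers, 0);
    }

    // roundrobin: hand out servers in turn and wrap around at the end.
    public function roundRobin(): string
    {
        $server = $this->servers[$this->next];
        $this->next = ($this->next + 1) % count($this->servers);
        return $server;
    }

    // leastconn: pick the server with the fewest open connections
    // (the first one in configuration order on a tie).
    public function leastConn(): string
    {
        $server = array_keys($this->active, min($this->active))[0];
        $this->active[$server]++;
        return $server;
    }

    // Call when a connection handed out by leastConn() is closed.
    public function release(string $server): void
    {
        if ($this->active[$server] > 0) {
            $this->active[$server]--;
        }
    }

    // source: hash the client IP so the same client always lands on the
    // same server, which keeps its session on one machine.
    public function bySource(string $clientIp): string
    {
        $index = (crc32($clientIp) & 0x7fffffff) % count($this->servers);
        return $this->servers[$index];
    }
}

$lb = new Balancer(['web1', 'web2', 'web3']);
$rr = [$lb->roundRobin(), $lb->roundRobin(), $lb->roundRobin(), $lb->roundRobin()];
$lc = [$lb->leastConn(), $lb->leastConn(), $lb->leastConn()];
$lb->release('web2');            // web2 now has the fewest connections
$afterRelease = $lb->leastConn();
$sticky = $lb->bySource('203.0.113.7') === $lb->bySource('203.0.113.7');
```

The trade-off is visible in the sketch: roundrobin and leastconn spread load evenly but may send one client to different servers, while source keeps a client on one server at the cost of a less even distribution.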

Summary of the comparison between Nginx and LVS:

Nginx works at layer 7 of the network, so it can apply traffic-splitting strategies to the HTTP application itself, for example by domain name or directory structure. LVS has no such capability, so on this point alone Nginx can be used in far more situations than LVS. However, these useful features also make Nginx far more adjustable than LVS, so you end up touching its configuration again and again, and the more you touch it, the greater the chance of human error.

Nginx depends less on network stability. In theory, as long as a ping succeeds and web pages load normally, Nginx can connect. This is a major advantage of Nginx. Nginx can also distinguish between internal and external networks; a node with both is equivalent to a single machine with a backup line. LVS depends more on the network environment. At present the results are most reliable when the servers are on the same network segment and LVS distributes traffic in direct routing mode.

Also note that LVS needs at least one additional IP address from the hosting provider to serve as the virtual IP (VIP); it apparently cannot use its own IP as the VIP. To be a good LVS administrator you need to learn a great deal about network communication, which is no longer as simple as HTTP.

Nginx is relatively simple to install and configure, and convenient to test, because it prints most errors to its logs. Installing, configuring, and testing LVS takes considerably longer. LVS relies heavily on the network; in many cases a failed setup is caused by network problems rather than by the configuration, and such problems are much harder to resolve.

Nginx can also withstand high load and is stable, but both its load capacity and its stability are a notch below LVS, for several reasons: Nginx handles all of the traffic, so it is limited by the machine's I/O and configuration, and bugs in Nginx itself are hard to avoid entirely.

Nginx can detect backend failures, for example from the status code the backend returns or from a timeout, and it resubmits a request that returned an error to another node. ldirectord in LVS can now also monitor the internal state of the servers, but the way LVS works prevents it from resending requests. For example, if a user is uploading a file and the node handling the upload fails midway, Nginx switches the upload to another server for reprocessing, whereas LVS simply drops the connection. If the file is large or very important, users may be annoyed.
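The retry behavior described above, where a failed request is passed to the next node, can be sketched like this. It is a simplified illustration and not how Nginx is implemented: the function `forwardWithFailover`, the `$send` callable, and the node names are all hypothetical.

```php
<?php
declare(strict_types=1);

/**
 * Tries each backend in turn and returns the first successful response.
 * $send is a hypothetical callable (node, request) => response that throws
 * a RuntimeException when the node returns an error or times out.
 */
function forwardWithFailover(array $nodes, string $request, callable $send): array
{
    $failed = [];
    foreach ($nodes as $node) {
        try {
            $response = $send($node, $request);
            return ['node' => $node, 'response' => $response, 'failed' => $failed];
        } catch (RuntimeException $e) {
            $failed[] = $node; // remember the failure and try the next node
        }
    }
    throw new RuntimeException('all backends failed: ' . implode(', ', $failed));
}

// Simulated backends: web1 times out, the others answer normally.
$send = function (string $node, string $request): string {
    if ($node === 'web1') {
        throw new RuntimeException("$node timed out");
    }
    return "$node handled $request";
};

$result = forwardWithFailover(['web1', 'web2', 'web3'], 'GET /index.php', $send);
```

A layer 4 balancer cannot do this, because it forwards packets without holding the request itself; only a proxy that has the full request in hand can replay it against another node.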

Nginx's asynchronous request handling helps reduce the load on the backend servers. If Apache serves clients directly, many slow (narrowband) connections make it hold a large amount of memory that cannot be released. With Nginx in front of Apache as a proxy, Nginx absorbs these slow connections, so requests do not pile up on Apache, which cuts resource usage considerably. Squid has the same effect in this respect: even when Squid is configured not to cache, it still helps Apache a great deal.

Nginx supports HTTP, HTTPS, and email (the email function is rarely used), while LVS supports more kinds of applications. In practice, the front-most tier should generally be LVS; that is, DNS should point to the LVS balancer. The strengths of LVS make it well suited to this task.

Important IP addresses, such as those of databases and web service servers, are best managed by LVS. These addresses come to be used more and more widely over time, and changing one of them causes failures to follow. It is therefore safest to let LVS host these important IPs. The only drawback is that more VIPs are required.

Nginx can serve as a node behind LVS, which takes advantage of both its features and its performance. Squid can also be used directly at this level, but Squid's features are much weaker than Nginx's and its performance is also inferior.

Nginx can also be used as a mid-level proxy. At this level Nginx has almost no rivals; the only one that could challenge it is lighttpd. However, lighttpd currently lacks Nginx's full feature set, and its configuration is less clear and readable. The IP of the mid-level proxy is also important, so the best arrangement is for the mid-level proxy to have its own VIP managed by LVS as well.

Each application must be analyzed on its own terms. For a relatively small website (fewer than 10 million page views per day), Nginx alone is perfectly adequate; if there are many machines, DNS round robin can be used, since LVS still takes up quite a few machines. For large websites or important services, when machines are not a constraint, consider using LVS.

