Docker image principles: the union file system and image layering (detailed examples)

This article covers the union file system and the layering behind Docker images: the union file system itself, the layered structure of images, and layering in practice. I hope it is helpful to everyone.

Docker: the union file system and image layering explained

1. Union file system

UnionFS (Union File System)

UnionFS (Union File System) is a layered, lightweight, high-performance file system. It records each modification to the file system as a commit and stacks these commits layer by layer, and it can mount several directories into a single virtual file system. The union file system is the foundation of Docker images: images inherit from one another through layering, so a wide range of application images can be built on top of a base image (an image with no parent).

In addition, different Docker containers can share some basic file system layers, and at the same time add their own unique change layers, greatly improving storage efficiency.

AUFS (Another Union File System), which Docker used in its early versions, is one such union file system. AUFS lets each member directory (a branch, similar to a Git branch) be marked readonly, readwrite, or whiteout-able. It also has a notion of layers: a branch with read-only permission can still be modified logically and incrementally, without touching the read-only data itself.

Docker has supported several storage back ends over time, including AUFS, btrfs, vfs, and Device Mapper. Recent Docker releases on Linux default to overlay2, which is built on OverlayFS.

Features: several file systems are mounted at the same time, but from the outside only one file system is visible. Union mounting stacks the layers on top of one another, so the final file system contains all the files and directories of the underlying layers.
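The stacking described above can be pictured with a small Python sketch. It is only a toy model, not how Docker or any storage driver is implemented: each layer is a plain dictionary mapping a path to its content, and the layer names and file contents are made up for illustration. The standard library's `collections.ChainMap` searches its maps from left to right, which matches the "topmost layer wins" behavior of a union mount.

```python
from collections import ChainMap

# Hypothetical layers: each dict maps a file path to its content.
base_layer = {"/bin/sh": "shell binary", "/etc/os-release": "debian"}
app_layer = {"/usr/local/tomcat/bin/catalina.sh": "tomcat launcher"}
config_layer = {"/etc/os-release": "debian (patched)"}

# ChainMap looks up keys left to right, so the topmost layer is listed first.
union_view = ChainMap(config_layer, app_layer, base_layer)


def list_union(view):
    """Return the sorted paths visible in the merged view."""
    return sorted(view)


visible_paths = list_union(union_view)
os_release = union_view["/etc/os-release"]  # served by the topmost layer that has it
```

From the outside there is a single view with three paths, even though `/etc/os-release` exists in two layers; the copy in the upper layer hides the one below it.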

Base image

Simply put, a base image does not depend on any other image. It is built entirely from scratch, and other images are built on top of it. It can be compared to the foundation of a building: it is the origin of every other Docker image.

The base image has two meanings: (1) It does not depend on other images and is built from scratch; (2) Other images can be expanded based on it.

So the images that qualify as base images are usually the Docker images of Linux distributions such as Ubuntu, Debian, and CentOS.

Docker image loading principle

A Docker image is actually made up of file systems stacked layer by layer, and this layered file system is UnionFS.

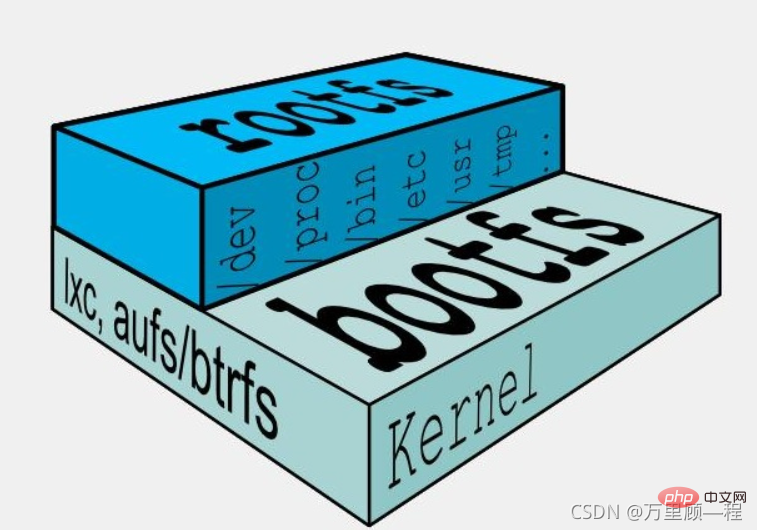

A typical Linux system needs two file systems to boot and run, bootfs and rootfs:

bootfs (boot file system) mainly contains the bootloader and the kernel; the bootloader's main job is to load the kernel. Linux loads bootfs when it first boots. In this layered picture, the bottom layer beneath a Docker image is bootfs, the same as on a typical Linux/Unix system (for a container it is supplied by the host, as explained below). Once booting completes, the whole kernel is in memory; control of memory passes from bootfs to the kernel, and the system unmounts bootfs.

rootfs (root file system) sits on top of bootfs. It contains the standard directories and files of a typical Linux system, such as /dev, /proc, /bin, and /etc. The rootfs is what differs between operating system distributions such as Ubuntu and CentOS.

Why is there no kernel in the Docker image?

In terms of size, a small image can be a little over 1 KB or just a few MB, while a kernel file takes tens of MB, so the image does not contain a kernel. Once started as a container, the image uses the host's kernel directly; the image itself only provides the corresponding rootfs, that is, the user-space file system the system needs to run normally, such as the /dev, /proc, /bin, and /etc directories. This is why a container has essentially no /boot directory: /boot stores the files and directories related to the kernel.

Because a container runs directly on the host's kernel and never calls the physical hardware itself, it does not deal with hardware drivers, so it needs neither its own kernel nor its own drivers. With virtual machine technology, by contrast, each virtual machine has its own independent kernel.

2. Hierarchical structure

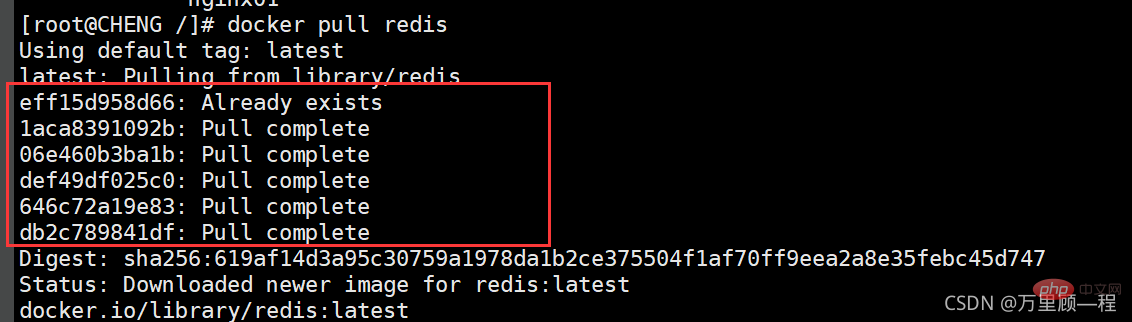

A Docker image has a layered structure: each layer is built on top of the layers below it, so content is added incrementally. Docker images are also downloaded layer by layer. Take pulling the redis image as an example:

As you can see, the new image is generated layer by layer from the base image. Each time you install a piece of software, you add a layer to the existing image.

Why do Docker images adopt this layered structure?

The biggest benefit is resource sharing. For example, if several images are built from the same base image, the host only needs to keep one copy of that base image on disk and load one copy into memory to serve all containers. Every layer of an image can be shared in the same way.
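To make the saving concrete, here is a rough back-of-the-envelope sketch. The image names, layer names, and sizes are invented for illustration; the only idea taken from the text is that a layer shared by several images is stored once.

```python
def disk_usage(images):
    """Return (naive_total, shared_total) in MB.

    images maps an image name to a list of (layer_id, size_mb) pairs.
    naive_total counts every layer of every image; shared_total counts
    each distinct layer_id only once.
    """
    naive_total = sum(size for layers in images.values() for _, size in layers)
    unique_layers = {}
    for layers in images.values():
        for layer_id, size in layers:
            unique_layers[layer_id] = size
    return naive_total, sum(unique_layers.values())


# Hypothetical images that share the same base and JDK layers.
images = {
    "app-a": [("base", 120), ("jdk", 200), ("app-a-code", 15)],
    "app-b": [("base", 120), ("jdk", 200), ("app-b-code", 25)],
}
naive_total, shared_total = disk_usage(images)
```

With these made-up numbers, storing each image separately would take 680 MB, while storing each distinct layer once takes 360 MB.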

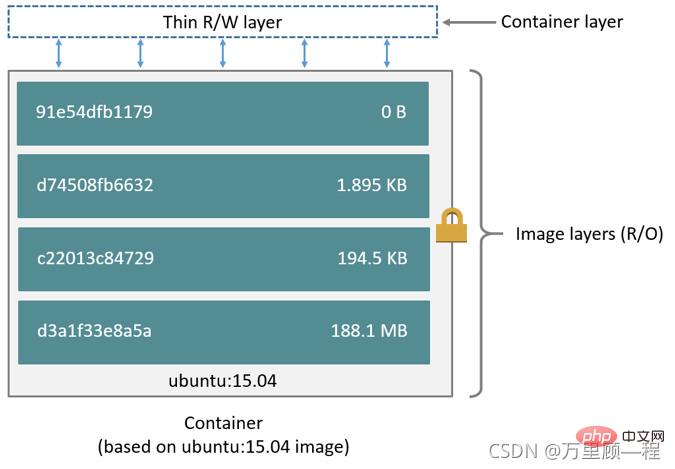

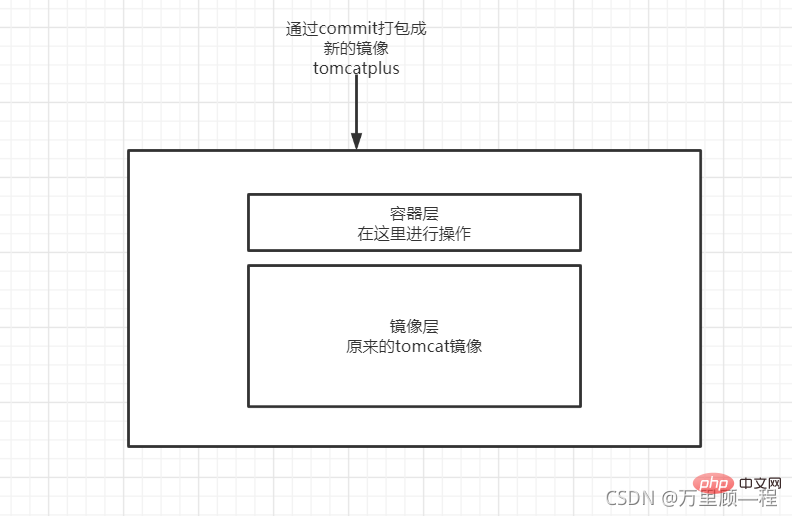

Writable container layer

Docker images are read-only. When a container starts, a new writable layer is added on top of the image.

This new layer is the writable container layer; everything below it is called the image layers.

Docker uses a copy-on-write strategy to ensure the security of the base image, as well as higher performance and space utilization.

- When the container needs to read a file

Docker searches from the topmost image layer downward. Once the file is found, it is read into memory; if it is already in memory, it is used directly. In other words, Docker containers running on the same machine share the same files at runtime.

- When the container needs to modify a file

Docker searches from the top layer downward and, once the file is found, copies it into the container layer. From then on the container sees only the copy in the container layer, not the original in the image layer, and modifies that copy directly.

- When the container needs to delete a file

Docker searches from the top layer downward and, once the file is found, records the deletion in the container layer. This is a soft delete, not a real one: the file still exists in the image layer. As a result, an image's size can only grow, never shrink.

- When the container needs to add files

They are added directly to the writable container layer at the top, without affecting the image layers.

All changes to the container, whether adding, deleting, or modifying files, will only occur in the container layer. Only the container layer is writable, and all image layers below the container layer are read-only, so the image can be shared by multiple containers.
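The four cases above (read, modify, delete, add) can be sketched as a toy copy-on-write model. This is an illustration only and does not reflect Docker's actual implementation: layers are dictionaries, the `Container` class, its method names, and the `WHITEOUT` marker are all hypothetical, and file contents are plain strings.

```python
WHITEOUT = object()  # marker that records a soft delete in the container layer


class Container:
    """Toy model: read-only image layers plus one writable container layer."""

    def __init__(self, image_layers):
        # image_layers: list of dicts (path -> content), topmost layer first.
        self.image_layers = image_layers
        self.container_layer = {}

    def read(self, path):
        # Check the container layer first, then the image layers top-down.
        for layer in [self.container_layer, *self.image_layers]:
            if path in layer:
                if layer[path] is WHITEOUT:
                    raise FileNotFoundError(path)
                return layer[path]
        raise FileNotFoundError(path)

    def add(self, path, content):
        # New files go straight into the container layer.
        self.container_layer[path] = content

    def append(self, path, text):
        # Modify: copy the file up into the container layer, then change the copy.
        self.container_layer[path] = self.read(path) + text

    def delete(self, path):
        # Soft delete: hide the file with a whiteout; image layers are untouched.
        self.read(path)  # raises FileNotFoundError if the file is not visible
        self.container_layer[path] = WHITEOUT


# Two containers started from the same (shared, read-only) image layers.
image = [{"/etc/motd": "hello"}, {"/bin/sh": "shell"}]
c1, c2 = Container(image), Container(image)
c1.append("/etc/motd", " world")
c1.delete("/bin/sh")
c1.add("/tmp/note", "scratch")
```

After these operations, only `c1` sees the modified, deleted, and added files. The image layers are unchanged, so `c2` still reads the original content, which is why one image can safely back many containers.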

3. Layering in practice: committing an image with docker commit

Create a container from an image, then work in the container layer while the image layers stay unchanged, and finally package the modified container layer together with the image layers and commit them as a new image.

docker commit: create a new image from a container.

Syntax:

docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]

OPTIONS Description:

- **-a**: the author of the committed image;

- **-c**: apply Dockerfile instructions when creating the image;

- **-m**: the descriptive message for the commit;

- **-p**: pause the container while committing.
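As a small illustration of how the syntax and options fit together, the sketch below assembles a `docker commit` argument list in Python. It only builds and prints the command; it does not run Docker. The helper name `build_commit_command`, the container name `my-container`, the image name `my-image:1.0`, and the author and message values are all hypothetical.

```python
import shlex


def build_commit_command(container, image, author=None, message=None, changes=()):
    """Build the argv for: docker commit [OPTIONS] CONTAINER [REPOSITORY[:TAG]]."""
    argv = ["docker", "commit"]
    if author:
        argv += ["-a", author]    # author of the committed image
    if message:
        argv += ["-m", message]   # commit message
    for change in changes:
        argv += ["-c", change]    # Dockerfile instruction applied to the new image
    argv += [container, image]
    return argv


argv = build_commit_command(
    "my-container",
    "my-image:1.0",
    author="alice",
    message="add config files",
    changes=["ENV APP_ENV=prod"],
)
command_line = shlex.join(argv)  # shell-quoted form, suitable for display
print(command_line)
```

Keeping the command as a list avoids quoting mistakes when a message contains spaces; `shlex.join` is used here only to show the equivalent shell command.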

Usage example: create a container from an image, make changes in the container layer, and then package the modified container layer together with the image layers and commit them as a new image.

1. First download the tomcat image

2. Create and run the tomcat container through the tomcat image:

docker run -d --name="tomcat01" tomcat

3. Enter the running tomcat container:

docker exec -it tomcat01 /bin/bash

4. Inside the tomcat container, copy the files from the webapps.dist directory into the webapps directory:

cp -r webapps.dist/* webapps

5. Commit the container as a new image with docker commit

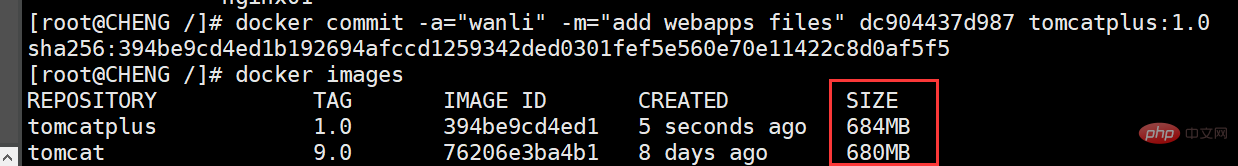

Save the container dc904437d987 as a new image, adding the author and a description. The committed image is named tomcatplus and tagged 1.0:

docker commit -a="wanli" -m="add webapps files" dc904437d987 tomcatplus:1.0

You can see that the new tomcat image produced by the commit is slightly larger than the original tomcat image, because we copied files in the container layer.
