Let's talk about how to parse Apache Avro data (explanation with examples)

How do you parse Apache Avro data? This article explains how to generate Avro data through serialization, how to parse Avro data through deserialization, and how to parse Avro data with FlinkSQL. I hope it is helpful to you!

With the rapid development of the Internet, technologies such as cloud computing, big data, artificial intelligence, and the Internet of Things have become mainstream. Applications such as e-commerce websites, face recognition, driverless cars, smart homes, and smart cities make everyday life more convenient, and behind them large volumes of data are constantly being collected, cleaned, and analyzed by various platforms. Ensuring low latency, high throughput, and security for that data is therefore particularly important. Apache Avro serializes data into a binary format according to a schema, which keeps transmission fast and, because the payload is not plain text, offers a degree of data protection. Avro is used more and more widely across industries, so knowing how to process and parse Avro data matters. This article demonstrates how to generate Avro data through serialization and how to parse it with FlinkSQL.

This article is a demo of Avro parsing. At present, FlinkSQL is suitable only for parsing simple Avro data; complex nested Avro data is not supported for the time being.

Scenario overview

This article covers the following three topics:

- How to serialize and generate Avro data

- How to deserialize and parse Avro data

- How to use FlinkSQL to parse Avro data

Prerequisites

- Understand what Avro is; see the quick start guide on the official Apache Avro website

- Understand Avro's application scenarios

Operation steps

1. Create a new Avro Maven project and configure the pom dependencies

The content of the pom file is as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.huawei.bigdata</groupId>
        <artifactId>avrodemo</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.avro</groupId>
                <artifactId>avro</artifactId>
                <version>1.8.1</version>
            </dependency>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>4.12</version>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.apache.avro</groupId>
                    <artifactId>avro-maven-plugin</artifactId>
                    <version>1.8.1</version>
                    <executions>
                        <execution>
                            <phase>generate-sources</phase>
                            <goals>
                                <goal>schema</goal>
                            </goals>
                            <configuration>
                                <sourceDirectory>${project.basedir}/src/main/avro/</sourceDirectory>
                                <outputDirectory>${project.basedir}/src/main/java/</outputDirectory>
                            </configuration>
                        </execution>
                    </executions>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <configuration>
                        <source>1.6</source>
                        <target>1.6</target>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>

Note: The pom file above configures the paths used for automatic class generation, namely ${project.basedir}/src/main/avro/ and ${project.basedir}/src/main/java/. With this configuration, when you run the mvn command, the plugin automatically generates class files from the avsc schemas in the former directory and places them in the latter. If the avro directory does not exist, create it manually.

2. Define schema

Avro schemas are defined in JSON. A schema is composed of primitive types (null, boolean, int, long, float, double, bytes, and string) and complex types (record, enum, array, map, union, and fixed). For example, to define a schema for a user, create an avro directory under src/main, and then create a new file named user.avsc in that directory:

    {"namespace": "lancoo.ecbdc.pre",
     "type": "record",
     "name": "User",
     "fields": [
         {"name": "name", "type": "string"},
         {"name": "favorite_number", "type": ["int", "null"]},
         {"name": "favorite_color", "type": ["string", "null"]}
     ]
    }

3. Compile schema

In the Maven Projects panel, click compile for the project. The build automatically creates the namespace path and the code for the User class.

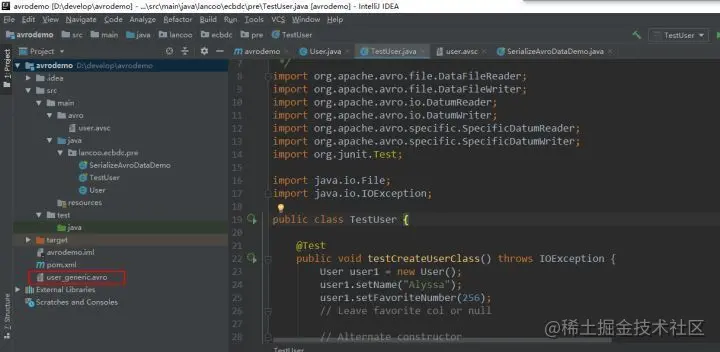

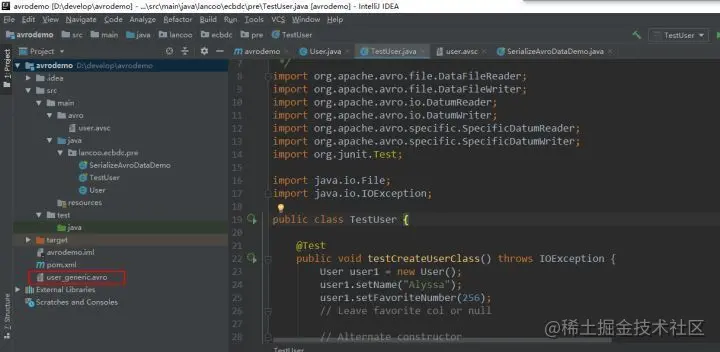

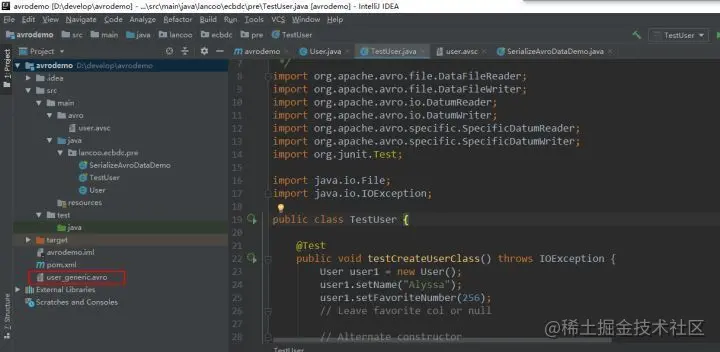

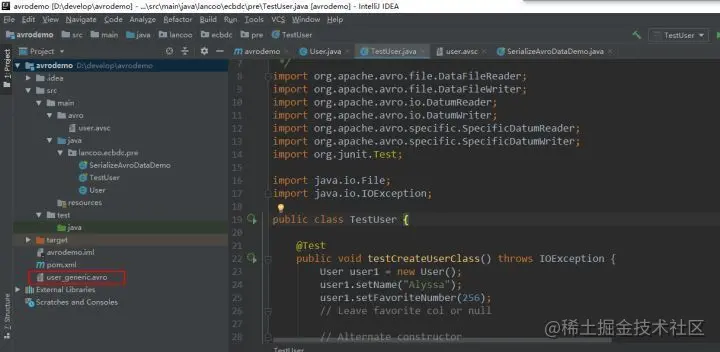

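4. Serialization

Create a TestUser class to serialize and generate the data:

    import java.io.File;
    import java.io.IOException;

    import org.apache.avro.file.DataFileWriter;
    import org.apache.avro.io.DatumWriter;
    import org.apache.avro.specific.SpecificDatumWriter;

    import lancoo.ecbdc.pre.User;

    public class TestUser {
        public static void main(String[] args) throws IOException {
            User user1 = new User();
            user1.setName("Alyssa");
            user1.setFavoriteNumber(256);
            // Leave favorite color null

            // Alternate constructor
            User user2 = new User("Ben", 7, "red");

            // Construct via builder
            User user3 = User.newBuilder()
                    .setName("Charlie")
                    .setFavoriteColor("blue")
                    .setFavoriteNumber(null)
                    .build();

            // Serialize user1, user2 and user3 to disk
            DatumWriter<User> userDatumWriter = new SpecificDatumWriter<User>(User.class);
            DataFileWriter<User> dataFileWriter = new DataFileWriter<User>(userDatumWriter);
            dataFileWriter.create(user1.getSchema(), new File("user_generic.avro"));
            dataFileWriter.append(user1);
            dataFileWriter.append(user2);
            dataFileWriter.append(user3);
            dataFileWriter.close();
        }
    }

After the serialization program runs, the Avro data file is generated in the project directory.

The content of user_generic.avro is as follows (the file is binary, so only the schema embedded in its header is readable as text):

    Objavro.schema{"type":"record","name":"User","namespace":"lancoo.ecbdc.pre","fields":[{"name":"name","type":"string"},{"name":"favorite_number","type":["int","null"]},{"name":"favorite_color","type":["string","null"]}]}

At this point, the Avro data has been generated.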
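To make the binary part of the file less opaque, here is a minimal sketch of how a single User record body is laid out, assuming the encoding rules of the Avro specification: zig-zag variable-length integers, length-prefixed UTF-8 strings, and a branch index written before each union value. It uses only the JDK. The class and method names are hypothetical and are not part of the Avro API, and the sketch does not reproduce the container file's header, block framing, or sync markers.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the Avro library.
class AvroRecordEncodingSketch {

    // Avro writes int and long values as zig-zag encoded variable-length integers.
    static void writeLong(ByteArrayOutputStream out, long value) {
        long zigzag = (value << 1) ^ (value >> 63);
        while ((zigzag & ~0x7FL) != 0) {
            out.write((int) ((zigzag & 0x7F) | 0x80)); // low 7 bits plus continuation bit
            zigzag >>>= 7;
        }
        out.write((int) zigzag);
    }

    // A string is its UTF-8 byte length followed by the bytes themselves.
    static void writeString(ByteArrayOutputStream out, String value) {
        byte[] utf8 = value.getBytes(StandardCharsets.UTF_8);
        writeLong(out, utf8.length);
        out.write(utf8, 0, utf8.length);
    }

    // Encodes the fields in schema order: name, favorite_number, favorite_color.
    static byte[] encodeUser(String name, Integer favoriteNumber, String favoriteColor) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, name);
        // Union ["int", "null"]: branch index, then the value (null has no bytes).
        if (favoriteNumber != null) {
            writeLong(out, 0);
            writeLong(out, favoriteNumber);
        } else {
            writeLong(out, 1);
        }
        // Union ["string", "null"]
        if (favoriteColor != null) {
            writeLong(out, 0);
            writeString(out, favoriteColor);
        } else {
            writeLong(out, 1);
        }
        return out.toByteArray();
    }

    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) {
                sb.append(' ');
            }
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // user1 from the serialization step: name "Alyssa", number 256, no color
        System.out.println(toHex(encodeUser("Alyssa", 256, null)));
    }
}
```

For user1 this prints `0c 41 6c 79 73 73 61 00 80 04 02`: the length 6 as `0c`, the six bytes of "Alyssa", branch 0 and the value 256 as `00 80 04`, and branch 1 (null) as `02`. No field names appear in the record body, which is why a reader needs the schema to interpret it.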

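5. Deserialization

Parse the Avro data with the following deserialization code:

    // Deserialize Users from disk
    // (imports: java.io.File, org.apache.avro.file.DataFileReader,
    //  org.apache.avro.io.DatumReader, org.apache.avro.specific.SpecificDatumReader)
    DatumReader<User> userDatumReader = new SpecificDatumReader<User>(User.class);
    DataFileReader<User> dataFileReader = new DataFileReader<User>(new File("user_generic.avro"), userDatumReader);
    User user = null;
    while (dataFileReader.hasNext()) {
        // Reuse user object by passing it to next(). This saves us from
        // allocating and garbage collecting many objects for files with
        // many items.
        user = dataFileReader.next(user);
        System.out.println(user);
    }
    // Release the underlying file handle
    dataFileReader.close();

Run the deserialization code to parse user_generic.avro.

The Avro data is parsed successfully.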
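What the reader does with each record can be sketched at the byte level. The following is a minimal illustration, assuming the encoding rules of the Avro specification and the User schema from step 2. It uses only the JDK, the class and method names are hypothetical, and the input bytes are a hand-written record body for a user named "Alyssa" with favorite number 256 and no favorite color. A real DataFileReader also handles the file header, block framing, and schema resolution, none of which appear here.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the Avro library.
class AvroRecordDecodingSketch {

    // Reads a zig-zag encoded variable-length integer.
    static long readLong(ByteBuffer in) {
        long raw = 0;
        int shift = 0;
        int b;
        do {
            b = in.get() & 0xFF;
            raw |= (long) (b & 0x7F) << shift; // 7 payload bits per byte, low bits first
            shift += 7;
        } while ((b & 0x80) != 0);             // high bit set means more bytes follow
        return (raw >>> 1) ^ -(raw & 1);       // undo the zig-zag mapping
    }

    // Reads a length-prefixed UTF-8 string.
    static String readString(ByteBuffer in) {
        byte[] utf8 = new byte[(int) readLong(in)];
        in.get(utf8);
        return new String(utf8, StandardCharsets.UTF_8);
    }

    // Decodes the fields in schema order; branch 0 of each union carries a value, branch 1 is null.
    static String decodeUser(byte[] body) {
        ByteBuffer in = ByteBuffer.wrap(body);
        String name = readString(in);
        Integer favoriteNumber = readLong(in) == 0 ? (int) readLong(in) : null;
        String favoriteColor = readLong(in) == 0 ? readString(in) : null;
        return "name=" + name
                + ", favorite_number=" + favoriteNumber
                + ", favorite_color=" + favoriteColor;
    }

    public static void main(String[] args) {
        byte[] body = {0x0c, 0x41, 0x6c, 0x79, 0x73, 0x73, 0x61, 0x00, (byte) 0x80, 0x04, 0x02};
        System.out.println(decodeUser(body));
    }
}
```

The decoder only works because it walks the fields in the order the schema declares them, which is the reason Avro readers always need the writer's schema.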

6. Upload user_generic.avro to an HDFS path

    hdfs dfs -mkdir -p /tmp/lztest/
    hdfs dfs -put user_generic.avro /tmp/lztest/

7. Configure FlinkServer

- Prepare the Avro JAR packages

Place flink-sql-avro-*.jar and flink-sql-avro-confluent-registry-*.jar in the FlinkServer lib directory by running the following commands on all FlinkServer nodes:

    cp /opt/huawei/Bigdata/FusionInsight_Flink_8.1.2/install/FusionInsight-Flink-1.12.2/flink/opt/flink-sql-avro*.jar /opt/huawei/Bigdata/FusionInsight_Flink_8.1.3/install/FusionInsight-Flink-1.12.2/flink/lib
    chmod 500 flink-sql-avro*.jar
    chown omm:wheel flink-sql-avro*.jar

Then restart the FlinkServer instance. After the restart completes, check whether the Avro packages have been uploaded:

    hdfs dfs -ls /FusionInsight_FlinkServer/8.1.2-312005/lib

8. Write the FlinkSQL

    CREATE TABLE testHdfs(
      name String,
      favorite_number int,
      favorite_color String
    ) WITH(
      'connector' = 'filesystem',
      'path' = 'hdfs:///tmp/lztest/user_generic.avro',
      'format' = 'avro'
    );

    CREATE TABLE KafkaTable (
      name String,
      favorite_number int,
      favorite_color String
    ) WITH (
      'connector' = 'kafka',
      'topic' = 'testavro',
      'properties.bootstrap.servers' = '96.10.2.1:21005',
      'properties.group.id' = 'testGroup',
      'scan.startup.mode' = 'latest-offset',
      'format' = 'avro'
    );

    insert into KafkaTable select * from testHdfs;
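The column list in both tables mirrors the fields of the User schema. As a rough sketch of that correspondence, the hypothetical helper below derives the column definitions from the Avro field types. It assumes the primitive type mapping described in Flink's Avro format documentation (for example, Avro string to STRING and int to INT) and treats a union with null as a nullable column of the other branch. It uses only the JDK, is not part of Flink or Avro, and deliberately rejects anything that is not a simple type, in line with the limitation noted at the start of this article.

```java
import java.util.StringJoiner;

// Hypothetical helper, not part of Flink or Avro.
class AvroToFlinkColumnsSketch {

    // Maps an Avro primitive type name to a Flink SQL type name.
    static String flinkType(String avroType) {
        switch (avroType) {
            case "string":  return "STRING";
            case "int":     return "INT";
            case "long":    return "BIGINT";
            case "float":   return "FLOAT";
            case "double":  return "DOUBLE";
            case "boolean": return "BOOLEAN";
            case "bytes":   return "BYTES";
            default:
                throw new IllegalArgumentException("Not a simple Avro type: " + avroType);
        }
    }

    // Uses the first non-null branch of the field's type; a plain type is a single branch.
    // The column is left nullable, which is the default for Flink SQL columns.
    static String column(String name, String... branches) {
        for (String branch : branches) {
            if (!branch.equals("null")) {
                return name + " " + flinkType(branch);
            }
        }
        throw new IllegalArgumentException("Field has no non-null branch: " + name);
    }

    // Column list for the User schema defined in step 2.
    static String userColumns() {
        StringJoiner columns = new StringJoiner(", ");
        columns.add(column("name", "string"));
        columns.add(column("favorite_number", "int", "null"));
        columns.add(column("favorite_color", "string", "null"));
        return columns.toString();
    }

    public static void main(String[] args) {
        System.out.println(userColumns());
    }
}
```

Running main prints `name STRING, favorite_number INT, favorite_color STRING`, which matches the columns declared in the two CREATE TABLE statements apart from letter case.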

Save and submit the job.

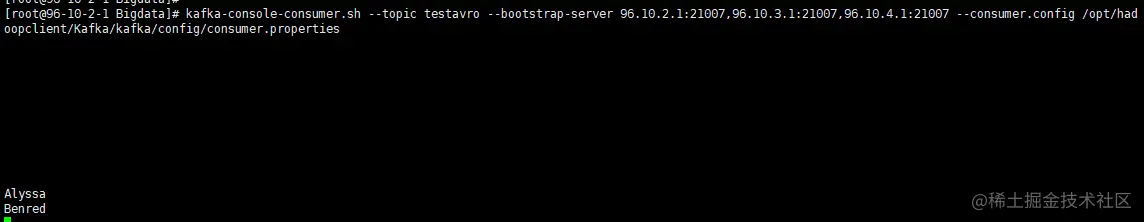

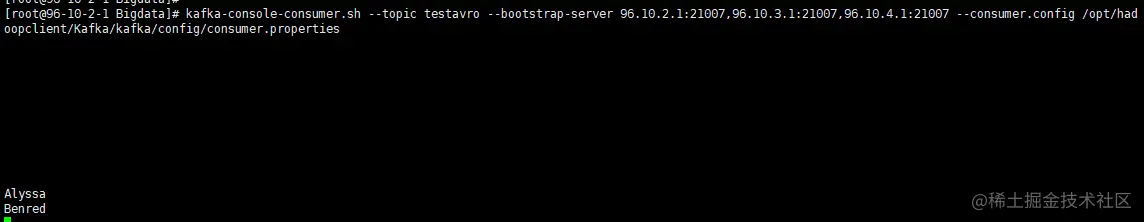

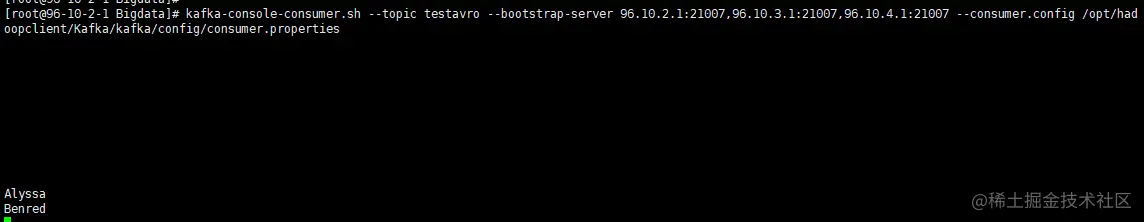

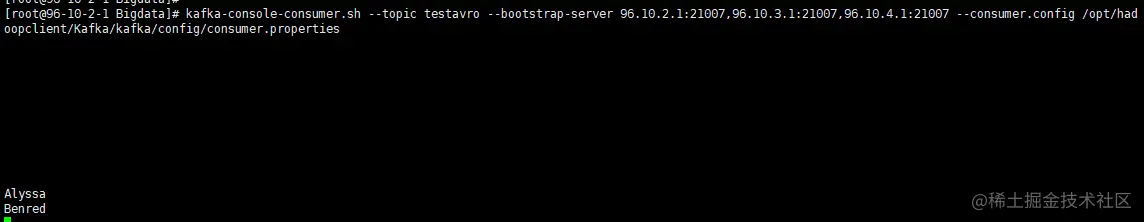

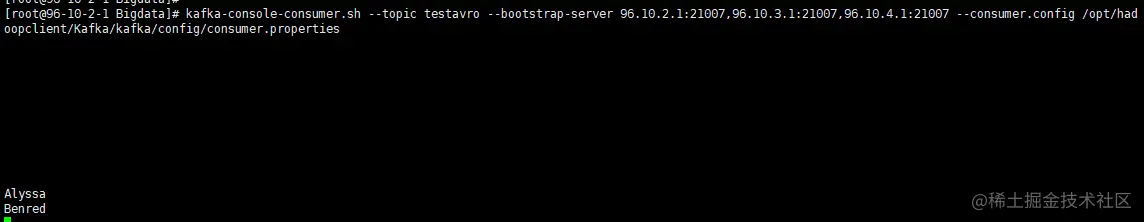

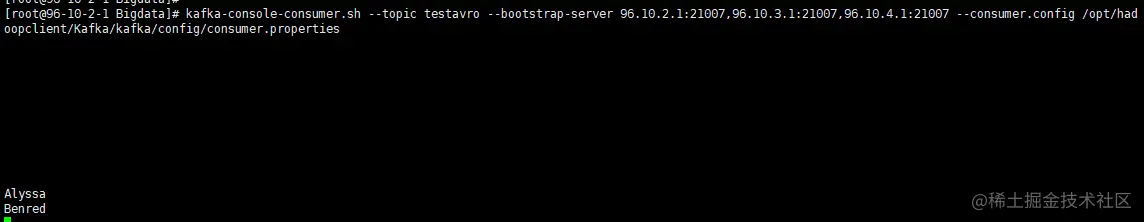

9. Check whether the corresponding topic contains data

FlinkSQL parses the Avro data successfully.
