Completely master the implementation of Redis's LRU cache elimination algorithm

This article covers Redis, focusing on how it implements the LRU cache elimination algorithm, including Redis's approximate LRU algorithm and how that algorithm is actually executed. I hope you find it helpful.

1 Implementation principle of standard LRU

LRU (Least Recently Used) is a classic caching algorithm.

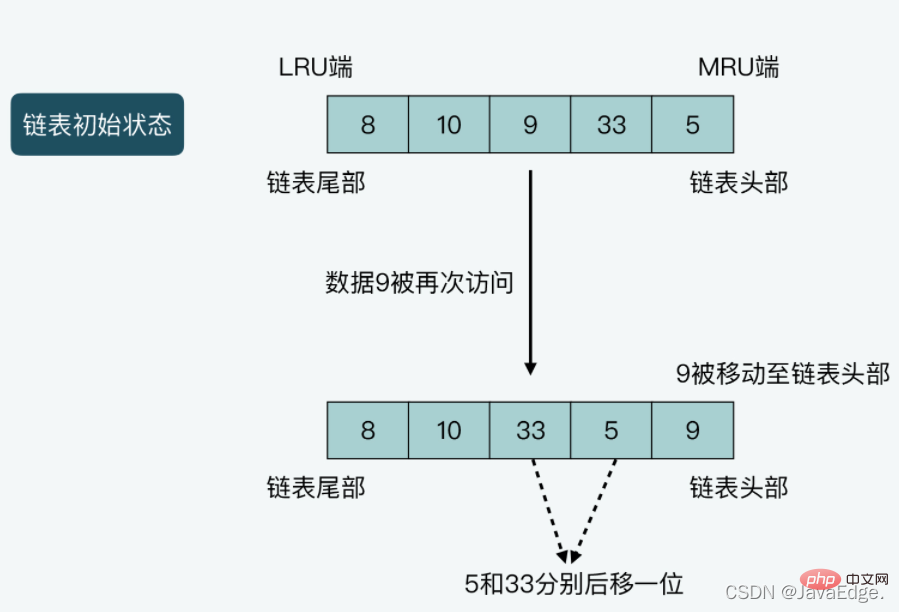

LRU uses a linked list to maintain the access status of each piece of data in the cache and adjusts the position of data in the list according to real-time accesses. The position of a piece of data in the list then indicates whether it was accessed recently or has not been accessed for a while.

LRU treats the head and tail of the list as the MRU end and the LRU end respectively:

- MRU end (Most Recently Used): the data here has just been accessed

- LRU end: the data here is the least recently accessed

LRU behavior can be divided into the following cases:

- case 1: When new data is inserted, LRU inserts it at the head of the list, and the data that was at the head, along with everything after it, moves one position toward the tail

- case 2: When existing data has just been accessed, LRU moves it from its current position to the head of the list, and the data between the head and that position moves one position toward the tail

- case 3: When the list is full and new data is inserted, LRU removes the data at the tail of the list, which is equivalent to eliminating that data from the cache.

Illustration of case 2: the list has length 5 and holds, from head to tail, 5, 33, 9, 10, 8. If data 9 is accessed once, 9 is moved to the head of the list, and 5 and 33 each move one position toward the tail.
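The three cases can be tried out with a small sketch. This is not Redis code: `StrictLRU` is a hypothetical class written for illustration, and it relies on Python's `collections.OrderedDict` to stand in for the linked list, with the first key playing the role of the MRU end.

```python
from collections import OrderedDict


class StrictLRU:
    """Textbook LRU: first key = MRU end (head), last key = LRU end (tail)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()

    def get(self, key):
        if key not in self.items:
            return None
        # case 2: accessed data moves to the head of the list
        self.items.move_to_end(key, last=False)
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items[key] = value
            self.items.move_to_end(key, last=False)
            return
        if len(self.items) >= self.capacity:
            # case 3: the list is full, so drop the entry at the tail
            self.items.popitem(last=True)
        # case 1: new data is inserted at the head
        self.items[key] = value
        self.items.move_to_end(key, last=False)

    def order(self):
        """Keys from head (MRU) to tail (LRU)."""
        return list(self.items)


cache = StrictLRU(capacity=5)
for k in (8, 10, 9, 33, 5):        # head-to-tail order becomes 5, 33, 9, 10, 8
    cache.put(k, str(k))
before = cache.order()
cache.get(9)                        # the access from the case 2 illustration
after = cache.order()
print(before, "->", after)
```

Every `get` and `put` here rearranges the list, which is exactly the per-access bookkeeping the text describes.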

So if Redis implemented LRU strictly while holding a large amount of data, the code would need to:

- Maintain a linked list covering all the data Redis can hold when it uses its maximum memory, which requires additional memory for the list itself

- Perform multiple linked list operations whenever new data is inserted or existing data is accessed again, so every data access pays the overhead of data movement and list operations, which ultimately reduces Redis access performance.

So, both to save memory and to keep Redis fast, Redis does not implement LRU strictly according to its basic principle, but provides an approximate LRU algorithm instead.

2 Redis's approximate LRU algorithm implementation

How is approximate LRU enabled in Redis's memory elimination mechanism? Through the following configuration parameters in redis.conf:

- maxmemory sets the maximum amount of memory the Redis server can use. Once the memory actually used by the server exceeds this threshold, the server performs memory elimination according to the maxmemory-policy setting

- maxmemory-policy sets the Redis server's memory elimination policy, which includes approximate LRU, LFU, elimination by TTL value, random elimination, and so on

So, once maxmemory is set and maxmemory-policy is configured as allkeys-lru or volatile-lru, approximate LRU is enabled. Both policies use approximate LRU to eliminate data. The difference is:

- allkeys-lru selects the data to be eliminated from all KV pairs

- volatile-lru selects the data to be eliminated from the KV pairs that have a TTL set

How does Redis implement the approximate LRU algorithm? The implementation has three parts:

- Calculation of the global LRU clock value: how the global LRU clock value is calculated so that it can be used to judge how recently data was accessed

- Initialization and update of the LRU clock value of a key-value pair: which functions initialize and update the LRU clock value of each key-value pair

- Actual execution of the approximate LRU algorithm: when data elimination is triggered, and how the elimination mechanism is actually implemented

2.1 Calculation of global LRU clock value

The approximate LRU algorithm still needs to tell how recently different data was accessed, that is, Redis needs to know the latest access time of each piece of data. Hence the LRU clock, which records the timestamp of each data access.

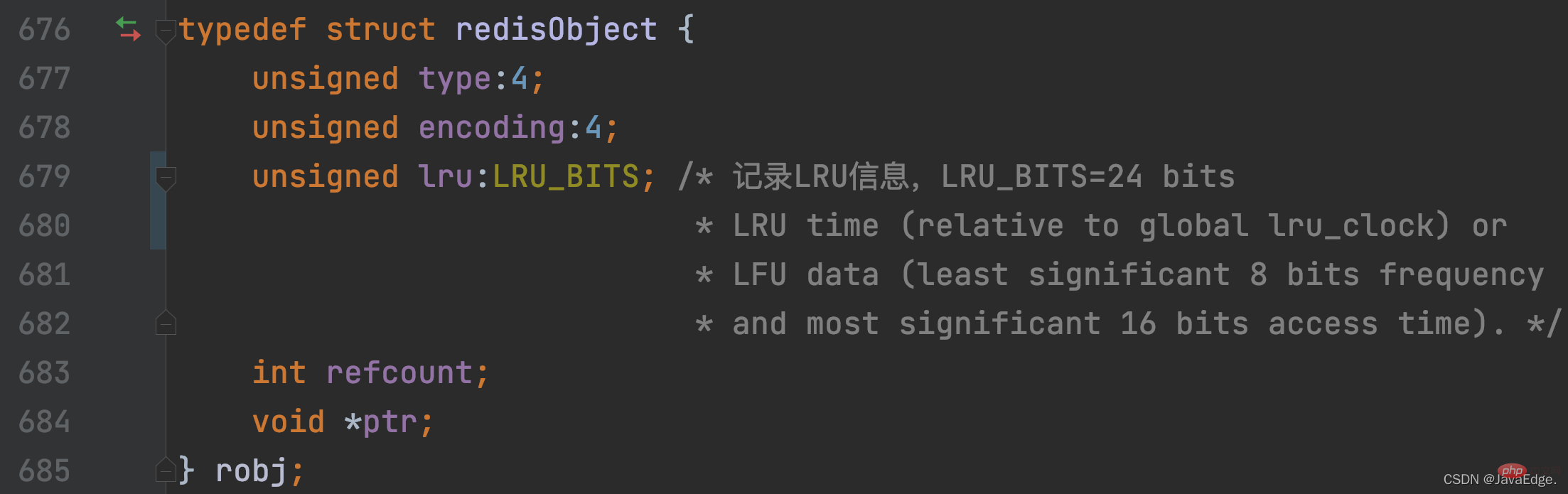

For the V of each KV pair, Redis uses a redisObject structure that holds a pointer to the value. Besides that pointer, redisObject uses 24 bits to store LRU clock information, in the lru member variable. In this way, each KV pair records the timestamp of its last access in lru.

The redisObject definition includes the lru member variable:

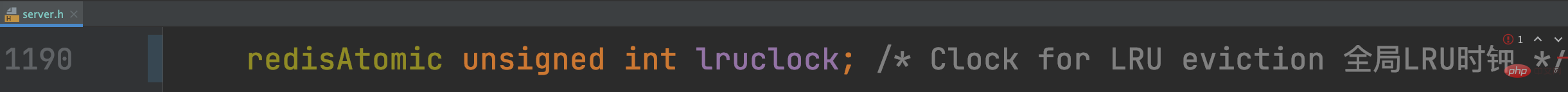

How is the LRU clock value of each KV pair calculated? Redis Server uses an instance-level global LRU clock, and the LRU time of each KV pair is set according to the global LRU clock.

This global LRU clock is stored in the lruclock member of the Redis global variable server:

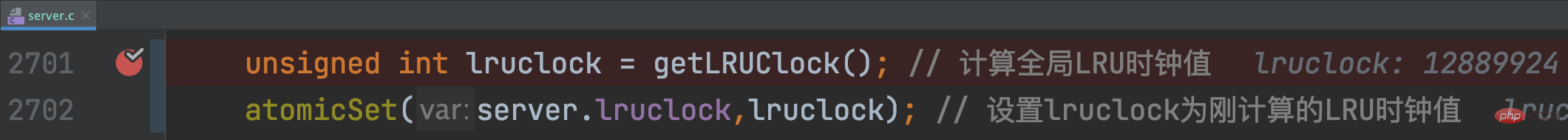

When Redis Server starts and calls initServerConfig to initialize its parameters, getLRUClock is called to set the value of lruclock:

So take note: **if two accesses to the same data fall within one tick of the LRU clock (1s by default, as explained below), both accesses are recorded with the same timestamp.**

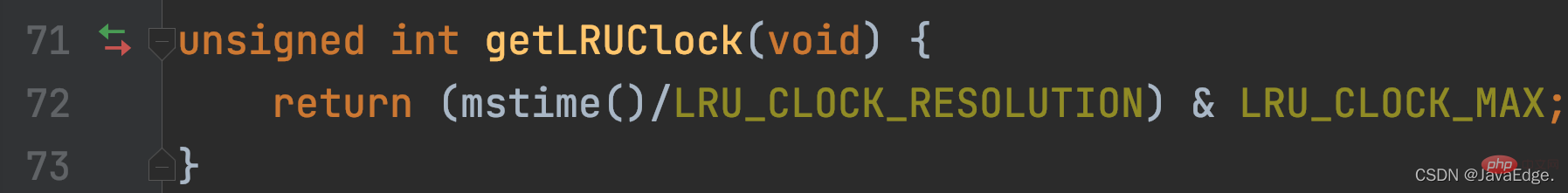

getLRUClock divides the current UNIX timestamp by LRU_CLOCK_RESOLUTION to obtain the timestamp expressed at LRU clock precision, which is the current LRU clock value.

getLRUClock will do an AND operation between the LRU clock value and the macro definition LRU_CLOCK_MAX (the maximum value that the LRU clock can represent).

So by default, the global LRU clock value is a UNIX timestamp calculated with a precision of 1s, and is initialized in initServerConfig.
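The calculation described above can be sketched in a few lines of Python. This is an illustration rather than the Redis source: `get_lru_clock` is a hypothetical Python counterpart of getLRUClock, and the constant values (a 24-bit clock and a resolution of 1000 ms) are assumptions taken from the 24-bit lru field and the 1s precision mentioned in the text.

```python
import time

LRU_BITS = 24                          # width of the lru field (from the text)
LRU_CLOCK_MAX = (1 << LRU_BITS) - 1    # largest value the LRU clock can hold
LRU_CLOCK_RESOLUTION = 1000            # assumed: milliseconds per clock tick


def get_lru_clock(now_ms=None):
    """Return the LRU clock value for a millisecond UNIX timestamp."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    # Divide down to clock precision, then mask to the 24 available bits.
    return (now_ms // LRU_CLOCK_RESOLUTION) & LRU_CLOCK_MAX


# Two accesses inside the same second map to the same clock value.
same_tick = get_lru_clock(1_000) == get_lru_clock(1_999)
# The masked clock wraps back to 0 after LRU_CLOCK_MAX ticks.
wrapped = get_lru_clock((LRU_CLOCK_MAX + 1) * LRU_CLOCK_RESOLUTION)
print(same_tick, wrapped)
```

With these assumed constants the clock wraps after 2^24 seconds, roughly 194 days, so any code that compares two clock values has to allow for the newer value being numerically smaller.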

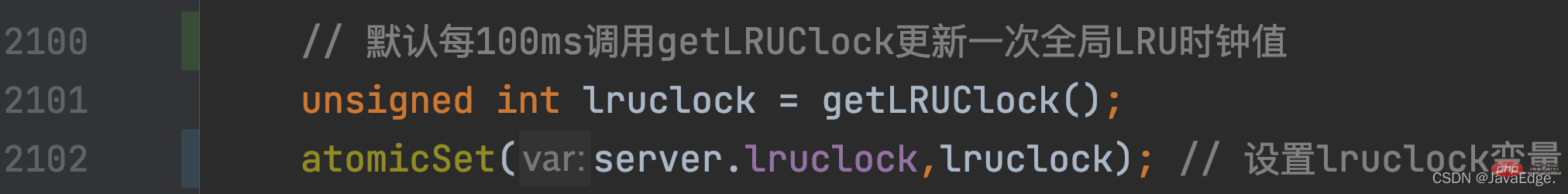

How is the global LRU clock value updated while Redis Server is running? This is done in serverCron, the handler of the time event that Redis Server runs periodically in its event-driven framework.

serverCron, as the callback for that time event, is executed periodically. Its frequency is determined by the hz configuration item in redis.conf. The default value is 10, that is, serverCron runs once every 100 ms (1s / 10 = 100 ms). Each time it runs, serverCron calls getLRUClock to update the global LRU clock value.

In this way, each KV pair can obtain an up-to-date access timestamp from the global LRU clock.
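To make the relationship between hz and the clock concrete, here is a small simulation. It is a sketch under assumptions: the `Server` class and its method names are hypothetical, time is passed in explicitly instead of being read from the system, and the 1000 ms resolution is assumed as before.

```python
LRU_BITS = 24
LRU_CLOCK_MAX = (1 << LRU_BITS) - 1
LRU_CLOCK_RESOLUTION = 1000            # assumed: milliseconds per clock tick


class Server:
    """Hypothetical stand-in for the global server state."""

    def __init__(self, hz=10, now_ms=0):
        self.hz = hz
        self.lruclock = self._compute(now_ms)   # set once at start-up

    @staticmethod
    def _compute(now_ms):
        return (now_ms // LRU_CLOCK_RESOLUTION) & LRU_CLOCK_MAX

    def cron_period_ms(self):
        return 1000 // self.hz                  # hz=10 gives 100 ms

    def server_cron(self, now_ms):
        # Each periodic run refreshes the cached global clock.
        self.lruclock = self._compute(now_ms)


server = Server(hz=10, now_ms=5_000)
ticks = []
for now in range(5_000, 7_001, server.cron_period_ms()):
    server.server_cron(now)
    ticks.append(server.lruclock)
print(ticks)
```

The cron job runs ten times per second, but the stored value only changes once per second, so most refreshes write the same number again.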

For each KV pair, in which functions is its corresponding redisObject.lru initialized and updated?

2.2 Initialization and update of LRU clock value of key-value pair

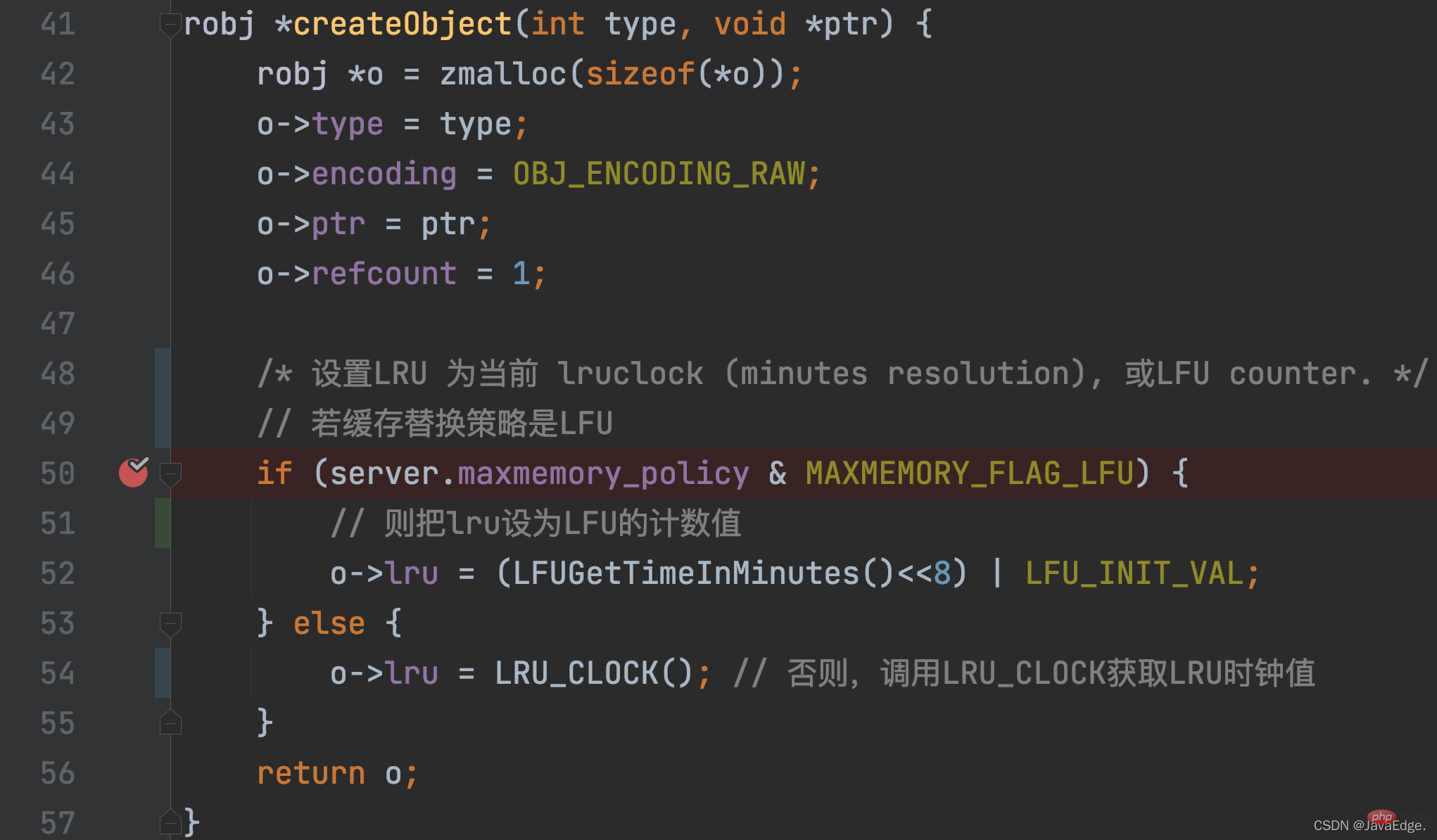

For a KV pair, the LRU clock value is first set when the KV pair is created. This initialization happens in createObject, which is called whenever Redis creates a KV pair.

In addition to allocating memory space to redisObject, createObject will also initialize redisObject.lru according to the maxmemory_policy configuration.

- If maxmemory_policy is an LFU policy, lru is initialized to the value computed by the LFU algorithm

- Otherwise, createObject calls LRU_CLOCK to set lru, which is the LRU clock value of the KV pair.

LRU_CLOCK returns the current global LRU clock value. Creating a KV pair counts as an access, so its LRU clock value represents its access timestamp.

When is the LRU clock value of a KV pair updated again?

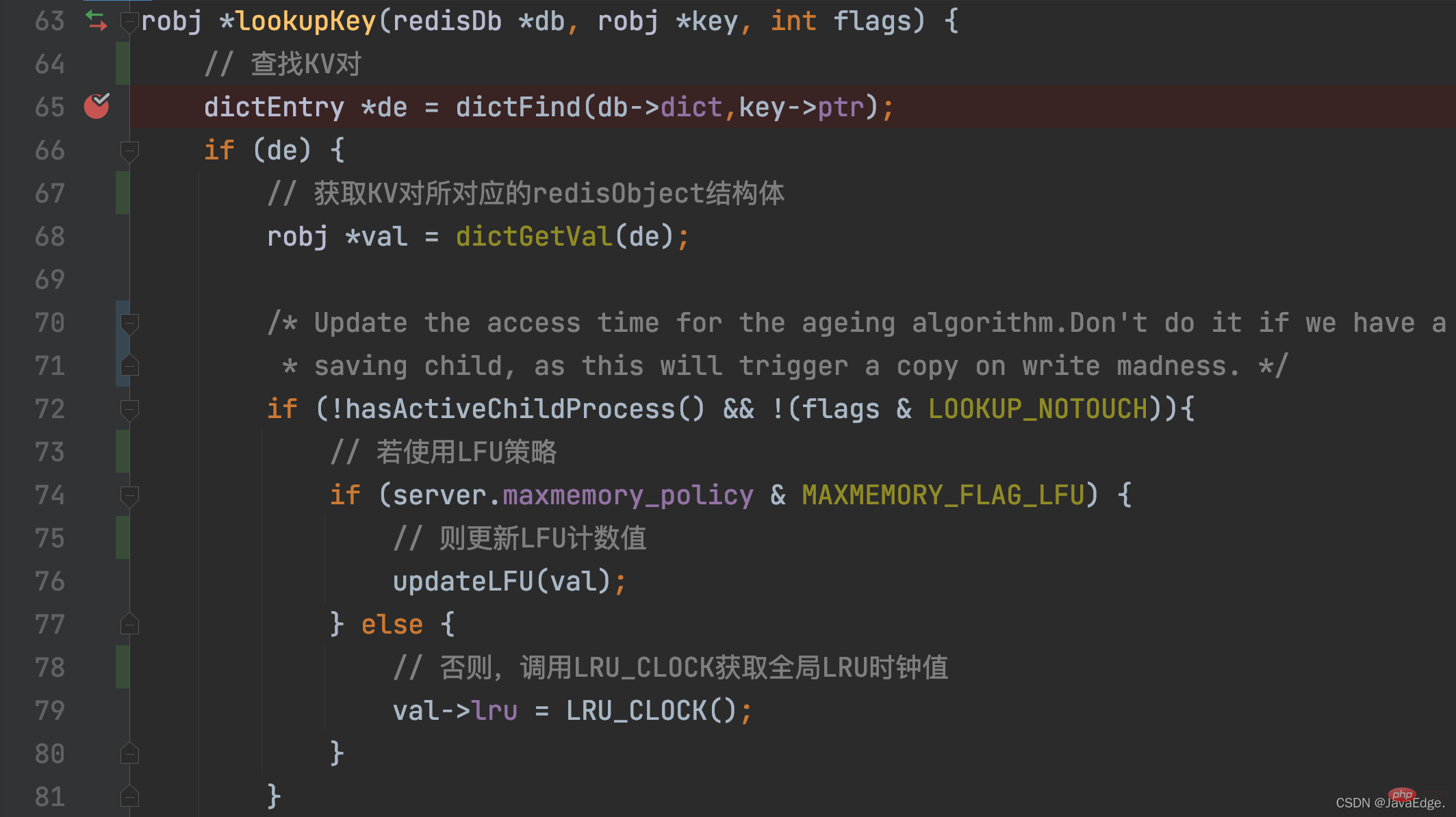

As long as a KV pair is accessed, its LRU clock value will be updated! When a KV pair is accessed, the access operation will eventually call lookupKey.

lookupKey will look up the KV pair to be accessed from the global hash table. If the KV pair exists, lookupKey will update the LRU clock value of the key-value pair, which is its access timestamp, based on the configuration value of maxmemory_policy.

When maxmemory_policy is not an LFU policy, lookupKey calls LRU_CLOCK to obtain the current global LRU clock value and assigns it to the lru variable in the redisObject of the key-value pair.

In this way, each KV pair gets a fresh access timestamp whenever it is accessed. But you may be curious: how are these access timestamps ultimately used by the approximate LRU algorithm to eliminate data?
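The create and lookup paths can be mimicked with a toy keyspace. This is a sketch, not the Redis implementation: `Db`, `RedisObject`, `create_object` and `lookup_key` are hypothetical Python names, the global clock is a plain attribute set by hand, and the LFU branch is deliberately left as a placeholder because its calculation is outside the scope of this article.

```python
LFU_POLICIES = {"allkeys-lfu", "volatile-lfu"}


class RedisObject:
    def __init__(self, value, lru):
        self.value = value
        self.lru = lru            # last-access timestamp in LRU clock ticks


class Db:
    """Hypothetical keyspace with a manually driven global LRU clock."""

    def __init__(self, policy="allkeys-lru"):
        self.policy = policy
        self.lruclock = 0         # stands in for the global LRU clock
        self.table = {}           # stands in for the global hash table

    def create_object(self, key, value):
        if self.policy in LFU_POLICIES:
            lru = None            # placeholder: LFU stores its own data here
        else:
            lru = self.lruclock   # creation counts as the first access
        obj = RedisObject(value, lru)
        self.table[key] = obj
        return obj

    def lookup_key(self, key):
        obj = self.table.get(key)
        if obj is not None and self.policy not in LFU_POLICIES:
            obj.lru = self.lruclock   # every access refreshes the timestamp
        return obj


db = Db(policy="allkeys-lru")
db.lruclock = 100
created = db.create_object("k", "v").lru
db.lruclock = 105
accessed = db.lookup_key("k").lru
print(created, accessed)
```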

2.3 Actual execution of approximate LRU algorithm

The reason why Redis implements approximate LRU is to reduce the overhead of memory resources and operation time.

2.3.1 When is algorithm execution triggered?

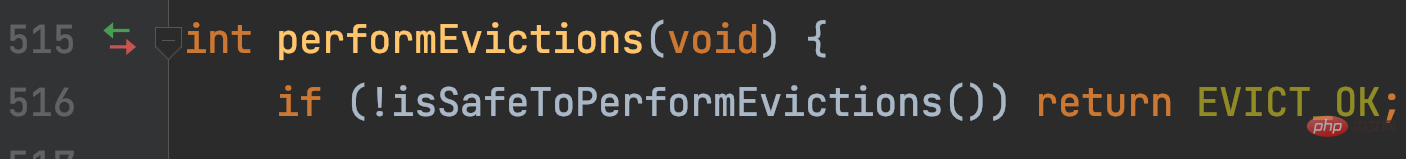

The main logic of approximate LRU is in performEvictions.

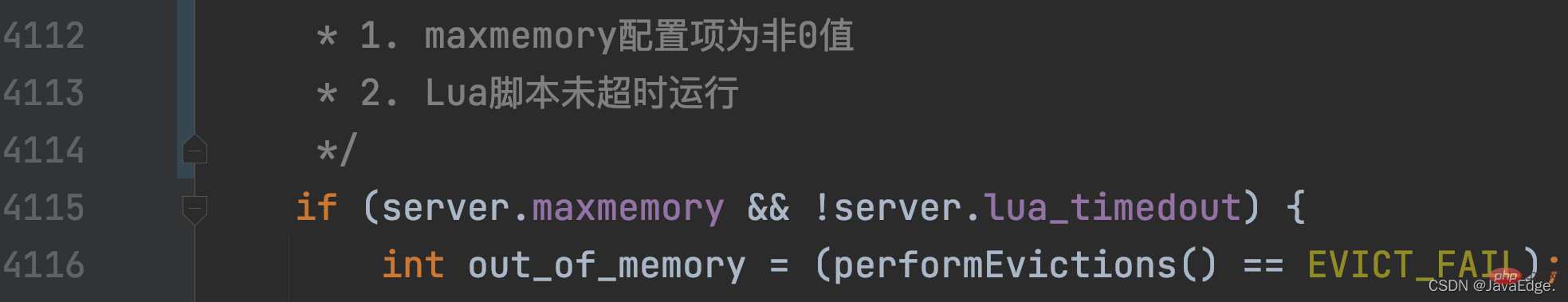

performEvictions is called by processCommand, and also by the time event handler evictionTimeProc.

Redis calls processCommand each time it processes a command:

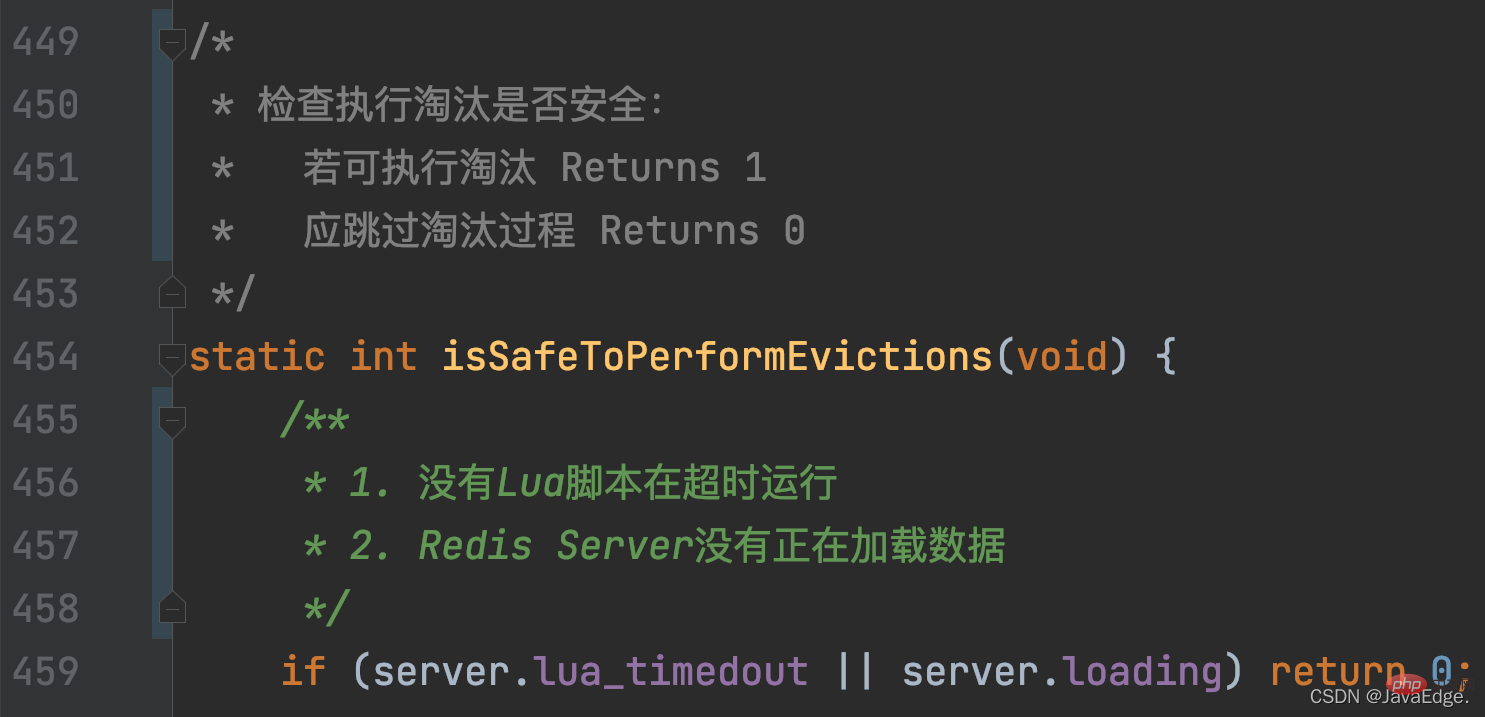

Then, isSafeToPerformEvictions will again judge whether to continue executing performEvictions based on the following conditions:

Once performEvictions is called and maxmemory-policy is set to allkeys-lru or volatile-lru, approximate LRU execution is triggered.

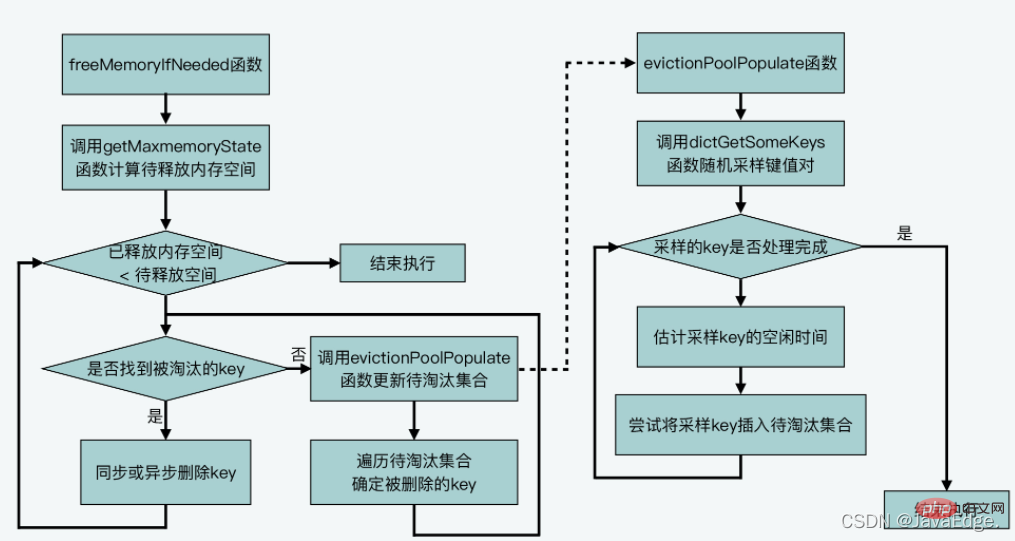

2.3.2 Approximate LRU specific execution process

The execution can be divided into the following steps:

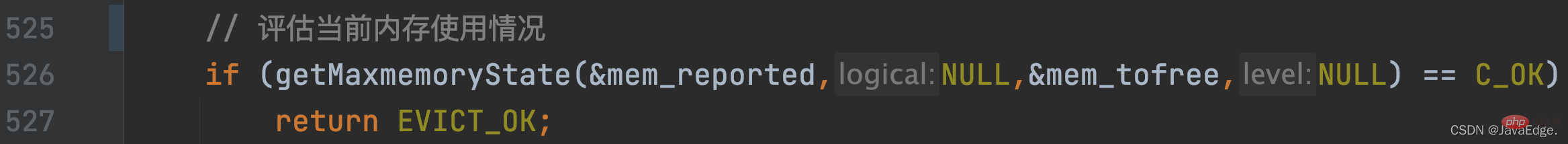

2.3.2.1 Determine the current memory usage

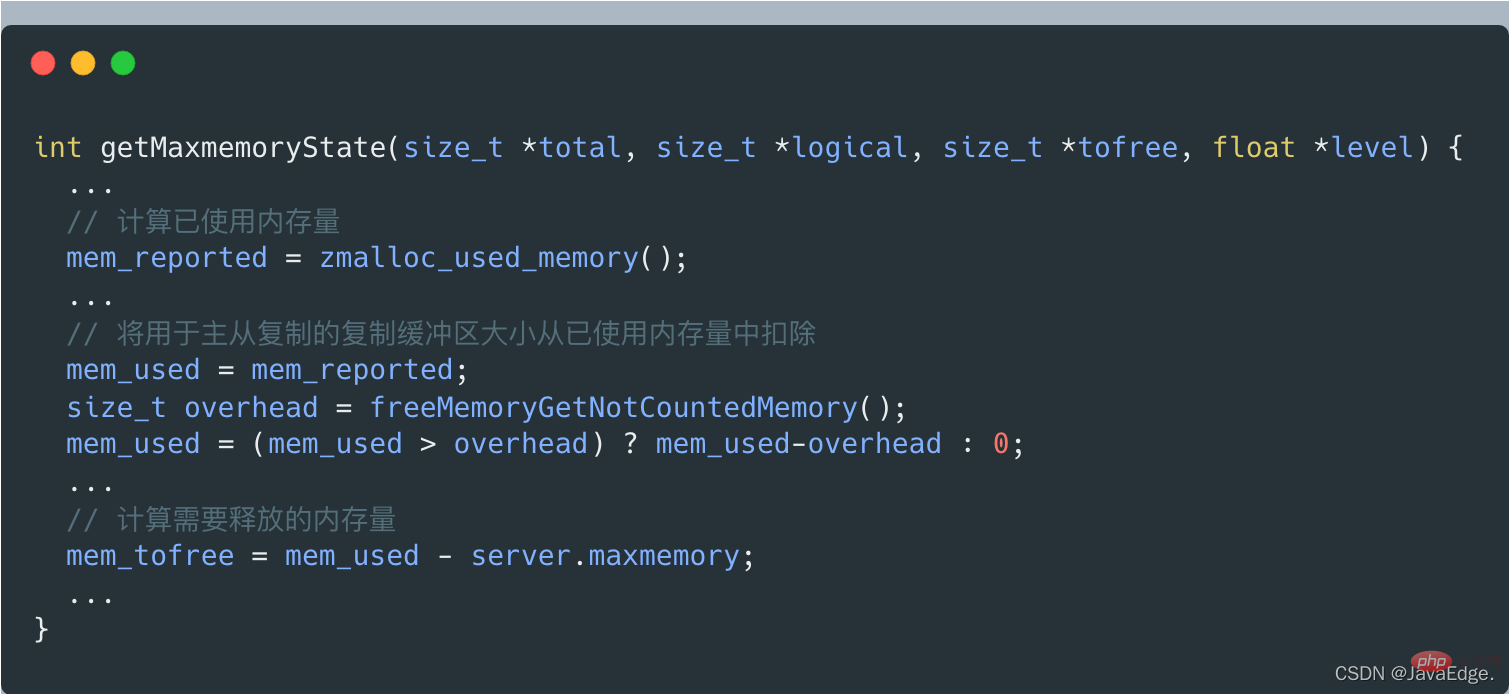

Call getMaxmemoryState to evaluate the current memory usage and determine whether the current memory capacity used by Redis Server exceeds the maxmemory configuration value.

If usage does not exceed maxmemory, getMaxmemoryState returns C_OK and performEvictions returns immediately.

When getMaxmemoryState evaluates the current memory usage, if it is found that the used memory exceeds maxmemory, it will calculate the amount of memory that needs to be released. The size of this released memory = the amount of used memory - maxmemory.

But the amount of used memory does not include the size of the replication buffers used for master-slave replication, which getMaxmemoryState obtains by calling freeMemoryGetNotCountedMemory.
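The arithmetic in this step is simple enough to write down directly. The function below is a hypothetical Python rendering of the check, with plain byte counts passed in as arguments; it assumes maxmemory is greater than 0 and is not the real getMaxmemoryState signature.

```python
def get_maxmemory_state(used_bytes, not_counted_bytes, maxmemory):
    """Return (over_limit, bytes_to_free) for the given memory figures.

    not_counted_bytes stands for memory, such as replication buffers,
    that is excluded before comparing against maxmemory.
    """
    counted = max(used_bytes - not_counted_bytes, 0)
    if counted <= maxmemory:
        return False, 0                    # within the limit: nothing to free
    return True, counted - maxmemory       # memory to release


# 120 bytes used, 10 of them not counted, limit 100: 10 bytes must be freed.
over_case = get_maxmemory_state(120, 10, 100)
# 105 bytes used, 10 not counted: 95 counted bytes fit under the limit.
ok_case = get_maxmemory_state(105, 10, 100)
print(over_case, ok_case)
```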

If the amount of memory currently used by the Server exceeds the maxmemory upper limit, performEvictions will execute a while loop to eliminate data and release memory.

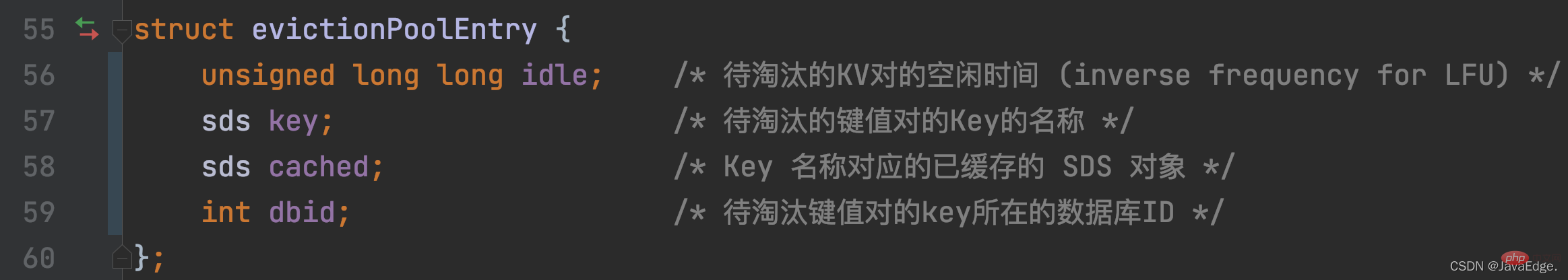

For data elimination, Redis defines the array EvictionPoolLRU to hold the candidate KV pairs to be eliminated. Its elements are evictionPoolEntry structures, which store the idle time (idle), the corresponding K, and other information about each candidate KV pair.

When Redis Server runs initServer during initialization, it calls evictionPoolAlloc to allocate memory for the EvictionPoolLRU array. The size of the array is determined by EVPOOL_SIZE, which by default holds 16 candidate KV pairs.

While performEvictions loops to eliminate data, it updates the set of candidate KV pairs to be eliminated, that is, the EvictionPoolLRU array.

2.3.2.2 Update the set of candidate KV pairs to be eliminated

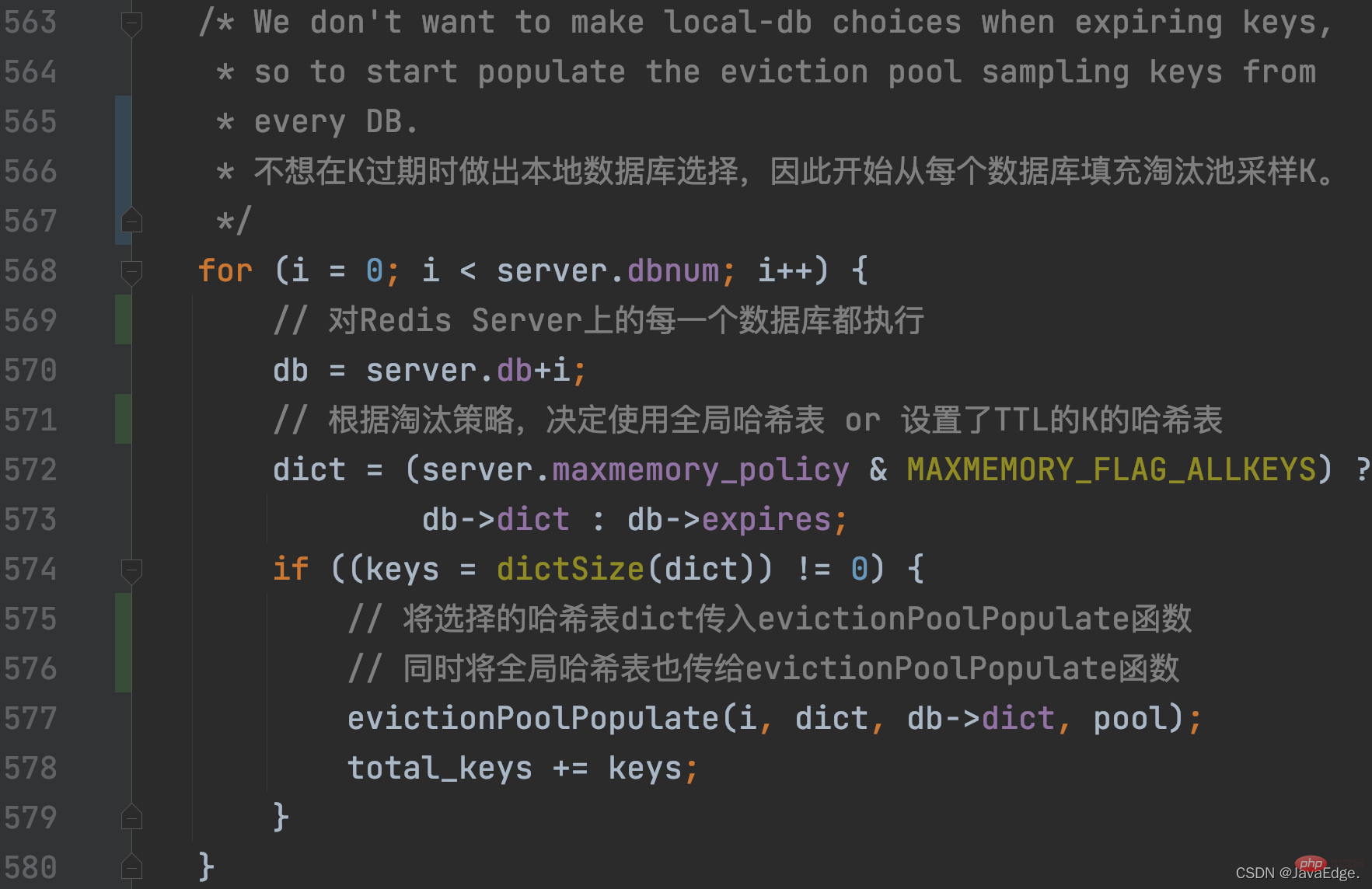

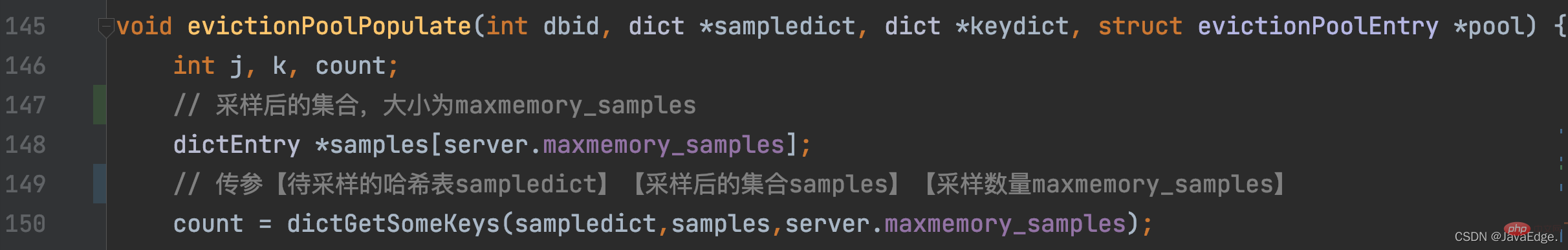

performEvictions calls evictionPoolPopulate, which first calls dictGetSomeKeys to randomly obtain a certain number of K from the hash table to be sampled:

- The hash table sampled by dictGetSomeKeys is determined by the maxmemory_policy configuration item. If maxmemory_policy is allkeys-lru, it is the global hash table of Redis Server, that is, all KV pairs are sampled. Otherwise, it is the hash table that holds the K with a TTL set

- The number of K sampled by dictGetSomeKeys is determined by the configuration item maxmemory-samples, which defaults to 5:

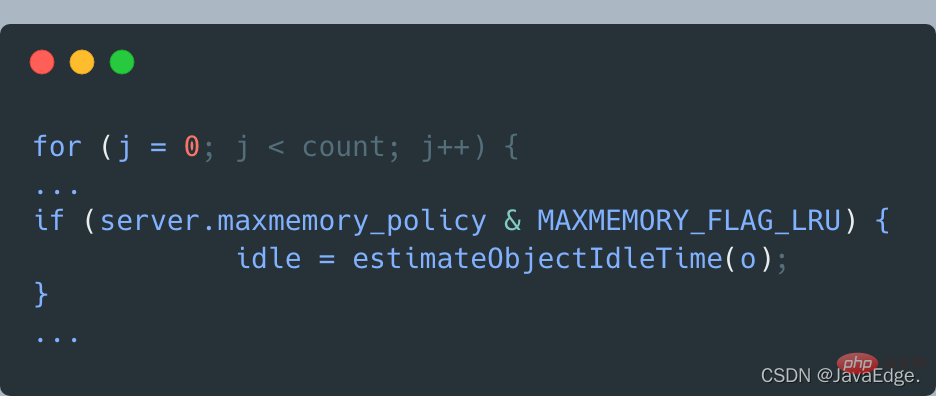

dictGetSomeKeys thus returns the set of sampled KV pairs. evictionPoolPopulate then loops over the actual number of sampled KV pairs (count), calling estimateObjectIdleTime to calculate the idle time of each KV pair in the sample.
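Idle time is the distance between the current global LRU clock and the value stored in the object's lru field. The following is a sketch of how that could be computed, not a copy of estimateObjectIdleTime: the function name and arguments are hypothetical, the constants are the assumed 24-bit width and 1000 ms resolution, and the wrap-around branch is an addition that follows from the clock being masked to 24 bits.

```python
LRU_BITS = 24
LRU_CLOCK_MAX = (1 << LRU_BITS) - 1
LRU_CLOCK_RESOLUTION = 1000            # assumed: milliseconds per clock tick


def estimate_idle_ms(current_clock, obj_lru):
    """Estimate how long an object has been idle, in milliseconds."""
    if current_clock >= obj_lru:
        return (current_clock - obj_lru) * LRU_CLOCK_RESOLUTION
    # The global clock has wrapped since the object was last touched:
    # count the ticks up to the maximum, then the ticks since the wrap.
    return (current_clock + (LRU_CLOCK_MAX - obj_lru)) * LRU_CLOCK_RESOLUTION


normal = estimate_idle_ms(current_clock=110, obj_lru=100)
after_wrap = estimate_idle_ms(current_clock=5, obj_lru=LRU_CLOCK_MAX - 5)
print(normal, after_wrap)
```

Because the clock only has one-second precision, the result is an estimate: a key touched within the current tick reports an idle time of 0.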

Then, evictionPoolPopulate traverses the set of candidate KV pairs to be eliminated, that is, the EvictionPoolLRU array, and tries to insert each sampled KV pair into it. The insertion succeeds if either of the following conditions holds:

- An empty slot, in which no KV pair has been inserted yet, can be found in the array

- A KV pair whose idle time is less than that of the sampled KV pair can be found in the array

After all sampled key-value pairs are processed, evictionPoolPopulate has finished updating the set of candidate key-value pairs to be eliminated.
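The two insertion conditions can be expressed compactly if the pool is kept as a list sorted by idle time. This is a simplified sketch rather than the array-shifting code in Redis: `pool_insert` is a hypothetical helper, pool entries are `(idle, key)` tuples, and the size of 16 comes from the EVPOOL_SIZE default mentioned earlier.

```python
import bisect

EVPOOL_SIZE = 16


def pool_insert(pool, key, idle):
    """Try to add a sampled key to the pool; return True if it was inserted.

    pool is a list of (idle, key) tuples kept in ascending order of idle
    time and never longer than EVPOOL_SIZE.
    """
    if len(pool) < EVPOOL_SIZE:
        # Condition 1: there is still an empty slot.
        bisect.insort(pool, (idle, key))
        return True
    if idle > pool[0][0]:
        # Condition 2: some entry is less idle than the sample. Drop the
        # least idle entry to make room and keep the pool sorted.
        pool.pop(0)
        bisect.insort(pool, (idle, key))
        return True
    return False


pool = []
for i in range(EVPOOL_SIZE):
    pool_insert(pool, f"k{i}", i * 10)        # idle times 0, 10, ..., 150
cold_inserted = pool_insert(pool, "cold", 500)
hot_inserted = pool_insert(pool, "hot", 5)
print(cold_inserted, hot_inserted, pool[0], pool[-1])
```

A long-idle key displaces the least idle candidate, while a recently used key is rejected once the pool is full, so over repeated samplings the pool drifts toward the coldest keys seen so far.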

Next, performEvictions begins to select the KV pair that will eventually be eliminated.

2.3.2.3 Select the eliminated KV pair and delete it

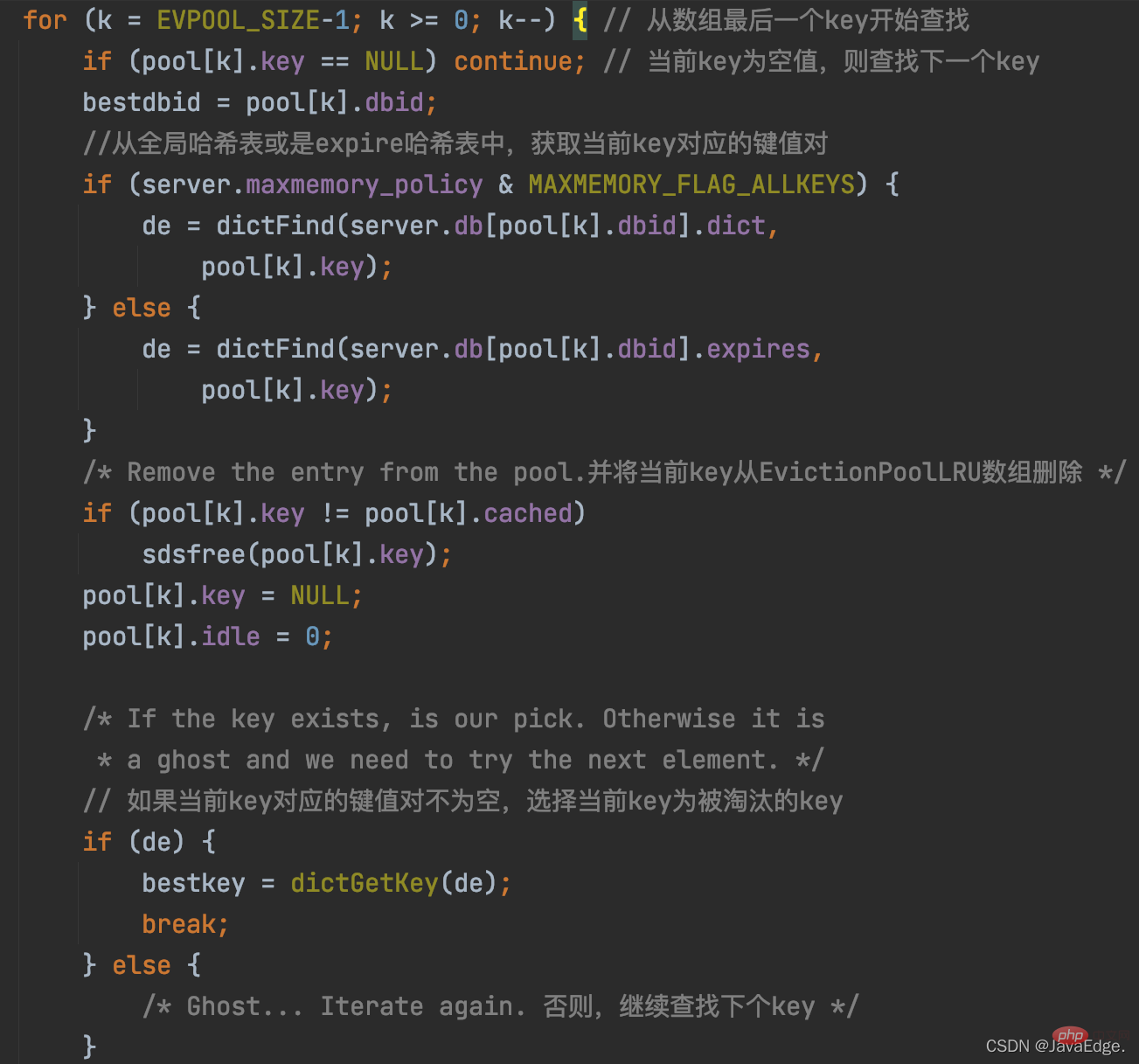

Because evictionPoolPopulate has already updated the EvictionPoolLRU array, and the K in the array are sorted in ascending order of idle time, performEvictions traverses the EvictionPoolLRU array once, starting from the last K in the array. If the selected K is not empty, it becomes the final K to be eliminated.

The execution logic of this process:

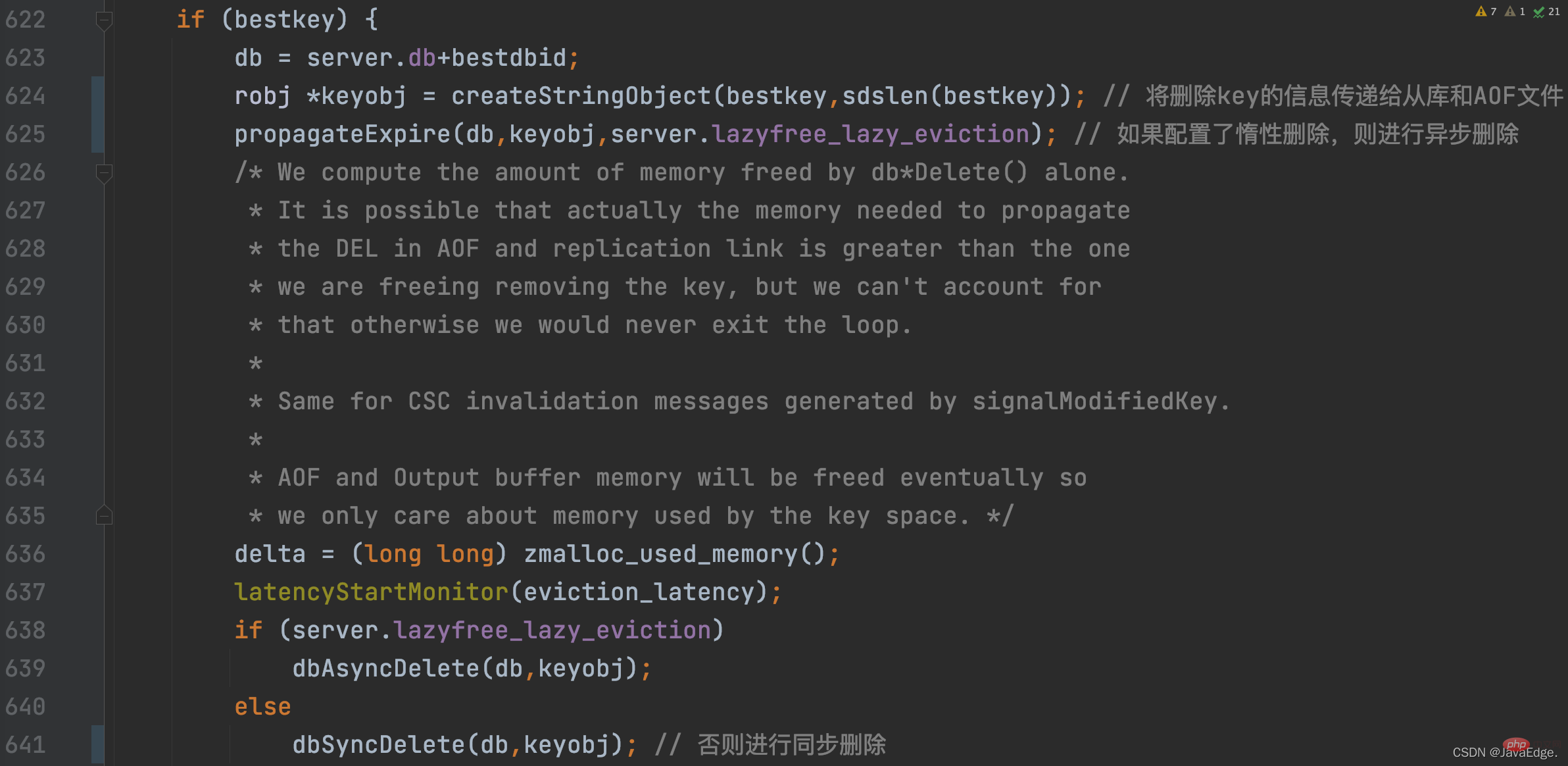

Once the K to be eliminated is selected, performEvictions deletes it either synchronously or asynchronously, depending on the Redis server's lazy deletion configuration.

At this point, performEvictions has eliminated one K. If the memory released so far is still less than the amount to be released, performEvictions repeats the process of updating the candidate set and selecting the final KV pair until the required amount has been released.
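Putting the pieces together, the whole loop can be sketched as follows. This is a toy model under stated assumptions, not the Redis implementation: the keyspace is a plain dict mapping each key to a hypothetical `(size_bytes, last_access)` pair, idle time is simply `now - last_access`, sampling uses Python's `random.Random.sample`, and deletion is always synchronous.

```python
import random

EVPOOL_SIZE = 16
MAXMEMORY_SAMPLES = 5


def perform_evictions(db, now, to_free, rng):
    """Evict keys from db until at least to_free bytes have been released.

    db maps key -> (size_bytes, last_access). Returns (freed, evicted_keys).
    """
    pool = []                      # (idle, key), ascending by idle time
    freed = 0
    evicted = []
    while freed < to_free and db:
        # Step 1: sample a few keys and refresh the candidate pool.
        for key in rng.sample(list(db), min(MAXMEMORY_SAMPLES, len(db))):
            idle = now - db[key][1]
            if any(k == key for _, k in pool):
                continue                       # already a candidate
            if len(pool) < EVPOOL_SIZE:
                pool.append((idle, key))       # empty slot available
            elif idle > pool[0][0]:
                pool[0] = (idle, key)          # replace the least idle entry
            else:
                continue
            pool.sort()
        # Step 2: the last entry has the longest idle time, so evict it.
        idle, key = pool.pop()
        size, _ = db.pop(key)
        freed += size
        evicted.append(key)
    return freed, evicted


db = {"a": (10, 100), "b": (10, 50), "c": (10, 90)}
freed, evicted = perform_evictions(db, now=200, to_free=15, rng=random.Random(0))
print(freed, evicted, list(db))
```

With only three keys every sample covers the whole keyspace, so the result matches strict LRU. On a large keyspace each round sees only a handful of keys, which is why the result is an approximation: the evicted key is the coldest among the candidates seen, not necessarily the coldest overall.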

performEvictions process:

The approximate LRU algorithm does not use a time-consuming and space-consuming linked list. Instead, it uses a fixed-size set of data to be eliminated and, each time, randomly selects some K to add to that set.

Finally, according to the length of idle time of K in the set to be eliminated, delete the K with the longest idle time.

Summary

From the basic principle of the LRU algorithm, we can see that a system implementing LRU strictly needs additional memory to store the LRU linked list, and at run time it also pays the overhead of the linked list operations.

The memory resources and performance of Redis are very important, so Redis implements the approximate LRU algorithm:

- First, a global LRU clock is maintained. When a KV pair is created, the global LRU clock value is taken as its access timestamp, and on each access the global LRU clock value is read again to update that timestamp

- Then, when Redis processes a command, performEvictions is called to determine whether memory needs to be freed. If used memory exceeds maxmemory, some KV pairs are randomly selected to form a candidate set, and the data with the oldest access timestamp is eliminated

There are always certain compromises between the basic principle of an algorithm and its actual implementation in a system. The resource and performance requirements of the system being developed need to be weighed as a whole, to avoid the resource and performance overhead of following the algorithm strictly.

The above is the detailed content of Completely master the implementation of Redis's LRU cache elimination algorithm. For more information, please follow other related articles on the PHP Chinese website!
