Take a look at these browser interview questions. How many can you answer correctly?


This article walks through common interview questions about browsers, each followed by an analysis of the answer. See how many you can get right.

1. What are the common browser kernels?

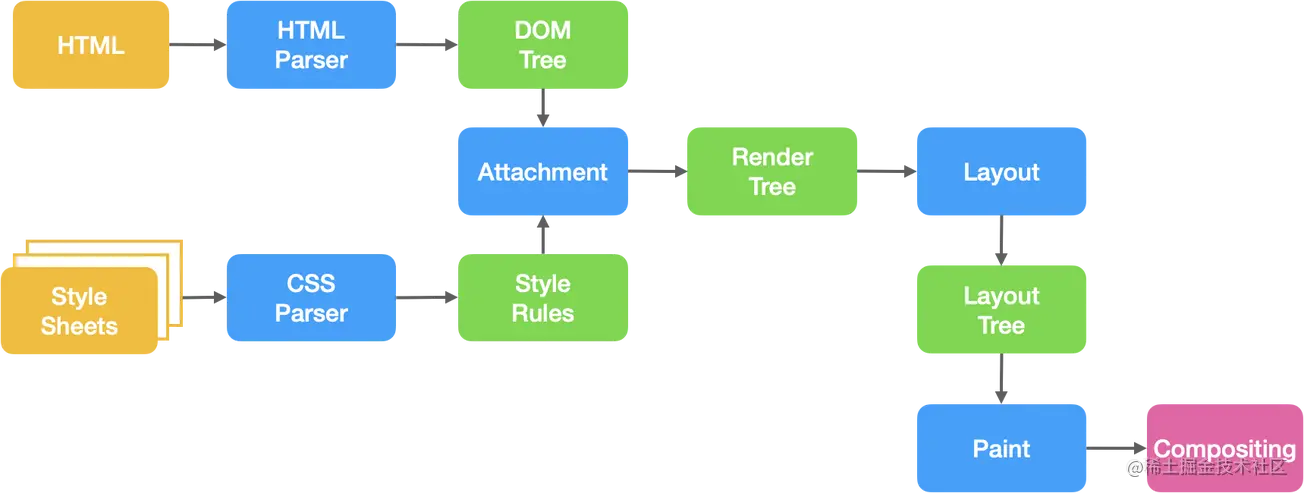

The browser core can be divided into two parts: the rendering engine and the JS engine. (Note: the "browser kernel" usually refers to the rendering engine.)

As JS engines have become more and more independent, the term kernel now refers only to the rendering engine, which requests a page's network resources, parses and lays them out, and presents the result to the user.

| Kernel (rendering engine) | JavaScript engine |
|---|---|
| Blink (Chrome 28+), WebKit (up to Chrome 27) | V8 |
| Gecko | SpiderMonkey |
| WebKit | JavaScriptCore |
| EdgeHTML | Chakra (for JavaScript) |
| Trident | Chakra (for JScript) |
| Presto -> Blink | Linear A (4.0-6.1) / Linear B (7.0-9.2) / Futhark (9.5-10.2) / Carakan (10.5-) |
| - | V8 |

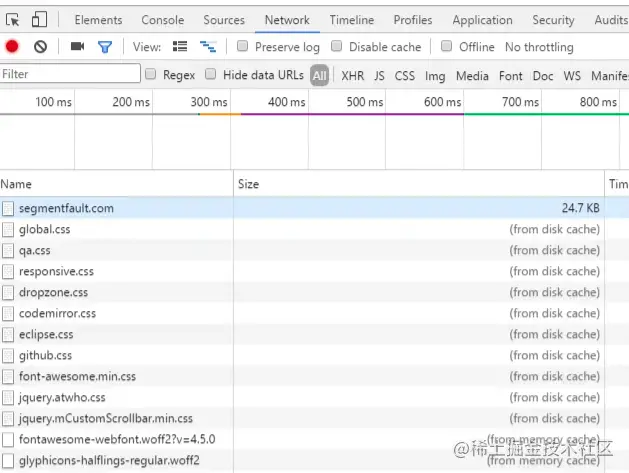

| - | Memory cache | Disk cache |
|---|---|---|
| Similarity | Can only store derived resource files | Can only store derived resource files |
| Difference | Data is cleared when the process exits | Data is not cleared when the process exits |
| Stored resources | Generally scripts, fonts, and images are stored in memory | Generally non-script resources, such as CSS, are stored on disk |

Browser cache classification

- Strong cache

- Negotiation cache

When the browser requests a resource from the server, it first checks whether the strong cache is hit. If not, it then checks whether the negotiation cache is hit.

Strong cache

When the browser loads a resource, it first uses the headers of the locally cached copy to decide whether the strong cache is hit. If it is, the browser uses the cached resource directly without sending a request to the server. (The headers in question are Expires and Cache-Control.)

Expires

Defined in HTTP/1.0. Its value is an absolute time expressed as a GMT-format string, such as Expires: Mon, 18 Oct 2066 23:59:59 GMT. This time is the expiration time of the resource; before it, the cache is hit. The method has an obvious shortcoming: because the expiration time is absolute, a large clock difference between the server and the client causes cache confusion. For this reason HTTP/1.1 introduced Cache-Control, which is now preferred.

Cache-Control

Defined in HTTP/1.1. Freshness is judged mainly by the max-age value of this field, which is a relative time. For example, Cache-Control: max-age=3600 means the resource is valid for 3600 seconds. Besides max-age, Cache-Control has the following commonly used values:

no-cache: The cached copy may be stored, but the browser must send a request to the server to confirm it is still valid before using it (negotiation cache).

no-store: Caching is disabled, and the data is requested again every time.

public: The response can be cached by everyone, including end users and intermediate proxy servers such as CDNs.

private: The response can be cached only by the end user's browser, not by intermediate cache servers such as CDNs.

Cache-Control and Expires can be enabled at the same time in the server configuration. When enabled at the same time, Cache-Control has higher priority.
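The strong-cache decision described above can be sketched roughly as follows. This is a simplified illustration, not how any particular browser implements it: the function name `isFresh`, the lowercase header keys, and the `storedAt`/`now` timestamps (epoch milliseconds) are assumptions made for the example, and real caches also take headers such as Age and Date into account.

```javascript
// Hypothetical helper: decides whether a stored response can be reused
// without contacting the server (a strong-cache hit).
// `headers` uses lowercase header names; `storedAt` and `now` are epoch ms.
function isFresh(headers, storedAt, now) {
  const cacheControl = headers['cache-control'] || '';
  const directives = cacheControl
    .toLowerCase()
    .split(',')
    .map((d) => d.trim());

  // no-store forbids caching; no-cache forces revalidation before reuse.
  if (directives.includes('no-store') || directives.includes('no-cache')) {
    return false;
  }

  // max-age is relative to the time the response was stored,
  // and it takes priority over Expires when both are present.
  const maxAge = directives.find((d) => d.startsWith('max-age='));
  if (maxAge) {
    const seconds = Number(maxAge.slice('max-age='.length));
    return Number.isFinite(seconds) && now - storedAt < seconds * 1000;
  }

  // Fall back to the absolute Expires time when max-age is absent.
  if (headers.expires) {
    const expiresAt = Date.parse(headers.expires);
    return !Number.isNaN(expiresAt) && now < expiresAt;
  }

  return false;
}
```

Passing the clock in as `now` keeps the function deterministic, which also makes the clock-skew weakness of Expires easy to reproduce in a test.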

Negotiation Cache

When the strong cache misses, the browser sends a request to the server, and the server uses the information in the request headers to decide whether the negotiation cache is hit. If it is, the server returns 304, telling the browser that the resource has not changed and the local cache can be used. (The headers in question are Last-Modified/If-Modified-Since and ETag/If-None-Match.)

Last-Modified/If-Modified-Since

ETag/If-None-Match

Last-Modified and ETag can be used together. The server verifies the ETag first. Under the HTTP specification, If-None-Match takes precedence over If-Modified-Since, although some servers also compare Last-Modified before deciding whether to return 304.
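A server-side check for the negotiation cache might look like the sketch below. It assumes that If-None-Match, when present, decides the outcome on its own (the precedence given in the HTTP specification); a server that also compares Last-Modified would need an extra check. The function name `revalidate` and the shape of `resource` are made up for the example, and weak ETags (the `W/` prefix) are not handled.

```javascript
// Hypothetical helper: returns the status code a server might send
// for a conditional GET. `resource` is assumed to look like
// { etag: '"v1"', lastModified: Date }.
function revalidate(requestHeaders, resource) {
  const ifNoneMatch = requestHeaders['if-none-match'];
  const ifModifiedSince = requestHeaders['if-modified-since'];

  // When If-None-Match is present, it alone decides the result.
  if (ifNoneMatch !== undefined) {
    const tags = ifNoneMatch.split(',').map((t) => t.trim());
    const matched = tags.includes('*') || tags.includes(resource.etag);
    return matched ? 304 : 200;
  }

  // Otherwise compare timestamps at one-second resolution,
  // because HTTP dates carry no milliseconds.
  if (ifModifiedSince !== undefined) {
    const since = Date.parse(ifModifiedSince);
    if (!Number.isNaN(since)) {
      const modified =
        Math.floor(resource.lastModified.getTime() / 1000) * 1000;
      return modified <= since ? 304 : 200;
    }
  }

  // No usable validator: send the full resource.
  return 200;
}
```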

Summary

When the browser accesses an already visited resource, its steps are:

1. First check whether the strong cache is hit. If it is, use the cache directly.
2. If the strong cache is not hit, send a request to the server to check whether the negotiation cache is hit.
3. If the negotiation cache is hit, the server returns 304 to tell the browser that the local cache can be used.
4. If the negotiation cache is not hit, the server returns the new resource to the browser.

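13. What is the browser's same-origin policy, and what is cross-origin?

Same-origin policy

The same-origin policy is a self-protection mechanism of the browser. Two URLs have the same origin when their protocol, domain name, and port are all the same.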
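As a quick illustration of that definition, the comparison can be written with the standard `URL` class. The function name `isSameOrigin` is just a label chosen for this sketch.

```javascript
// Two URLs share an origin when protocol, host, and port all match.
// The URL class normalizes default ports (80 for http, 443 for https)
// to an empty string, so "http://a.com" and "http://a.com:80" compare equal.
function isSameOrigin(a, b) {
  const first = new URL(a);
  const second = new URL(b);
  return (
    first.protocol === second.protocol &&
    first.hostname === second.hostname &&
    first.port === second.port
  );
}

console.log(isSameOrigin('http://example.com/a', 'http://example.com/b'));
console.log(isSameOrigin('http://example.com', 'https://example.com'));
```

The first call compares two paths on one origin, while the second differs in protocol, so only the first pair counts as same-origin.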

Most content in the browser is subject to the same-origin policy, but the following three tags are not restricted:

    <img src="..." /> <link href="..." /> <script src="..."></script>

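Cross-origin

Cross-origin refers to a page requesting a resource whose origin differs from its own. The browser's same-origin policy stops the page's scripts from reading the response.

You may wonder whether a cross-origin request is actually sent to the server.

In fact, a cross-origin request can reach the server, and the server can accept it and return a result normally; the result is simply intercepted by the browser.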

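Cross-origin solutions (a few common ones)

JSONP

JSONP relies on the fact that the script tag is not restricted by the browser's same-origin policy, so it can receive data sent from another origin. It requires server-side support.

Pros and cons:

Compatibility is good, so it can solve cross-origin data access in mainstream browsers. The drawbacks are that it supports only GET requests, which is limiting, and that it is unsafe and may be exposed to XSS attacks.

Approach: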

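- Declare a callback function. Its name (for example, show) is passed as a parameter value to the server that provides the cross-origin data, and its parameter is the target data (the data returned by the server).
- Create a <script> tag, assign the cross-origin API address to the script's src, and pass the function name to the server in that address (as a query parameter: ?callback=show).
- When the server receives the request, it handles it specially: it concatenates the function name passed in and the data it wants to return into a string. For example, if the function name passed in is show, the string it prepares is show('南玖').
- Finally, the server returns the prepared string to the client over HTTP, and the client runs the previously declared callback function (show) to process the returned data.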

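    // front end
    function jsonp({ url, params, callback }) {
      return new Promise((resolve, reject) => {
        const script = document.createElement('script')
        // Remove the script tag and the global callback once the request settles
        const cleanup = () => {
          document.body.removeChild(script)
          delete window[callback]
        }
        // The server's response calls this global function with the data
        window[callback] = function(data) {
          cleanup()
          resolve(data)
        }
        // Reject if the script fails to load
        script.onerror = () => {
          cleanup()
          reject(new Error(`JSONP request to ${url} failed`))
        }
        params = { ...params, callback } // wd=wxgongzhonghao&callback=show
        const arrs = []
        for (const key in params) {
          arrs.push(`${encodeURIComponent(key)}=${encodeURIComponent(params[key])}`)
        }
        script.src = `${url}?${arrs.join('&')}`
        document.body.appendChild(script)
      })
    }

    jsonp({
      url: 'http://localhost:3000/say',
      params: { wd: 'wxgongzhonghao' },
      callback: 'show'
    }).then(data => {
      console.log(data)
    })

    // server, using the express framework
    const express = require('express')
    const app = express()

    app.get('/say', function(req, res) {
      const { wd, callback } = req.query
      console.log(wd) // wxgongzhonghao
      console.log(callback) // show
      // Respond with a call to the requested callback, wrapping the data
      res.end(`${callback}('关注前端南玖')`)
    })

    app.listen(3000)

The code above is equivalent to requesting data from http://localhost:3000/say?wd=wxgongzhonghao&callback=show. The server returns show('关注前端南玖'), which runs the show() function, and '关注前端南玖' is printed.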

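Cross-Origin Resource Sharing (CORS)

CORS (Cross-Origin Resource Sharing) defines how the browser and the server should communicate when a cross-origin resource is accessed. The basic idea behind CORS is to use custom HTTP headers to let the browser and the server communicate, and thereby decide whether a request or response should succeed or fail.

CORS requires support from both the browser and the back end. IE 8 and 9 need XDomainRequest to implement it.

The browser carries out CORS communication automatically, so the key to CORS is the back end. Once the back end implements CORS, cross-origin access works.

The server enables CORS by setting Access-Control-Allow-Origin. This header indicates which origins may access the resource; setting it to the wildcard (*) means every site may access the resource.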
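One way a back end might choose the value of Access-Control-Allow-Origin is sketched below. The allow-list contents and the function name `corsHeaders` are assumptions for illustration; the returned object would still have to be written to the response by whatever server framework is in use.

```javascript
// Hypothetical allow-list; replace with the origins your service trusts.
const ALLOWED_ORIGINS = new Set(['http://localhost:8080']);

// Returns the CORS response headers for the value of a request's Origin header.
function corsHeaders(origin, allowed = ALLOWED_ORIGINS) {
  if (!origin || !allowed.has(origin)) {
    // No CORS headers: the browser will block the cross-origin read.
    return {};
  }
  return {
    'Access-Control-Allow-Origin': origin,
    // Tell caches that the response varies with the request's Origin.
    Vary: 'Origin',
  };
}
```

Echoing back a specific trusted origin is a narrower choice than the wildcard, which suits APIs that should not be readable from every site.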

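Although configuring CORS has little to do with the front end, solving cross-origin problems this way leads to two kinds of requests: simple requests and complex requests.

Simple request: (a request that meets both of the following conditions)

1. The request method is one of the following three:

- GET
- POST
- HEAD

2. The Content-Type is one of the following three:

- text/plain
- multipart/form-data
- application/x-www-form-urlencoded

Complex request:

Any request that is not a simple request is a complex request. For a complex CORS request, the browser adds an HTTP query request before the actual request, called the preflight request. It uses the OPTIONS method, and its response tells the browser whether the server allows the cross-origin request.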
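The two conditions above can be turned into a small classifier. This sketch covers only the method and Content-Type rules listed here; browsers also look at which other request headers have been set, which is left out. The name `needsPreflight` is hypothetical.

```javascript
const SIMPLE_METHODS = ['GET', 'POST', 'HEAD'];
const SIMPLE_CONTENT_TYPES = [
  'text/plain',
  'multipart/form-data',
  'application/x-www-form-urlencoded',
];

// Returns true when the request would count as a "complex" request
// under the two conditions above. `contentType` may be undefined
// when the request sends no Content-Type header.
function needsPreflight(method, contentType) {
  if (!SIMPLE_METHODS.includes(method.toUpperCase())) return true;
  if (contentType === undefined) return false;
  // Ignore parameters such as "; charset=utf-8".
  const mediaType = contentType.split(';')[0].trim().toLowerCase();
  return !SIMPLE_CONTENT_TYPES.includes(mediaType);
}
```

A JSON POST is the case most front-end developers run into: `needsPreflight('POST', 'application/json')` is true, so the browser sends an OPTIONS request first.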

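Nginx reverse proxy

The principle of an Nginx reverse proxy is simple: every client request goes through nginx, which acts as a proxy server and forwards the request to the back end. Because the browser then sees the page and the API as coming from the same origin, the same-origin policy no longer gets in the way.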

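14. What is an XSS attack?

What is XSS?

XSS stands for Cross Site Scripting; it is abbreviated as XSS to distinguish it from CSS. XSS is a technique in which an attacker injects malicious scripts into a page and uses them to attack users when they browse the page.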

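What can XSS do?

- Steal cookies
- Monitor user behavior, for example sending an account and password directly to the attacker's server after the user enters them
- Generate floating ads in the page
- Modify the DOM to forge a login form

Types of XSS

- Stored XSS
- Reflected XSS
- DOM-based XSS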

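How to prevent XSS attacks?

Filter or escape input scripts

Filter or escape the information that users enter, so that user input cannot be executed when the HTML is parsed.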
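A minimal escaping function, as one possible reading of "escape user input", could look like this. It is a sketch for text placed inside HTML element content or quoted attributes; input that ends up inside script blocks or URLs needs different handling. The name `escapeHtml` is an assumption for the example.

```javascript
// Characters that have special meaning in HTML, mapped to their entities.
const HTML_ESCAPES = {
  '&': '&amp;',
  '<': '&lt;',
  '>': '&gt;',
  '"': '&quot;',
  "'": '&#39;',
};

// Replaces every special character so the browser renders it as text
// instead of parsing it as markup.
function escapeHtml(input) {
  return String(input).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

console.log(escapeHtml('<script>alert("x")</script>'));
// prints: &lt;script&gt;alert(&quot;x&quot;)&lt;/script&gt;
```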

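Use CSP

This security policy (Content Security Policy) is implemented through an HTTP header called Content-Security-Policy. Its core idea is that the server decides which resources the browser may load.

- Restrict loading resource files from other domains, so that even if an attacker inserts a JavaScript file, the file cannot be loaded;
- Forbid submitting data to third-party domains, so that user data is not leaked;
- Provide a reporting mechanism, which helps us discover XSS attacks promptly;
- Forbid executing inline scripts and unauthorized scripts.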
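The points above map onto CSP directives roughly as in the following sketch, which builds a header value from an object. The helper `buildCsp` and the `/csp-report` endpoint are hypothetical; `default-src`, `script-src`, `form-action`, and `report-uri` are standard directive names, though `report-uri` is the older reporting directive and newer browsers also support `report-to`.

```javascript
// Joins a { directive: [sources] } object into a Content-Security-Policy value.
function buildCsp(directives) {
  return Object.entries(directives)
    .map(([name, sources]) => `${name} ${sources.join(' ')}`)
    .join('; ');
}

const policy = buildCsp({
  // Only load resources from the page's own origin.
  'default-src': ["'self'"],
  // Without 'unsafe-inline', inline scripts are not executed.
  'script-src': ["'self'"],
  // Forms may only submit back to the same origin.
  'form-action': ["'self'"],
  // Hypothetical endpoint that collects violation reports.
  'report-uri': ['/csp-report'],
});

console.log(policy);
// prints: default-src 'self'; script-src 'self'; form-action 'self'; report-uri /csp-report
```

The server would send this string as the value of the Content-Security-Policy response header.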

Use HttpOnly

Because many XSS attacks aim to steal cookies, the HttpOnly attribute can also be used to protect cookies. With it, JavaScript cannot read the cookie's value, which mitigates cookie theft through XSS.

A server can mark certain cookies as HttpOnly through the HTTP response header. Below is part of the HTTP response header returned when opening Google:

set-cookie: NID=189=M8l6-z41asXtm2uEwcOC5oh9djkffOMhWqQrlnCtOI; expires=Sat, 18-Apr-2020 06:52:22 GMT; path=/; domain=.google.com; HttpOnly

For untrusted input, the input length can also be limited.

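15. What is a CSRF attack?

What is a CSRF attack?

CSRF stands for Cross-site request forgery. The attacker lures the victim to a third-party website, which then sends a cross-site request to the attacked website. The request uses the login credentials the victim has already obtained on the attacked website to bypass the back end's user verification, so the attacker can impersonate the user and perform an operation on the attacked website. In short, a CSRF attack uses the user's login state to do harm through a third-party site.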

Common attack types

1. GET-type CSRF

A GET-type CSRF is very simple and usually needs only one HTTP request:

    <img src="http://bank.example/withdraw?amount=10000&for=hacker" >

After the victim visits a page containing this img, the browser automatically sends an HTTP request to http://bank.example/withdraw?amount=10000&for=hacker. bank.example then receives a cross-origin request that carries the victim's login information.

2. POST-type CSRF

This type of CSRF usually uses an automatically submitted form, such as:

    <form action="http://bank.example/withdraw" method="POST">
      <input type="hidden" name="account" value="xiaoming" />
      <input type="hidden" name="amount" value="10000" />
      <input type="hidden" name="for" value="hacker" />
    </form>
    <script> document.forms[0].submit(); </script>

After the page is visited, the form is submitted automatically, which simulates the user completing a POST operation.

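3. Link-type CSRF

Link-type CSRF is less common. Unlike the other two types, where the user is attacked as soon as the page opens, this type requires the user to click a link. The malicious link is usually embedded in an image posted on a forum, or presented as an advertisement, and the attacker typically uses exaggerated wording to trick the user into clicking, for example:

    <a href="http://test.com/csrf/withdraw.php?amount=1000&for=hacker" target="_blank"> Breaking news!! </a>

Because the user has previously logged in to trusted website A and the login state is saved, the attack succeeds as soon as the user visits the PHP page above.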

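Characteristics of CSRF

- The attack is generally launched from a third-party website rather than the attacked website. The attacked website cannot prevent the attack from happening.
- The attack uses the victim's login credentials on the attacked website to submit operations as the victim; it does not steal data directly.
- Throughout the process the attacker cannot obtain the victim's login credentials; they are only "borrowed".
- Cross-site requests can be made in many ways: image URLs, hyperlinks, CORS, form submissions, and so on. Some of them can be embedded directly in third-party forums and articles, which makes them hard to trace.

CSRF is usually cross-origin, because external domains are usually easier for attackers to control. But if the site's own domain has features that are easy to exploit, such as forums and comment sections that allow images and links, the attack can be carried out directly within the same domain, and such attacks are even more dangerous.

Protection strategies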

Attackers can only use the victim's cookie to gain the server's trust; they cannot obtain the cookie or see its contents. In addition, because of the browser's same-origin policy, attackers cannot parse the results returned by the server.

This tells us that what needs protecting are services that directly change data; services that only read data do not need CSRF protection. The key to protection is to put information in the request that attackers cannot forge.

Same-origin detection

Since most CSRF attacks come from third-party websites, we can simply forbid external domains (or untrusted domains) from making requests to us.

The question is how to determine whether a request comes from an external domain. In the HTTP protocol, each asynchronous request carries two headers that mark the source domain:

- Origin header
- Referer header

Use the Origin Header to determine the source domain name

In some CSRF-related requests, the request headers carry the Origin field, which contains the requesting domain (without path or query). If Origin is present, its value can be used directly to confirm the source domain. However, Origin is absent in the following two cases:

- IE 11 same-origin policy: IE 11 does not add the Origin header to cross-site CORS requests, so the Referer header remains the only identifier. The root cause is that IE 11 defines same origin differently from other browsers. There are two main differences; see MDN Same-origin_policy#IE_Exceptions.
- 302 redirects: the Origin is not included in the redirected request after a 302 redirect, because the Origin may be considered sensitive information from another source. A 302 redirect points to a URL on a new server, so the browser does not want to leak the Origin to that server.

Use the Referer Header to determine the source domain name

According to the HTTP protocol, there is a field called Referer in the HTTP header, which records the source address of the HTTP request. For Ajax requests and resource requests such as images and scripts, the Referer is the address of the page that initiated the request. For page navigations, the Referer is the address of the previous page in the history. We can therefore use the origin part of the URL in the Referer to learn the source domain of the request.

This method is not foolproof. The Referer value is provided by the browser. Although the HTTP protocol has clear requirements, browsers may implement Referer differently, and there is no guarantee that a browser has no security holes of its own. Verifying the Referer value relies on a third party (the browser) for security, which in theory is not very safe. In some cases, attackers can hide or even modify the Referer of their requests.
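Putting the two headers together, a server-side source check might be sketched as follows. The trusted origin `https://bank.example`, the function names, and the lowercase header keys are assumptions for the example. Rejecting requests that carry neither header is one possible policy, and given the caveats above this check is best treated as one layer of defense rather than the only one.

```javascript
// Hypothetical allow-list of origins trusted to send state-changing requests.
const TRUSTED_ORIGINS = new Set(['https://bank.example']);

// Prefer the Origin header; fall back to the origin part of Referer.
function requestOrigin(headers) {
  if (headers.origin) return headers.origin;
  if (headers.referer) {
    try {
      return new URL(headers.referer).origin;
    } catch (err) {
      return null; // malformed Referer
    }
  }
  return null;
}

// Returns true only when the request's source is known and trusted.
function isTrustedSource(headers, trusted = TRUSTED_ORIGINS) {
  const origin = requestOrigin(headers);
  return origin !== null && trusted.has(origin);
}
```

For example, `isTrustedSource({ referer: 'https://evil.example/page' })` is false, while a request whose Referer is a page on `https://bank.example` passes.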

In 2014, the W3C's Web Application Security Working Group released a draft of Referrer Policy, which specifies in detail how browsers should send the Referer. Most modern browsers now support it, so we can flexibly control the Referer policy of our website. The new version of Referrer Policy defines five policies: No Referrer, No Referrer When Downgrade, Origin Only, Origin When Cross-origin, and Unsafe URL. The three earlier policies (never, default, and always) were renamed in the new standard. The correspondence is as follows:

| Attribute value (new) | Attribute value (old) |
|---|---|
| no-referrer | never |
| no-referrer-when-downgrade | default |
| (same or strict) origin | origin |
| (strict) origin-when-cross-origin | - |
| unsafe-url | always |
