Practical sharing: Use nodejs to crawl and download more than 10,000 images


This article shares a practical Node.js exercise: how the author used Node.js to crawl and download more than 10,000 wallpaper images. I hope it will be helpful to everyone!

Hello everyone, I am Xiaoma. Why did I need to download so many pictures? A few days ago I used uni-app and uniCloud to deploy a wallpaper mini program for free, and I needed some resources to fill the mini program with content.

Crawling pictures

First, initialize the project and install axios and cheerio:

npm init -y && npm i axios cheerio

axios is used to fetch the web page content. cheerio provides a jQuery-style API on the server side; we use it to read the image addresses from the DOM.

const axios = require('axios')
const cheerio = require('cheerio')

// Fetch a page and collect the src of the first <img> inside each container element
async function getImageUrl(target_url, containerElement) {
  const res = await axios.get(target_url)
  const html = res.data
  const $ = cheerio.load(html)
  const result_list = []
  // As in jQuery, each() passes (index, element) to the callback
  $(containerElement).each((index, element) => {
    result_list.push($(element).find('img').attr('src'))
  })
  return result_list
}

This function allows us to obtain the image URLs in the page. Next, we need to download the images from those URLs.
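The src values collected this way are not always ready to download: an attribute can be missing, and many pages use relative or protocol-relative paths. As a minimal sketch (normalizeImageUrls and the example.com addresses are hypothetical, used only for illustration), the built-in URL class can resolve each value against the page address and drop duplicates:

```javascript
// Hypothetical helper: turn raw src attribute values into unique absolute URLs
function normalizeImageUrls(srcList, pageUrl) {
  const seen = new Set()
  for (const src of srcList) {
    if (!src) continue // attr('src') yields undefined when the attribute is missing
    try {
      // Resolves relative and protocol-relative values against the page URL
      seen.add(new URL(src, pageUrl).href)
    } catch {
      // Ignore values that cannot be parsed as a URL
    }
  }
  return [...seen]
}

// Illustrative input only; these addresses are made up
const urls = normalizeImageUrls(
  ['/img/a.jpg', undefined, 'https://cdn.example.com/b.jpg', '/img/a.jpg', '//cdn.example.com/c.jpg'],
  'https://example.com/gallery/page1.html'
)
console.log(urls)
```

The resulting absolute URLs can then be handed to whichever download method is used.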

How to use nodejs to download files

Downloading files with Node.js can be done using built-in modules or third-party libraries.

Method 1: Use the built-in modules 'https' and 'fs'

https.get() sends a GET request over HTTPS to fetch the file. fs.createWriteStream() creates a writable stream; its first argument is the path where the file is saved (an optional second argument carries options). pipe() reads data from a readable stream and writes it to a writable stream.
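The same three pieces (a GET request, createWriteStream() and piping) can also be wrapped in a promise so that failures reach the caller. The sketch below is one possible arrangement, not the article's code: fileNameFromUrl and downloadTo are hypothetical helper names, the 200 status check and the 'download.bin' fallback are assumptions, and stream.pipeline is swapped in for pipe() because it reports errors from either stream.

```javascript
const fs = require('fs')
const http = require('http')
const https = require('https')
const path = require('path')
const { pipeline } = require('stream')

// Hypothetical helper: use the last URL path segment as the local file name
function fileNameFromUrl(fileUrl, fallback = 'download.bin') {
  const name = path.posix.basename(new URL(fileUrl).pathname)
  return name || fallback
}

// Hypothetical helper: download one file into dir and resolve with the saved path
function downloadTo(fileUrl, dir) {
  return new Promise((resolve, reject) => {
    const client = fileUrl.startsWith('https:') ? https : http
    client
      .get(fileUrl, (res) => {
        if (res.statusCode !== 200) {
          res.resume() // discard the body so the socket is released
          reject(new Error(`Unexpected status ${res.statusCode}`))
          return
        }
        fs.mkdirSync(dir, { recursive: true }) // create the target directory if needed
        const target = path.join(dir, fileNameFromUrl(fileUrl))
        // pipeline() forwards errors from the response or the file stream
        pipeline(res, fs.createWriteStream(target), (err) => {
          if (err) reject(err)
          else resolve(target)
        })
      })
      .on('error', reject)
  })
}
```

A call would look like downloadTo(imageUrl, `${__dirname}/files`).then((saved) => console.log(saved)), where imageUrl stands for a full http(s) URL.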

const fs = require('fs')
const https = require('https')

// URL of the image (must be a full https:// URL; 'GFG.jpeg' is only a placeholder)
const url = 'GFG.jpeg'

https.get(url, (res) => {
  // Image will be stored at this path (the files directory must already exist)
  const path = `${__dirname}/files/img.jpeg`
  const filePath = fs.createWriteStream(path)
  res.pipe(filePath)
  filePath.on('finish', () => {
    filePath.close()
    console.log('Download Completed')
  })
})

Method 2: Use node-downloader-helper

npm install node-downloader-helper

The following code downloads an image from a website. An object dl is created from the DownloaderHelper class, which receives two parameters:

- The URL of the image to be downloaded.

- The directory where the image is saved after downloading.

The file variable contains the URL of the image to download, and the filePath variable contains the directory in which the file will be saved.

const { DownloaderHelper } = require('node-downloader-helper')

// URL of the image ('GFG.jpeg' is only a placeholder; use a full URL)
const file = 'GFG.jpeg'
// Directory in which the image will be saved
const filePath = `${__dirname}/files`

const dl = new DownloaderHelper(file, filePath)
dl.on('end', () => console.log('Download Completed'))
dl.on('error', (err) => console.log('Download Failed', err))
dl.start().catch((err) => console.error(err))

Method 3: Use download

The download package is written by the prolific npm author sindresorhus and is very easy to use.

npm install download

The following code downloads an image from a website. The download function receives the URL of the file and the directory to save it in.

const download = require('download')

// URL of the image ('GFG.jpeg' is only a placeholder; use a full URL)
const file = 'GFG.jpeg'
// Directory in which the image will be saved
const filePath = `${__dirname}/files`

download(file, filePath).then(() => {
  console.log('Download Completed')
})

Final code

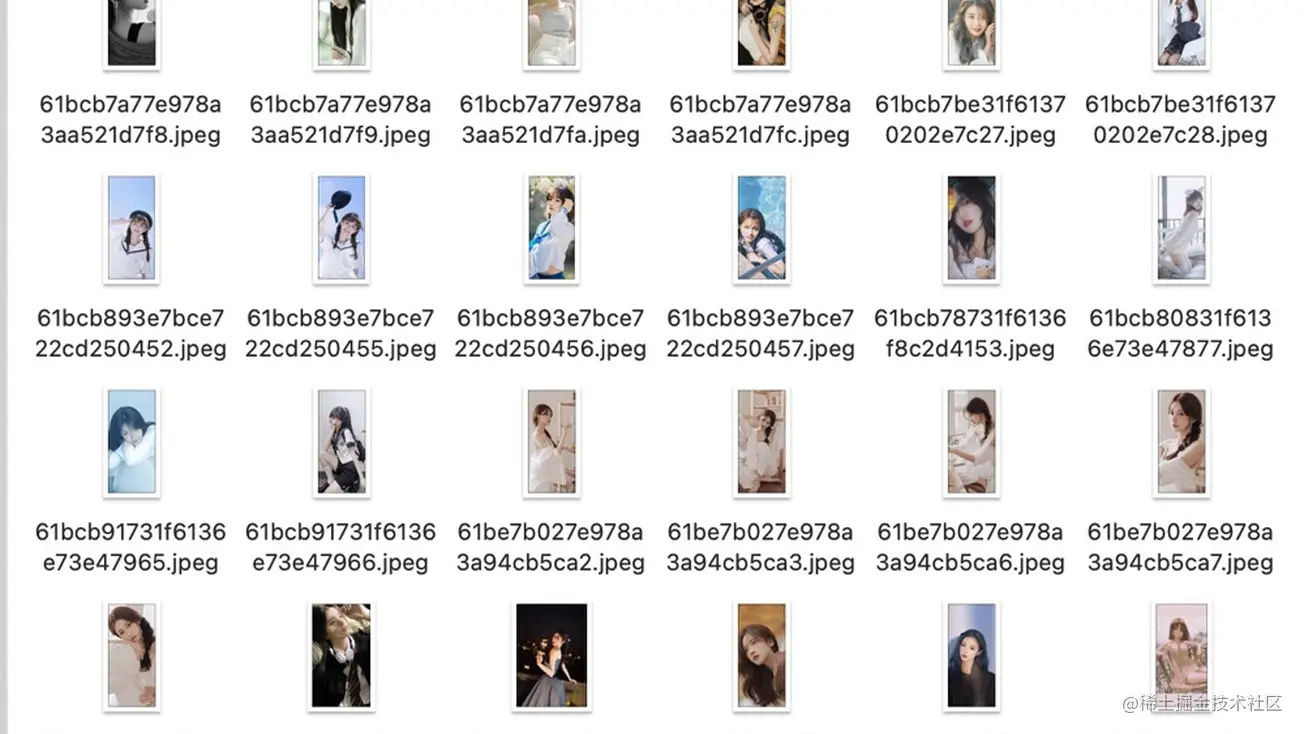

I originally wanted to crawl Baidu wallpapers, but the resolution was not high enough and the images had watermarks. Later, a friend in the group found an API, which I guess belongs to a high-definition wallpaper mobile app. It returns the download URLs directly, so I used it.

The following is the complete code

const download = require('download')
const axios = require('axios')

// Send a browser-like User-Agent with every request
const headers = {
  'User-Agent':
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 11_1_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36',
}

// Resolve after `time` milliseconds
function sleep(time) {
  return new Promise((resolve) => setTimeout(resolve, time))
}

// Fetch the page of results starting at offset `skip`, download it, then move on to the next page
async function load(skip = 0) {
  const data = await axios
    .get(
      'http://service.picasso.adesk.com/v1/vertical/category/4e4d610cdf714d2966000000/vertical',
      {
        headers,
        params: {
          limit: 30, // each page returns a fixed 30 items
          skip: skip,
          first: 0,
          order: 'hot',
        },
      }
    )
    .then((res) => {
      return res.data.res.vertical
    })
    .catch((err) => {
      console.log(err)
      return [] // skip this page instead of crashing in downloadFile
    })
  await downloadFile(data)
  await sleep(3000)
  if (skip < 1000) {
    load(skip + 30)
  } else {
    console.log('Download finished')
  }
}

async function downloadFile(data) {

for (let index = 0; index < data.length; index++) {

const item = data[index]

// Path at which image will get downloaded

const filePath = `${__dirname}/美女`

await download(item.wp, filePath, {

filename: item.id + '.jpeg',

headers,

}).then(() => {

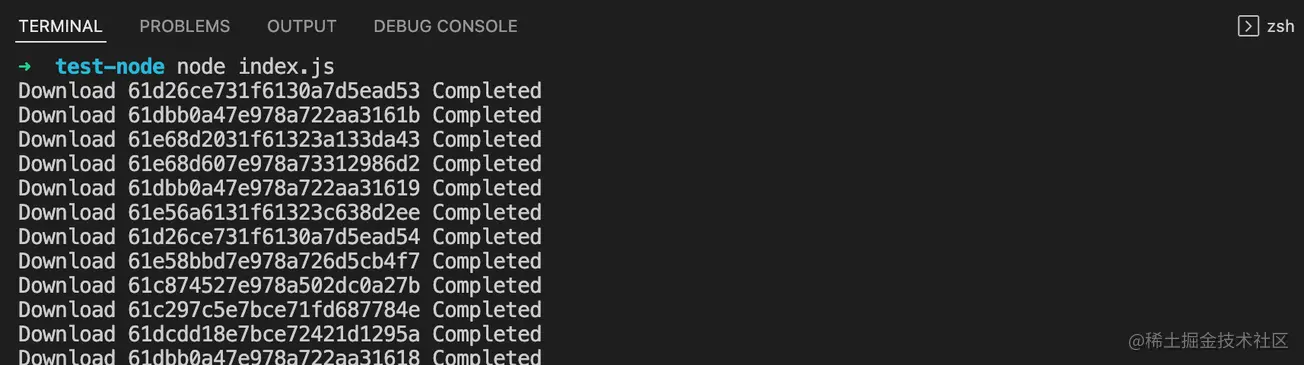

console.log(`Download ${item.id} Completed`)

return

})

}

}

load()In the above code, you must first set User-Agent and set a 3s delay. This can prevent the server from blocking the crawler and directly return 403.
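The paging and delay logic can also be written as a plain loop instead of recursion. The following is only a sketch under stated assumptions: pageOffsets and crawl are hypothetical names, and fetchPage and handlePage are placeholder callbacks standing in for the axios request and the download step. The offsets reproduce what the recursive version visits with a limit of 30 and a threshold of 1000.

```javascript
// Offsets visited by the paging logic: 0, 30, 60, ... up to the first value >= maxSkip
function pageOffsets(limit = 30, maxSkip = 1000) {
  const offsets = []
  let skip = 0
  while (true) {
    offsets.push(skip)
    if (skip >= maxSkip) break
    skip += limit
  }
  return offsets
}

// Resolve after ms milliseconds
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms))

// Hypothetical driver: fetch a page, process it, wait, then continue with the next page
async function crawl(fetchPage, handlePage, { limit = 30, maxSkip = 1000, delayMs = 3000 } = {}) {
  for (const skip of pageOffsets(limit, maxSkip)) {
    const items = await fetchPage(skip, limit) // placeholder for the API request
    await handlePage(items) // placeholder for downloading the page's images
    await sleep(delayMs) // fixed pause between pages to avoid hammering the server
  }
}
```

Because each page is awaited before the next one starts, at most one request is in flight at a time, which is the same pacing the delay in the original code aims for.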

Run node index.js and the images will be downloaded automatically.


Try it out

Search for "水瓜图" in WeChat mini programs to try it out.



The above is the detailed content of Practical sharing: Use nodejs to crawl and download more than 10,000 images. For more information, please follow other related articles on the PHP Chinese website!
