What redundancy is generally used to compress image files?

Image files are generally compressed by exploiting three kinds of redundancy: coding redundancy, inter-pixel redundancy, and psychovisual redundancy. Data redundancy is the central issue in digital image compression. Data compression (reducing the amount of data required to represent a given amount of information) is achieved when one or more of these three redundancies are reduced or eliminated.

The operating environment of this tutorial: Windows 7 system, Dell G3 computer.

Image compression aims to minimize the amount of data required to represent a digital image. Its basic principle is to reduce the amount of data by removing the redundant part.

1. Basic introduction

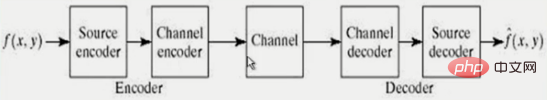

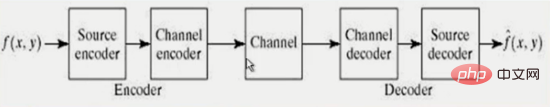

Image compression model: this article mainly covers source encoding and decoding, and does not discuss the transmission channel.

Data compression refers to reducing the amount of data required to represent a given amount of information.

Data is the means of information transmission. The same amount of information can be represented by different amounts of data.

Information: the content that the image itself conveys, independent of how much data is used to represent it.

Data redundancy is the central issue in digital image compression. If $n_1$ and $n_2$ denote the number of information-carrying units in two data sets that represent the same information, then the relative data redundancy $R_D$ of the first data set (the one characterized by $n_1$) can be defined as:

$$R_D = 1 - \frac{1}{C}$$

Here $C$ is usually called the compression ratio, which is defined as:

$$C = \frac{n_1}{n_2}$$
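As a quick numeric check, the two quantities can be sketched in Python. This assumes the standard definitions $C = n_1/n_2$ and $R_D = 1 - 1/C$; the function names are illustrative and do not come from the original article.

```python
def compression_ratio(n1: int, n2: int) -> float:
    """C = n1 / n2, where n1 is the original size and n2 the compressed size."""
    return n1 / n2


def relative_redundancy(n1: int, n2: int) -> float:
    """R_D = 1 - 1/C: the fraction of the first data set that is redundant."""
    return 1.0 - 1.0 / compression_ratio(n1, n2)


# Hypothetical sizes: 10 units of data before compression, 1 unit after.
print(compression_ratio(10, 1))    # 10.0
print(relative_redundancy(10, 1))  # 0.9, i.e. 90% of the original data is redundant
```

When $n_2 = n_1$ the ratio is 1 and the redundancy is 0, meaning the first representation carries no redundant data relative to the second.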

In digital image compression, three basic types of data redundancy can be identified and exploited: coding redundancy, inter-pixel redundancy, and psychovisual redundancy. Data compression is achieved when one or more of these three redundancies are reduced or eliminated.

2. Coding redundancy

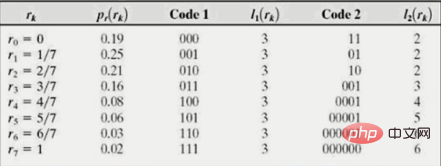

For an image, assume that a discrete random variable $r_k$ represents the gray levels, and that each gray level $r_k$ occurs with probability $p_r(r_k)$:

$$p_r(r_k) = \frac{n_k}{n}, \qquad k = 0, 1, 2, \ldots, L-1$$

where $L$ is the number of gray levels, $n_k$ is the number of times the $k$-th gray level appears in the image, and $n$ is the total number of pixels in the image. If the number of bits used to represent each value of $r_k$ is $l(r_k)$, then the average number of bits required to represent each pixel is:

$$L_{avg} = \sum_{k=0}^{L-1} l(r_k)\, p_r(r_k)$$

That is, the number of bits used for each gray level is multiplied by the probability of that gray level, and the products are summed to give the average codeword length. The closer the average number of bits of a code is to the entropy, the smaller its coding redundancy.

[Note]

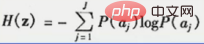

Entropy: the average amount of information obtained by observing a single source output. For gray-level probabilities $p_r(r_k)$ it is:

$$H = -\sum_{k=0}^{L-1} p_r(r_k) \log_2 p_r(r_k)$$
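A minimal Python sketch of these quantities follows. It assumes entropy is measured in bits, that is $H = -\sum p \log_2 p$, and it uses a small made-up pixel list rather than the image from this article. All function names are illustrative.

```python
import math
from collections import Counter


def gray_level_probabilities(pixels):
    """Estimate p_r(r_k) = n_k / n from a flat list of gray levels."""
    counts = Counter(pixels)
    n = len(pixels)
    return {level: count / n for level, count in counts.items()}


def entropy(probs):
    """H = -sum(p * log2(p)), in bits per pixel."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)


def average_code_length(probs, code_lengths):
    """L_avg = sum(l(r_k) * p_r(r_k)), in bits per pixel."""
    return sum(p * code_lengths[level] for level, p in probs.items())


# Made-up 8-pixel image with four gray levels (probabilities 1/2, 1/4, 1/8, 1/8).
pixels = [0, 0, 0, 0, 1, 1, 2, 3]
probs = gray_level_probabilities(pixels)

fixed_code = {0: 2, 1: 2, 2: 2, 3: 2}     # natural binary code, 2 bits each
variable_code = {0: 1, 1: 2, 2: 3, 3: 3}  # shorter codes for likelier levels

print(entropy(probs))                             # 1.75
print(average_code_length(probs, fixed_code))     # 2.0
print(average_code_length(probs, variable_code))  # 1.75
```

In this toy case the variable-length code reaches the entropy exactly because every probability is a power of 1/2; in general the average length can only approach it.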

For example:

The entropy of the original image is 2.588.

Using natural binary coding, the average length is 3.

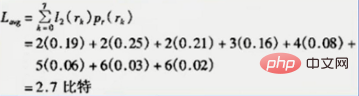

If code 2 from the table is used, the average number of bits is:

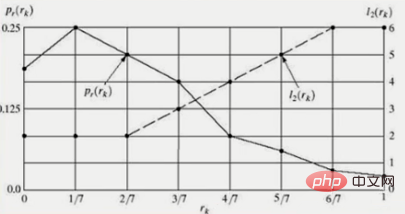

To achieve coding compression, the code length $l(r_k)$ should vary inversely with the probability $p_r(r_k)$: the more probable a gray level $r_k$ is, the shorter its codeword should be. This reduces the average number of bits and brings it closer to the entropy.
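One common way to obtain such code lengths is Huffman coding. The article does not name a specific algorithm, so the sketch below is only an illustration of the principle, with made-up probabilities and an illustrative function name.

```python
import heapq
from itertools import count


def huffman_code_lengths(probs):
    """Return {gray level: codeword length in bits} for a Huffman code."""
    if len(probs) == 1:
        # A single symbol still needs one bit to be written out.
        return {next(iter(probs)): 1}

    tie = count()  # unique tie-breaker so the heap never compares the lists
    heap = [(p, next(tie), [level]) for level, p in probs.items()]
    heapq.heapify(heap)
    lengths = dict.fromkeys(probs, 0)

    while len(heap) > 1:
        # Merge the two least probable groups; every level inside them
        # moves one step deeper in the code tree, so its code grows by one bit.
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for level in group1 + group2:
            lengths[level] += 1
        heapq.heappush(heap, (p1 + p2, next(tie), group1 + group2))

    return lengths


probs = {0: 0.5, 1: 0.25, 2: 0.125, 3: 0.125}
print(huffman_code_lengths(probs))  # {0: 1, 1: 2, 2: 3, 3: 3}
```

The most probable level receives a 1-bit codeword and the two rarest levels receive 3-bit codewords, which is the inverse relationship described above.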

3. Inter-pixel redundancy

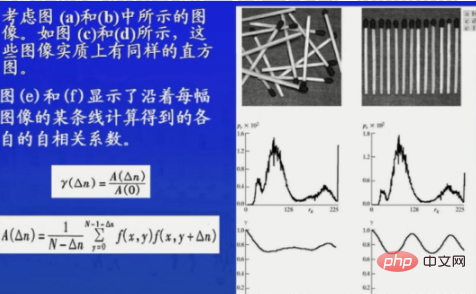

Inter-pixel redundancy is data redundancy that arises directly from the correlations between pixels.

A still picture has spatial redundancy (geometric redundancy). This is because the visual contribution of a single pixel to the image is often redundant: its gray level can usually be inferred from the gray levels of its neighbors.
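Run-length coding is one simple way to exploit spatial redundancy. It is not mentioned in the article and is used here only as an illustration: runs of identical neighboring pixels are stored as (value, count) pairs. The row of pixels is made up.

```python
def run_length_encode(row):
    """Collapse consecutive equal gray levels into (value, run length) pairs."""
    runs = []
    for value in row:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1          # extend the current run
        else:
            runs.append([value, 1])   # start a new run
    return [(value, length) for value, length in runs]


def run_length_decode(runs):
    """Expand (value, run length) pairs back into the original row."""
    return [value for value, length in runs for _ in range(length)]


row = [255, 255, 255, 255, 255, 0, 0, 0, 255, 255]
encoded = run_length_encode(row)
print(encoded)                            # [(255, 5), (0, 3), (255, 2)]
print(run_length_decode(encoded) == row)  # True
```

Ten pixel values are reduced to three pairs, and decoding recovers the row exactly, so removing this kind of redundancy is lossless.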

Image sequences and video also have temporal redundancy (inter-frame redundancy): most corresponding pixels change only slowly from one frame to the next.
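Temporal redundancy can be illustrated with simple frame differencing, an assumption made for this sketch rather than a method described in the article. Only the change from the previous frame is stored, and for slowly varying video most of those changes are zero. The frames are made-up one-dimensional examples.

```python
def frame_difference(previous, current):
    """Per-pixel change from the previous frame to the current one."""
    return [c - p for p, c in zip(previous, current)]


def reconstruct_frame(previous, difference):
    """Rebuild the current frame from the previous frame and the differences."""
    return [p + d for p, d in zip(previous, difference)]


previous = [10, 10, 12, 200]
current = [10, 11, 12, 200]

diff = frame_difference(previous, current)
print(diff)                                          # [0, 1, 0, 0]
print(reconstruct_frame(previous, diff) == current)  # True
```

The difference signal is dominated by zeros, so it has lower entropy than the frame itself and can be coded with fewer bits.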

3. Psychovisual redundancy

Psychovisual redundancy is tied to how people actually perceive visual information, so it varies from person to person: different viewers find different parts of the same photo redundant. Removing psychovisually redundant data necessarily loses quantitative information, and this loss is irreversible. For example, when an image is displayed at a small size (without zooming in), the eye cannot judge its resolution directly, so information that the eye cannot perceive can be removed to reduce the amount of data. Once the image is enlarged, however, an image that still has its psychovisual redundancy looks noticeably different from one from which it has been removed.

Figure C illustrates that a quantization process that takes full advantage of the characteristics of the human visual system can greatly improve the appearance of the quantized image. Although the compression ratio of this quantization process is still only 2:1, false contouring is greatly reduced at the cost of some additional, but less objectionable, graininess. The method used to produce this result is improved gray-scale (IGS) quantization. This method is illustrated in the table below. A sum, initially set to zero, is first formed from the current 8-bit gray-level value and the 4 least significant bits of the previously generated sum. If the 4 most significant bits of the current value are 1111, however, 0000 is added instead. The 4 most significant bits of the resulting sum are used as the coded pixel value.
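The IGS steps described above can be sketched in Python as follows. This is a minimal illustration for 8-bit gray levels quantized to 4 bits. The function name and the four sample pixel values are chosen for the example and are not taken from the article's table.

```python
def igs_quantize(pixels):
    """Improved gray-scale (IGS) quantization of 8-bit pixels to 4-bit codes."""
    codes = []
    previous_sum = 0  # the running sum starts at zero
    for gray in pixels:
        if (gray & 0xF0) == 0xF0:
            # The 4 most significant bits are 1111: add 0000 to avoid overflow.
            current_sum = gray
        else:
            # Add the 4 least significant bits of the previous sum.
            current_sum = gray + (previous_sum & 0x0F)
        codes.append(current_sum >> 4)  # keep the 4 most significant bits
        previous_sum = current_sum
    return codes


# Sample pixels: 0b01101100, 0b10001011, 0b10000111, 0b11110100
pixels = [108, 139, 135, 244]
print(igs_quantize(pixels))  # [6, 9, 8, 15]
```

Carrying the low-order bits from one pixel into the next acts like a small pseudo-random dither, which breaks up the sharp steps that plain truncation to 4 bits would produce.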

4. Fidelity criterion

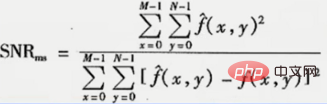

4.1 Objective Fidelity Criterion

When the degree of information loss can be expressed as a function of the original (input) image and the output image obtained by compressing and then decompressing it, the measure is said to be based on an objective fidelity criterion.

-

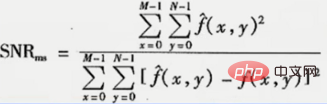

Root mean square error (rms)

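For images of size $M \times N$, the total error between the two images is:

$$\sum_{x=0}^{M-1} \sum_{y=0}^{N-1} \left[ \hat{f}(x,y) - f(x,y) \right]$$

where $f(x,y)$ is the input image and $\hat{f}(x,y)$ is the estimate or approximation obtained by compressing and then decompressing the input image.

The root mean square error between the two images is:

$$e_{rms} = \left[ \frac{1}{MN} \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} \left[ \hat{f}(x,y) - f(x,y) \right]^2 \right]^{1/2}$$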

- Mean square signal-to-noise ratio
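Both objective measures can be sketched in Python. The sketch assumes the standard definitions: the rms error is the square root of the mean squared difference between the decompressed image $\hat{f}$ and the input image $f$, and the mean square signal-to-noise ratio is the sum of $\hat{f}(x,y)^2$ divided by the sum of $[\hat{f}(x,y) - f(x,y)]^2$. Images are plain nested lists, and the function names and pixel values are made up for illustration.

```python
import math


def squared_error_sum(f, f_hat):
    """Sum of squared differences between the decompressed and input image."""
    return sum(
        (f_hat[x][y] - f[x][y]) ** 2
        for x in range(len(f))
        for y in range(len(f[0]))
    )


def rms_error(f, f_hat):
    """Root mean square error over an M x N image."""
    m, n = len(f), len(f[0])
    return math.sqrt(squared_error_sum(f, f_hat) / (m * n))


def mean_square_snr(f, f_hat):
    """Mean square signal-to-noise ratio of the decompressed image."""
    signal = sum(value ** 2 for row in f_hat for value in row)
    noise = squared_error_sum(f, f_hat)
    return math.inf if noise == 0 else signal / noise


f = [[10, 20], [30, 40]]      # input image
f_hat = [[12, 20], [30, 36]]  # image after compression and decompression

print(round(rms_error(f, f_hat), 3))  # 2.236
print(mean_square_snr(f, f_hat))      # 137.0
```

A lossless codec gives an rms error of 0, in which case this sketch reports the signal-to-noise ratio as infinite.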
