What high-performance computers can do

What high-performance computers can do: 1. Run numerical simulations of cosmic neutrinos and dark matter to reveal how the universe evolved, or compress time in a simulation to model the evolution of celestial bodies and carry out theoretical experiments. 2. Build mathematical models of nuclear explosions to understand their principles in depth and support the development of a new generation of nuclear weapons, or predict when nuclear weapons will fail and which parts need to be replaced. 3. Accurately simulate and observe cloud movements for climate simulation and weather forecasting. 4. Simulate earthquakes to help explore earthquake prediction methods and so mitigate earthquake-related risks.

High-performance computers (HPC systems, also known as supercomputers) are mainly used to solve challenging problems that other computers cannot handle. The term usually refers to a class of computers with extremely fast computing speed, huge storage capacity, and extremely high communication bandwidth. High-performance computing is regarded as the "crown" of computer science and engineering, and in recent years many countries have launched national-level research and development programs for it.

High-performance computers are the most capable, fastest, and highest-capacity class of computers. They are mostly used in national high-tech fields and cutting-edge research. They reflect a country's scientific research strength, are of pivotal significance to national security and to economic and social development, and are an important symbol of a country's level of scientific and technological development and its comprehensive national strength.

High-performance computers can process volumes of data and perform high-speed calculations that ordinary personal computers cannot handle. Their basic components are conceptually much the same as those of a personal computer, but their specifications and performance are far greater. They are very large electronic computers with strong computing and data-processing capabilities. Their main features are high speed and large capacity, and they are equipped with a variety of external and peripheral devices and rich, highly functional software systems. Most existing supercomputers can perform more than one trillion (tera) operations per second.

As a key element of high-tech development, high-performance computers have long been a tool of economic and defense competition among countries. After decades of sustained effort by Chinese scientists and engineers, China's high-performance computer research and development has improved significantly, making China the third major developer and producer of high-performance computers after the United States and Japan. China currently has 22 supercomputers (19 in mainland China, 1 in Hong Kong, and 2 in Taiwan), ranking second in the world, and is among the world leaders in both number of systems and computing speed. As supercomputer speeds have risen rapidly, supercomputers are increasingly used in industry, scientific research, and academia. In terms of applications, however, there is still a large gap between China and developed countries such as the United States and Germany. The development of China's high-performance computers and their applications provides a solid foundation for China to become a technological power.

Uses of high-performance computers

1. Revealing the evolution of the universe

An international team for the numerical simulation of cosmic neutrinos, led by Chinese scientists, completed a simulation of cosmic neutrinos and dark matter with 30 trillion particles on the "Tianhe-2" supercomputer system. The simulation reveals the long evolution of the universe over roughly 13.7 billion years, from 16 million years after the Big Bang to the present. Neutrinos are among the most fundamental particles in nature. They carry no electric charge and travel at close to the speed of light. At present, the absolute mass of neutrinos cannot be measured directly through physical experiments or cosmological observations. However, neutrinos suppress the formation of galaxies and large-scale structure in the early universe, and this effect can be measured indirectly through large-scale cosmological numerical simulations to obtain information about the neutrino mass. Simulations of this scale must rely on supercomputers with powerful computing and storage capabilities.

Supercomputers can also compress time. By stepping a simulation forward far faster than the real process unfolds, researchers can model the evolution of celestial bodies and carry out theoretical experiments on it.
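To make the idea of a particle-based simulation concrete, here is a minimal sketch of a gravitational N-body step in Python. It is not the code that ran on Tianhe-2. It assumes a direct-sum force calculation and a kick-drift-kick leapfrog integrator in two dimensions, and the units, the softening length, and all function names are hypothetical choices made for illustration.

```python
import math

G = 1.0  # gravitational constant in arbitrary simulation units (assumption)
SOFTENING = 1e-3  # small length that avoids division by zero (assumption)


def accelerations(pos, mass):
    """Direct-sum gravitational acceleration on every particle, O(N^2)."""
    n = len(pos)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            r2 = dx * dx + dy * dy + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            acc[i][0] += G * mass[j] * dx * inv_r3
            acc[i][1] += G * mass[j] * dy * inv_r3
    return acc


def leapfrog_step(pos, vel, mass, dt):
    """Advance all particles by one kick-drift-kick step, in place."""
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        vel[i][0] += 0.5 * dt * acc[i][0]  # half kick
        vel[i][1] += 0.5 * dt * acc[i][1]
        pos[i][0] += dt * vel[i][0]        # full drift
        pos[i][1] += dt * vel[i][1]
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        vel[i][0] += 0.5 * dt * acc[i][0]  # second half kick
        vel[i][1] += 0.5 * dt * acc[i][1]


def total_energy(pos, vel, mass):
    """Kinetic plus (softened) potential energy, used as a sanity check."""
    kinetic = sum(0.5 * m * (v[0] ** 2 + v[1] ** 2) for m, v in zip(mass, vel))
    potential = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            potential -= G * mass[i] * mass[j] / math.sqrt(dx * dx + dy * dy + SOFTENING ** 2)
    return kinetic + potential


if __name__ == "__main__":
    # Two equal masses on a circular orbit around their common center.
    pos = [[-0.5, 0.0], [0.5, 0.0]]
    v = math.sqrt(0.5)
    vel = [[0.0, -v], [0.0, v]]
    mass = [1.0, 1.0]
    e0 = total_energy(pos, vel, mass)
    for _ in range(1000):
        leapfrog_step(pos, vel, mass, dt=0.01)
    print("relative energy drift:", abs((total_energy(pos, vel, mass) - e0) / e0))
```

Direct summation costs on the order of N squared operations per step, which is one reason simulations with trillions of particles need both faster algorithms and supercomputer-scale hardware.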

2. Simulated nuclear tests

Titan and Sequoia, two U.S. supercomputers on the TOP500 list, belong to the U.S. Department of Energy's Oak Ridge National Laboratory and Lawrence Livermore National Laboratory, respectively. Supercomputing applications based on them involve the development and safety maintenance of nuclear weapons. Using data from the 1,054 U.S. nuclear tests conducted between 1945 and 1992, supercomputers were used to build mathematical models of nuclear explosions, which give a deep understanding of the principles involved and support the development of a new generation of nuclear weapons. In addition, a large number of nuclear weapons are approaching the end of their service life, and supercomputer simulations can be used to predict when they will fail and which parts will need to be replaced.

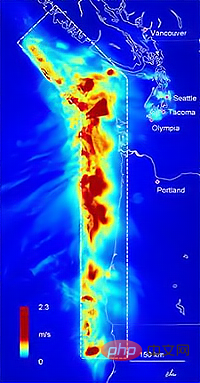

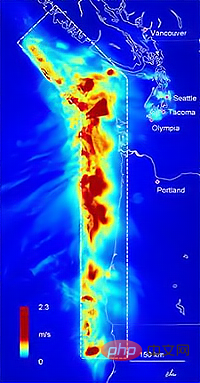

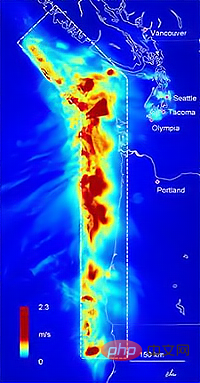

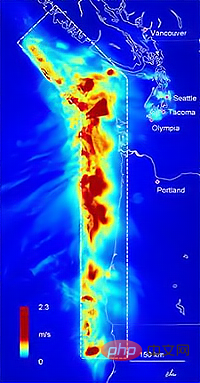

3. Climate simulation and weather forecast

One Chinese supercomputing application that won the Gordon Bell Prize is called "Global Nonhydrostatic Cloud-Resolving Simulation". The project targets climate and meteorological research. Weather forecasting requires real-time processing, storage, query, analysis, and statistics over massive volumes of meteorological data, work that would take an ordinary computer hundreds of years. Rainfall, for example, is closely related to the movement of clouds, which supercomputers can simulate and observe accurately. As supercomputers get faster, the scale that can be resolved keeps shrinking. If every cloud could be "tagged" in the future, weather forecasts would become extremely fine-grained.
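As a toy illustration of simulating cloud movement on a grid, the sketch below transports a one-dimensional "cloud" field with a constant wind using a first-order upwind scheme. This is an assumption chosen for brevity, not the method of the prize-winning project: real atmospheric models solve three-dimensional fluid equations. The function name and the periodic boundary are hypothetical.

```python
def advect_upwind(q, wind, dx, dt, steps):
    """Transport field q (one value per grid cell) with a constant wind >= 0.

    Uses the first-order upwind scheme on a periodic domain.
    wind is in m/s, dx in m, dt in s.
    """
    courant = wind * dt / dx  # fraction of a cell crossed per time step
    if not 0.0 <= courant <= 1.0:
        raise ValueError("unstable: need 0 <= wind * dt / dx <= 1")
    q = list(q)
    for _ in range(steps):
        # q[i - 1] wraps around to the last cell when i == 0 (periodic domain).
        q = [q[i] - courant * (q[i] - q[i - 1]) for i in range(len(q))]
    return q


if __name__ == "__main__":
    cloud = [0, 0, 1, 2, 1, 0, 0, 0]  # cloud water per cell, arbitrary units
    print(advect_upwind(cloud, wind=10.0, dx=1000.0, dt=100.0, steps=3))
```

The stability check shows why finer grids cost more: halving `dx` doubles the number of cells and, for the same wind, also forces `dt` to be halved, so even this one-dimensional toy needs roughly four times the work.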

4. Earthquake simulation

Supercomputers can simulate earthquakes, modeling crustal movement by calculating stress changes in different strata. For example, Chinese and German scientists used Tianhe-2 to simulate realistic seismic wave propagation and reproduced the wave propagation of the 1992 Landers earthquake in California, USA, providing a new way to study how seismic waves are generated and propagate and to research earthquake prediction.

The simulation of earthquakes can help humans explore earthquake prediction methods, thereby mitigating the risks associated with earthquakes.
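The wave propagation mentioned above can be sketched in one dimension with an explicit finite-difference scheme for the wave equation. This is only an illustrative assumption: production earthquake codes model three-dimensional elastic waves in realistic geology. The function name, the fixed (zero) boundaries, and the "medium initially at rest" start are hypothetical simplifications.

```python
def propagate_wave(u0, speed, dx, dt, steps):
    """Evolve an initial displacement u0 under the 1-D wave equation.

    Central differences in space and time, ends held at zero,
    medium initially at rest. speed in m/s, dx in m, dt in s.
    """
    r2 = (speed * dt / dx) ** 2  # squared Courant number
    if r2 > 1.0:
        raise ValueError("unstable: need speed * dt / dx <= 1")
    n = len(u0)
    prev = list(u0)
    if steps == 0:
        return prev
    # First step uses the zero initial velocity to avoid needing u at t = -dt.
    curr = [0.0] * n
    for i in range(1, n - 1):
        curr[i] = prev[i] + 0.5 * r2 * (prev[i + 1] - 2 * prev[i] + prev[i - 1])
    for _ in range(steps - 1):
        nxt = [0.0] * n
        for i in range(1, n - 1):
            nxt[i] = (2 * curr[i] - prev[i]
                      + r2 * (curr[i + 1] - 2 * curr[i] + curr[i - 1]))
        prev, curr = curr, nxt
    return curr


if __name__ == "__main__":
    field = [0.0] * 21
    field[10] = 1.0  # a single displaced point acting as the source
    print(propagate_wave(field, speed=4000.0, dx=1000.0, dt=0.25, steps=5))
```

With these sample values the pulse splits into two halves that travel outward in opposite directions, one cell per time step. A three-dimensional model with a fine grid repeats this kind of update over billions of cells, which is where supercomputers become necessary.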

5. Oil exploration

Supercomputers can also calculate where oil wells should be drilled. At present, seismic exploration is an important means of surveying oil and gas resources before drilling. Seismic exploration uses differences in the elasticity and density of underground media: by observing and analyzing the earth's response to artificially excited seismic waves, it infers the nature and shape of underground strata and thereby determines the distribution of oil and gas. This process requires intensive calculation and simulation over massive amounts of data, and the results need to be converted into intuitive three-dimensional images, so it depends on high-performance computers.

6. Tsunami hazard prediction

Japanese scientists used the "K" supercomputer to build a tsunami simulation model and successfully predicted the damage a tsunami would cause after an earthquake. The results show that, in the event of an earthquake in Miyagi Prefecture, the area of Sendai City that would be flooded can be predicted within 10 minutes. If this technology is widely adopted, it will improve the accuracy of tsunami warnings and guide residents to evacuate more effectively. Tsunami disaster simulation normally requires a large amount of computation and takes a long time, making real-time disaster analysis difficult. During the "3.11" earthquake in Japan, the predicted tsunami wave height was lower than the actual height.
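To see why minutes matter, a rough back-of-the-envelope sketch can estimate how fast a tsunami travels. It assumes the standard long-wave (shallow-water) approximation, in which wave speed is the square root of gravity times water depth. It is not the simulation model described above, and the function names and sample depths are hypothetical.

```python
import math

GRAVITY = 9.81  # m/s^2


def tsunami_speed(depth_m):
    """Long-wave speed in m/s for water of the given depth in meters."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return math.sqrt(GRAVITY * depth_m)


def travel_time_s(segments):
    """Travel time in seconds over (length_m, depth_m) segments of a path."""
    return sum(length / tsunami_speed(depth) for length, depth in segments)


if __name__ == "__main__":
    # Hypothetical path: 100 km of deep ocean, then 20 km of shallow shelf.
    path = [(100_000.0, 4000.0), (20_000.0, 50.0)]
    print("deep-water speed (m/s):", round(tsunami_speed(4000.0), 1))
    print("arrival time (min):", round(travel_time_s(path) / 60.0, 1))
```

An estimate like this gives only the arrival time. Predicting which streets flood requires solving the flow equations over detailed coastal terrain, which is the computationally expensive part.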

7. Precision medicine

Precision medicine is treatment tailored to each individual. It is not only a medical matter but is also closely tied to computing power. Genetic differences determine individual differences. A person's genome contains 3 billion base pairs for scientists to analyze, alongside information such as living habits and external environment. Even this is not enough: genetic data from at least millions of people is needed to build a database that can be used to analyze the relationship between genetic differences and various health problems, so that each patient can be given the right treatment. All of this requires significant computing power.
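The scale involved can be estimated with simple arithmetic. The sketch below assumes each base is stored in 2 bits (enough to distinguish A, C, G, and T) and ignores everything else. That packing, and the function name, are assumptions for illustration; real sequencing data with raw reads and quality scores is typically far larger.

```python
BASE_PAIRS_PER_GENOME = 3_000_000_000  # figure quoted in the text
BITS_PER_BASE = 2                      # assumption: A, C, G, T packed in 2 bits


def packed_genome_bytes(people):
    """Bytes needed to store one packed genome sequence per person."""
    return people * BASE_PAIRS_PER_GENOME * BITS_PER_BASE // 8


if __name__ == "__main__":
    print("one person (MB):", packed_genome_bytes(1) / 1e6)
    print("one million people (TB):", packed_genome_bytes(1_000_000) / 1e12)
```

Under these assumptions one genome takes 750 MB and a million genomes take 750 TB, before any analysis has been run across them.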

8. Drug development

With the help of the "K" supercomputer, scientists have successfully developed new anti-cancer drugs. The researchers focused on a protein that plays an important role in cancer cell proliferation and, taking this protein as the point of attack, used supercomputer simulations to identify chemicals that can effectively inhibit that proliferation. Calculating how this cancer-specific protein binds to candidate drugs, and how both interact with water in the body, is extremely complex, which makes precise simulation on supercomputers necessary.

9. Simulated blood flow

The total length of human blood vessels is 100,000 to 160,000 kilometers. Without supercomputers, it would be nearly impossible to simulate the flow of blood through them in real time. U.S. scientists have successfully replicated the entire human arterial system using a supercomputer: every artery larger than 1 mm in diameter appears in the 3D model, at a resolution of 9 microns. Chinese scientists are also using the "Sunway TaihuLight" supercomputer to simulate and analyze human blood flow. This makes it possible to determine promptly and effectively whether a person is at risk of cerebral infarction without placing a measuring device in the blood vessel. In addition to blood flow, supercomputers can also model the heart, brain, and other parts of the body.

10. Transportation industry

Supercomputers can be used to understand and improve the aerodynamics, fuel consumption, structural design, and collision avoidance of vehicles such as cars, airplanes, and ships, and to help improve occupant comfort and reduce noise, all of which have potential economic and safety benefits.

The above is the detailed content of What high-performance computers can do. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

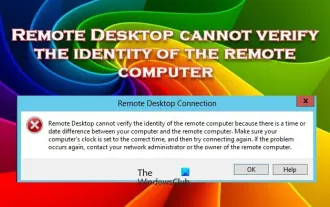

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Remote Desktop cannot authenticate the remote computer's identity

Feb 29, 2024 pm 12:30 PM

Windows Remote Desktop Service allows users to access computers remotely, which is very convenient for people who need to work remotely. However, problems can be encountered when users cannot connect to the remote computer or when Remote Desktop cannot authenticate the computer's identity. This may be caused by network connection issues or certificate verification failure. In this case, the user may need to check the network connection, ensure that the remote computer is online, and try to reconnect. Also, ensuring that the remote computer's authentication options are configured correctly is key to resolving the issue. Such problems with Windows Remote Desktop Services can usually be resolved by carefully checking and adjusting settings. Remote Desktop cannot verify the identity of the remote computer due to a time or date difference. Please make sure your calculations

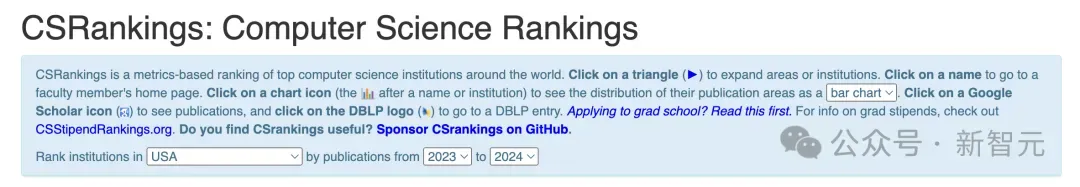

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

2024 CSRankings National Computer Science Rankings Released! CMU dominates the list, MIT falls out of the top 5

Mar 25, 2024 pm 06:01 PM

The 2024CSRankings National Computer Science Major Rankings have just been released! This year, in the ranking of the best CS universities in the United States, Carnegie Mellon University (CMU) ranks among the best in the country and in the field of CS, while the University of Illinois at Urbana-Champaign (UIUC) has been ranked second for six consecutive years. Georgia Tech ranked third. Then, Stanford University, University of California at San Diego, University of Michigan, and University of Washington tied for fourth place in the world. It is worth noting that MIT's ranking fell and fell out of the top five. CSRankings is a global university ranking project in the field of computer science initiated by Professor Emery Berger of the School of Computer and Information Sciences at the University of Massachusetts Amherst. The ranking is based on objective

What is e in computer

Aug 31, 2023 am 09:36 AM

What is e in computer

Aug 31, 2023 am 09:36 AM

The "e" of computer is the scientific notation symbol. The letter "e" is used as the exponent separator in scientific notation, which means "multiplied to the power of 10". In scientific notation, a number is usually written as M × 10^E, where M is a number between 1 and 10 and E represents the exponent.

Fix: Microsoft Teams error code 80090016 Your computer's Trusted Platform module has failed

Apr 19, 2023 pm 09:28 PM

Fix: Microsoft Teams error code 80090016 Your computer's Trusted Platform module has failed

Apr 19, 2023 pm 09:28 PM

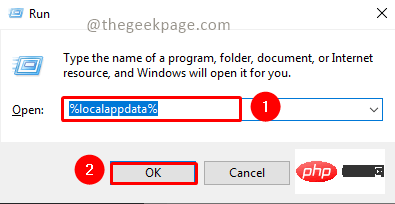

<p>MSTeams is the trusted platform to communicate, chat or call with teammates and colleagues. Error code 80090016 on MSTeams and the message <strong>Your computer's Trusted Platform Module has failed</strong> may cause difficulty logging in. The app will not allow you to log in until the error code is resolved. If you encounter such messages while opening MS Teams or any other Microsoft application, then this article can guide you to resolve the issue. </p><h2&

What does computer cu mean?

Aug 15, 2023 am 09:58 AM

What does computer cu mean?

Aug 15, 2023 am 09:58 AM

The meaning of cu in a computer depends on the context: 1. Control Unit, in the central processor of a computer, CU is the component responsible for coordinating and controlling the entire computing process; 2. Compute Unit, in a graphics processor or other accelerated processor, CU is the basic unit for processing parallel computing tasks.

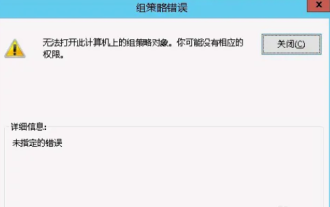

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Unable to open the Group Policy object on this computer

Feb 07, 2024 pm 02:00 PM

Occasionally, the operating system may malfunction when using a computer. The problem I encountered today was that when accessing gpedit.msc, the system prompted that the Group Policy object could not be opened because the correct permissions may be lacking. The Group Policy object on this computer could not be opened. Solution: 1. When accessing gpedit.msc, the system prompts that the Group Policy object on this computer cannot be opened because of lack of permissions. Details: The system cannot locate the path specified. 2. After the user clicks the close button, the following error window pops up. 3. Check the log records immediately and combine the recorded information to find that the problem lies in the C:\Windows\System32\GroupPolicy\Machine\registry.pol file

What should I do if steam cannot connect to the remote computer?

Mar 01, 2023 pm 02:20 PM

What should I do if steam cannot connect to the remote computer?

Mar 01, 2023 pm 02:20 PM

Solution to the problem that steam cannot connect to the remote computer: 1. In the game platform, click the "steam" option in the upper left corner; 2. Open the menu and select the "Settings" option; 3. Select the "Remote Play" option; 4. Check Activate the "Remote Play" function and click the "OK" button.

Python script to log out of computer

Sep 05, 2023 am 08:37 AM

Python script to log out of computer

Sep 05, 2023 am 08:37 AM

In today's digital age, automation plays a vital role in streamlining and simplifying various tasks. One of these tasks is to log off the computer, which is usually done manually by selecting the logout option from the operating system's user interface. But what if we could automate this process using a Python script? In this blog post, we'll explore how to create a Python script that can log off your computer with just a few lines of code. In this article, we'll walk through the step-by-step process of creating a Python script for logging out of your computer. We'll cover the necessary prerequisites, discuss different ways to log out programmatically, and provide a step-by-step guide to writing the script. Additionally, we will address platform-specific considerations and highlight best practices