How does Node handle CPU-intensive tasks? This article walks through the options Node provides. I hope it helps!

Most of us have heard something like this in our daily work:

"Node is a JavaScript runtime built on non-blocking I/O and an event-driven model, so it is well suited to building I/O-intensive applications such as web services."

I wonder whether you had the same doubt I did when hearing this: why is single-threaded Node suited to developing I/O-intensive applications? Logically speaking, wouldn't languages that support multithreading (such as Java and Golang) be better at these tasks?

To answer this question, we first need to understand what Node's "single thread" actually refers to.

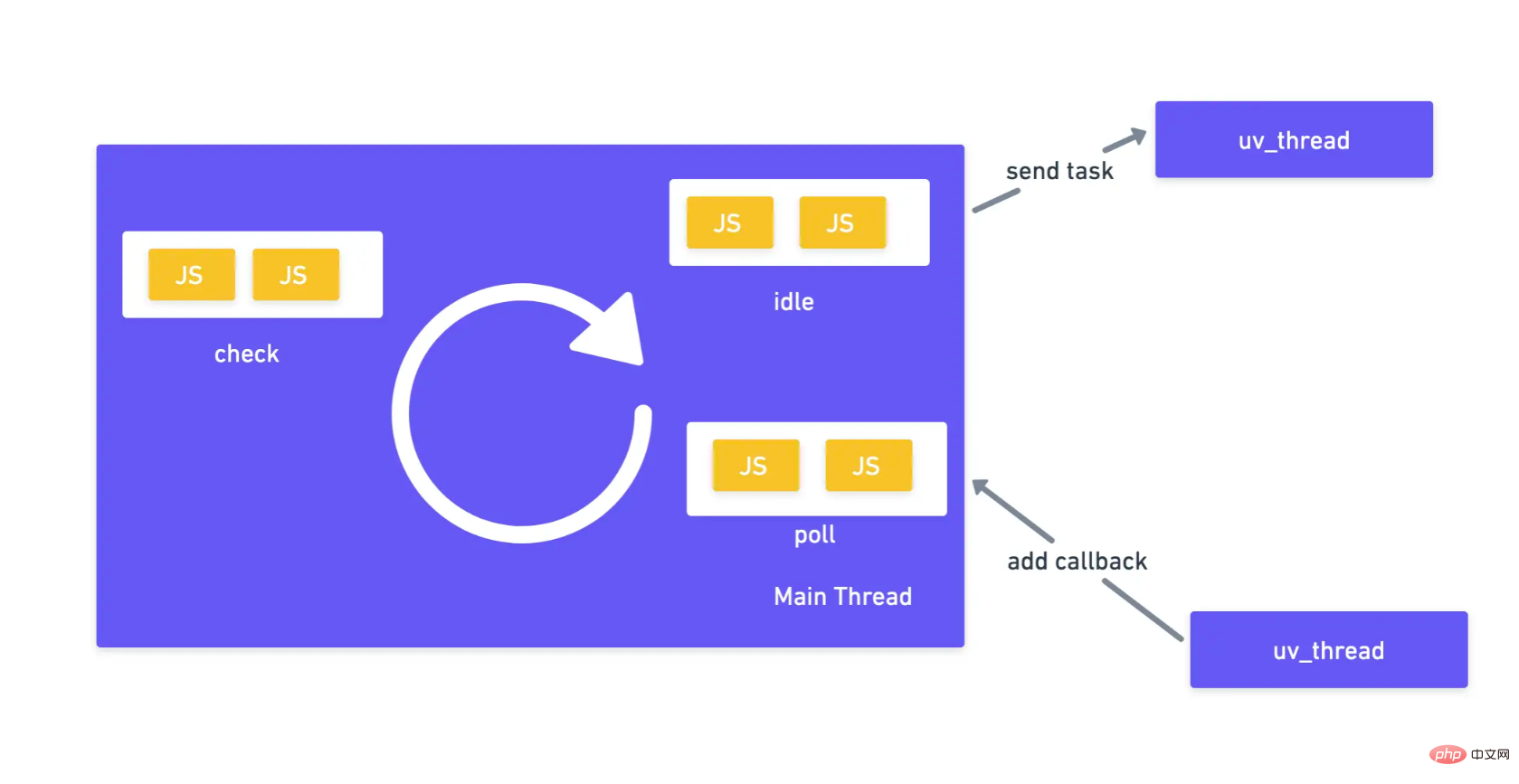

In fact, when we say that Node is single-threaded, we just mean Our JavaScript code is running in the same thread (we can call it main thread), instead of saying that Node has only one thread working. In fact, the bottom layer of Node will use libuv's multi-threading capability to execute part of the work (basically I/O related operations) in some main thread threads. When these After the task is completed, the results are returned to the JavaScript execution environment of the main thread in the form of callback function. You can take a look at the schematic diagram:

Note: the diagram above is a simplified version of Node's event loop. The complete event loop has more phases, such as timers.
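To see this division of labor in practice, here is a minimal sketch. It relies on the fact that the asynchronous `crypto.pbkdf2` is one of the operations libuv runs on its thread pool; the password, salt, and iteration count are arbitrary values chosen only for illustration.

```javascript
const crypto = require('crypto')

// Records the order in which things happen on the main thread
const events = []

// The async pbkdf2 call is handed to libuv's thread pool, so the expensive
// key derivation does not run on the main JavaScript thread
const derived = new Promise((resolve, reject) => {
  crypto.pbkdf2('secret', 'salt', 100000, 64, 'sha512', (err, key) => {
    if (err) return reject(err)
    // The event loop delivers this callback back to the main thread
    events.push(`pbkdf2 finished (${key.length} bytes)`)
    resolve(events)
  })
})

// Runs immediately: the main thread did not wait for the key derivation
events.push('main thread kept going')

derived.then((log) => console.log(log))
```

The main-thread entry is always recorded first, because the callback can only run once the current synchronous code has finished.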

From this analysis, we know that Node hands I/O operations off through libuv (to its thread pool or to the operating system), while everything else runs on the main thread. So why does this approach suit I/O-intensive applications better than languages such as Java or Golang? Take web services as an example. Mainstream back-end languages such as Java and Golang use a thread-based concurrency model, meaning each network request is handled by its own thread (a goroutine in Go's case). However, the main work of a web application is creating, reading, updating, and deleting database records, or calling other external services, that is, network I/O. These operations are ultimately handed to the operating system through system calls (without the application thread participating), and they are very slow relative to CPU clock cycles, so the threads we create sit idle most of the time while our service still pays the overhead of switching between them. Node, by contrast, does not create a thread per request. All requests are processed on the main thread, so there is no thread-switching overhead; the I/O itself is handled asynchronously and the results are reported back to the main thread as events, so the main thread is never blocked waiting. In theory, then, Node is more efficient. Note that I said "in theory": in practice it is not necessarily faster, because a service's performance depends on many factors. Here we only consider the concurrency model; other factors, such as runtime overhead, also affect performance.
For example, JavaScript is a dynamically typed language whose types are only known at runtime, while Golang and Java are statically typed languages whose types are fixed at compile time, so they may actually execute faster and use less memory.
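The concurrency-model argument above can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `fakeQuery` helper that uses `setTimeout` as a stand-in for a slow database query or external call: three requests wait on I/O at the same time on a single JavaScript thread, so the total time is roughly that of one request rather than three.

```javascript
// Hypothetical stand-in for a slow database query or external HTTP call.
// While the timer is pending, no JavaScript runs and the main thread is free.
const fakeQuery = (id, ms) =>
  new Promise((resolve) => setTimeout(() => resolve(`result ${id}`), ms))

async function handleThreeRequests() {
  const start = Date.now()
  // All three "requests" are in flight at once on the same thread
  const results = await Promise.all([
    fakeQuery(1, 100),
    fakeQuery(2, 100),
    fakeQuery(3, 100),
  ])
  return { results, elapsed: Date.now() - start }
}

const run = handleThreeRequests()
run.then(({ results, elapsed }) => {
  console.log(results, `took about ${elapsed} ms, not 300 ms`)
})
```

A thread-per-request server would instead dedicate a thread to each of these waits, and each of those threads would spend almost all of its time idle.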

Because all of our JavaScript runs on the main thread, running CPU-intensive tasks there will block it. Let's look at an example of a CPU-intensive task:

```javascript
// node/cpu_intensive.js
const http = require('http')
const url = require('url')

const hardWork = () => {
  // 10 billion meaningless iterations: pure CPU work, no I/O
  for (let i = 0; i < 10000000000; i++) {}
}

const server = http.createServer((req, resp) => {
  const urlParsed = url.parse(req.url, true)

  if (urlParsed.pathname === '/hard_work') {
    // Runs synchronously on the main thread and blocks it until the loop ends
    hardWork()
    resp.write('hard work')
    resp.end()
  } else if (urlParsed.pathname === '/easy_work') {
    resp.write('easy work')
    resp.end()
  } else {
    resp.end()
  }
})

server.listen(8080, () => {
  console.log('server is up...')
})
```

In the code above, we implement an HTTP service with two endpoints: /hard_work is CPU-intensive because it calls the CPU-intensive hardWork function, while /easy_work is trivial and simply returns a string to the client. Why is hardWork CPU-intensive? Because it only performs arithmetic on i in the CPU's arithmetic unit, without any I/O. After starting the Node service, we try calling the /hard_work endpoint.

We can see that /hard_work hangs: it needs a lot of CPU computation, so it takes a long time to complete. Now let's check whether the /easy_work endpoint is affected.

We find that once /hard_work has taken over the CPU, the innocent /easy_work endpoint hangs as well. The reason is that hardWork blocks Node's main thread, so the /easy_work logic never gets a chance to run. It's worth mentioning that only single-threaded, event-loop-based runtimes like Node have this problem; thread-based languages such as Java and Golang do not. So what if our service really needs to run CPU-intensive tasks? We can't just switch languages, right? What happened to "All in JavaScript"? Don't worry: Node provides several solutions for CPU-intensive tasks. Next, I'll introduce three commonly used ones: the Cluster module, Child Process, and Worker Thread.
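You can reproduce this blocking effect without running a server. In the minimal sketch below, a hypothetical `busyWait` function plays the role of hardWork (scaled down to 200 ms of spinning), and a zero-delay timer plays the role of the /easy_work request that has to wait for the main thread.

```javascript
// Scaled-down stand-in for hardWork(): keeps the CPU busy for `ms` milliseconds
const busyWait = (ms) => {
  const end = Date.now() + ms
  while (Date.now() < end) {} // pure computation, no I/O
}

const scheduledAt = Date.now()

// Plays the role of the /easy_work request: it asks to run as soon as possible...
const easyWorkDelay = new Promise((resolve) => {
  setTimeout(() => resolve(Date.now() - scheduledAt), 0)
})

// ...but the main thread is busy, so the callback cannot run until this returns
busyWait(200)

easyWorkDelay.then((delay) => console.log(`easy work waited ${delay} ms`))
```

The timer asked for a 0 ms delay, yet it always waits at least as long as the busy loop, which is exactly what happens to /easy_work while hardWork runs.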

Node introduced the Cluster module very early (v0.8). It lets a parent process start a group of child processes and load-balance network requests across them. Due to space limits, we won't cover the Cluster API in detail; interested readers can consult the official documentation. Let's go straight to how the Cluster module can improve the CPU-intensive scenario above:

// node/cluster.js

const cluster = require('cluster')

const http = require('http')

const url = require('url')

// 获取CPU核数

const numCPUs = require('os').cpus().length

const hardWork = () => {

// 100亿次毫无意义的计算

for (let i = 0; i {

console.log(`worker ${worker.process.pid} is online`)

})

cluster.on('exit', (worker, code, signal) => {

// 某个工作进程挂了之后,我们需要立马启动另外一个工作进程来替代

console.log(`worker ${worker.process.pid} exited with code ${code}, and signal ${signal}, start a new one...`)

cluster.fork()

})

} else {

// 工作进程启动一个HTTP服务器

const server = http.createServer((req, resp) => {

const urlParsed = url.parse(req.url, true)

if (urlParsed.pathname === '/hard_work') {

hardWork()

resp.write('hard work')

resp.end()

} else if (urlParsed.pathname === '/easy_work') {

resp.write('easy work')

resp.end()

} else {

resp.end()

}

})

// 所有的工作进程都监听在同一个端口

server.listen(8080, () => {

console.log(`worker ${process.pid} server is up...`)

})

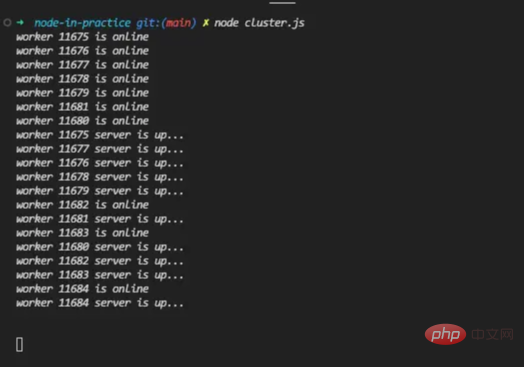

}In the above code, we use the cluster.fork function to create an equal number of worker processes based on the number of CPU cores of the current device, and these worker processes are all listening in 8080On the port. Seeing this, you may ask whether there will be a problem if all processes are listening on the same port. In fact, there will be no problem here, because the bottom layer of the Cluster module will do some work so that the final listening port is The 8080 port is the main process, and the main process is the entrance for all traffic. It will receive HTTP connections and route them to different worker processes. Without further ado, let’s run this node service:

From the output above, the cluster has started 10 workers (my computer has 10 cores) to handle web requests. Now let's request the /hard_work endpoint again.

This request still hangs. Next, let's see whether the Cluster module has solved the problem of other requests being blocked.

We can see that the first 9 requests return smoothly, but the 10th one hangs. Why? We started 10 worker processes in total, and the default load-balancing strategy the main process uses to hand traffic to the workers is round-robin (taking turns). So the 10th /easy_work request (actually the 11th request overall, counting the first hard_work request) lands back on the first worker, which hasn't finished the hard_work task yet, so the easy_work request gets stuck. The cluster's load-balancing policy can be changed through cluster.schedulingPolicy (round-robin is the default on all platforms except Windows); interested readers can check the official documentation.
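The counting behind "the 11th request" can be checked with a simplified model. This sketch assumes strict rotation over the workers and uses a hypothetical `simulateRoundRobin` function; Node's real round-robin handle only hands connections to workers that are free to accept them, so treat this as an illustration of the arithmetic rather than of the cluster internals.

```javascript
// Simplified round-robin model: requests go to workers 0, 1, 2, ... in turn,
// wrapping around after the last worker
function simulateRoundRobin(numWorkers, numRequests, busyWorkers) {
  const outcomes = []
  for (let n = 0; n < numRequests; n++) {
    const worker = n % numWorkers
    outcomes.push({ request: n + 1, worker, onBusyWorker: busyWorkers.has(worker) })
  }
  return outcomes
}

// 10 workers; request #1 (/hard_work) lands on worker 0 and keeps it busy
const outcomes = simulateRoundRobin(10, 11, new Set([0]))

// Requests #2..#11 are the /easy_work calls; find the first one that gets stuck
const firstStuck = outcomes.slice(1).find((o) => o.onBusyWorker)
console.log(firstStuck) // { request: 11, worker: 0, onBusyWorker: true }
```

Request #11 is the 10th /easy_work call, which matches the behavior observed above.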

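Judging from these results, the Cluster module seems to solve part of our problem, but some requests are still affected. So can the Cluster module be used in real-world development to deal with CPU-intensive tasks? My answer: it depends. If your CPU-intensive endpoint is called infrequently and each computation doesn't take too long, you can absolutely use the Cluster module to optimize it. But if the endpoint is called frequently and each call is time-consuming, you may want to look at the Child Process or Worker Thread approaches instead.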

Finally, let's summarize the advantages of the Cluster module:

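High resource utilization: it makes full use of multi-core CPUs to improve request-processing efficiency.

Simple API design: it lets you implement simple load balancing and a certain degree of high availability. Note that I said "a certain degree": the Cluster module's high availability is single-machine, so if the host machine goes down, your service goes down with it. Higher availability has to come from a distributed cluster.

Highly independent processes: a system error in one process does not make the whole service unavailable.

With the advantages covered, let's look at the Cluster module's drawbacks: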

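High resource consumption: each child process is an independent Node runtime, essentially a separate Node program, so it takes up a lot of resources.

High inter-process communication overhead: child processes communicate through inter-process communication (IPC), which becomes costly if data is shared frequently.

Does not fully solve CPU-intensive tasks: it is still stretched thin when handling CPU-intensive work. With the Cluster module we can start more child processes to spread CPU-intensive tasks across processes and keep the other endpoints from freezing, but as you saw, this treats the symptom rather than the cause: if users call the CPU-intensive endpoint frequently, a large share of requests will still get stuck. Another way to optimize this scenario is the child_process module.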

Child Process lets us start child processes to perform CPU-intensive tasks. Let's first look at the code of the main process, master_process.js:

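```javascript
// node/master_process.js
const { fork } = require('child_process')
const http = require('http')
const path = require('path')
const url = require('url')

const server = http.createServer((req, resp) => {
  const urlParsed = url.parse(req.url, true)

  if (urlParsed.pathname === '/hard_work') {
    // For each hard_work request, start a child process to handle it
    const child = fork(path.join(__dirname, 'child_process.js'))
    // Tell the child process to start working
    child.send('START')
    // Receive the child's reply, return the result to the client, then stop the child
    child.on('message', () => {
      resp.write('hard work')
      resp.end()
      child.kill()
    })
  } else if (urlParsed.pathname === '/easy_work') {
    // Simple work stays in the main process
    resp.write('easy work')
    resp.end()
  } else {
    resp.end()
  }
})

server.listen(8080, () => {
  console.log('server is up...')
})
```

In the code above, each request to /hard_work is handled by a new child process started with fork; once the child finishes and we receive its reply, we return the result to the client and terminate the child with child.kill(). Note that in a real project we shouldn't create and destroy child processes this often either, because doing so is expensive; a better approach is to use a process pool. Here is the implementation of the child process (child_process.js):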

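```javascript
// node/child_process.js
const hardWork = () => {
  // 10 billion meaningless iterations
  for (let i = 0; i < 10000000000; i++) {}
}

process.on('message', (message) => {
  if (message === 'START') {
    // Start working
    hardWork()
    // When the work is done, notify the parent process
    process.send('DONE')
  }
})
```

The child process code is also simple: after starting, it listens for messages from the parent through process.on, performs the CPU-intensive computation when it receives the start command, and sends the result back to the parent.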

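Run master_process.js above and you'll find that even while /hard_work is being called, we can still call /easy_work freely and get an immediate response. There's no screenshot here; just imagine the process.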
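Since creating and destroying a child process per request is expensive, a process pool is the better approach. Below is a minimal sketch of one, under these assumptions: `ProcessPool`, `run`, and `close` are hypothetical names; the child program is inlined and started with `spawn` plus an `'ipc'` stdio entry (instead of `fork` with a separate file) so the example fits in one file; and a small summation stands in for hardWork.

```javascript
const { spawn } = require('child_process')

// Hypothetical child program, passed to `node -e`: for every job it receives
// over the IPC channel, it sums 0..n-1 and sends the answer back
const CHILD_SOURCE = `
process.on('message', ({ id, n }) => {
  let sum = 0
  for (let i = 0; i < n; i++) sum += i
  process.send({ id, sum })
})
`

class ProcessPool {
  constructor(size) {
    this.children = []
    this.idle = []
    this.queue = []
    this.pending = new Map() // job id -> resolve function
    this.nextId = 0
    for (let i = 0; i < size; i++) {
      // The 'ipc' stdio entry enables child.send() here and process.send() in the child
      const child = spawn(process.execPath, ['-e', CHILD_SOURCE], {
        stdio: ['inherit', 'inherit', 'inherit', 'ipc'],
      })
      child.on('message', ({ id, sum }) => {
        this.pending.get(id)(sum)
        this.pending.delete(id)
        this.idle.push(child) // the child is reused, not killed
        this.dispatch()
      })
      this.children.push(child)
      this.idle.push(child)
    }
  }

  // Queue a job; the promise resolves once some child has finished it
  run(n) {
    return new Promise((resolve) => {
      const id = this.nextId++
      this.pending.set(id, resolve)
      this.queue.push({ id, n })
      this.dispatch()
    })
  }

  // Hand queued jobs to idle children
  dispatch() {
    while (this.idle.length > 0 && this.queue.length > 0) {
      this.idle.pop().send(this.queue.shift())
    }
  }

  close() {
    for (const child of this.children) child.kill()
  }
}

// Two long-lived children serve three jobs
const pool = new ProcessPool(2)
const sums = Promise.all([pool.run(10), pool.run(100), pool.run(1000)])
sums.then((results) => {
  console.log(results) // [ 45, 4950, 499500 ]
  pool.close()
})
```

The pool size caps how many processes exist at once, which is what makes the resource usage controllable; extra jobs simply wait in the queue.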

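Besides fork, child_process also provides functions such as exec and spawn for starting child processes, and these processes can run arbitrary commands (exec runs them through a shell) rather than being limited to Node scripts. Interested readers can look them up in the official documentation; I won't go into more detail here.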
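As a minimal sketch of the standard input/output style of communication, the example below uses `spawn` to start a separate program, writes the input to its stdin, and reads the answer from its stdout. To keep it self-contained and portable, the "external program" is just another Node process (`process.execPath` with `-e`); in practice it could be any executable, such as an image-processing tool written in C. `runExternal` is a hypothetical helper name.

```javascript
const { spawn } = require('child_process')

// Hypothetical external program: reads numbers from stdin and prints their sum.
// Any executable that speaks stdin/stdout could take its place.
const PROGRAM = `
let input = ''
process.stdin.on('data', (chunk) => { input += chunk })
process.stdin.on('end', () => {
  const sum = input.trim().split(/\\s+/).map(Number).reduce((a, b) => a + b, 0)
  process.stdout.write(String(sum))
})
`

function runExternal(inputText) {
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ['-e', PROGRAM])
    let output = ''
    child.stdout.on('data', (chunk) => { output += chunk })
    child.on('error', reject)
    child.on('close', (code) => {
      if (code === 0) resolve(output)
      else reject(new Error(`child exited with code ${code}`))
    })
    // Send the input and close stdin so the child sees 'end'
    child.stdin.end(inputText)
  })
}

const answer = runExternal('1 2 3 4')
answer.then((out) => console.log(out)) // 10
```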

Finally, let's summarize the advantages of Child Process:

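Flexible: it is not limited to Node processes; we can run any shell command in the child process. This is actually a big advantage: if our CPU-intensive operation is implemented in another language (for example, image processing in C) and we don't want to reimplement it in Node or through a C++ binding, we can invoke that program with a shell command and communicate with it over standard input and output to get the result.

Fine-grained resource control: unlike the Cluster module, the Child Process approach can dynamically adjust the number of child processes to match the actual demand for CPU-intensive computation, giving fine-grained control over resources. In theory, then, it can solve the problem the Cluster module can't: a CPU-intensive endpoint that is called frequently.

However, the drawbacks of Child Process are also obvious: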

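High resource consumption: when describing fine-grained resource control above, I said it can only solve frequent calls to CPU-intensive endpoints in theory. That's because in practice our resources are limited, and every child process is an independent operating-system process that consumes a lot of resources. So for frequently called endpoints we need a lighter-weight approach, which is the Worker Thread I'll describe next.

Cumbersome inter-process communication: if the child process is also a Node application, things are manageable, because there is a built-in API for talking to the parent. If it isn't, we can only communicate through standard input and output or some other mechanism, which is quite a hassle.

Both the Cluster module and Child Process are built on child processes, and they share one major drawback: high resource consumption. To address this, Node has supported the worker_threads module since v10.5.0 (stable since v12.11.0). Worker threads are Node's lightweight, thread-based solution for CPU-intensive operations.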

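Like threads in other languages, Node's Worker Threads run your code in parallel. Note that this means parallelism, not merely concurrency. Concurrency only means that multiple tasks make progress within the same period of time, while parallelism means multiple tasks are executing at the same instant. A typical example of concurrency without parallelism is React's Fiber architecture: it schedules different tasks through time-slicing so that React rendering doesn't block the browser's other work, which means all of its operations still run on the same operating-system thread. One caveat: although parallelism means multiple tasks running at the same time, on a single-core CPU parallelism degrades to concurrency. That's because the CPU can only do one thing at a time, so when several threads need to run, the operating system has to preempt them and time-slice their execution. But that is the operating system's concern, not ours.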
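To make the distinction concrete, here is a minimal sketch of concurrency without parallelism, similar in spirit to (but far simpler than) React's time-slicing: two hypothetical tasks split their work into chunks and yield with `setImmediate` after each chunk, so their steps interleave even though everything runs on one thread. `runSliced` is an illustrative name, not an API.

```javascript
const log = []

// Runs `chunks` steps of a task, yielding to the event loop after each step
function runSliced(name, chunks) {
  return new Promise((resolve) => {
    let i = 0
    const step = () => {
      log.push(`${name}${i}`) // one small slice of work
      i++
      if (i < chunks) setImmediate(step) // yield, then continue on the next loop turn
      else resolve()
    }
    setImmediate(step)
  })
}

// Both tasks make progress within the same period, but never at the same instant
const interleaved = Promise.all([runSliced('A', 3), runSliced('B', 3)]).then(() => log)
interleaved.then((order) => console.log(order.join(' '))) // A0 B0 A1 B1 A2 B2
```

Worker threads, by contrast, can execute on separate CPU cores at the same instant, which is what makes them useful for CPU-intensive work.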

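Having covered how Node's Worker Threads resemble threads in other languages, let's look at how they differ. If you've done multithreaded programming in other languages, you'll find Node's multithreading quite different, because sharing data between Node threads is really cumbersome! Node doesn't let you share data through shared in-memory variables: ordinary values passed between threads are copied, an ArrayBuffer can be transferred, and only a SharedArrayBuffer is actually shared. Although this is inconvenient, it also means we don't have to worry much about a whole class of thread-safety problems, so it cuts both ways.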
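A minimal sketch of the SharedArrayBuffer case: the main thread hands a SharedArrayBuffer to a worker through `workerData`, both sides see the same bytes, and `Atomics` keeps the concurrent increments safe. The worker body is inlined with the `eval` option only so the example fits in one file; the iteration counts are arbitrary.

```javascript
const { Worker } = require('worker_threads')

// 4 bytes of memory that both threads can see (shared, not copied)
const shared = new SharedArrayBuffer(4)
const counter = new Int32Array(shared)

// Inline worker source (eval: true); it receives the same SharedArrayBuffer
const WORKER_SOURCE = `
const { workerData } = require('worker_threads')
const counter = new Int32Array(workerData)
for (let i = 0; i < 100000; i++) Atomics.add(counter, 0, 1)
`

const finished = new Promise((resolve, reject) => {
  const worker = new Worker(WORKER_SOURCE, { eval: true, workerData: shared })
  worker.on('error', reject)
  worker.on('exit', resolve)
})

// The main thread increments the same memory while the worker runs
for (let i = 0; i < 100000; i++) Atomics.add(counter, 0, 1)

const total = finished.then(() => Atomics.load(counter, 0))
total.then((value) => console.log(value)) // 200000
```

Using plain `counter[0]++` instead of `Atomics.add` could lose updates, which is exactly the kind of thread-safety problem that Node's copy-by-default design otherwise spares you.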

Next, let's see how to use Worker Threads to handle the CPU-intensive task above. First, here is the main thread code (master_thread.js):

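```javascript
// node/master_thread.js
const { Worker } = require('worker_threads')
const http = require('http')
const path = require('path')
const url = require('url')

const server = http.createServer((req, resp) => {
  const urlParsed = url.parse(req.url, true)

  if (urlParsed.pathname === '/hard_work') {
    // For every hard_work request, start a worker thread to handle it
    const worker = new Worker(path.join(__dirname, 'worker_thread.js'))
    // Tell the worker thread to start the task
    worker.postMessage('START')
    worker.on('message', () => {
      // After the worker replies, return the result to the client and stop the worker
      resp.write('hard work')
      resp.end()
      worker.terminate()
    })
  } else if (urlParsed.pathname === '/easy_work') {
    // Other simple operations run on the main thread
    resp.write('easy work')
    resp.end()
  } else {
    resp.end()
  }
})

server.listen(8080, () => {
  console.log('server is up...')
})
```

In the code above, every time the server receives a /hard_work request it starts a Worker thread with new Worker to handle it, and once the worker finishes the task we return the result to the client; this whole process is asynchronous. Next, let's look at the worker thread code (worker_thread.js):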

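```javascript
// node/worker_thread.js
const { parentPort } = require('worker_threads')

const hardWork = () => {
  // 10 billion meaningless iterations
  for (let i = 0; i < 10000000000; i++) {}
}

parentPort.on('message', (message) => {
  if (message === 'START') {
    hardWork()
    // Send an explicit completion message back to the main thread
    parentPort.postMessage('DONE')
  }
})
```

In the code above, the worker thread starts the CPU-intensive operation after receiving the command from the main thread, and finally uses parentPort.postMessage to tell the parent thread that the task is complete. Judging from the API, communication between parent and child threads is quite convenient.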
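If you want the per-request Worker pattern from master_thread.js in a reusable form, one option is to wrap it in a Promise. A minimal sketch, assuming a hypothetical `runInWorker` helper: the job is passed through `workerData`, the worker replies once through `parentPort.postMessage`, and `'error'` or a non-zero `'exit'` turns into a rejection. A small summation stands in for hardWork, and the worker source is inlined with `eval: true` only to keep the example in one file.

```javascript
const { Worker } = require('worker_threads')

// Inline worker source: sums 0..n-1 (a scaled-down hardWork) and replies once
const WORKER_SOURCE = `
const { parentPort, workerData } = require('worker_threads')
let sum = 0
for (let i = 0; i < workerData.n; i++) sum += i
parentPort.postMessage(sum)
`

// Run one job on a fresh worker thread and settle with its result
function runInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(WORKER_SOURCE, { eval: true, workerData: { n } })
    worker.once('message', resolve)
    worker.once('error', reject)
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`worker stopped with exit code ${code}`))
    })
  })
}

// The main thread stays free while the worker computes
const result = runInWorker(1e7)
result.then((sum) => console.log(sum)) // 49999995000000
```

Here the worker exits on its own after replying, because nothing else keeps its event loop alive; in an HTTP handler you would call resp.end() inside the `.then` callback.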

Finally, we will summarize the advantages and disadvantages of Worker Thread. First of all, I think its advantages are:

Low resource consumption: unlike the process-based Cluster module and Child Process approaches, Worker Threads are built on lighter-weight threads, so their resource overhead is relatively small. Yet small as they are, they are fully equipped: each Worker Thread has its own V8 instance (isolate) and its own event loop. This means that even if the main thread is stuck, our worker threads can keep working, which opens the door to a lot of interesting possibilities.

Efficient communication: unlike the previous two approaches, Worker Threads don't need IPC; all data is shared or transferred within the same process.
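A minimal sketch of in-process transfer, using a `MessageChannel` so no worker is needed: posting an ArrayBuffer with a transfer list moves ownership of its memory to the receiving side instead of copying it, and the sender's buffer becomes detached (`byteLength` 0). The buffer size and value are arbitrary.

```javascript
const { MessageChannel } = require('worker_threads')

const { port1, port2 } = new MessageChannel()

const buffer = new ArrayBuffer(8)
new Uint8Array(buffer)[0] = 42

// Resolves with the first byte of whatever arrives on port2
const received = new Promise((resolve) => {
  port2.on('message', (msg) => {
    resolve(new Uint8Array(msg)[0])
    port2.close() // closing one side closes the channel so the process can exit
  })
})

// Transfer (not copy) the buffer by listing it in the transferList
port1.postMessage(buffer, [buffer])

console.log(buffer.byteLength) // 0: the sender no longer owns the memory
received.then((value) => console.log(value)) // 42
```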

Its disadvantages are:

Weak isolation: because worker threads don't run in fully independent environments (they all live in one process), a thread that crashes can still affect the others. In that case you need to take additional measures to protect the other threads from being affected.

Complicated data sharing: compared with other back-end languages, sharing data in Node is still cumbersome, although this does spare us from many of the data-safety problems that come with multithreading.
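One such protective measure, sketched minimally: always listen for a worker's `'error'` event. An uncaught exception inside a worker is emitted as an `'error'` event on the Worker object in the main thread, and an `'error'` event with no listener is thrown there as an exception, which can take the whole process down. With a listener, the main thread can record the failure and keep serving. The crashing worker below is hypothetical and throws on purpose; `runGuarded` is an illustrative name.

```javascript
const { Worker } = require('worker_threads')

// Hypothetical worker that crashes on purpose
const CRASHING_WORKER = `throw new Error('boom')`

function runGuarded(source) {
  return new Promise((resolve) => {
    const worker = new Worker(source, { eval: true })
    worker.on('message', (value) => resolve({ ok: true, value }))
    // Handling 'error' keeps the worker's exception from crashing the main thread
    worker.on('error', (err) => resolve({ ok: false, error: err.message }))
  })
}

const outcome = runGuarded(CRASHING_WORKER)
outcome.then((r) => console.log(r, '(main thread still running)'))
```

This only covers JavaScript exceptions; a fatal process-level failure, such as the process running out of memory, still affects every thread in it.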

Choose the approach that fits your scenario: never adopt a solution just for the sake of using a particular technology.


The above is the detailed content of A brief analysis of Node's method of handling CPU-intensive tasks. For more information, please follow other related articles on the PHP Chinese website!