How does GitHub achieve high availability for MySQL? This article walks through the approach.

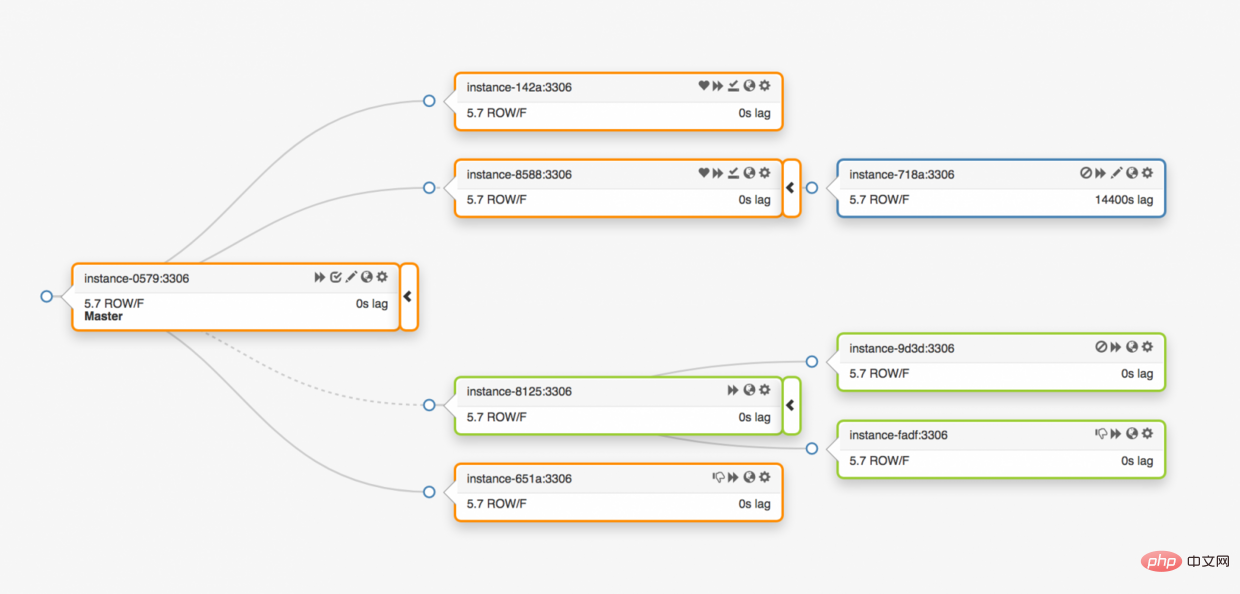

GitHub uses MySQL as the data store for everything that is not git data, so database availability is critical to the proper functioning of GitHub. The GitHub website, the GitHub API, the authentication service, and more all need database access. GitHub runs multiple MySQL clusters to support different services and tasks. The clusters use a traditional master-replica architecture: one node in the cluster (the master) accepts writes, and the remaining nodes (the replicas) asynchronously replay changes from the master and serve reads.

The availability of the master is crucial. Once the master goes down, the cluster cannot accept writes: any data that needs to be persisted cannot be stored. As a result, any change on GitHub, such as commits, issues, user creation, code reviews, or repository creation, cannot be completed.

To keep the business running, the cluster must always have an available node that accepts writes. Equally important, we must be able to quickly discover which node that is.

In other words, if the master goes down, we must ensure that a new master comes online quickly and that the other nodes and clients can quickly identify it. The time for fault detection, plus the time for failover, plus the time for everyone to identify the new master, adds up to the total outage time.

This article explains GitHub's MySQL high availability and master service discovery solution, which allows us to reliably run operations across data centers, tolerate data center isolation, and shorten downtime in case of failure.

The solution described in this article is an improved version of GitHub's previous high availability solution. As mentioned before, a MySQL high availability strategy must adapt to business changes. We expect MySQL and other services at GitHub to have high availability solutions that can cope with change.

When designing high availability and service discovery system solutions, starting from the following questions may help us quickly find a suitable solution:

In order to explain the above issues, let’s first take a look at our previous high availability solution and why we want to improve it.

The previous solution used orchestrator for fault detection and failover, and VIPs and DNS for master discovery.

The client finds the master by resolving a name, such as mysql-writer-1.github.net, to the virtual IP address (VIP) held by the master.

Therefore, under normal circumstances, the client simply resolves the name and connects to the master at the resulting IP.

Consider the topology of three data centers:

When the master fails, one of the replicas must be promoted to become the new master.

orchestrator detects the failure, selects a new master, and then reassigns the name and the virtual IP (VIP). The client itself does not know that the master has changed. All the client has is the name of the master, so this name must now resolve to the new master. Consider the following:

VIPs are cooperative: the virtual IP is claimed and owned by the database server itself. A server must send an ARP request to acquire or release the VIP. Before the new master can acquire the VIP, the old server must first release it. This process has some undesirable side effects:

VIPs are also constrained by physical location, depending on where a switch or router sits. Therefore we can only reassign a VIP to a server in the same local environment. In particular, there are cases where we cannot assign the VIP to a server in another data center and must make a DNS change instead.

These limitations alone were enough to push us to look for a new solution, but there was more to consider:

The master uses the pt-heartbeat service to inject heartbeats for replication lag measurement and throttling control. The service must be started on the newly promoted master. If possible, the service on the old master is shut down when the master is replaced.

Similarly, Pseudo-GTID is managed by the server itself. It needs to be started on the new master and preferably stopped on the old master.

The new master is set to be writable. If possible, the old master is set to read_only.

These extra steps are a factor in total downtime and introduce their own glitches and friction.

The solution worked, and GitHub has successfully failed over MySQL with it, but we wanted our HA to improve in the following areas:

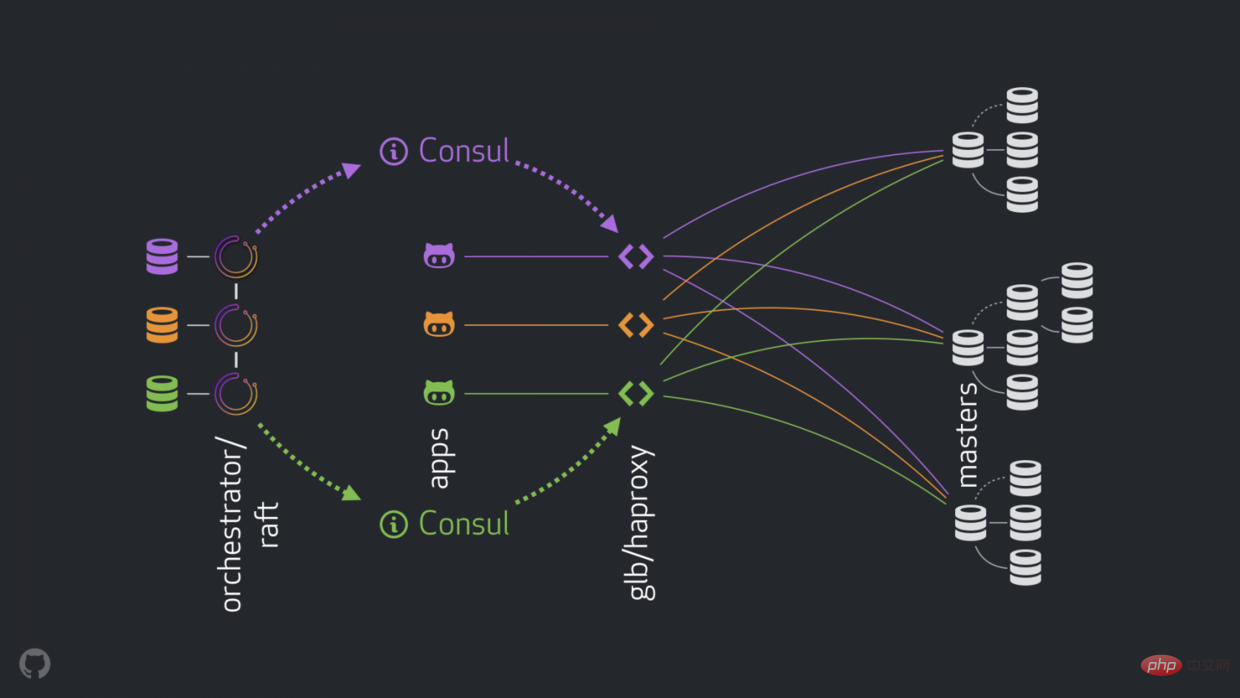

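The new strategy improves, solves, or optimizes the problems mentioned above. The current high availability setup consists of orchestrator for fault detection and failover, Consul for service discovery, GLB/HAProxy as the proxy layer between clients and the writer node, and anycast for network routing.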

The new setup removes VIP and DNS changes altogether. Although we introduce more components, we are able to decouple them, simplify each task, and rely on solid and stable solutions. Details are as follows.

Normally, the application connects to the write node through GLB/HAProxy.

The application never knows the identity of the master. As before, it uses a name. For example, the master of cluster1 is mysql-writer-1.github.net. In the current setup, however, this name resolves to an anycast IP.

With anycast, the name resolves to the same IP everywhere, but traffic is routed differently depending on the client's location. In particular, each of our data centers has GLB, our highly available load balancer, deployed on multiple boxes. Traffic to mysql-writer-1.github.net is always directed to the GLB cluster in the local data center. This way all clients are served by a local proxy.

We run GLB on top of HAProxy. HAProxy has writer pools: one pool per MySQL cluster. Each pool has exactly one backend server: the cluster master. All GLB/HAProxy boxes in all data centers have the same pools, and they all point to the same backend servers in those pools. So if the application wants to write to mysql-writer-1.github.net, it does not matter which GLB server it connects to. It is always routed to the actual cluster1 master.

As far as the application is concerned, discovery ends at GLB, and rediscovery is never required. GLB is responsible for directing all traffic to the correct destination.

How does GLB know which servers to list as backends, and how do we propagate changes to GLB?

Consul is known for its service discovery solutions and also provides DNS services. However, in our solution we use it as a highly available key-value (KV) store.

In Consul’s KV storage, we write the identity of the cluster master node. For each cluster, there is a set of KV entries indicating the cluster's master fqdn, port, ipv4, ipv6.
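As a minimal sketch of what such a set of entries could look like, the snippet below builds the four values for one cluster. The key prefix `mysql/master`, the host name, and the addresses are assumptions made for illustration (the article does not give the real key names), and a plain dictionary stands in for the Consul KV store.

```python
from typing import Dict

# Hypothetical key layout; the article does not give the real key names.
KEY_PREFIX = "mysql/master"


def master_kv_entries(cluster: str, fqdn: str, port: int, ipv4: str, ipv6: str) -> Dict[str, str]:
    """Build the set of KV entries that identify one cluster's master."""
    base = f"{KEY_PREFIX}/{cluster}"
    return {
        f"{base}/fqdn": fqdn,
        f"{base}/port": str(port),
        f"{base}/ipv4": ipv4,
        f"{base}/ipv6": ipv6,
    }


def write_master(store: Dict[str, str], entries: Dict[str, str]) -> None:
    """Write entries into a KV store (a plain dict stands in for Consul here)."""
    store.update(entries)


kv_store: Dict[str, str] = {}
entries = master_kv_entries("cluster1", "db-1.example.net", 3306, "10.0.0.10", "fd00::10")
write_master(kv_store, entries)
write_master(kv_store, entries)  # repeating the same write leaves the store unchanged
```

Because the write is a plain overwrite of the same keys, repeating it with identical values is harmless, which matters when several writers report the same master.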

Each GLB/HAProxy node runs consul-template: a service that listens for changes in Consul data (in our case, changes to the cluster master data). consul-template generates a valid configuration file and is able to reload HAProxy when the configuration changes.

So each GLB/HAProxy machine observes Consul's change to the master identity, then it reconfigures itself, sets the new master as a single entity in the cluster backend pool, and reloads to reflect these changes.
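The reconfiguration step can be illustrated roughly as follows. This is not consul-template's own template syntax, only a Python sketch of the idea: read the master entries, render a writer pool with a single server, and reload only if the rendered text changed. The key names, host names, and the pool name are assumptions, and the rendered fragment omits the `bind` line and other settings a real HAProxy section would need.

```python
from typing import Dict


def render_writer_pool(cluster: str, kv: Dict[str, str]) -> str:
    """Render an HAProxy-style listen section with the master as the only server."""
    base = f"mysql/master/{cluster}"  # hypothetical key prefix
    fqdn = kv[f"{base}/fqdn"]
    ipv4 = kv[f"{base}/ipv4"]
    port = kv[f"{base}/port"]
    return (
        f"listen mysql_{cluster}_writer\n"
        f"    mode tcp\n"
        f"    server {fqdn} {ipv4}:{port} check\n"
    )


kv = {
    "mysql/master/cluster1/fqdn": "db-1.example.net",
    "mysql/master/cluster1/ipv4": "10.0.0.10",
    "mysql/master/cluster1/port": "3306",
}
before = render_writer_pool("cluster1", kv)

# A failover changes the master identity in the KV store.
kv["mysql/master/cluster1/fqdn"] = "db-2.example.net"
kv["mysql/master/cluster1/ipv4"] = "10.0.0.11"
after = render_writer_pool("cluster1", kv)

# Reload only when the rendered configuration actually differs.
reload_needed = before != after
```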

At GitHub, we have a Consul setup in each data center, and each setup is highly available. However, these setups are independent of each other. They do not replicate between each other and do not share any data.

How does Consul learn about changes, and how is information distributed across DCs?

We run an orchestrator/raft setup: orchestrator nodes communicate with each other via raft consensus. Each data center has one or two orchestrator nodes.

orchestrator is responsible for fault detection and MySQL failover, as well as for communicating the change of master to Consul. Failover is operated by a single orchestrator/raft leader node, but the message that the cluster now has a new master is propagated to all orchestrator nodes via the raft mechanism.

When orchestrator nodes receive the message of a master change, they each communicate with their local Consul setup: they each invoke a KV write. A DC with more than one orchestrator node will write to Consul multiple (identical) times.

In the case of a master crash:

- The orchestrator nodes detect the failure.
- The orchestrator/raft leader kicks off a recovery, and a replica is promoted to be the new master.
- orchestrator/raft advertises the master change to all raft cluster nodes.
- Each orchestrator/raft member receives the master change notification and writes the identity of the new master to its local Consul KV store.
- consul-template, running on each GLB/HAProxy box, observes the change in the Consul KV store, then reconfigures and reloads HAProxy.
- Client traffic is redirected to the new master.

Each component has a clear responsibility, and the entire design is both decoupled and simplified. orchestrator does not know about the load balancers. Consul does not need to know where the information came from. The proxies only care about Consul. The clients only care about the proxy.
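The propagation described above can be sketched as a small in-memory simulation. All class and function names here are hypothetical stand-ins invented for illustration, not orchestrator, Consul, or HAProxy APIs; the point is only to show that each component talks to its immediate neighbor and nothing else.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Consul:
    """Stand-in for one data center's independent Consul KV store."""
    kv: Dict[str, str] = field(default_factory=dict)


@dataclass
class Proxy:
    """Stand-in for a GLB/HAProxy box watching its local Consul."""
    consul: Consul
    backend: str = ""

    def sync(self, cluster: str) -> bool:
        """Reconfigure if the master in the local KV store changed; True means a reload."""
        master = self.consul.kv.get(f"mysql/master/{cluster}/fqdn", "")
        if master != self.backend:
            self.backend = master
            return True
        return False


@dataclass
class OrchestratorNode:
    """Stand-in for an orchestrator/raft member that writes to its local Consul."""
    consul: Consul

    def on_master_change(self, cluster: str, new_master: str) -> None:
        self.consul.kv[f"mysql/master/{cluster}/fqdn"] = new_master


def fail_over(nodes: List[OrchestratorNode], cluster: str, new_master: str) -> None:
    """The leader has promoted new_master; every raft member records it locally."""
    for node in nodes:
        node.on_master_change(cluster, new_master)


# Three data centers; the first one runs two orchestrator nodes.
consuls = [Consul(), Consul(), Consul()]
nodes = [OrchestratorNode(consuls[0]), OrchestratorNode(consuls[0]),
         OrchestratorNode(consuls[1]), OrchestratorNode(consuls[2])]
proxies = [Proxy(c) for c in consuls]

fail_over(nodes, "cluster1", "db-2.example.net")
reloaded = [p.sync("cluster1") for p in proxies]
```

The two nodes sharing the first Consul write the same value twice, which leaves the store in the same state as a single write.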

Additionally:

To further protect traffic, we also have the following:

HAProxy is configured with a very short hard-stop-after. When it reloads with a new backend server in the writer pool, it automatically terminates any existing connections to the old master. With hard-stop-after, we do not even need the clients' cooperation, which mitigates split-brain situations. It is worth noting that this is not airtight: some time passes before the old connections are terminated. But after a certain point, we are comfortable and do not expect any nasty surprises. We address further concerns and pursue the HA goals in the following sections.

orchestrator uses a holistic approach to detect faults and is therefore very reliable. We observed no false positives: we had no premature failovers and therefore did not suffer unnecessary downtime.

orchestrator/raft further addresses the case of complete DC network isolation (aka DC fencing). DC network isolation can cause confusion: servers within that DC can still communicate with each other. Is it they that are isolated from the other DCs, or is it the other DCs that are isolated?

In an orchestrator/raft setup, the raft leader node is the one that runs failovers. The leader is a node that has the support of the majority of the group (the quorum). Our orchestrator node deployment is such that no single data center holds a majority, and any n-1 data centers do.

When the DC network is completely isolated, orchestrator nodes in the DC will be disconnected from peer nodes in other DCs. Therefore, the orchestrator node in an isolated DC cannot become the leader of the raft cluster. If any such node happens to be the leader, it will step down. A new leader will be assigned from any other DC. This leader will be supported by all other DCs that can communicate with each other.

Therefore, the orchestrator node calling the shots will be one outside the network-isolated data center. If there is a master in the isolated DC, orchestrator will initiate a failover, replacing it with a server from one of the available DCs. We mitigate DC isolation by delegating the decision to the quorum in the non-isolated DCs.
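The quorum argument can be checked with a few lines of arithmetic. The sketch below assumes a hypothetical deployment of one orchestrator node in each of three data centers (the article only says each DC has one or two nodes) and tests whether a given set of mutually reachable DCs holds a strict majority.

```python
from typing import Dict, Set


def can_elect_leader(nodes_per_dc: Dict[str, int], reachable_dcs: Set[str]) -> bool:
    """True if the orchestrator nodes in reachable_dcs form a strict majority."""
    total = sum(nodes_per_dc.values())
    reachable = sum(n for dc, n in nodes_per_dc.items() if dc in reachable_dcs)
    return reachable > total // 2


# Hypothetical deployment: one orchestrator node in each of three DCs.
deployment = {"dc1": 1, "dc2": 1, "dc3": 1}

isolated_side = can_elect_leader(deployment, {"dc1"})         # dc1 cut off alone
majority_side = can_elect_leader(deployment, {"dc2", "dc3"})  # the rest still connected
```

With this layout the isolated data center can never hold a majority on its own, while the remaining two always can, which is the property the deployment relies on.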

Total downtime can be further reduced by advertising the master change sooner. How can this be achieved?

When orchestrator starts a failover, it observes the fleet of servers available for promotion. Understanding replication rules and abiding by hints and limitations, it is able to make an educated decision on the best course of action.

orchestrator may recognize that a server available for promotion is also an ideal candidate.

In that case, orchestrator first makes the server writable and then immediately advertises the promotion (in our case, by writing to the Consul KV store), even while it asynchronously begins repairing the replication tree, an operation that usually takes a few more seconds. It is likely that the replication tree will be intact by the time the GLB servers have fully reloaded, but this is not strictly required. The server is good to receive writes!

With MySQL semi-synchronous replication, the master does not acknowledge a transaction commit until the change is known to have been sent to one or more replicas. This provides a way to achieve lossless failover: any change applied on the master is either applied or waiting to be applied on one of the replicas.

Consistency comes at a cost: a risk to availability. If no replica acknowledges receipt of the changes, the master blocks and writes stall. Fortunately, there is a timeout configuration after which the master can revert to asynchronous replication mode, making writes available again.

We have set the timeout to a reasonably low value: 500ms. That is sufficient to ship changes from the master to replicas in the local DC, and also to remote DCs. With this timeout we observe perfect semi-synchronous behavior (no fallback to asynchronous replication) and are comfortable with a very short blocking period in case of acknowledgment failure.

We enable semi-sync on local DC replicas, and in the event of the master's death we expect (although do not strictly enforce) a lossless failover. Lossless failover on a complete DC failure is costly and we do not expect it.

While experimenting with the semi-sync timeout, we also observed a behavior that works in our favor: in the case of a master failure, we are able to influence the identity of the ideal candidate. By enabling semi-sync on designated servers and marking them as candidates, we are able to reduce total downtime by influencing the outcome of a failure. In our experiments, we observed that we typically end up with the ideal candidate and can therefore advertise quickly.

Heartbeat injection

We do not manage the startup and shutdown of the pt-heartbeat service on promoted or demoted hosts, but instead run it everywhere at all times. This required some patching so that pt-heartbeat can cope with servers that change back and forth between read_only and writable, or that crash completely.

In our current setup, the pt-heartbeat service runs on the master and on the replicas. On the master, it generates heartbeat events. On replicas, it identifies that the server is read_only and periodically rechecks its status. Once a server is promoted to master, pt-heartbeat on that server identifies the server as writable and begins injecting heartbeat events.

orchestrator ownership delegation

We further delegated to orchestrator: Pseudo-GTID injection; setting the promoted master as writable; and, if possible, setting the old master to read_only.

This reduces friction around every new master. A newly promoted master is clearly expected to be alive and accessible, otherwise we would not promote it. Therefore, it makes sense to let orchestrator apply the changes directly to the promoted master.

Limitations and drawbacks

The proxy layer keeps the application unaware of the identity of the master, but it also masks the identity of the application from the master. All the master sees is connections coming from the proxy layer, and we lose information about the actual origin of the connection.

As distributed systems go, we are still left with unhandled scenarios.

Notably, in a data center isolation scenario, assuming the master is located in the isolated DC, applications in that DC can still write to the master. This can lead to inconsistent state once the network is restored. We are working to mitigate this split-brain by implementing a reliable STONITH from within the very isolated DC. As before, some time will pass before the master is brought down, and there may be a brief period of split-brain. The operational cost of avoiding split-brain altogether is very high.

More scenarios exist: a Consul outage at the time of failover; partial DC isolation; others. We know it is impossible to close every loophole in a distributed system of this nature, so we focus on the most important cases.

Conclusion

Our orchestrator/GLB/Consul setup provides us with between 10 and 13 seconds of total downtime in typical cases. In less frequent cases we see up to 20 seconds of total downtime, and in extreme cases up to 25 seconds.

The orchestration/proxy/service-discovery paradigm uses well-known and trusted components in a decoupled architecture, which makes it easier to deploy, operate, and observe, and each component can be scaled up or down independently. We continue to test our setup and look for improvements.

Original address: https://github.blog/2018-06-20-mysql-high-availability-at-github/

Translation address: https://learnku.com/mysql/t/36820
