A brief analysis of the principles of high concurrency in Node

Let's first look at a few common claims:

- Node.js uses a single-threaded, non-blocking I/O model

- Node.js is suitable for high concurrency

- Node.js is suitable for I/O-intensive applications, but not for CPU-intensive applications

Before deciding whether these claims hold, and why, let's do some preparatory work.

Let’s start from the beginning

What a typical web application does

- Computation (executing business logic, mathematical operations, function calls, and so on; this work is done mainly on the CPU)

- I/O (reading and writing files, databases, network requests, and so on; this work is done mainly on I/O devices such as disks and network cards)

How traditional web applications are typically implemented

- Multi-process: one (child) process is forked per request, using blocking I/O (BIO)

- Multi-threaded: one thread is created per request, using blocking I/O

Pseudocode for a multi-process web application

    listenFd = new Socket(); // create the listening socket
    Bind(listenFd, 80);      // bind the port
    Listen(listenFd);        // start listening
    for ( ; ; ) {
        // accept a client request; the connection is established on a new socket
        connFd = Accept(listenFd);
        // fork a child process
        if ((pid = Fork()) === 0) {
            // in the child process
            // BIO read of the network request data: blocks, so process scheduling occurs
            request = connFd.read();
            // BIO read of a local file: blocks, so process scheduling occurs
            content = ReadFile('test.txt');
            // write the file content to the response
            Response.write(content);
        }
    }

Multi-threaded applications work much like multi-process ones, except that each request is assigned to a thread instead of a process. Threads are lighter than processes and consume fewer system resources, and their context switches are cheaper. (A note on context switching: a single CPU core can execute only one process or thread at any moment, so to give the appearance of parallelism at the macro level, the system switches among processes or threads in time slices so that each one gets a chance to run.) Threads also share memory more easily, which simplifies development.

The discussion above touches on the two core aspects of a web application: the process (thread) model and the I/O model. So what exactly is blocking I/O, and what other I/O models are there? First, let's look at what blocking means.

What is blocking? What is blocking I/O?

In short, blocking means that until the function call returns, the current process (or thread) is suspended and enters a waiting state. While it waits, the operating system schedules other processes (or threads) onto the CPU. The function returns to the caller only after all of its internal work is complete.

Blocking I/O therefore means that after the application invokes an I/O operation through an API, the current process (or thread) enters a waiting state and its code cannot continue. The operating system can then switch to another runnable process (or thread). Only after the underlying I/O request has been processed does the call return and execution continue.
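As a small illustration of a blocking call in Node.js itself, the sketch below uses `fs.readFileSync`, which does not return until the file content is available. The scratch file name is made up for the demonstration; the point is only that the statements run strictly in order, with the thread waiting inside the read.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file, created only for this demonstration.
const file = path.join(os.tmpdir(), `blocking-demo-${process.pid}.txt`);
fs.writeFileSync(file, 'hello');

const order = [];
order.push('before read');

// readFileSync returns only once the data is available, so nothing
// else in this thread runs while the read is in progress.
const content = fs.readFileSync(file, 'utf8');
order.push(`read returned: ${content}`);

order.push('after read');

fs.unlinkSync(file);
console.log(order);
```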

What's wrong with the multi-process (thread) blocking I/O model?

Now that we understand blocking and blocking I/O, let's analyze the drawbacks of the multi-process (thread) blocking I/O model used by traditional web applications.

Because each request needs its own process (or thread), such a system must maintain a large number of processes (or threads) under heavy concurrency and perform a large number of context switches, all of which consume CPU, memory, and other system resources. When a burst of concurrent requests arrives, CPU and memory overhead rises sharply, which can quickly bring down the whole system and make the service unavailable.
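To get a feel for the scale, here is a back-of-the-envelope calculation. It assumes, purely for illustration, that each thread reserves 1 MiB of stack; the real figure depends on the platform and configuration, and this ignores every other per-thread cost.

```javascript
// Rough estimate of the memory reserved for thread stacks alone in a
// thread-per-request server. The 1 MiB default is an assumed figure,
// not a measured one.
function stackMemoryGiB(concurrentRequests, stackBytesPerThread = 1024 * 1024) {
  return (concurrentRequests * stackBytesPerThread) / 1024 ** 3;
}

console.log(stackMemoryGiB(1000).toFixed(2));  // GiB for 1,000 concurrent requests
console.log(stackMemoryGiB(10000).toFixed(2)); // GiB for 10,000 concurrent requests
```

Under that assumption, ten times the concurrency means ten times the reserved stack memory, before any context-switching cost is counted.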

How Node.js applications are implemented

Next, let's look at how Node.js applications are implemented.

- Event-driven, single-threaded (main thread)

- Non-blocking I/O

As the official website states, the two main features of Node.js are its single-threaded, event-driven design and its "non-blocking" I/O model. The single-threaded, event-driven part is easier to understand, since front-end developers are already familiar with JavaScript's single thread and event loop. So let's focus on what this "non-blocking I/O" really is. First, here is a typical piece of Node.js server code.

    const net = require('net');
    const fs = require('fs');

    const server = net.createServer();
    server.listen(80); // listen on the port

    // listen for the event fired when a connection is established
    server.on('connection', (socket) => {
        // listen for the event fired when request data is read
        socket.on('data', (data) => {
            // read a local file asynchronously
            fs.readFile('test.txt', (err, content) => {
                if (err) {
                    // close the connection if the file cannot be read
                    socket.destroy();
                    return;
                }
                // write the file content to the response
                socket.write(content);
                socket.end();
            });
        });
    });

As you can see, Node.js lets us perform I/O operations asynchronously: an I/O call made through the API returns immediately, and other code can continue to run. So why is I/O in Node.js "non-blocking"? Before answering that question, let's do some more preparation.
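The "returns immediately" behavior can be observed directly. The sketch below records the order in which things happen around an `fs.readFile` call. The scratch file and the `done` promise are illustrative additions that exist only so the sequence can be inspected once the callback has run.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file, created only for this demonstration.
const file = path.join(os.tmpdir(), `async-demo-${process.pid}.txt`);
fs.writeFileSync(file, 'hello');

const order = [];

// `done` resolves after the readFile callback has run.
const done = new Promise((resolve, reject) => {
  order.push('before readFile');
  fs.readFile(file, 'utf8', (err, content) => {
    if (err) return reject(err);
    order.push(`callback: ${content}`);
    fs.unlinkSync(file);
    resolve(order);
  });
  // readFile has already returned here, although the data has not arrived yet.
  order.push('after readFile');
});

done.then((result) => console.log(result));
```

The code after the call runs before the callback does, which is what "non-blocking" looks like from the application's point of view.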

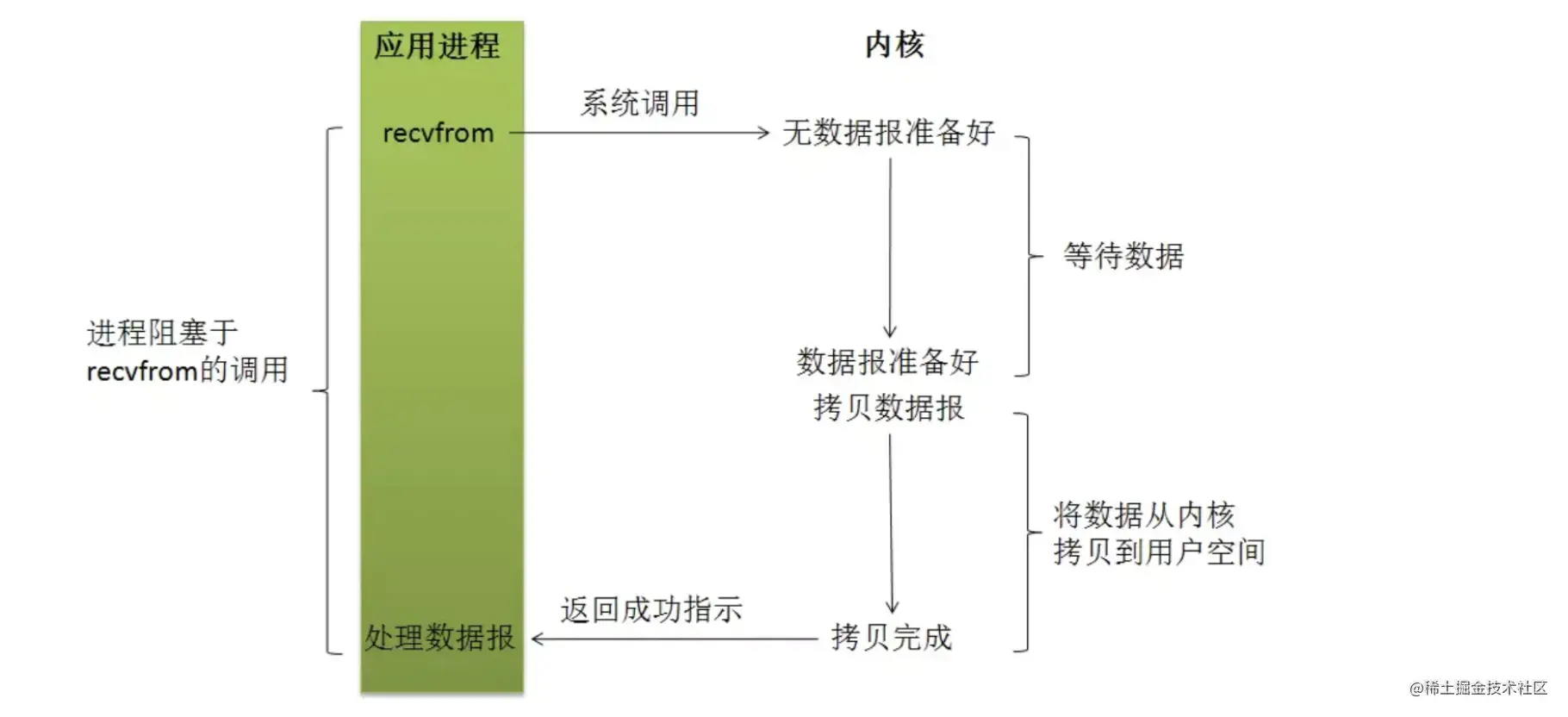

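Basic steps of a read operation

First, let's look at the steps a read operation goes through:

- The user program calls an I/O API, which issues a system call internally; the process switches from user mode to kernel mode

- The system issues the I/O request and waits for the data to be ready (for network I/O, waiting for data to arrive at the socket from the network; for disk I/O, waiting for the system to read the data from disk)

- Once the data is ready, it is copied into the kernel buffer

- The data is copied from kernel space to user space, and the user program obtains it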
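From application code, these steps are hidden behind a single call. As a rough sketch, the synchronous `fs.readSync` API makes the user-space side visible: the program supplies its own buffer, and the call returns once data has been placed in it. The scratch file name and the 5-byte buffer size are arbitrary choices for the demonstration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file, created only for this demonstration.
const file = path.join(os.tmpdir(), `read-steps-${process.pid}.txt`);
fs.writeFileSync(file, 'hello world');

// User-space buffer that receives the data.
const userBuffer = Buffer.alloc(5);

const fd = fs.openSync(file, 'r');
// readSync(fd, buffer, offset, length, position): read up to 5 bytes,
// starting at file position 0, into userBuffer starting at offset 0.
// The call returns only after the data has been copied into the buffer.
const bytesRead = fs.readSync(fd, userBuffer, 0, userBuffer.length, 0);
fs.closeSync(fd);
fs.unlinkSync(file);

console.log(bytesRead, userBuffer.toString('utf8'));
```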

Next, let's look at the I/O models available in the operating system.

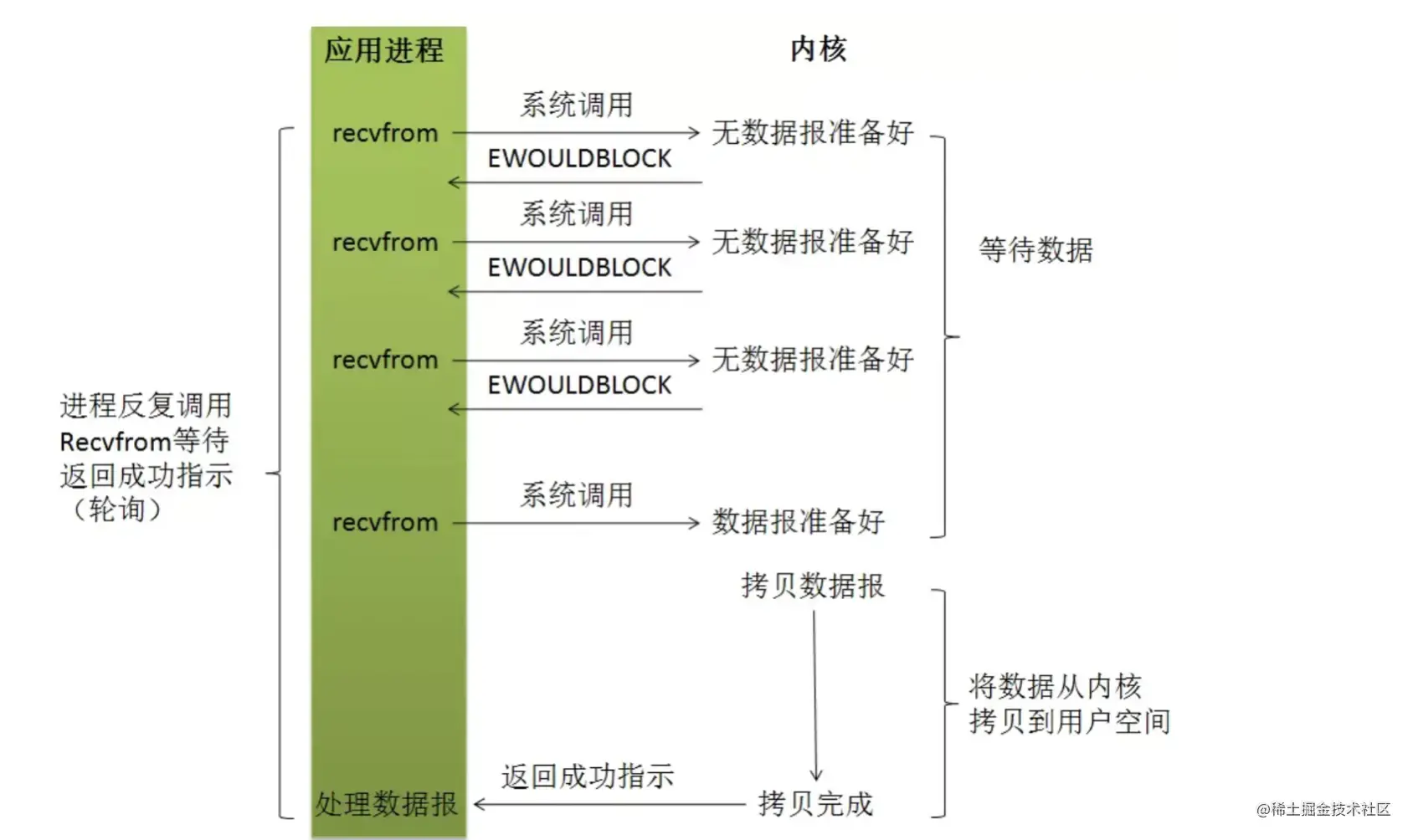

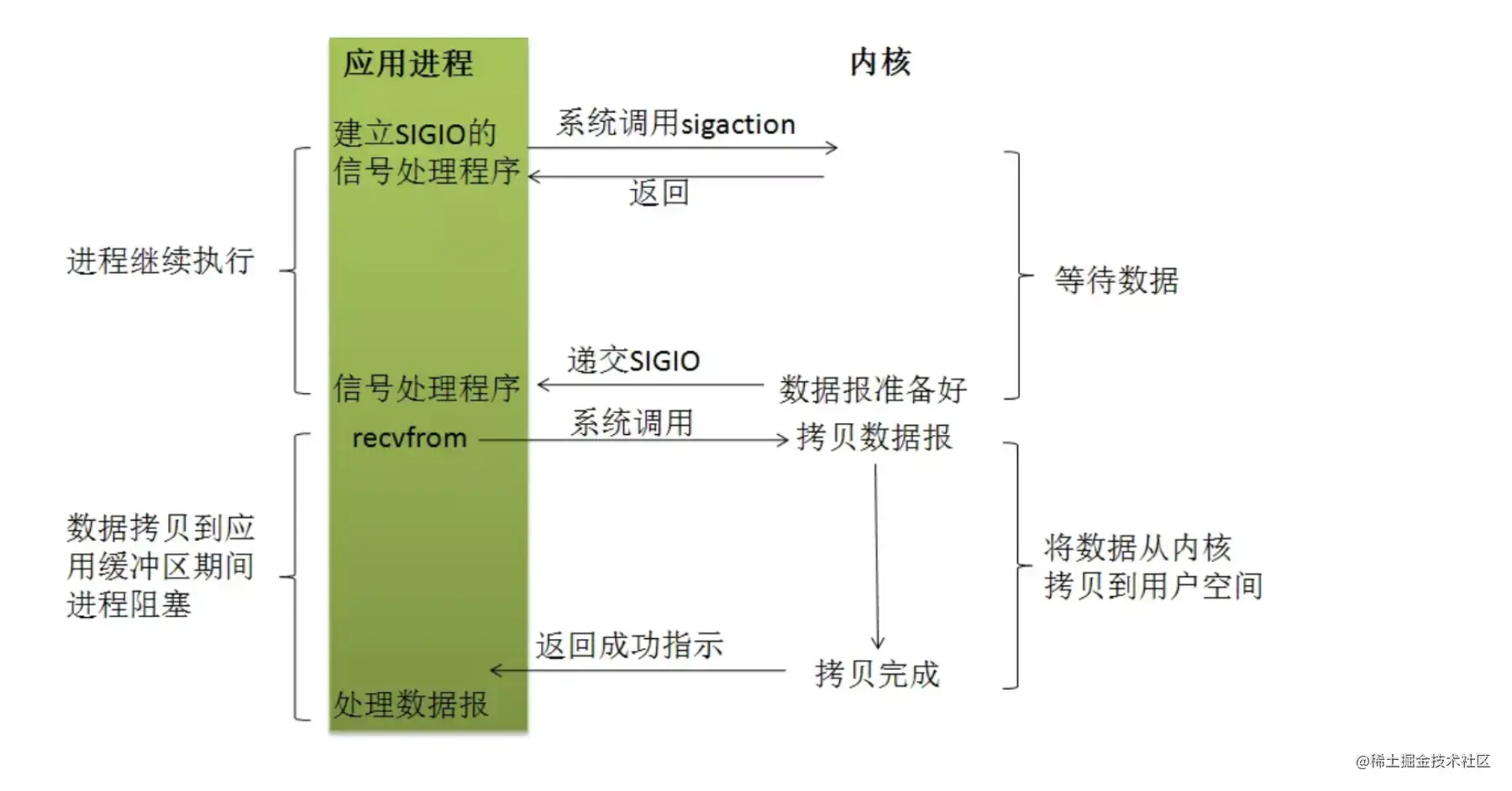

Several I/O models

Blocking I/O

Non-blocking I/O

I/O multiplexing (a process can watch several I/O devices at once for readiness)

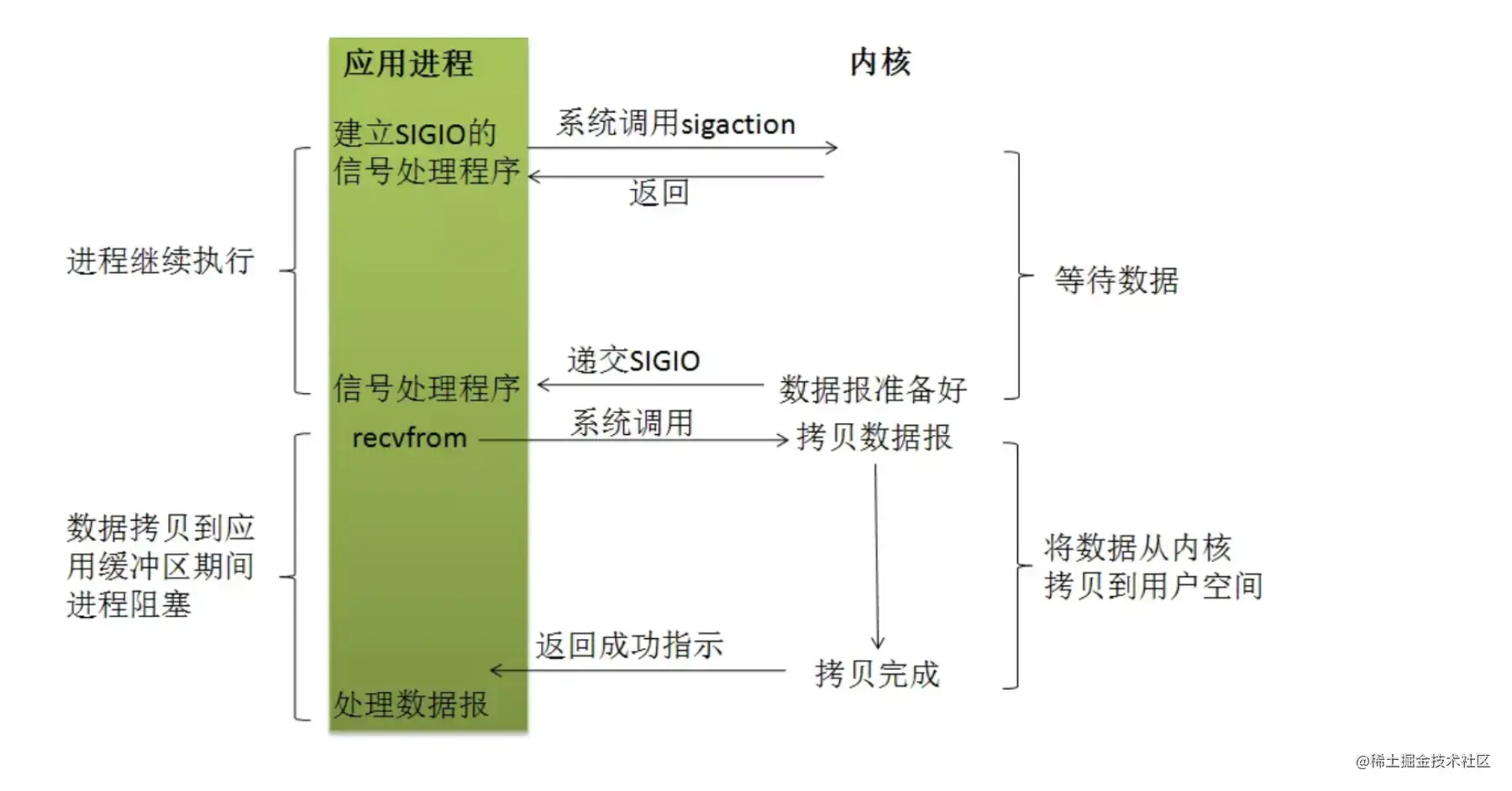

Signal-driven I/O

Asynchronous I/O

So which I/O model does Node.js actually use? Is it the "non-blocking I/O" shown in the diagram above? Before answering, let's look at the architecture of Node.js.

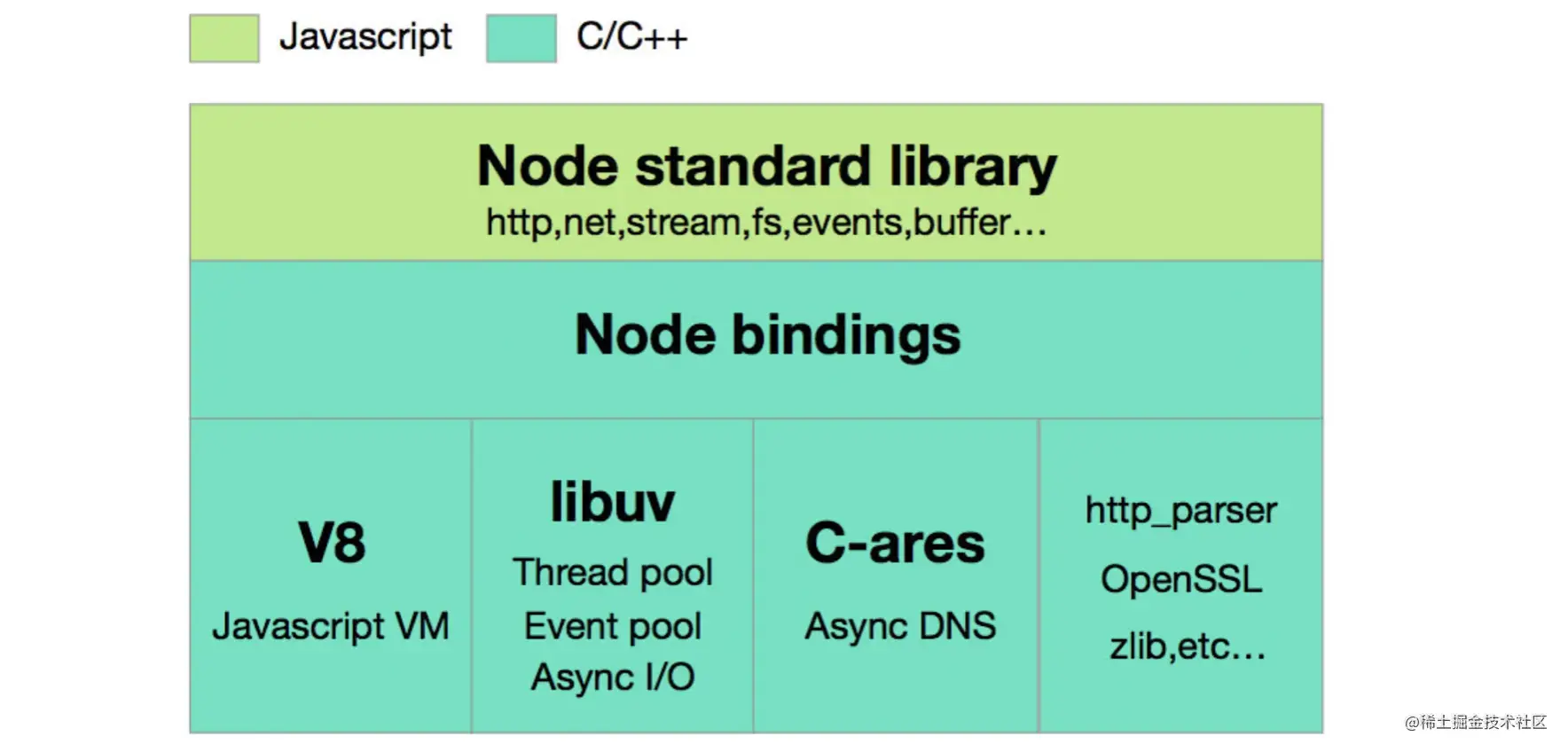

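Node.js architecture: analysis of the thread and I/O models

The top layer is the API library available to us when writing Node.js application code. The layer below it is a middle layer that connects Node.js with the low-level libraries it depends on, for example by letting JavaScript code call into C libraries. In the bottom layer you can see V8, familiar to front-end developers, along with some other low-level dependencies. Note the library called libuv. What does it do? As the diagram shows, libuv provides Node.js with its underlying thread pool, asynchronous I/O, and related features. libuv is a cross-platform C library that uses different implementations on different platforms such as Windows and Linux. I focus here on the Linux implementation, because our applications run on Linux most of the time and the differences between platforms do not affect our analysis and understanding of how Node.js works.

For the I/O model of Node.js on Linux, libuv provides two different implementations for two different scenarios. Network I/O is handled mainly through epoll (that is, I/O multiplexing; the earlier diagram uses the select function for I/O multiplexing, and epoll can be thought of as an upgraded version of select, which I will not analyze in detail here). File I/O is handled by multiple threads (a thread pool) plus blocking I/O, which simulates asynchronous I/O.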
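A minimal sketch of what this means for application code: several file reads can be started from the single JavaScript thread without waiting for any of them, and the blocking work is done elsewhere (by libuv's thread pool, according to the description above). The temporary directory and file names are made up for the demonstration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch directory and files for the demonstration.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'pool-demo-'));
const names = ['a.txt', 'b.txt', 'c.txt'];
for (const name of names) {
  fs.writeFileSync(path.join(dir, name), `content of ${name}`);
}

// Start all reads at once; each call returns a promise immediately,
// so the main thread is free while the reads are in progress.
const reads = names.map((name) => fs.promises.readFile(path.join(dir, name), 'utf8'));

// Collect the results once every read has completed, then clean up.
const result = Promise.all(reads).then((contents) => {
  fs.rmSync(dir, { recursive: true, force: true });
  return contents;
});

result.then((contents) => console.log(contents));
```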

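Below is some pseudocode I wrote for the underlying implementation of Node.js, to help with understanding.

    listenFd = new Socket(); // create the listening socket
    Bind(listenFd, 80);      // bind the port
    Listen(listenFd);        // start listening
    for ( ; ; ) {
        // block on epoll, waiting for network data to be ready
        // epoll can watch listenFd and many client connections at once for ready data
        // clients is the list of all current client connections; curFd is a ready connection returned by epoll
        curFd = Epoll(listenFd, clients);
        if (curFd === listenFd) {
            // the listening socket received a new client connection; create a socket for it
            connFd = Accept(listenFd);
            // add the new connection to the list watched by epoll
            clients.push(connFd);
        }
        else {
            // data is ready on a client connection; read the request data
            request = curFd.read();
            // with the request data in hand, a data event can be emitted into the Node.js event loop
            ...
        }
    }

    // when reading a local file, libuv uses multiple threads (a thread pool) + BIO to simulate asynchronous I/O
    ThreadPool.run((callback) => {
        // read the file with BIO inside a pool thread
        content = Read('text.txt');
        // emit an event to invoke the callback supplied by Node.js
    });

With I/O multiplexing plus asynchronous I/O simulated by multiple threads, combined with the event loop, Node.js handles concurrent requests on a single thread without blocking. So, returning to the "non-blocking I/O" model mentioned earlier: Node.js does not directly use the non-blocking I/O model as it is usually defined, but rather the I/O multiplexing model plus multithreaded BIO. I think "non-blocking I/O" is more of a description from the Node.js programmer's point of view: in terms of coding style and the order in which code executes, I/O calls in Node.js are indeed "non-blocking".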
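The effect of this design can be seen from JavaScript with a small experiment: one server, running on one thread, serving several connections that are open at the same time. This is only a sketch; the number of clients, the loopback address, and the upper-casing "business logic" are arbitrary choices, and port 0 asks the operating system for any free port.

```javascript
const net = require('net');

const CLIENTS = 3; // arbitrary number chosen for the demonstration

function runDemo() {
  return new Promise((resolve, reject) => {
    const replies = [];

    // Every connection is handled by the same thread via events.
    const server = net.createServer((socket) => {
      socket.on('data', (chunk) => socket.end(chunk.toString().toUpperCase()));
    });
    server.on('error', reject);

    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address();
      for (let i = 0; i < CLIENTS; i++) {
        let reply = '';
        const client = net.connect(port, '127.0.0.1', () => client.write(`client-${i}`));
        client.on('data', (chunk) => { reply += chunk; });
        client.on('error', reject);
        client.on('end', () => {
          replies.push(reply);
          // Once every client has its reply, shut the server down.
          if (replies.length === CLIENTS) {
            server.close(() => resolve(replies.sort()));
          }
        });
      }
    });
  });
}

const demo = runDemo();
demo.then((replies) => console.log(replies));
```

No process or thread is created per connection here; the application only registers callbacks and reacts when a connection has data ready.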

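Summary

By now we should understand that the Node.js I/O model is mainly a combination of two approaches: I/O multiplexing, and blocking I/O running on multiple threads. When handling highly concurrent requests, it is mainly I/O multiplexing that does the work. Finally, let's summarize the advantages and disadvantages of the Node.js thread and I/O models compared with the multi-process (thread) + blocking I/O model of traditional web applications.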

- Node.js uses a single-threaded model, which saves the cost of maintaining and switching among many threads. At the same time, the I/O multiplexing model keeps the single Node.js thread from blocking on any one connection. In high-concurrency scenarios, a Node.js application only needs to create and manage the socket descriptors for its client connections, without creating a process or thread for each one. System overhead is greatly reduced, so more client connections can be handled at the same time.

- Node.js cannot make the underlying I/O operations themselves any faster. If the underlying I/O becomes the system's performance bottleneck, Node.js cannot solve that. In other words, Node.js can accept a high volume of concurrent requests, but if it has to handle many slow I/O operations (such as disk reads and writes), system resources may still be overloaded. High concurrency therefore cannot be solved by the single-threaded, non-blocking I/O model alone.

- CPU-intensive applications may turn the single-threaded model of Node.js into a performance bottleneck.

- Node.js is suited to handling high concurrency when each request involves a small amount of business logic or fast I/O (such as reading and writing memory).
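The CPU-bound bottleneck can be demonstrated with a short experiment. The sketch below schedules a timer to fire as soon as possible and then keeps the main thread busy; the timer cannot run until the busy loop ends. The 50 ms duration and the spin loop are stand-ins for real CPU-heavy work.

```javascript
const BUSY_MS = 50; // arbitrary duration standing in for CPU-heavy work

function measureTimerDelay(busyMs) {
  return new Promise((resolve) => {
    const start = Date.now();
    // Ask for a callback as soon as possible.
    setTimeout(() => resolve(Date.now() - start), 0);
    // Simulated CPU-bound work: spin on the main thread.
    while (Date.now() - start < busyMs) {
      // busy wait
    }
  });
}

const delay = measureTimerDelay(BUSY_MS);
delay.then((ms) => console.log(`timer fired after ${ms} ms`));
```

While the loop runs, every other callback on the thread waits as well, including those for connections whose data is already ready.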


