What is cache?

Cache, also called cache memory, is a small, high-speed memory located between the central processing unit (CPU) and main memory. It is generally built from high-speed SRAM. This local, CPU-facing memory was introduced to reduce or eliminate the impact of the speed gap between the CPU and main memory on system performance. The cache is small but fast, while main memory is slow but large; with a well-chosen scheduling algorithm, system performance improves greatly.

The operating environment of this tutorial: Windows 7 system, Dell G3 computer.

Cache memory is a small but very fast memory in a computer, located between the CPU and the main memory, which is DRAM (Dynamic Random Access Memory). It is usually built from SRAM (Static Random Access Memory).

As shown in Figure 3.28, the cache is a small-capacity memory between the CPU and the main memory M2; its access speed is faster than that of main memory and its capacity is much smaller. The cache can supply instructions and data to the CPU at high speed, thereby speeding up program execution. Functionally, it is a buffer for main memory, built from high-speed SRAM. In pursuit of speed, all of its functions, including management, are implemented in hardware and are therefore transparent to programmers.

With advances in semiconductor integration, a small-capacity cache can now be integrated on the same chip as the CPU and run at close to CPU speed, which gives rise to cache systems with two or more levels.

The function of cache

The function of the cache is to raise the rate at which the CPU reads and writes data. The cache is small but fast, while main memory is slow but large. With a well-chosen scheduling algorithm, system performance improves greatly: the storage system appears to have the capacity of main memory and an access speed close to that of the cache.

The CPU is much faster than main memory, so when it accesses main memory directly it has to wait for a certain period of time. The cache keeps a portion of the data that the CPU has just used or uses repeatedly. When the CPU needs that data again, it can fetch it directly from the cache, which avoids repeated main memory accesses, reduces CPU waiting time, and improves system efficiency. Cache is divided into L1 cache (level-one cache) and L2 cache (level-two cache). The L1 cache is mainly integrated inside the CPU, while the L2 cache is integrated either on the motherboard or in the CPU.

Basic principles of cache

In addition to SRAM, a cache also contains control logic. If the cache is outside the CPU chip, its control logic is generally combined with the main memory control logic and is called the main memory/cache controller; if the cache is inside the CPU, the CPU provides its control logic.

Data is exchanged between the CPU and the cache in units of words, while data is exchanged between the cache and main memory in units of blocks. A block consists of several words and has a fixed length. When the CPU reads a word from memory, it sends the word's memory address to both the cache and main memory. The cache control logic uses the address to determine whether the word is currently in the cache. If it is, the access is a cache hit and the word is transferred to the CPU immediately. If it is not, the access is a cache miss: a main memory read cycle reads the word from main memory and sends it to the CPU, and at the same time the entire block containing the word is read from main memory and loaded into the cache.
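The read procedure described above can be sketched in a few lines of Python. This is only an illustrative model, not how the hardware is built: the block size of 4 words, the dictionary used as the cache, and the names `read_word`, `BLOCK_WORDS`, and `main_memory` are all assumptions made for the example, and the sketch ignores cache capacity and replacement.

```python
BLOCK_WORDS = 4  # words per block (assumed value for illustration)

def read_word(address, cache, main_memory):
    """Return (word, hit) for a CPU read of the word at `address`."""
    block_no, offset = divmod(address, BLOCK_WORDS)
    if block_no in cache:
        # Hit: the word is delivered straight from the cache.
        return cache[block_no][offset], True
    # Miss: the word comes from main memory, and the whole block
    # that contains it is copied into the cache at the same time.
    start = block_no * BLOCK_WORDS
    cache[block_no] = main_memory[start:start + BLOCK_WORDS]
    return main_memory[address], False

main_memory = list(range(100, 132))  # 32 words of dummy data
cache = {}                           # block number -> list of words

first = read_word(5, cache, main_memory)   # miss, loads block 1
second = read_word(6, cache, main_memory)  # hit, same block as address 5
```

The second read hits only because the first miss brought in the whole block, which is the reason the cache and main memory exchange data in blocks rather than single words.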

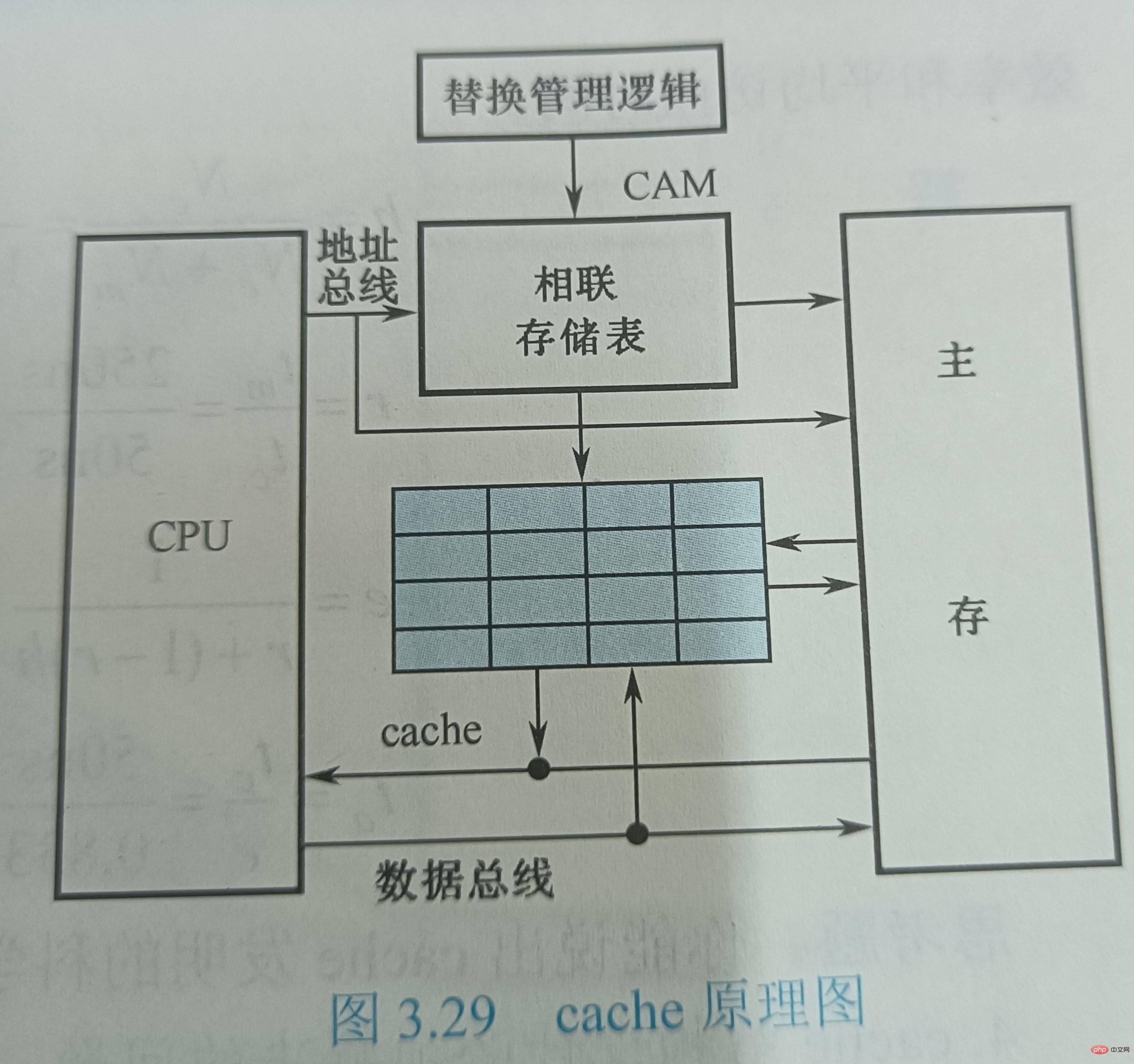

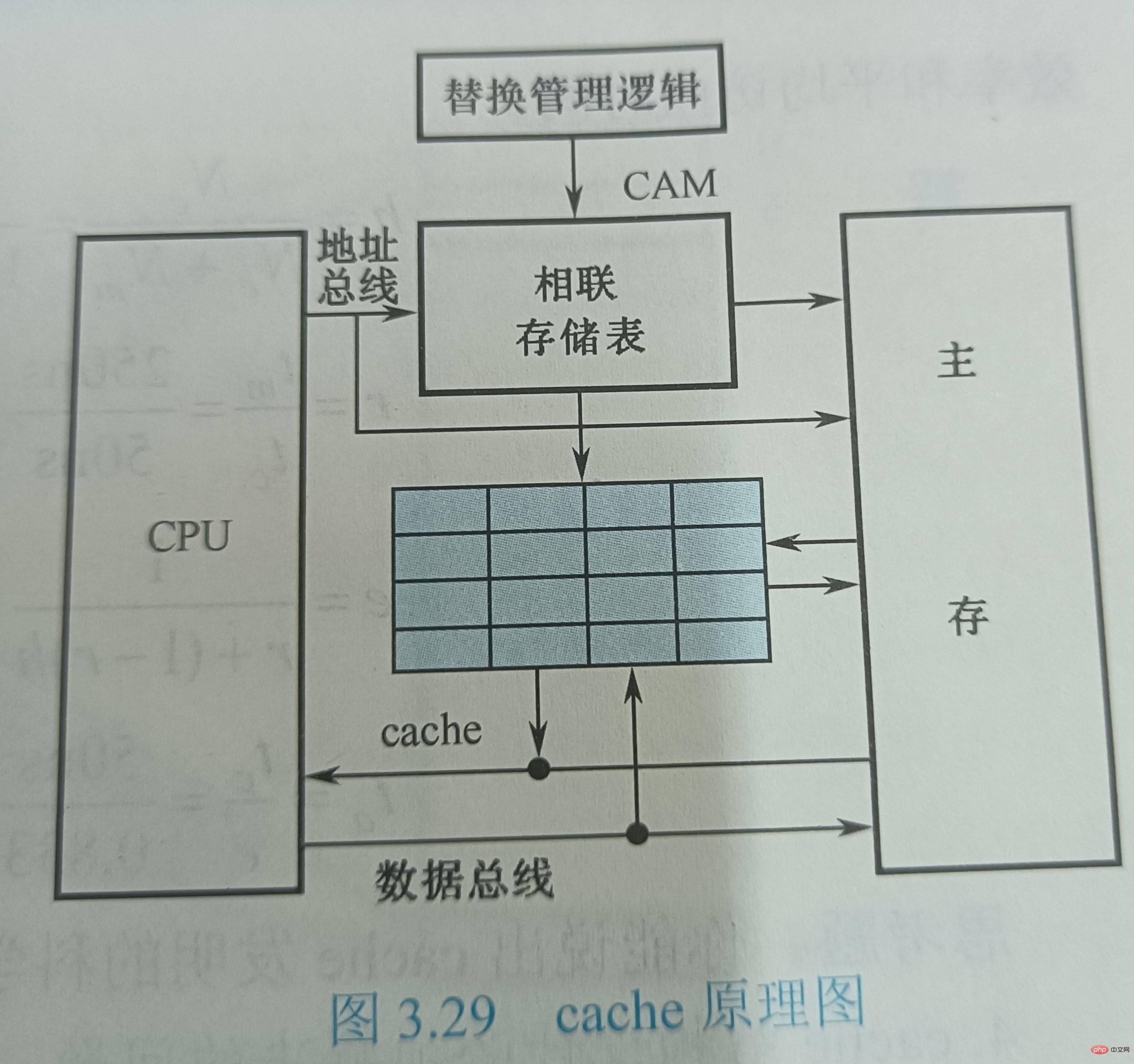

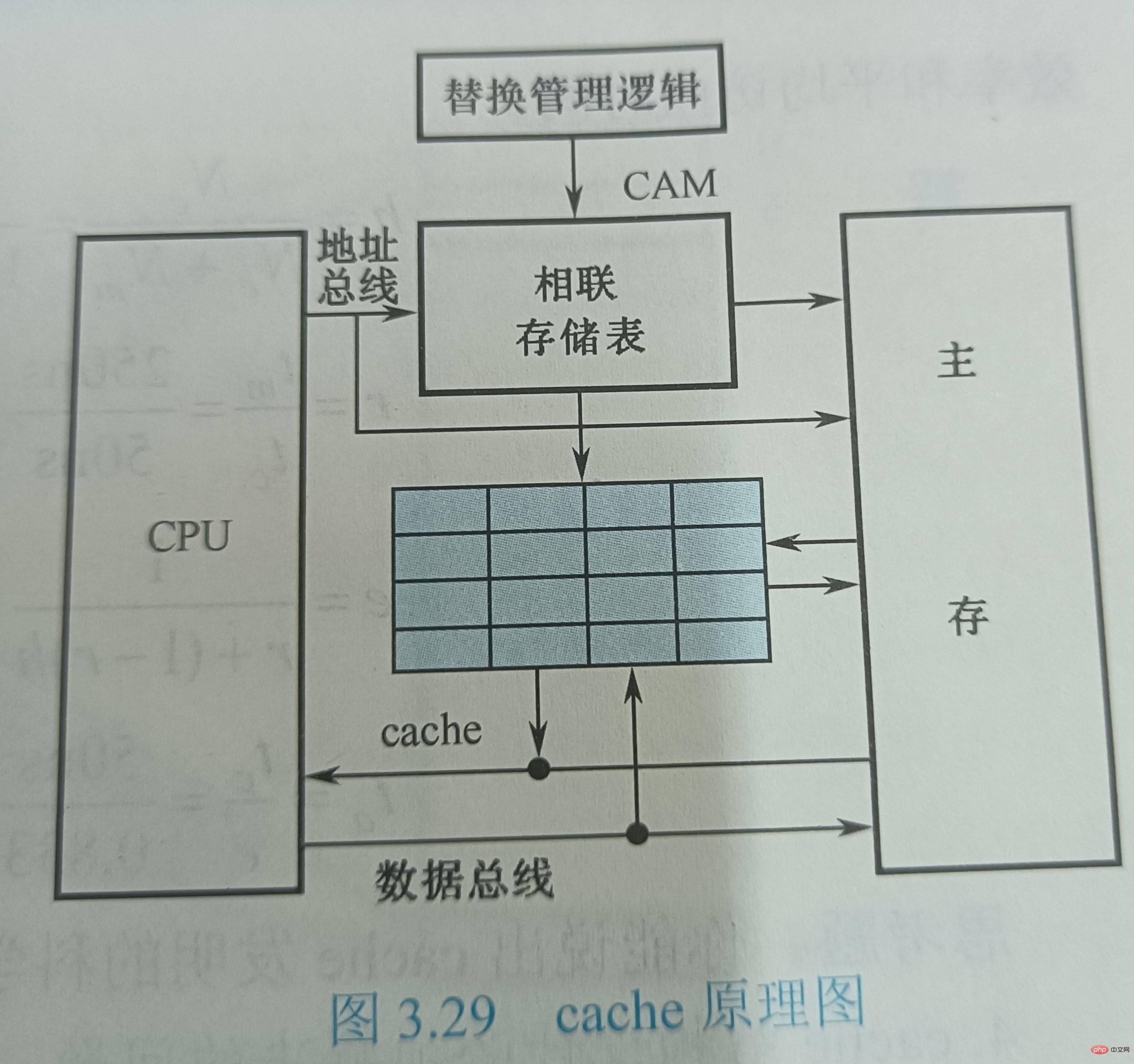

Figure 3.29 shows the schematic diagram of the cache. Assume that the cache read time is 50 ns and the main memory read time is 250 ns. The storage system is modular, and each 8K module of main memory is associated with a cache with a capacity of 16 words. The cache is divided into 4 lines of 4 words (W) each. The addresses assigned to the cache are stored in an associative memory, the CAM, which is a content-addressable memory. When the CPU executes a memory access instruction, it sends the address of the word to be accessed to the CAM. If W is not in the cache, W is transferred from main memory to the CPU; at the same time, a line of four consecutive words containing W is loaded into the cache, replacing a line already there. The replacement algorithm is implemented by hardware logic that continuously tracks cache usage.
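As a rough software model of this example, the sketch below simulates a 16-word cache of 4 lines with 4 words each, using the 50 ns and 250 ns read times given above. The text does not say which replacement algorithm the hardware uses, so least-recently-used (LRU) replacement is assumed here; the `OrderedDict` standing in for the CAM and the function name `simulate` are likewise illustrative choices.

```python
from collections import OrderedDict

CACHE_NS, MEMORY_NS = 50, 250   # read times from the example
LINES, WORDS_PER_LINE = 4, 4    # 16-word cache: 4 lines of 4 words

def simulate(addresses):
    """Return (hits, misses, total_ns) for a sequence of word addresses."""
    cam = OrderedDict()  # line tag -> True, least recently used first
    hits = misses = 0
    for addr in addresses:
        tag = addr // WORDS_PER_LINE      # which 4-word line holds this word
        if tag in cam:
            hits += 1
            cam.move_to_end(tag)          # mark line as most recently used
        else:
            misses += 1
            if len(cam) == LINES:
                cam.popitem(last=False)   # evict the LRU line (assumed policy)
            cam[tag] = True
    total_ns = hits * CACHE_NS + misses * MEMORY_NS
    return hits, misses, total_ns

# Read 16 consecutive words twice: only the first touch of each line misses.
result = simulate(list(range(16)) * 2)
```

In this run the 16 words fit exactly into the 4 lines, so the second pass is served entirely from the cache.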

Cache hit rate

From the CPU's point of view, the purpose of adding a cache is to bring the average read time of main memory as close as possible to the cache read time. To achieve this, the cache must satisfy a large share of all memory accesses issued by the CPU; that is, the cache hit rate should be close to 1. This is achievable because of the locality of program accesses.

During the execution of a program, let $N_c$ be the total number of accesses completed by the cache and $N_m$ the total number of accesses completed by main memory. The hit rate $h$ is then defined as

$$h = \frac{N_c}{N_c + N_m}$$

If $t_c$ is the cache access time on a hit, $t_m$ is the main memory access time on a miss, and $1-h$ is the miss rate, then the average access time $t_a$ of the cache/main memory system is

$$t_a = h\,t_c + (1-h)\,t_m$$
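To make the two formulas concrete, here is a small numeric sketch. The access counts (1900 served by the cache, 100 by main memory) are hypothetical values chosen for illustration, and the 50 ns and 250 ns times are borrowed from the Figure 3.29 example; the function names are not from the original text.

```python
def hit_rate(n_c, n_m):
    """Hit rate: accesses completed by the cache over all accesses."""
    return n_c / (n_c + n_m)

def average_access_time(h, t_c, t_m):
    """Average access time of the cache/main memory system."""
    return h * t_c + (1 - h) * t_m

h = hit_rate(1900, 100)                  # hypothetical counts, h = 0.95
t_a = average_access_time(h, 50, 250)    # times in ns, roughly 60 ns
```

With a 95% hit rate the average access time comes out at roughly 60 ns, much closer to the 50 ns cache than to the 250 ns main memory.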

The goal is to bring the average access time $t_a$ of the cache/main memory system as close to $t_c$ as possible at a small hardware cost. Let $r = t_m/t_c$ be the ratio of main memory access time to cache access time, and let $e$ be the access efficiency. Then

$$e = \frac{t_c}{t_a} = \frac{t_c}{h\,t_c + (1-h)\,t_m} = \frac{1}{h + (1-h)\,r} = \frac{1}{r + (1-r)\,h}$$

This formula shows that the closer the hit rate $h$ is to 1, the higher the access efficiency. A suitable value of $r$ is 5 to 10; it should not be too large.
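The effect of the hit rate and of the speed ratio on access efficiency can be checked numerically. The sketch below simply evaluates the efficiency formula for a few sample values; the chosen hit rates (0.90, 0.95, 0.99) are illustrative and not taken from the text.

```python
def efficiency(h, r):
    """Access efficiency e = 1 / (r + (1 - r) * h)."""
    return 1 / (r + (1 - r) * h)

# Tabulate e for the two ends of the suggested range of r.
for r in (5, 10):
    for h in (0.90, 0.95, 0.99):
        print(f"r={r:2d}  h={h:.2f}  e={efficiency(h, r):.3f}")
```

For the same hit rate, a larger ratio gives a lower efficiency: at a hit rate of 0.95, for instance, the efficiency is about 0.83 when the ratio is 5 but only about 0.69 when it is 10, which is why the ratio should not be too large.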

The hit rate h is related to the behavior of the program, cache capacity, organization method, and block size.

Problems that must be solved in cache structure design

The basic working principle shows that cache design has to follow two principles. First, the hit rate should be as high as possible, ideally close to 1. Second, the cache should be transparent to the CPU: whether or not a cache is present, the CPU accesses memory in the same way, and software needs no additional instructions to use the cache. Once hit rate and transparency are both achieved, memory as seen by the CPU has the capacity of main memory and a speed close to that of the cache. To this end, dedicated hardware must be added to perform the control function, namely the cache controller.

When designing the cache structure, several issues must be solved: ① How are the contents of main memory stored when they are brought into the cache? ② How is information found in the cache during a memory access? ③ How is existing content replaced when the cache runs out of space? ④ How is cache content updated when a write is required?

The first two issues are related: how to locate main memory content in the cache, and how to convert a main memory address into a cache address. Compared with main memory, the cache is very small. What it holds is only a subset of the contents of main memory, and data is exchanged between the cache and main memory in units of blocks. To place a main memory block in the cache, some method must be used to locate the main memory address within the cache; this is called address mapping. Here "mapping" means establishing a correspondence between locations and implementing it in hardware. As a result, when the CPU accesses memory, the memory address of the word it supplies is automatically converted into a cache address. This is cache address conversion.
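The text does not name a particular mapping scheme, so the following sketch uses direct mapping, one common scheme, purely as an illustration of what address mapping and address conversion involve. The sizes (4 words per block, 4 cache lines) and the function names are assumptions made for the example.

```python
WORDS_PER_BLOCK = 4  # assumed block size
CACHE_LINES = 4      # assumed number of cache lines

def map_address(address):
    """Split a main memory word address into (tag, line, offset).

    Under direct mapping, each main memory block can live in exactly
    one cache line: block number modulo the number of lines.
    """
    block_no, offset = divmod(address, WORDS_PER_BLOCK)
    tag, line = divmod(block_no, CACHE_LINES)
    return tag, line, offset

def cache_address(address):
    """Convert a main memory word address into a cache word address."""
    _, line, offset = map_address(address)
    return line * WORDS_PER_BLOCK + offset

# Word 27 lies in block 6, which maps to cache line 2 with tag 1.
parts = map_address(27)
converted = cache_address(27)
```

The tag is what the hardware would store alongside the line so that it can later tell which of the main memory blocks sharing that line is actually present.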

The replacement problem is mainly about choosing and executing a replacement algorithm that decides which content to replace when a cache miss occurs. The last issue concerns the cache's write policy, whose focus is keeping main memory and the cache consistent when data is updated.
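The text does not list specific write policies. As an illustration of the consistency issue, the sketch below contrasts two commonly used ones, write-through and write-back. The class `TinyCache`, its word-level granularity, and its method names are hypothetical simplifications, not a description of real cache hardware.

```python
class TinyCache:
    """Word-granular toy cache used only to contrast two write policies."""

    def __init__(self, memory, write_back=False):
        self.memory = memory          # backing main memory (a list)
        self.write_back = write_back  # False means write-through
        self.data = {}                # address -> cached word
        self.dirty = set()            # addresses changed only in the cache

    def write(self, address, value):
        self.data[address] = value
        if self.write_back:
            self.dirty.add(address)          # main memory is updated later
        else:
            self.memory[address] = value     # main memory is updated now

    def evict(self, address):
        if address in self.dirty:
            # Write-back: copy the modified word out before dropping it.
            self.memory[address] = self.data[address]
            self.dirty.discard(address)
        self.data.pop(address, None)

wt_memory = [0] * 8
TinyCache(wt_memory).write(3, 42)       # write-through: memory updated at once

wb_memory = [0] * 8
wb = TinyCache(wb_memory, write_back=True)
wb.write(3, 42)
stale = wb_memory[3]                    # main memory still holds the old value
wb.evict(3)                             # consistency restored on eviction
```

Write-through keeps main memory consistent on every write at the cost of more memory traffic, while write-back defers the update and must track which cached words have been modified.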
