How to solve errors reported by PHP under high concurrency


Solutions to errors reported by PHP under high concurrency: 1. check how many connections nginx is configured to accept, and raise the two relevant nginx parameters; 2. confirm whether php-fpm has enough worker processes, and increase their number if not; 3. disable the slow log.

The operating environment of this tutorial: Windows 10 system, PHP version 8.1, Dell G3 computer.


Solving 502 and 504 errors reported by Nginx + PHP under high concurrency:

Recently I have been helping my company optimize a PHP project, searching Baidu as I went. The project gets a lot of traffic (about 80,000 requests per minute on average).

It runs on three AWS servers: two with 8 cores and 16 GB of RAM, and one with 4 cores and 16 GB. The smaller one runs Nginx plus a small number of php-fpm processes. As soon as the service went live, it went down: every request returned 502 or 504. The project itself had no known problems and had been tested before, so I started searching Baidu for the cause.

1. I suspected that nginx's configured connection limits were too low to handle the load, so I increased two nginx parameters.

worker_connections: the maximum number of connections allowed per worker process. In theory, the maximum number of connections one nginx server can handle is worker_processes × worker_connections.

```nginx
events {
    # maximum simultaneous connections per worker process
    worker_connections 5000;
}
```
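As a quick check of the formula above, this hypothetical helper multiplies the two values. It is only a sketch: treating `auto` as the CPU count via `nproc` assumes GNU coreutils is available, mirroring how `worker_processes auto` picks the number of cores.

```shell
# Theoretical connection ceiling for one nginx server:
#   worker_processes * worker_connections
# Hypothetical helper; "auto" is resolved to the CPU count with nproc.
max_connections() {
  local workers=$1 conns=$2
  if [ "$workers" = "auto" ]; then
    workers=$(nproc)
  fi
  echo $(( workers * conns ))
}
```

For example, `max_connections 8 5000` prints 40000. Per the nginx documentation, worker_connections counts all connections, including those to proxied and FastCGI servers, so the number of clients actually served is lower than this figure.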

worker_rlimit_nofile: the maximum number of file descriptors one nginx process may open. In theory, it should be the maximum number of open files (ulimit -n) divided by the number of nginx processes.

```nginx
# main (top-level) context of nginx.conf
worker_rlimit_nofile 20000;
```
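The rule of thumb above can be turned into a small calculation. This is a sketch with a hypothetical function name: pass the open-files limit explicitly, or omit it to read the current shell's soft limit from `ulimit -n`.

```shell
# Suggested worker_rlimit_nofile per the rule of thumb in the text:
#   (max open files from `ulimit -n`) / (number of nginx processes)
# Hypothetical helper; the second argument overrides `ulimit -n`.
suggest_rlimit_nofile() {
  local processes=$1
  local limit=${2:-$(ulimit -n)}
  if [ "$limit" = "unlimited" ]; then
    echo "open-files limit is unlimited; choose a value explicitly" >&2
    return 1
  fi
  echo $(( limit / processes ))
}
```

With illustrative numbers (not from the article), an open-files limit of 80000 shared by 4 processes gives `suggest_rlimit_nofile 4 80000`, which prints 20000.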

FastCGI (PHP) request timeouts, buffers, and so on:

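```nginx
# http, server or location context
# timeouts (in seconds) for talking to the FastCGI server (php-fpm)
fastcgi_connect_timeout 300;
fastcgi_send_timeout 300;
fastcgi_read_timeout 300;
# buffers used when reading responses from php-fpm
fastcgi_buffer_size 64k;
fastcgi_buffers 4 64k;
fastcgi_busy_buffers_size 128k;
fastcgi_temp_file_write_size 256k;
```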
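Larger buffers multiply with the connection count. The hypothetical helper below gives a deliberately pessimistic upper bound on FastCGI response-buffer memory per nginx worker, assuming every connection fills fastcgi_buffer_size plus all of its fastcgi_buffers at the same time; real usage is normally far lower.

```shell
# Rough worst-case memory (KB) one nginx worker could spend on FastCGI
# response buffers, assuming every connection fills all of them at once:
#   (fastcgi_buffer_size + number * size) * connections
# Hypothetical helper; all sizes are in kilobytes.
fastcgi_buffer_bound_kb() {
  local buffer_size_kb=$1 count=$2 size_kb=$3 connections=$4
  echo $(( (buffer_size_kb + count * size_kb) * connections ))
}
```

With the settings above and worker_connections 5000, `fastcgi_buffer_bound_kb 64 4 64 5000` prints 1600000, i.e. roughly 1.5 GB per worker in this worst case.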

After changing these settings I restarted nginx, but when I tested again it made no difference at all.
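When applying changes like these, a cautious pattern is to test the configuration before reloading, so a typo does not take nginx down. The sketch below uses the real `nginx -t` and `nginx -s reload` options; `NGINX_BIN` is a hypothetical override for the binary path.

```shell
# Apply nginx configuration changes only if they pass a syntax check.
# `nginx -t` tests the configuration; `nginx -s reload` signals the running
# master to load it and gracefully replace the old worker processes.
# NGINX_BIN is a hypothetical override for the nginx binary path.
apply_nginx_config() {
  local nginx=${NGINX_BIN:-nginx}
  if ! "$nginx" -t; then
    echo "configuration test failed; not reloading" >&2
    return 1
  fi
  "$nginx" -s reload
}
```

Run it as root (or via sudo) after editing nginx.conf; a failed test leaves the running configuration untouched.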

2. Next I suspected a PHP configuration problem.

Check whether php-fpm has enough worker processes. If there are too few, it is effectively as if php-fpm were not running at all.
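One way to estimate "enough" is Little's law (my addition, not the article's method): busy workers ≈ requests per second × average time per request. The helper name and the 200 ms request time used below are hypothetical.

```shell
# Rough php-fpm worker estimate using Little's law:
#   busy workers ~= requests per second * average seconds per request
# Inputs: requests per minute and average request time in milliseconds.
# Hypothetical helper; the result is rounded up.
workers_needed() {
  local per_minute=$1 avg_ms=$2
  # (per_minute / 60) * (avg_ms / 1000) == per_minute * avg_ms / 60000
  echo $(( (per_minute * avg_ms + 59999) / 60000 ))
}
```

At 80,000 requests per minute (about 1,333 per second), a hypothetical average of 200 ms gives `workers_needed 80000 200`, which prints 267 workers busy at once across all servers.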

Count the worker processes that have been started:

```shell
# count php-fpm processes, excluding the master process and the grep itself
ps -ef | grep 'php-fpm' | grep -v 'master' | grep -v 'grep' | wc -l
```

Count the worker processes currently in use, i.e. the requests being processed:

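```shell
# count php-fpm socket entries not in the LISTENING state (approximately the requests in progress)
netstat -anp | grep 'php-fpm' | grep -v 'LISTENING' | grep -v 'php-fpm.conf' | wc -l
```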

If the two values are close, consider increasing worker_connections and the number of PHP processes in php-fpm.conf. But whether I turned these parameters up or down, nothing helped. Desperate!
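The "are the two values close?" comparison can be scripted. In this sketch, "close" is a hypothetical threshold of busy ≥ 90% of started workers; the wiring to the two commands above is left commented out so the snippet has no side effects.

```shell
# Succeeds if php-fpm workers are (nearly) all busy.
# "Close" is a hypothetical threshold: busy >= 90% of started by default.
workers_saturated() {
  local started=$1 busy=$2 threshold=${3:-90}
  [ "$started" -gt 0 ] || return 1
  [ $(( busy * 100 )) -ge $(( started * threshold )) ]
}

# Example wiring with the counting commands from the text:
# started=$(ps -ef | grep 'php-fpm' | grep -v 'master' | grep -v 'grep' | wc -l)
# busy=$(netstat -anp | grep 'php-fpm' | grep -v 'LISTENING' | grep -v 'php-fpm.conf' | wc -l)
# workers_saturated "$started" "$busy" && echo "consider more php-fpm workers"
```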

I then set log_level = debug in php-fpm.conf and found these errors in the error_log file:

```text
[29-Mar-2014 22:40:10] ERROR: failed to ptrace(PEEKDATA) pid 4276: Input/output error (5)
[29-Mar-2014 22:53:54] ERROR: failed to ptrace(PEEKDATA) pid 4319: Input/output error (5)
[29-Mar-2014 22:56:30] ERROR: failed to ptrace(PEEKDATA) pid 4342: Input/output error (5)
```
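To correlate these errors with other logs, it helps to pull out the PIDs they mention. This is a sketch with a hypothetical function name; it reads the error log on stdin and expects one entry per line, as in a real log file.

```shell
# List the distinct PIDs named in php-fpm "failed to ptrace" errors.
# Reads log lines on stdin; hypothetical helper.
ptrace_error_pids() {
  sed -n 's/.*failed to ptrace([A-Z]*) pid \([0-9][0-9]*\).*/\1/p' | sort -un
}
```

For example, `ptrace_error_pids < error.log` (the path depends on the error_log setting) prints 4276, 4319 and 4342 for the entries above. Assuming the slow-log entries name the worker's pid, a command such as `grep -A 3 'pid 4276' /var/log/php-fpm/slow.log` then shows what that worker was doing.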

So I searched Google for this error and found an article (http://www.mamicode.com/info-detail-1488604.html). It says the slow log needs to be disabled, i.e. the slowlog = /var/log/php-fpm/slow.log and request_slowlog_timeout = 15s settings should be commented out. Only then did I realize that, besides the access log, php-fpm also records slow requests. I opened the slow log file and found that every logged error traced back to PHP requests to Redis...
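As far as I know, php-fpm captures slow-log backtraces by tracing the worker with ptrace, which would explain the ptrace errors above. Disabling the slow log means commenting out its directives; php-fpm config files use ';' for comments, and an unset request_slowlog_timeout falls back to its default of 0 ("off"). The filter below is a hypothetical helper.

```shell
# Comment out the slow-log directives in a php-fpm pool config on stdin.
# Lines that are already commented (start with ';') are left unchanged.
disable_fpm_slowlog() {
  sed -E 's/^[[:space:]]*(slowlog|request_slowlog_timeout)[[:space:]]*=/;&/'
}
```

For example, `disable_fpm_slowlog < www.conf > www.conf.new` writes an edited copy of the pool file (its name and path vary by distribution); review it, move it into place, and restart php-fpm.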

That was the cause. When PHP requested data from Redis, there were most likely too many connection requests, and Redis failed to accept them. Because the business logic here is fairly complex, each Redis key is built by concatenating several fields, and lookups use fuzzy (pattern) matching. Together, this degraded Redis performance, so a large number of subsequent requests could not connect to Redis. Since I was the one who had changed the code to read from Redis, I restored the original code that queried MySQL.
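The article does not say which Redis command performed the fuzzy query. Assuming it was a pattern match such as KEYS (which the Redis documentation describes as O(N) in the number of keys), every lookup has to compare the pattern against the whole keyspace. The toy model below imitates that with shell glob matching; the key names are hypothetical.

```shell
# Toy model of a glob-pattern key lookup without an index: every key is
# compared against the pattern. Keys are read one per line on stdin;
# matches go to stdout, the number of keys examined to stderr.
fuzzy_lookup() {
  local pattern=$1 key examined=0
  while IFS= read -r key; do
    examined=$(( examined + 1 ))
    # An unquoted $pattern in `case` is treated as a glob, roughly like Redis patterns.
    case $key in
      $pattern) printf '%s\n' "$key" ;;
    esac
  done
  echo "examined $examined keys" >&2
}
```

For example, with keys such as order:42:user:7, the pattern 'order:*:user:7' still visits every key. `redis-cli --scan --pattern '...'` walks the keyspace incrementally with SCAN instead of blocking the server in one call, but it does not reduce the total work.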

The project is now running normally, but CPU usage on every server is close to 100%. I am worried that problems will return: once the CPUs are saturated, MySQL may not be able to keep up with the connection requests. That is something to optimize later!


The above is the detailed content of How to solve errors reported by PHP under high concurrency. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

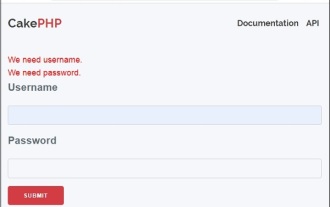

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

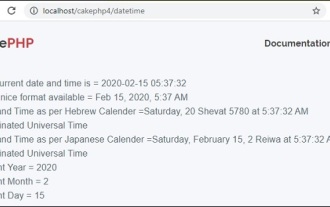

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

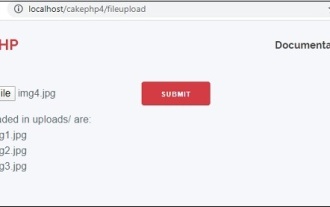

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.