An in-depth analysis of Stream in Node

What is a stream? How to understand flow? The following article will give you an in-depth understanding of streams in Nodejs. I hope it will be helpful to you!

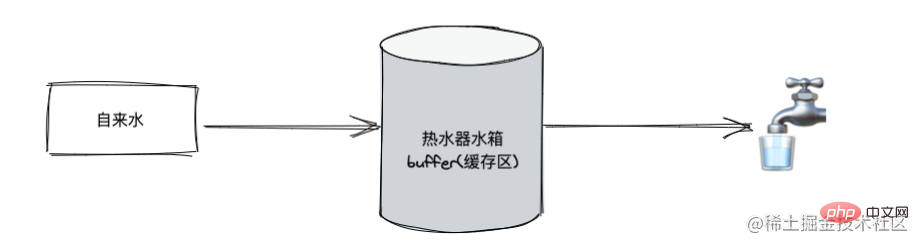

stream is an abstract data interface, which inherits EventEmitter. It can send/receive data. Its essence is to let the data flow, as shown below:

Stream is not a unique concept in Node, it is the most basic operation method of the operating system. In Linux | it is Stream, but it is encapsulated at the Node level and provides the corresponding API

Why do we need to do it bit by bit?

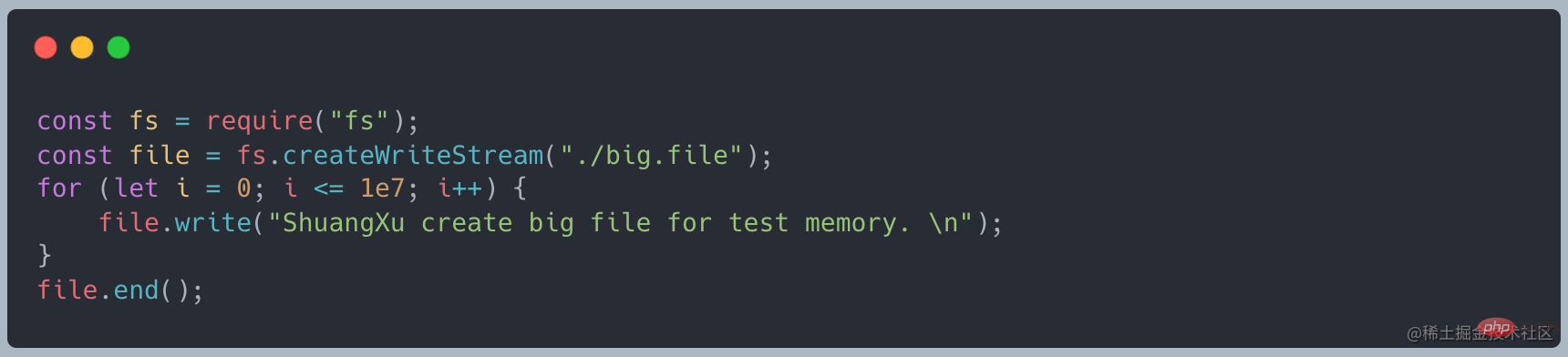

First use the following code to create a file, about 400MB [Related tutorial recommendations: nodejs video tutorial]

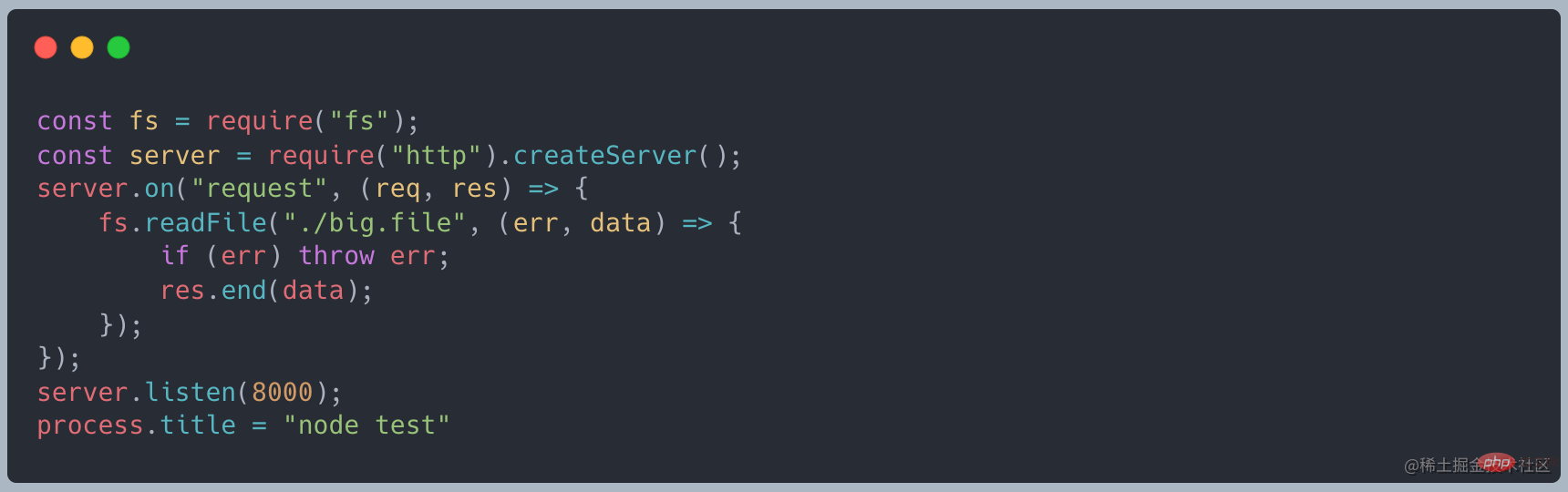

When we use readFile to read, the following code

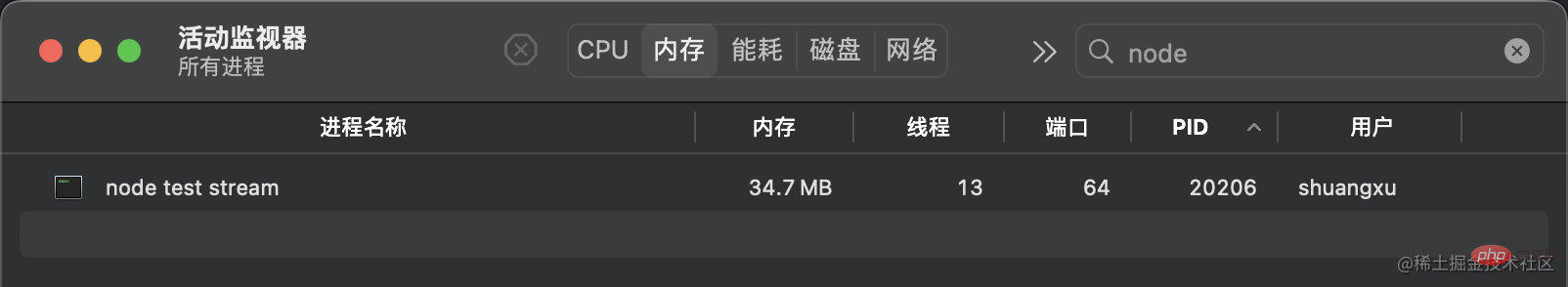

#When the service is started normally, it takes up about 10MB of memory

Use curl http://127.0.0.1:8000When making a request, the memory becomes about 420MB, which is about the same size as the file we created

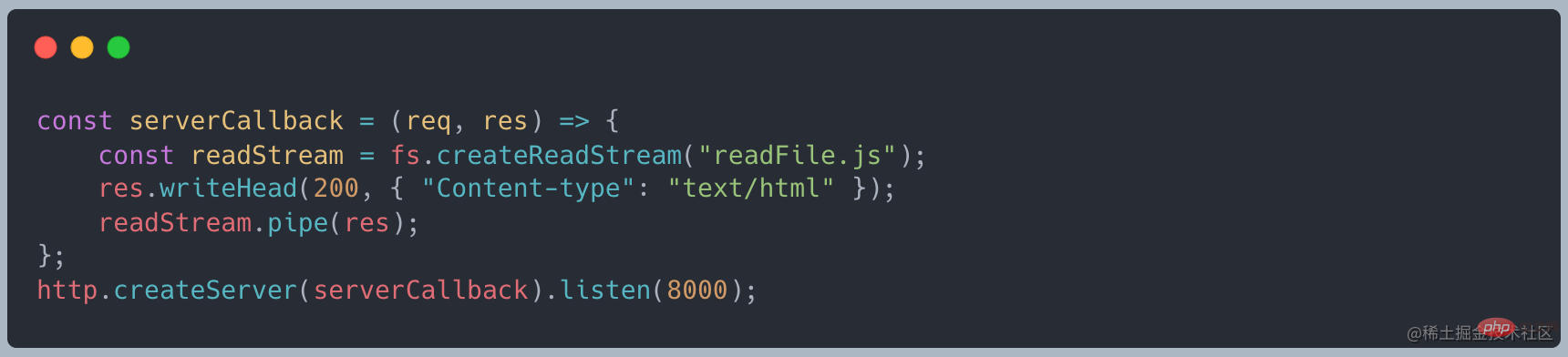

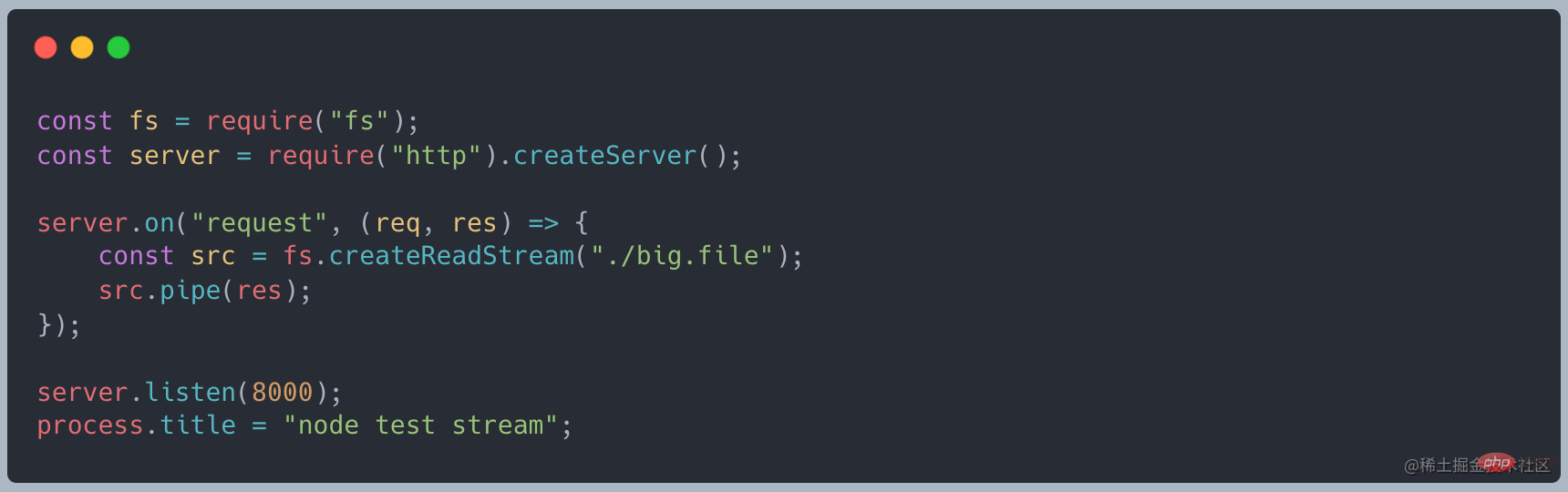

Instead use the stream writing method, the code is as follows

When the request is made again, it is found that the memory only takes up about 35MB, which is significantly reduced compared to readFile

If we do not use the streaming mode and wait for the large file to be loaded before operating, there will be the following problems:

- The memory is temporarily used too much, causing the system to Crash

- The CPU operation speed is limited and it serves multiple programs. The loading of large files is too large and takes a long time

In summary, reading large files at one time consumes the memory I can’t stand it and the Internet

How can I do it bit by bit?

When we read a file, we can output the data after the reading is completed.

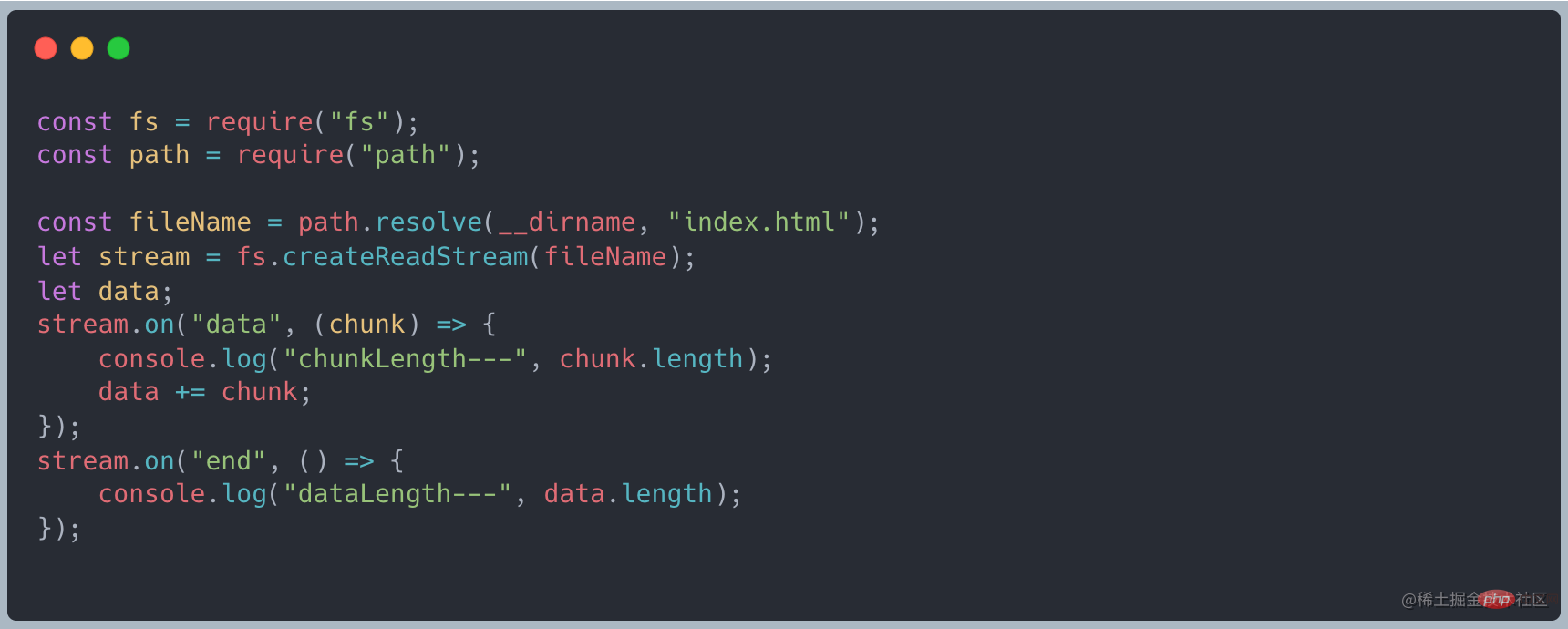

As mentioned above, stream inherits EventEmitter and can be implemented Monitor data. First, change the reading data to streaming reading, use on("data", ()⇒{}) to receive the data, and finally use on("end", ()⇒{} ) The final result

When data is transferred, the data event will be triggered, receive this data for processing, and finally wait for all data to be transferred. Trigger the end event.

Data flow process

Where data comes from—source

Data flows from one place to another. Let’s first look at the source of the data.

-

http request, request data from the interface

-

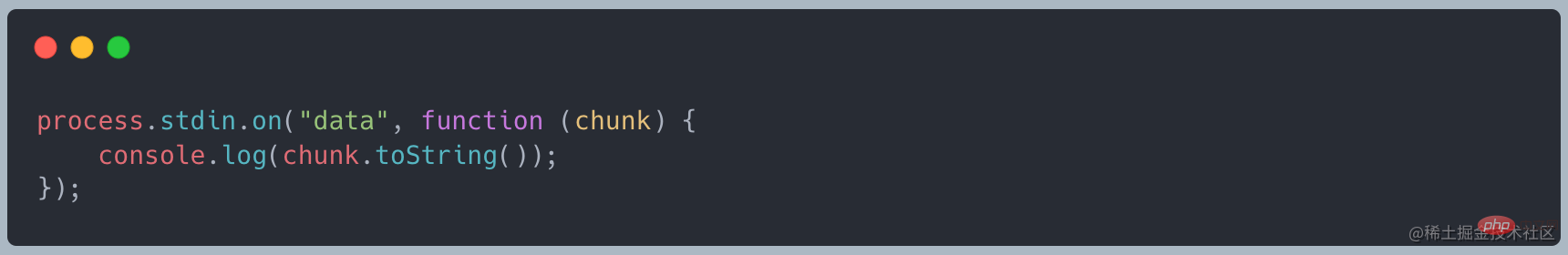

console console, standard input stdin

file file, read the file content, such as the above example

Connected pipe— pipe

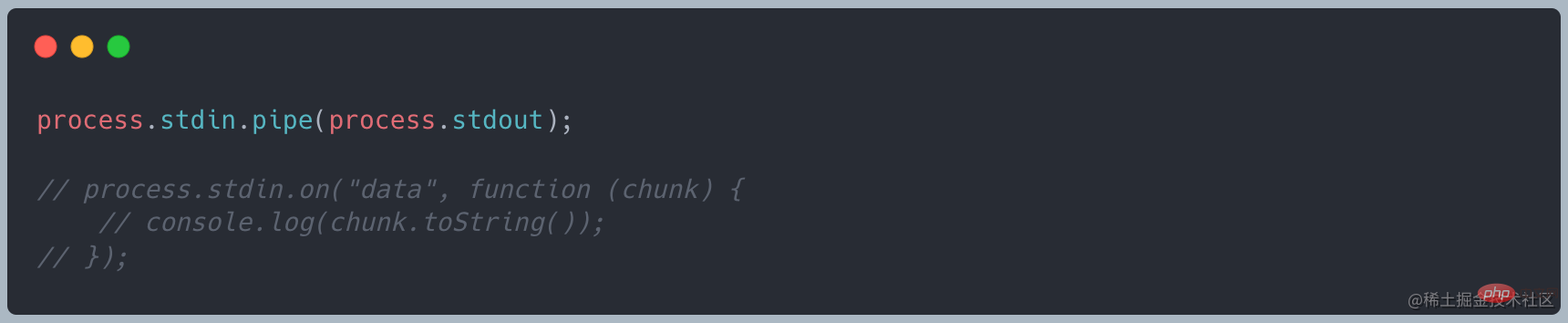

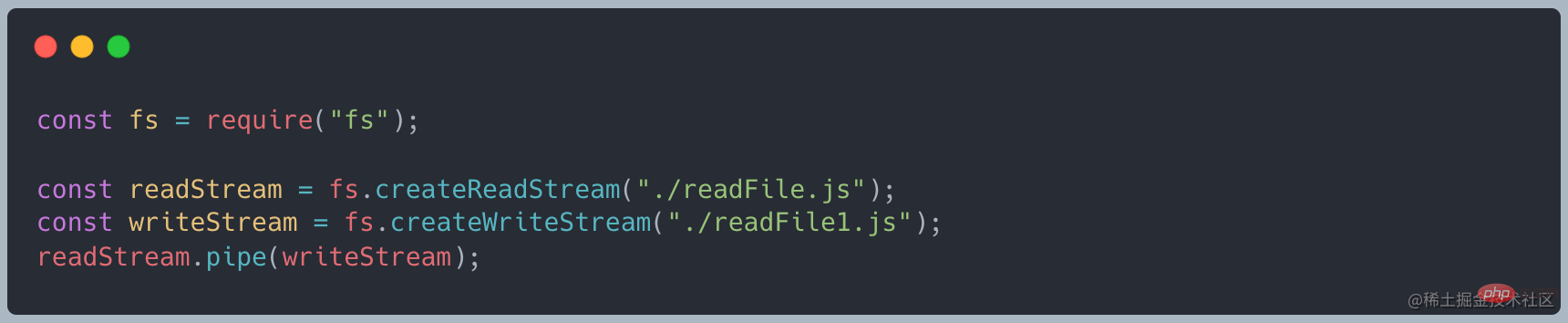

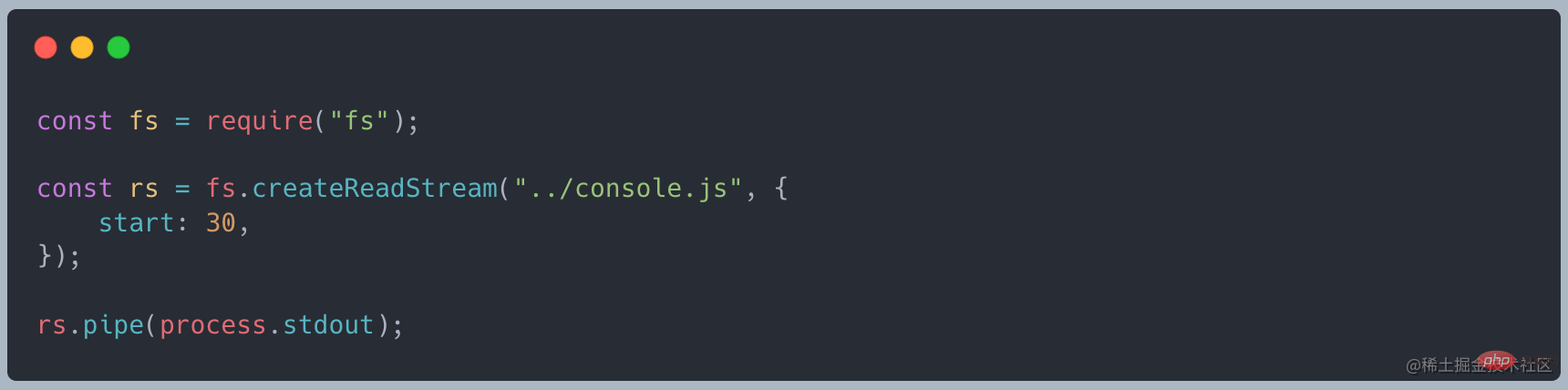

There is a connected pipe pipe in source and dest. The basic syntax is source.pipe(dest). Source and dest are connected through pipe, allowing data to flow from source to dest

We don’t need to manually monitor the data/end event like the above code.

There are strict requirements when using pipe. Source must be a readable stream, and dest must be a writable stream. Stream

??? What exactly is flowing data? What is chunk in the code?

Where to go—dest

stream Three common output methods

-

console console, standard output stdout

-

http request, response in the interface request

-

file file, write file

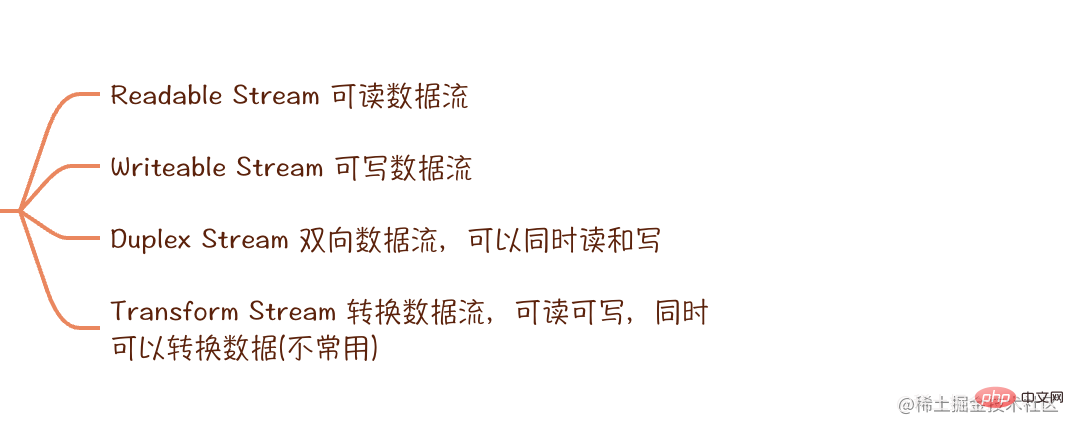

Types of streams

##Readable StreamsReadable Streams

A readable stream is an abstraction of the source that provides dataAll Readables implement the interface defined by the stream.Readable class

Read mode

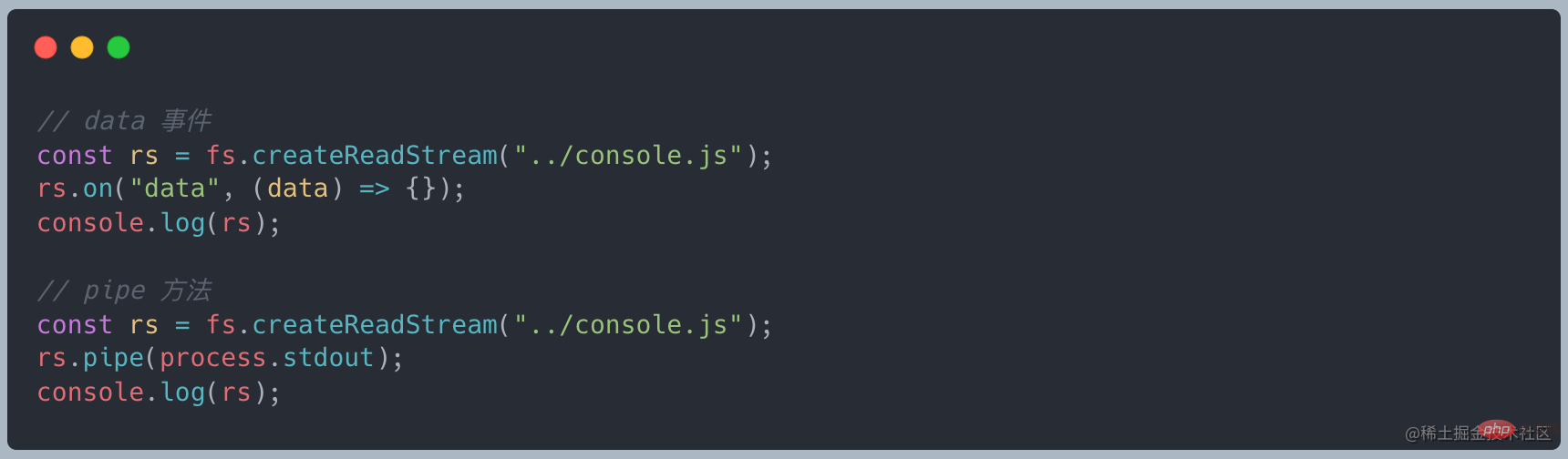

There are two modes for readable streams,flowing mode and pause mode, which determines the flow mode of chunk data: automatic flow and manual flow Flow

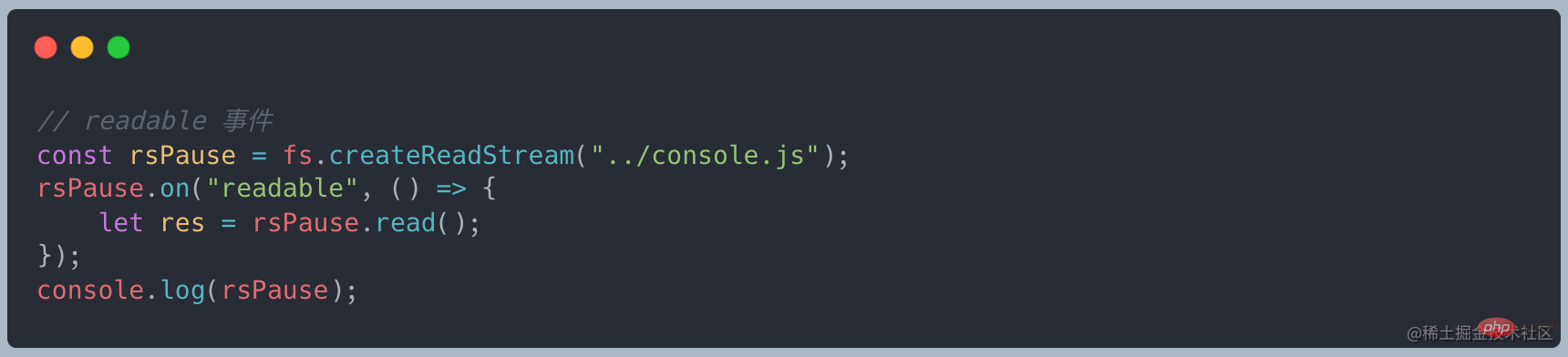

There is a _readableState attribute in ReadableStream, in which there is a flowing attribute to determine the flow mode. It has three state values:- ture: expressed as flowing Mode

- false: Represented as pause mode

- null: Initial state

Flow mode

Data is automatically read from the bottom layer, forming a flow phenomenon, and provided to the application through events.- You can enter this mode by listening to the data event

When the data event is added, when there is data in the writable stream, the data will be pushed to the event callback function. You need to do it yourself To consume the data block, if not processed, the data will be lost

- Call the stream.pipe method to send the data to Writeable

- Call stream. resume method

Pause mode

Data will accumulate in the internal buffer and must be called explicitly stream.read() reads data blocks- #Listen to the readable event

The writable stream will trigger this event callback after the data is ready. At this time, you need to use stream.read() in the callback function to actively consume data. The readable event indicates that the stream has new dynamics: either there is new data, or the stream has read all the data

- The readable stream is in the initial state after creation //TODO: inconsistent with online sharing

- Switch pause mode to flow Mode

- 监听 data 事件 - 调用 stream.resume 方法 - 调用 stream.pipe 方法将数据发送到 Writable

Copy after login

- Switch flow mode to pause mode

- 移除 data 事件 - 调用 stream.pause 方法 - 调用 stream.unpipe 移除管道目标

Copy after login

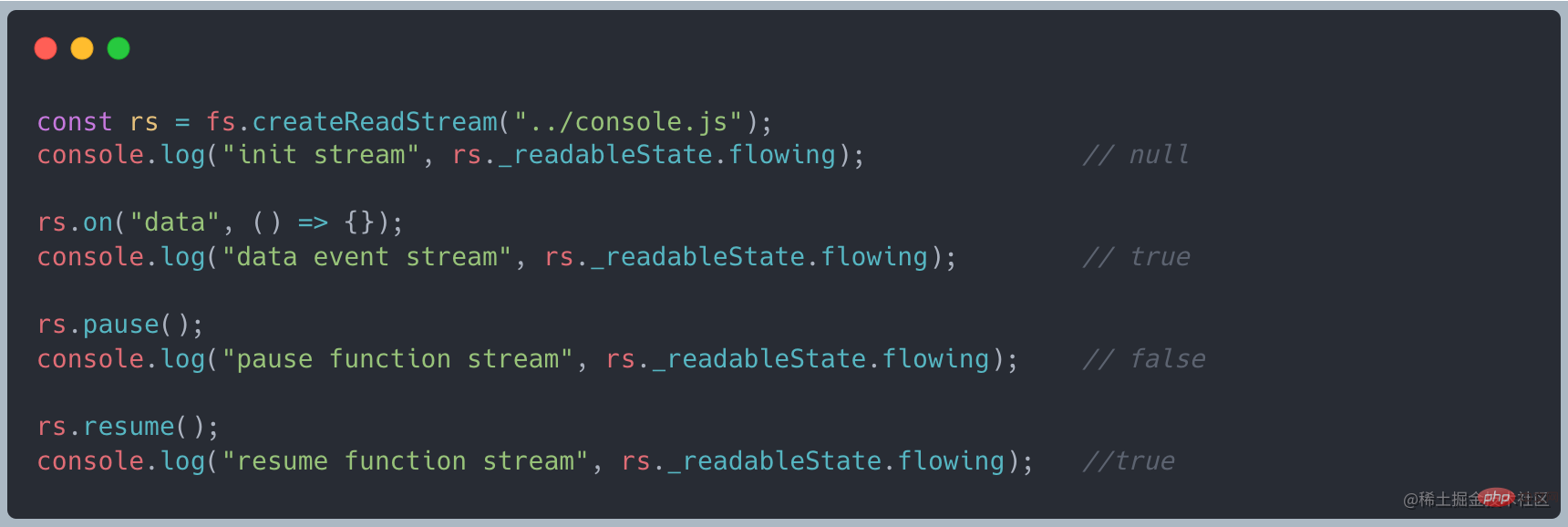

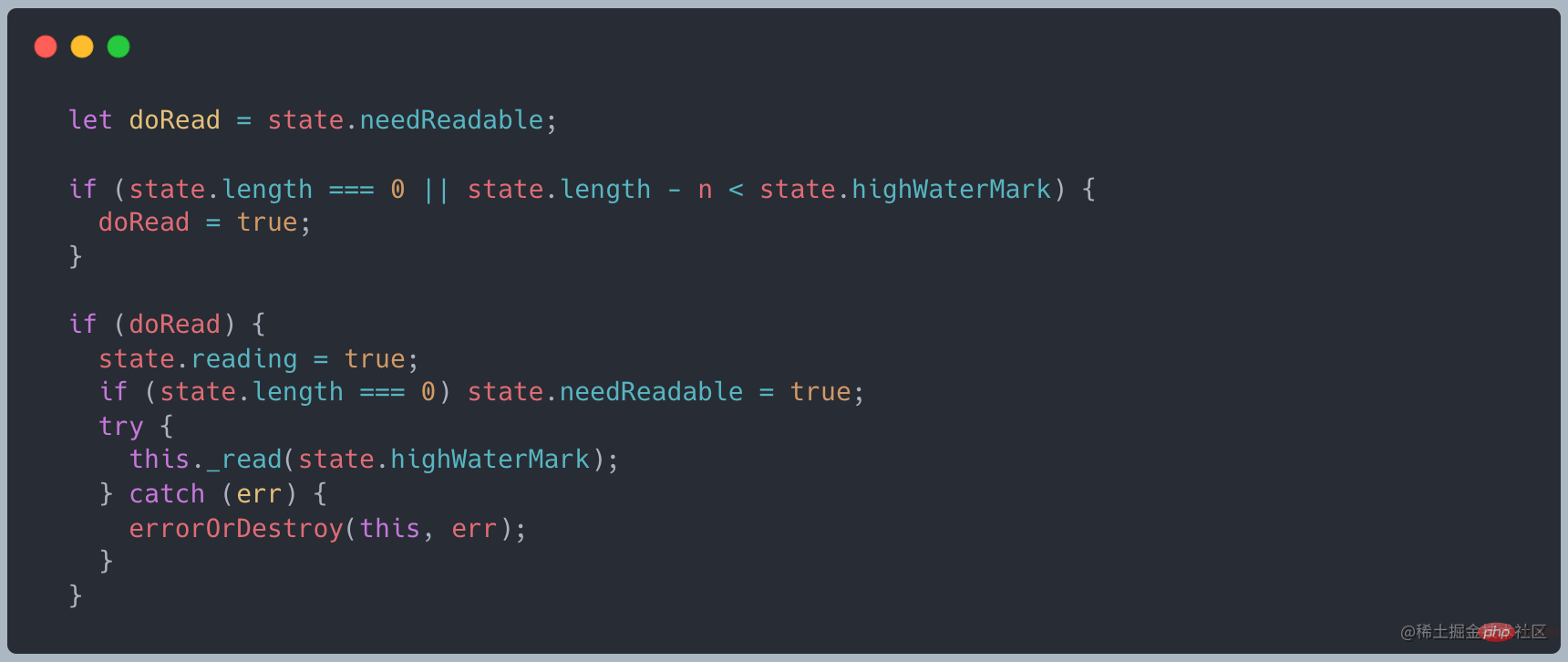

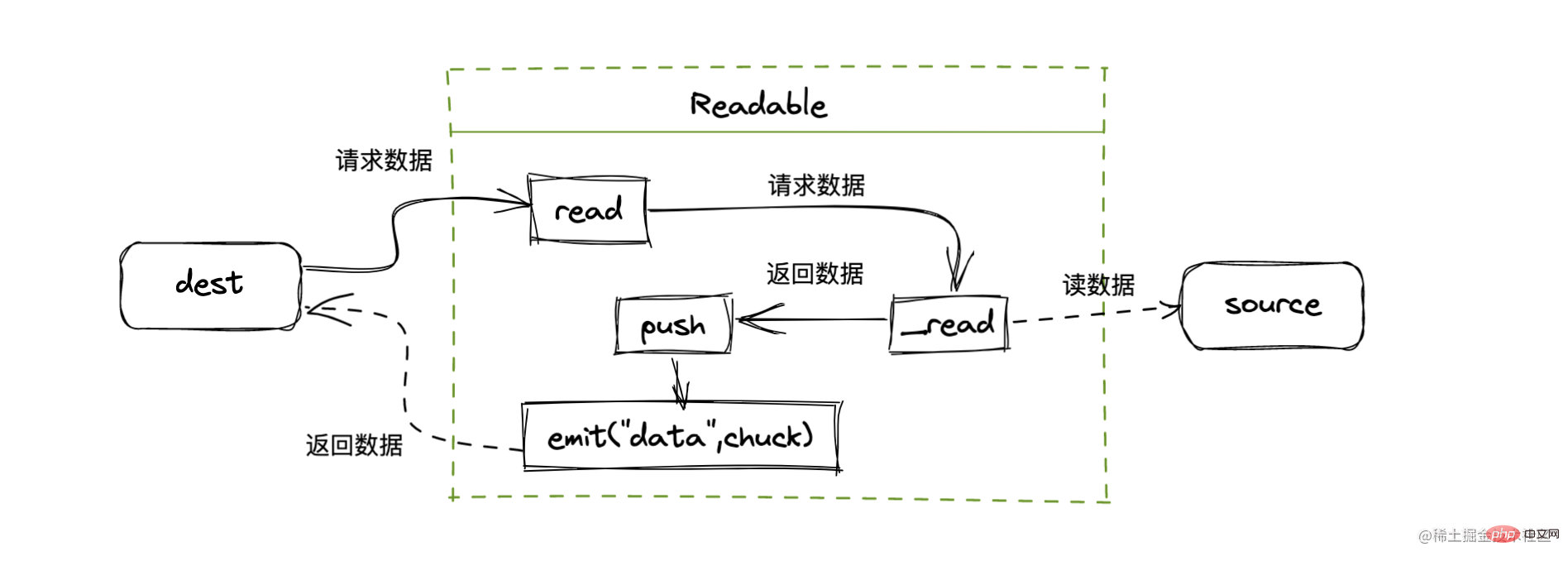

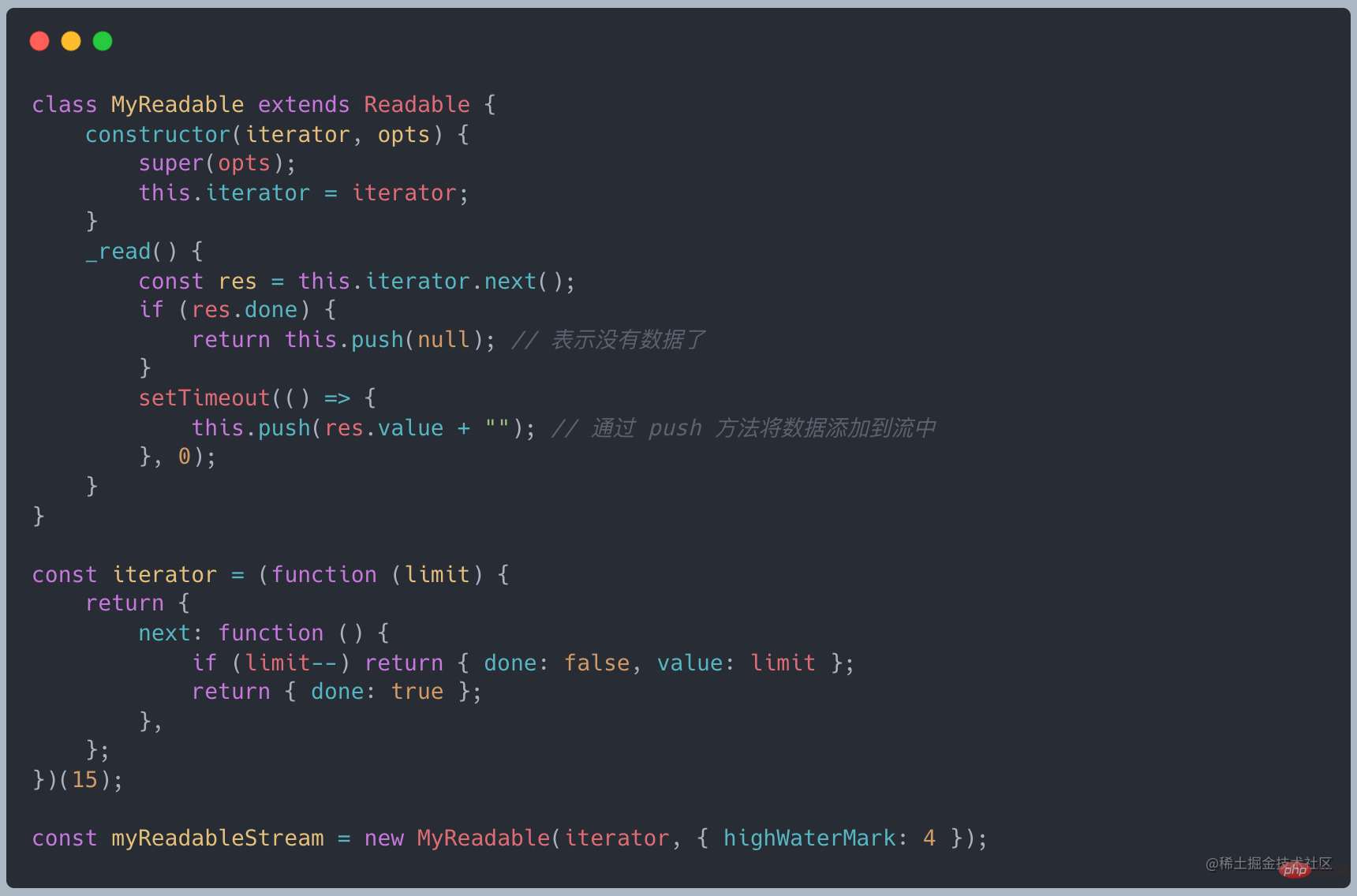

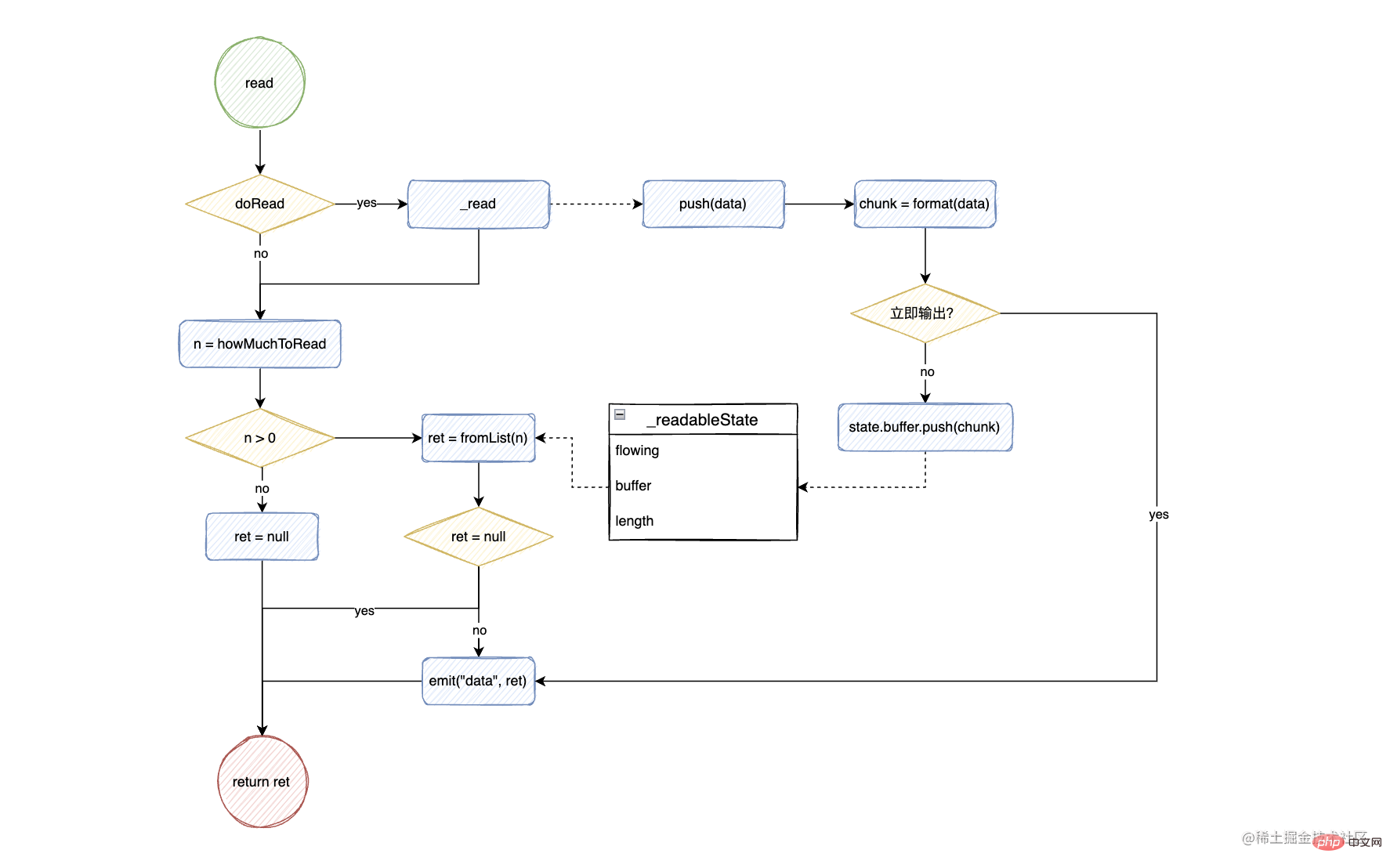

Implementation principle

When creating a readable stream, you need to inherit the Readable object and implement the _read method

- doReadA cache is maintained in the stream , when calling the read method to determine whether it is necessary to request data from the bottom layer

When the buffer length is 0 or less than the value of highWaterMark, _read will be called to get the data from the bottom layerSource code link

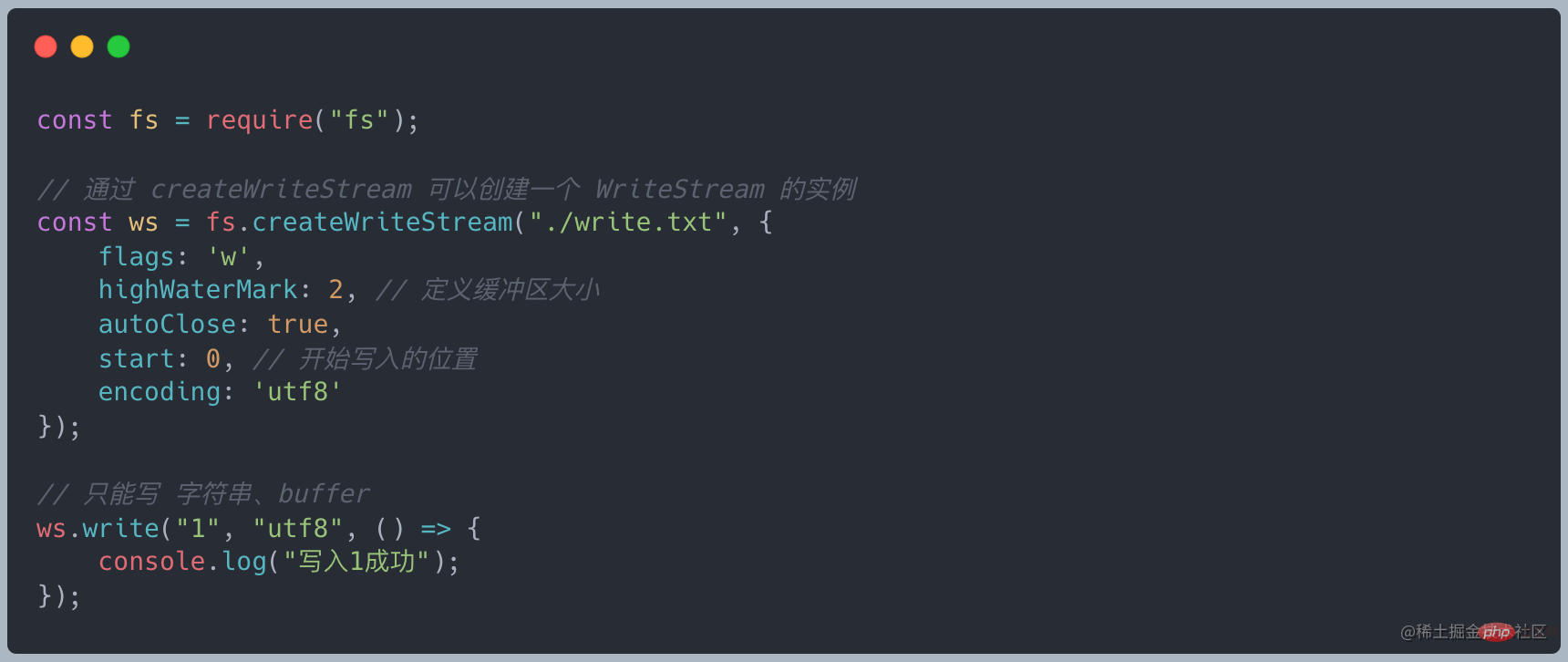

Writable StreamWritable Stream

The writable stream is an abstraction of the data writing destination. It is used to consume the data flowing from the upstream. Through the writable stream, Data is written to the device. A common write stream is writing to the local disk

Characteristics of writable streams

-

Write data through write

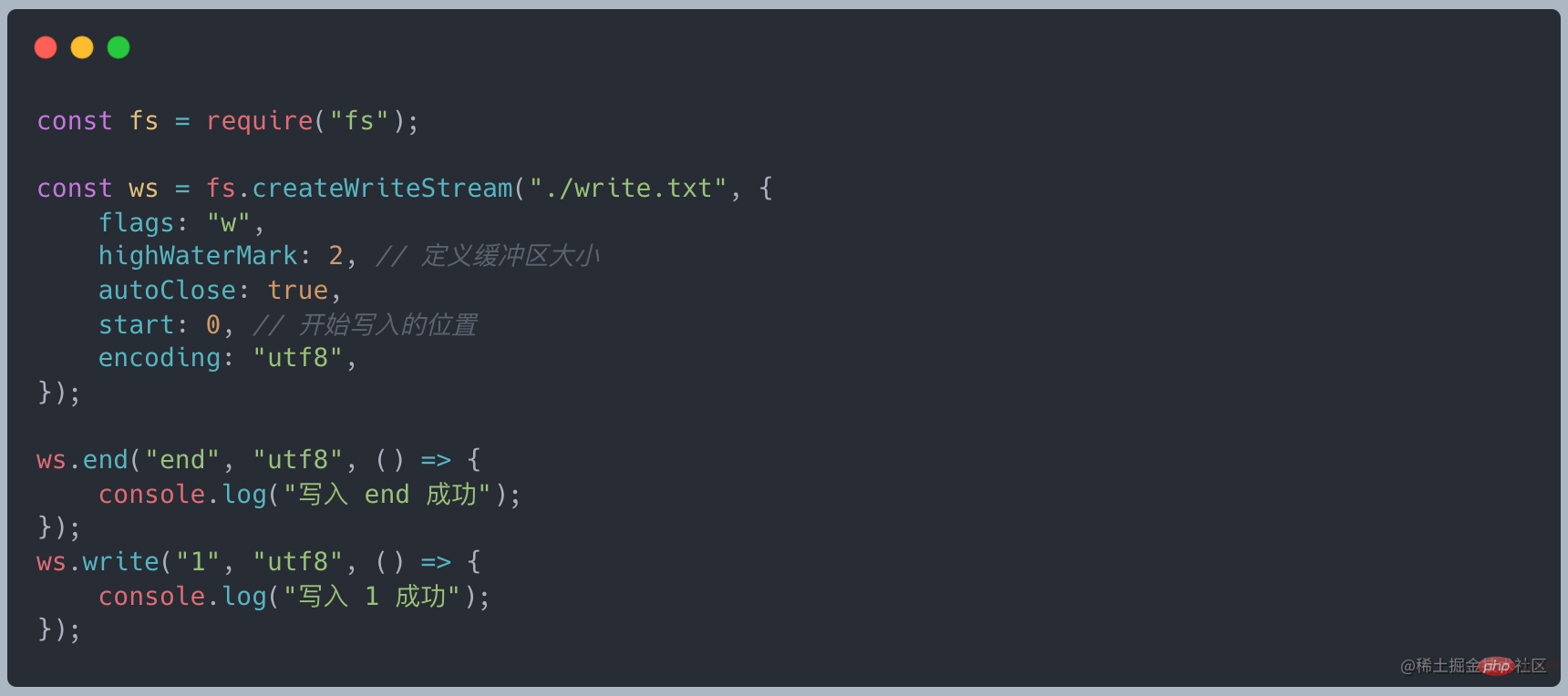

-

Write data through end and close the stream, end = write close

-

When the written data reaches the size of highWaterMark, the drain event will be triggered

Call ws.write( chunk) returns false, indicating that the current buffer data is greater than or equal to the value of highWaterMark, and the drain event will be triggered. In fact, it serves as a warning. We can still write data, but the unprocessed data will always be backlogged in the internal buffer of the writable stream until the backlog is filled with the Node.js buffer. Will it be forcibly interrupted

Customized writable stream

All Writeables implement the interface defined by the stream.Writeable class

You only need to implement the _write method to write data to the underlying layer

- Writing data to the stream by calling the writable.write method will call The _write method writes data to the bottom layer

- When _write data is successful, the next method needs to be called to process the next data

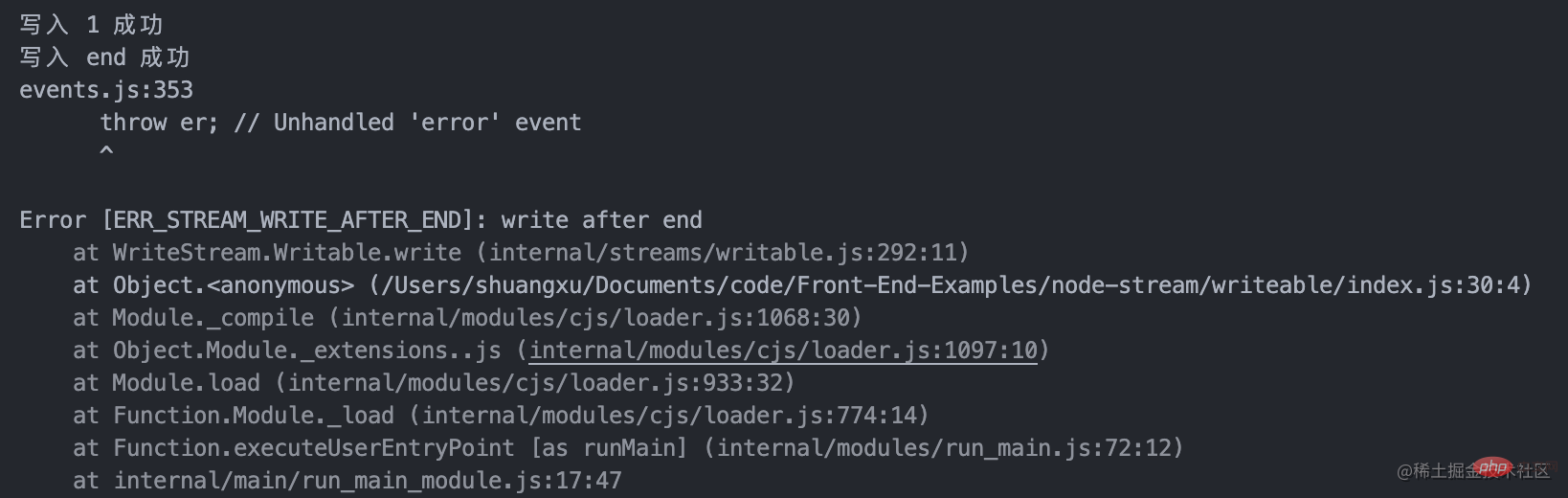

- mustcall writable.end(data) To end a writable stream, data is optional. After that, write cannot be called to add new data, otherwise an error will be reported

- After the end method is called, when all underlying write operations are completed, the finish event will be triggered

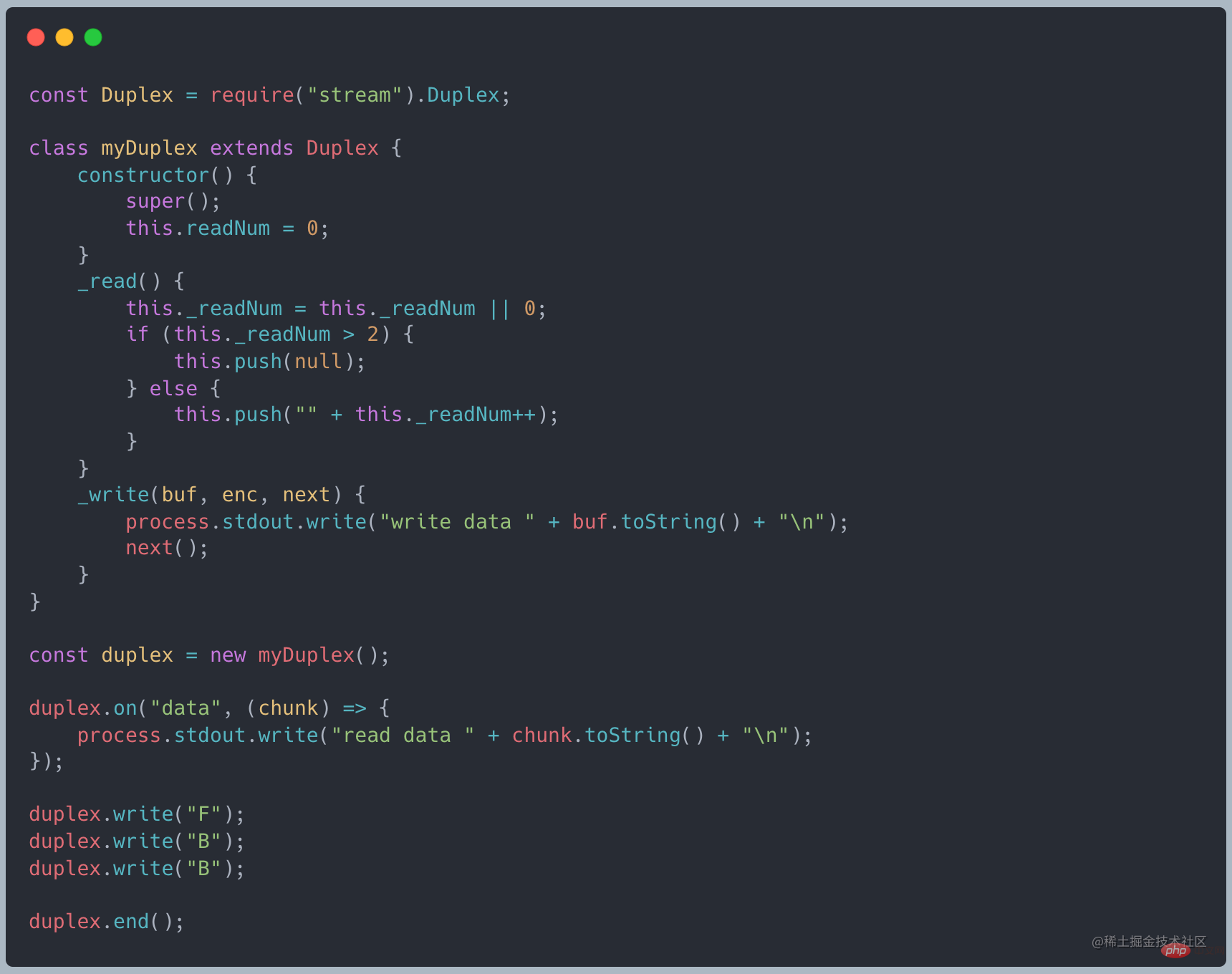

Duplex StreamDuplex Stream

Duplex stream can be both read and written. In fact, it is a stream that inherits Readable and Writable, so it can be used as both a readable stream and a writable stream.

A custom duplex stream needs to implement the _read method of Readable and The _write method of Writable

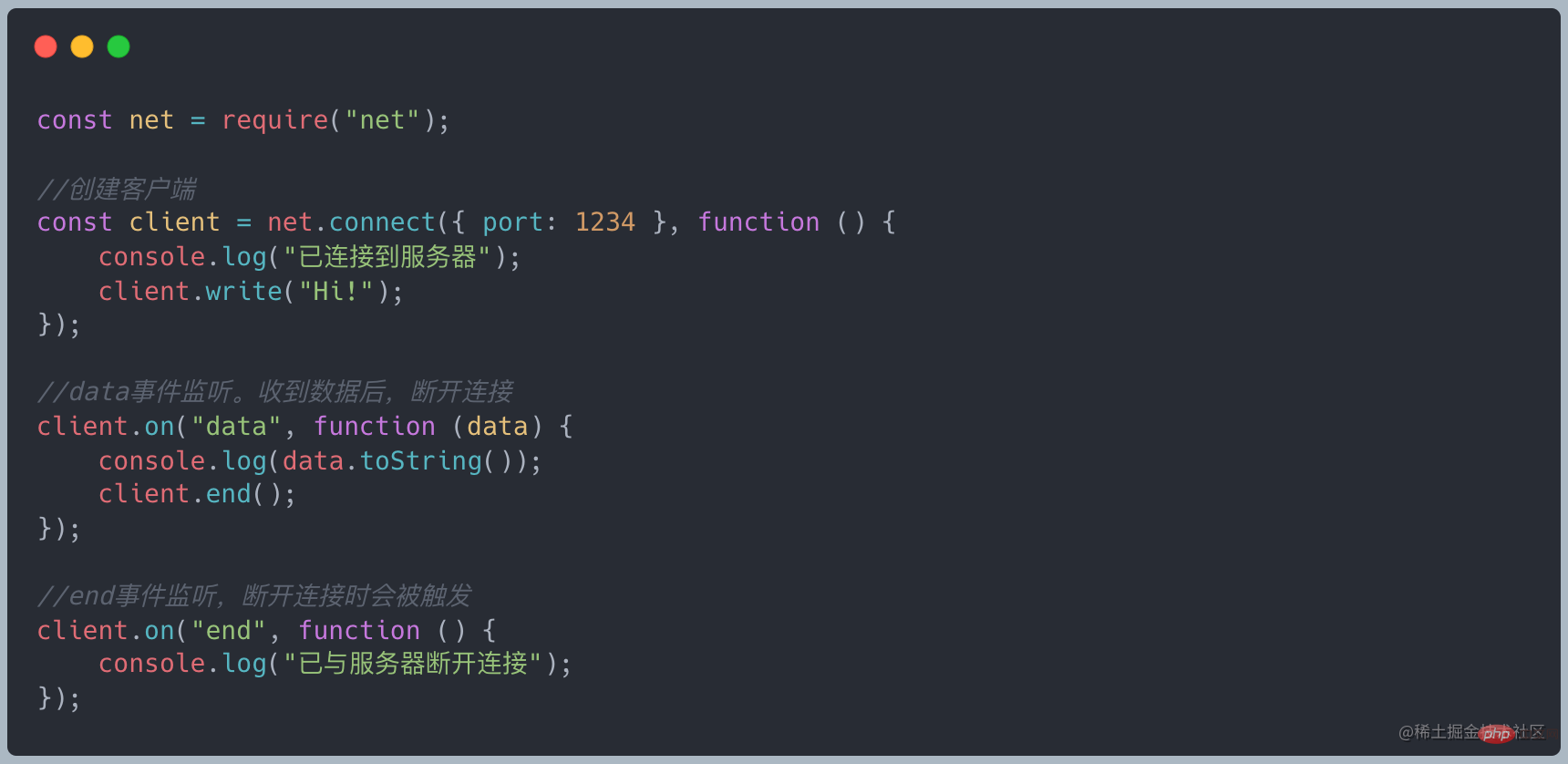

net module can be used to create a socket. The socket is a typical Duplex in NodeJS. See an example of a TCP client

client is a Duplex. The writable stream is used to send messages to the server, and the readable stream is used to receive server messages. There is no direct relationship between the data in the two streams

Transform StreamTransform Stream

In the above example, the data in the readable stream (0/1) and the data in the writable stream ('F','B','B') is isolated, and there is no relationship between the two, but for Transform, the data written on the writable side will be automatically added to the readable side after transformation.

Transform inherits from Duplex, and has already implemented the _write and _read methods. You only need to implement the _tranform method.

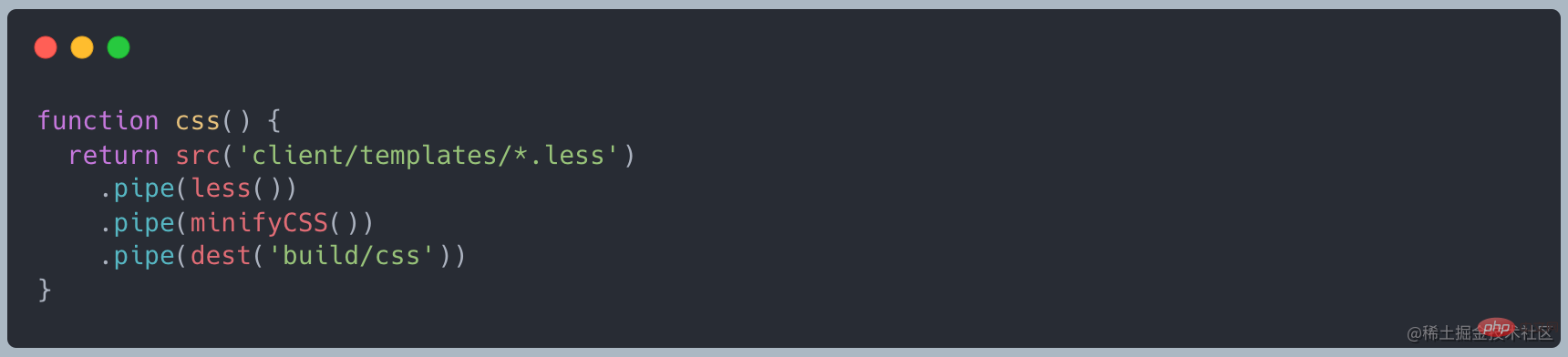

gulp Stream-based automation To build the tool, look at a sample code from the official website

Duplex and Transform selection

Compared with the above example, we find that when a stream serves both producers and consumers, we will choose Duplex, and when we just do some transformation work on the data, we will choose to use Transform

Backpressure problem

What is backpressure

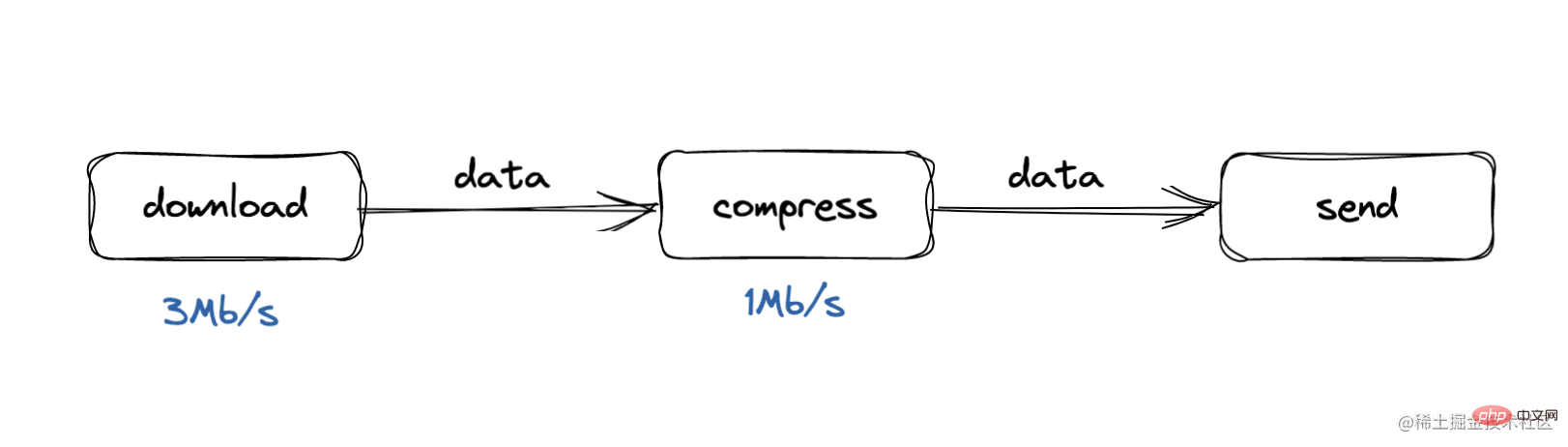

The backpressure problem comes from the producer-consumer model, where the consumer handles The speed is too slow

For example, during our download process, the processing speed is 3Mb/s, while during the compression process, the processing speed is 1Mb/s. In this case, the buffer queue will soon accumulate

Either the memory consumption of the entire process increases, or the entire buffer is slow and some data is lost

What is back pressure processing

Backpressure processing can be understood as a process of "proclaiming" upwards

When the compression processing finds that its buffer data squeeze exceeds the threshold, it "proclaims" to the download processing. I am too busy. , don’t send it again

Download processing will pause sending data downward after receiving the message

How to deal with back pressure

We have different functions to transfer data from one process to another. In Node.js, there is a built-in function called .pipe(), and ultimately, at a basic level in this process we have two unrelated components: the source of data, and the consumer.

When .pipe() is called by the source, it notifies the consumer that there is data to be transmitted. The pipeline function establishes a suitable backlog package for event triggering

When the data cache exceeds highWaterMark or the write queue is busy, .write() will return false

When false returns, The backlog system stepped in. It will pause incoming Readables from any data stream that is sending data. Once the data stream is emptied, the drain event will be triggered, consuming the incoming data stream

Once the queue is completely processed, the backlog mechanism will allow the data to be sent again. The memory space in use will release itself and prepare to receive the next batch of data

We can see the back pressure processing of the pipe:

- Divide the data according to chunks and write them

- When the chunk is too large or the queue is busy, read is paused

- When the queue is empty, continue reading data

For more node-related knowledge, please visit: nodejs tutorial!

The above is the detailed content of An in-depth analysis of Stream in Node. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

PHP and Vue: a perfect pairing of front-end development tools

Mar 16, 2024 pm 12:09 PM

PHP and Vue: a perfect pairing of front-end development tools

Mar 16, 2024 pm 12:09 PM

PHP and Vue: a perfect pairing of front-end development tools. In today's era of rapid development of the Internet, front-end development has become increasingly important. As users have higher and higher requirements for the experience of websites and applications, front-end developers need to use more efficient and flexible tools to create responsive and interactive interfaces. As two important technologies in the field of front-end development, PHP and Vue.js can be regarded as perfect tools when paired together. This article will explore the combination of PHP and Vue, as well as detailed code examples to help readers better understand and apply these two

Questions frequently asked by front-end interviewers

Mar 19, 2024 pm 02:24 PM

Questions frequently asked by front-end interviewers

Mar 19, 2024 pm 02:24 PM

In front-end development interviews, common questions cover a wide range of topics, including HTML/CSS basics, JavaScript basics, frameworks and libraries, project experience, algorithms and data structures, performance optimization, cross-domain requests, front-end engineering, design patterns, and new technologies and trends. . Interviewer questions are designed to assess the candidate's technical skills, project experience, and understanding of industry trends. Therefore, candidates should be fully prepared in these areas to demonstrate their abilities and expertise.

How to use Go language for front-end development?

Jun 10, 2023 pm 05:00 PM

How to use Go language for front-end development?

Jun 10, 2023 pm 05:00 PM

With the development of Internet technology, front-end development has become increasingly important. Especially the popularity of mobile devices requires front-end development technology that is efficient, stable, safe and easy to maintain. As a rapidly developing programming language, Go language has been used by more and more developers. So, is it feasible to use Go language for front-end development? Next, this article will explain in detail how to use Go language for front-end development. Let’s first take a look at why Go language is used for front-end development. Many people think that Go language is a

C# development experience sharing: front-end and back-end collaborative development skills

Nov 23, 2023 am 10:13 AM

C# development experience sharing: front-end and back-end collaborative development skills

Nov 23, 2023 am 10:13 AM

As a C# developer, our development work usually includes front-end and back-end development. As technology develops and the complexity of projects increases, the collaborative development of front-end and back-end has become more and more important and complex. This article will share some front-end and back-end collaborative development techniques to help C# developers complete development work more efficiently. After determining the interface specifications, collaborative development of the front-end and back-end is inseparable from the interaction of API interfaces. To ensure the smooth progress of front-end and back-end collaborative development, the most important thing is to define good interface specifications. Interface specification involves the name of the interface

Is Django front-end or back-end? check it out!

Jan 19, 2024 am 08:37 AM

Is Django front-end or back-end? check it out!

Jan 19, 2024 am 08:37 AM

Django is a web application framework written in Python that emphasizes rapid development and clean methods. Although Django is a web framework, to answer the question whether Django is a front-end or a back-end, you need to have a deep understanding of the concepts of front-end and back-end. The front end refers to the interface that users directly interact with, and the back end refers to server-side programs. They interact with data through the HTTP protocol. When the front-end and back-end are separated, the front-end and back-end programs can be developed independently to implement business logic and interactive effects respectively, and data exchange.

Exploring Go language front-end technology: a new vision for front-end development

Mar 28, 2024 pm 01:06 PM

Exploring Go language front-end technology: a new vision for front-end development

Mar 28, 2024 pm 01:06 PM

As a fast and efficient programming language, Go language is widely popular in the field of back-end development. However, few people associate Go language with front-end development. In fact, using Go language for front-end development can not only improve efficiency, but also bring new horizons to developers. This article will explore the possibility of using the Go language for front-end development and provide specific code examples to help readers better understand this area. In traditional front-end development, JavaScript, HTML, and CSS are often used to build user interfaces

Can golang be used as front-end?

Jun 06, 2023 am 09:19 AM

Can golang be used as front-end?

Jun 06, 2023 am 09:19 AM

Golang can be used as a front-end. Golang is a very versatile programming language that can be used to develop different types of applications, including front-end applications. By using Golang to write the front-end, you can get rid of a series of problems caused by languages such as JavaScript. For example, problems such as poor type safety, low performance, and difficult to maintain code.

How to implement instant messaging on the front end

Oct 09, 2023 pm 02:47 PM

How to implement instant messaging on the front end

Oct 09, 2023 pm 02:47 PM

Methods for implementing instant messaging include WebSocket, Long Polling, Server-Sent Events, WebRTC, etc. Detailed introduction: 1. WebSocket, which can establish a persistent connection between the client and the server to achieve real-time two-way communication. The front end can use the WebSocket API to create a WebSocket connection and achieve instant messaging by sending and receiving messages; 2. Long Polling, a technology that simulates real-time communication, etc.