How to compress large text storage in MySQL

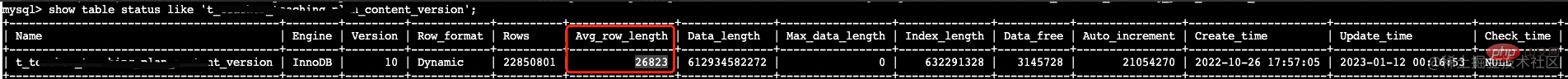

As mentioned earlier, we have a cloud document project whose snapshot content is stored directly in the database as large text. Most snapshot content fields are at the KB level, and some even reach the MB level. Reads have already been optimized with CDN caching (see "Static resource caching tool - CDN"), but writes and storage still need work. If large text can be compressed before it is stored, we can save a great deal of DB storage space and relieve DB I/O pressure.

Analysis of existing data

select
  table_name as 'Table',
  table_rows as 'Rows',
  truncate(data_length/1024/1024, 2) as 'Data size (MB)',
  truncate(index_length/1024/1024, 2) as 'Index size (MB)',
  truncate(DATA_FREE/1024/1024, 2) as 'Fragmentation (MB)'
from
  information_schema.tables
where
  table_schema = ${database_name}
order by
  data_length desc, index_length desc;

Background

What happens when a row in InnoDB exceeds the 16 KB page size?

The default InnoDB page size is 16 KB. If a row is too long to fit in a page, row overflow occurs and the overflowing part is stored elsewhere (in uncompressed BLOB pages). Because InnoDB stores data in a clustered index, that is, a B+Tree, each page must hold at least two rows; otherwise the B+Tree loses its purpose. The maximum length of a row is therefore limited to roughly 8 KB. (In the COMPACT row format, a large field keeps its first 768 bytes in the data page and the rest overflows to other pages; the data page also holds a 20-byte pointer to the overflow page.)

- With the DYNAMIC row format, a large object field (text/blob) whose data is no larger than 40 bytes is stored entirely in the data page. Otherwise, when the field is moved off-page, the data page keeps only a 20-byte pointer to the overflow page. So if every large object field holds no more than 40 bytes, the effect is the same as varchar(40).

- innodb-row-format-dynamic:dev.mysql.com/doc/refman/…
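As a rough illustration of the numbers above, the following Python sketch models how many bytes of a single large column remain in the data page. It is a simplified model, not InnoDB's actual logic: it assumes the 768-byte prefix applies to the COMPACT row format, that the column has already been chosen for off-page storage, and it ignores row headers. The function names are hypothetical.

```python
# Simplified model of the in-page footprint of one large column.
# Constants are taken from the text; everything else is an assumption.

PREFIX_BYTES = 768    # in-page prefix kept by the COMPACT row format
POINTER_BYTES = 20    # pointer to the overflow page
INLINE_LIMIT = 40     # DYNAMIC keeps columns up to this size in the page


def in_page_bytes_compact(column_len: int) -> int:
    """Bytes left in the data page for a column under COMPACT."""
    if column_len <= PREFIX_BYTES:
        return column_len                  # short enough, no overflow page
    return PREFIX_BYTES + POINTER_BYTES    # prefix plus pointer


def in_page_bytes_dynamic(column_len: int) -> int:
    """Bytes left in the data page for an off-page column under DYNAMIC."""
    if column_len <= INLINE_LIMIT:
        return column_len                  # tiny values stay inline
    return POINTER_BYTES                   # only the pointer remains


if __name__ == "__main__":
    for size in (30, 500, 10_000):
        print(size, in_page_bytes_compact(size), in_page_bytes_dynamic(size))
```

For a 10,000-byte value this model leaves 788 bytes in the page under COMPACT and 20 bytes under DYNAMIC, which is why DYNAMIC fits more rows per page when columns are large.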

Linux Sparse Files & Holes

- Sparse file: a sparse file behaves like any ordinary file, except that some regions of it contain only zeros, and those regions do not occupy disk space

- File hole: a file offset can be set beyond the actual end of the file; bytes that lie within the file but were never written read back as 0. Whether a hole takes up disk space is determined by the operating system

The hole part of the file does not occupy disk space, and the disk space occupied by the file is still continuous.
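The difference between a file's apparent size and the space actually allocated to it can be observed with a short Python sketch. It assumes a Linux (or other POSIX) system where `st_blocks` is reported in 512-byte units; the helper name `measure_sparse_file` is made up for this illustration, and the allocated size it reports depends on the file system.

```python
import os
import tempfile
from typing import Tuple


def measure_sparse_file(hole_bytes: int, payload: bytes) -> Tuple[int, int]:
    """Create a temporary file with a hole followed by payload.

    Returns (apparent_size, allocated_bytes).
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "sparse.bin")
        with open(path, "wb") as f:
            f.seek(hole_bytes)   # skip ahead without writing: this leaves a hole
            f.write(payload)     # only these bytes are actually written
        st = os.stat(path)
        # st_blocks counts 512-byte units on Linux
        return st.st_size, st.st_blocks * 512


if __name__ == "__main__":
    apparent, allocated = measure_sparse_file(64 * 1024 * 1024, b"tail")
    print("apparent size :", apparent)
    print("allocated size:", allocated)
```

On a file system that supports sparse files, the allocated size should be far smaller than the apparent size, which is the same relationship that FILE_SIZE and ALLOCATED_SIZE express for an InnoDB tablespace.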

Compression scheme provided by innodb

Page compression

Applicable scenarios: the data volume is large and disk space is short, the load is mainly I/O-bound, and the server's CPU has ample headroom.

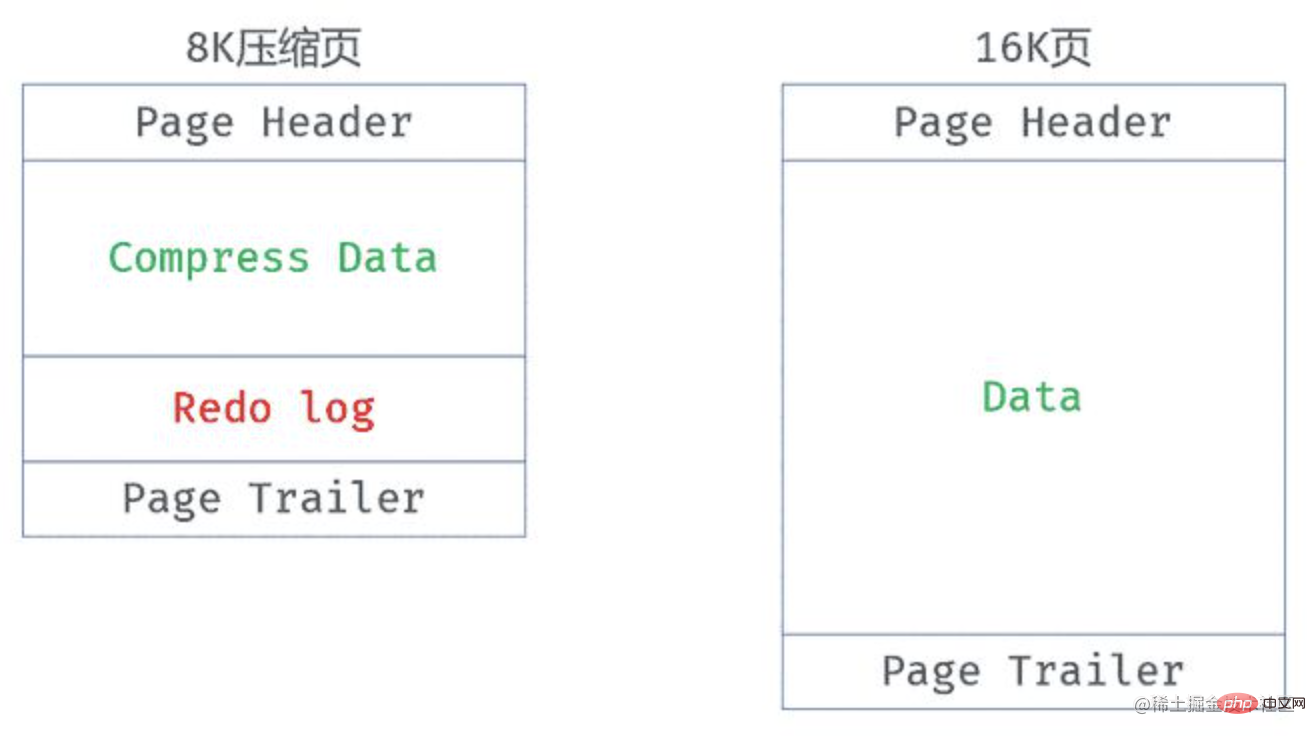

1) COMPRESS page compression

Related documents: dev.mysql.com/doc/refman/…

- This is the page compression feature available before MySQL 5.7: specify ROW_FORMAT=COMPRESSED when creating the table, and set the compressed page size with KEY_BLOCK_SIZE

- It has design flaws that can cause a noticeable performance drop, even though its original intent was to improve performance; it introduced the concept of "the log is the data"

- When data in a compressed page is modified, the page itself is not changed directly. Instead, the change is written to a modification log stored in that page. This is friendlier to data changes, because there is no need to decompress and recompress on every modification

- For reads, compressed data cannot be read directly, so this algorithm keeps a decompressed 16K copy of the page in memory

- As a result, a page may exist in the buffer pool in two versions (compressed and uncompressed). This causes a serious problem: the number of pages the buffer pool can cache drops sharply, which can greatly reduce database performance

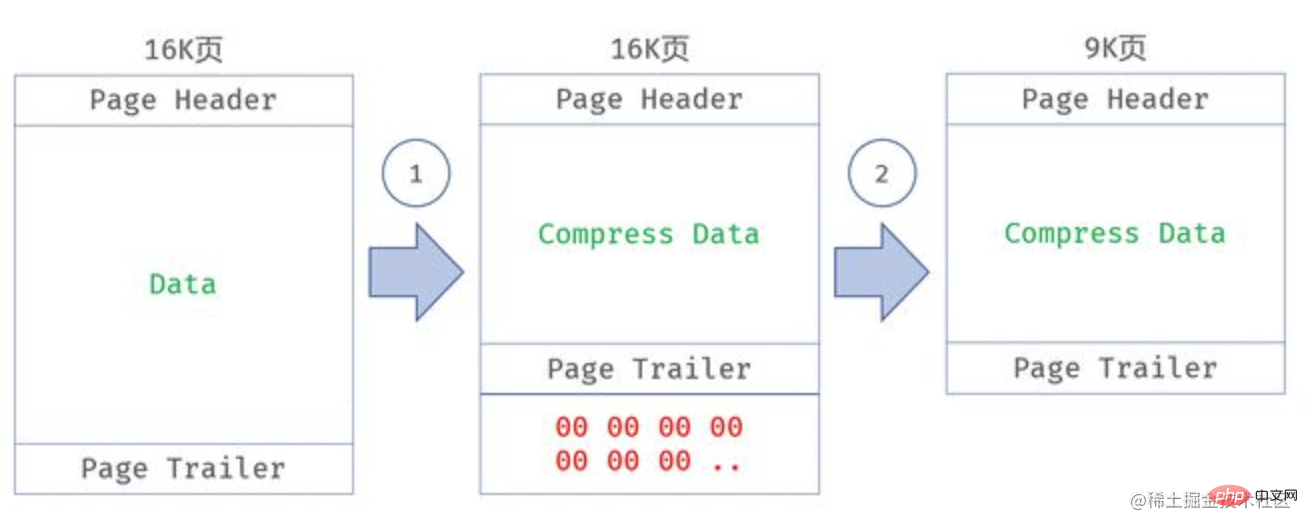

2) TPC (Transparent Page Compression)

Related documents: dev.mysql.com/doc/refman/…

- Working principle: when a page is written, it is compressed with the specified compression algorithm and then written to disk. The hole punching mechanism releases the empty space at the end of the page (this requires the operating system to support the hole punch feature)

- ALTER TABLE xxx COMPRESSION = 'zlib' enables TPC page compression, but it only applies to data written afterwards. To compress the entire table, including existing data, you also need to run OPTIMIZE TABLE xxx

- Implementation process: in the buffer pool, a compressed page is an ordinary 16K uncompressed page. It is compressed only when it is flushed to disk. The space left over after compression is filled with 0x00, and the file system's hole punch is used to trim the file and release the sparse space occupied by the 0x00 bytes

- TPC works well, but it relies on the operating system's hole punch feature, and the trimmed size must be aligned to the file system block size (4K). For example, if a page compresses to 9K, it still occupies 12K on disk
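The block alignment can be checked with a small calculation. The Python sketch below rounds a compressed page size up to whole file system blocks; the function name and the cap at the 16K page size are assumptions made for illustration, not InnoDB code.

```python
def on_disk_size(compressed_bytes: int,
                 fs_block_size: int = 4096,
                 page_size: int = 16384) -> int:
    """Space one page occupies on disk after compression and hole punching."""
    if compressed_bytes <= 0:
        raise ValueError("compressed_bytes must be positive")
    # Round up to a whole number of file system blocks (ceiling division).
    blocks = -(-compressed_bytes // fs_block_size)
    # A page can never occupy more than its full, uncompressed size.
    return min(blocks * fs_block_size, page_size)


if __name__ == "__main__":
    for kb in (3, 9, 13):
        print(f"{kb}K compressed -> {on_disk_size(kb * 1024) // 1024}K on disk")
```

With 4K blocks, a 9K result occupies 12K, and anything larger than 12K saves nothing, so the effective saving depends as much on the block size as on the compression algorithm.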

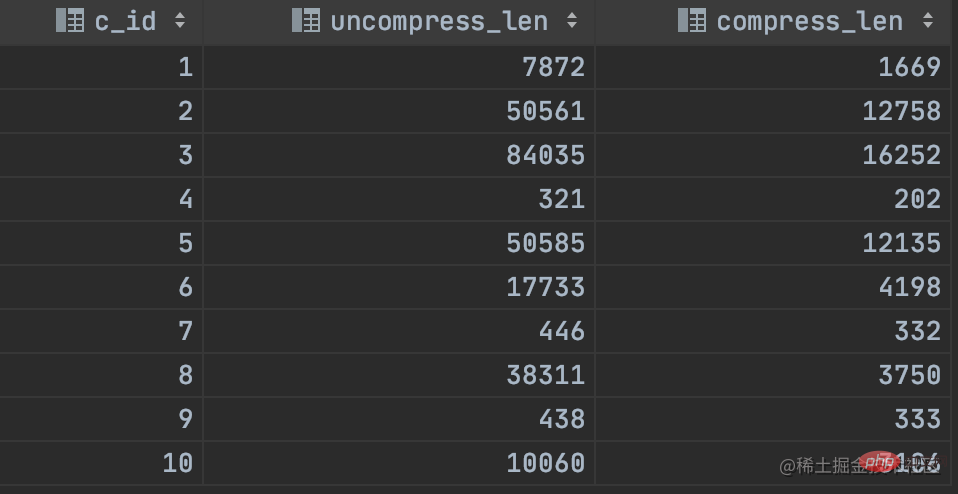

Column Compression

MySQL currently has no built-in column compression, but there is a workaround: use the compression and decompression functions that MySQL provides, called from the business layer. That is, to compress a column, call the COMPRESS function on its content when writing, and call the UNCOMPRESS function on the stored data when reading.

- Usage scenario: tables in which some columns hold relatively long data, typically of types such as varchar, text, blob, and json

- Related functions:

  - Compression function: COMPRESS()

  - Decompression function: UNCOMPRESS()

  - String length function: LENGTH()

  - Uncompressed string length function: UNCOMPRESSED_LENGTH()

- Test:

  - Insert data: insert into xxx (content) values (compress('xxx....'))

  - Read compressed data: select c_id, uncompressed_length(c_content) uncompress_len, length(c_content) compress_len from xxx
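If compression is moved out of the database entirely, the application can produce the same byte layout itself. The Python sketch below is based on the MySQL manual's description of COMPRESS(): a 4-byte uncompressed length (low byte first) followed by a zlib stream, with a trailing `.` appended when the compressed data ends in a space. The function names are hypothetical, and the compatibility with UNCOMPRESS() is an assumption that should be verified against your server version before relying on it.

```python
import struct
import zlib


def mysql_compress(data: bytes) -> bytes:
    """Build a value in the layout described for MySQL's COMPRESS()."""
    if not data:
        return b""                      # an empty string is stored as empty
    body = zlib.compress(data)
    if body.endswith(b" "):
        body += b"."                    # guard against end-space trimming
    # 4-byte uncompressed length, little-endian, then the zlib stream
    return struct.pack("<I", len(data)) + body


def mysql_uncompressed_length(blob: bytes) -> int:
    """Read the length prefix, like UNCOMPRESSED_LENGTH()."""
    return struct.unpack("<I", blob[:4])[0] if blob else 0


def mysql_uncompress(blob: bytes) -> bytes:
    """Decode a value in that layout, like UNCOMPRESS()."""
    if not blob:
        return b""
    # decompressobj tolerates the optional trailing '.' after the stream
    return zlib.decompressobj().decompress(blob[4:])


if __name__ == "__main__":
    text = ("snapshot " * 1000).encode("utf-8")
    blob = mysql_compress(text)
    print("original bytes  :", len(text))
    print("compressed bytes:", len(blob))
    print("round trip ok   :", mysql_uncompress(blob) == text)
```

Compressing in the application moves the CPU cost off the database server, at the price of every reader and writer having to agree on the format. Compressed values are binary, so they belong in a VARBINARY or BLOB column.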


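Why are the compression techniques provided by InnoDB all page-based?

- Record compression: every read or write of a record requires compression or decompression, which relies heavily on CPU computing power, so performance is relatively poor

- Tablespace compression: the compression efficiency is high, but it requires the tablespace file to be static and not growing, which does not fit most of our scenarios

- Page compression: improves efficiency while striking a reasonable balance with performance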

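Summary

- For business tables that are not performance-sensitive, such as log, monitoring, and alert tables, where the goal is only to reduce storage space and the performance impact is of little concern, COMPRESS page compression can be used

- For more critical core tables, TPC compression is recommended

- Column compression relies heavily on the CPU and performs somewhat worse. It also requires changes to the business code and is less flexible, so you need to assess the scope of impact and prepare a switchover plan. The benefit is that the business side decides which data to compress and controls decompression

- Page compression requires no changes on the business side, is fully transparent to production, and is a very mature solution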

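Why compress data?

- Processor and cache memory speeds have improved faster than disk storage devices, so workloads are often bound by disk I/O. Data compression makes data occupy less space, which saves disk I/O and reduces network I/O and therefore improves throughput, at the cost of some CPU resources

- OLTP systems frequently perform update, delete, and insert operations; compressed tables reduce storage usage and I/O consumption

- Compression is really a trade-off and is not necessarily meant to improve database performance. The balance depends on weighing the benefits of compression against the cost of decompression. For storage space, however, the gain is undoubtedly large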

Simple test

InnoDB transparent page compression (TPC)

Test data

1) Create the tables

- create table table_origin ( ...... ) comment 'original test table';

- create table table_compression_zlib ( ...... ) comment 'compressed test table (zlib)' compression = 'zlib';

- create table table_compression_lz4 ( ...... ) comment 'compressed test table (lz4)' compression = 'lz4';

2) Write 100,000 rows of test data into each table

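Compression ratio

SELECT NAME, FS_BLOCK_SIZE, FILE_SIZE, ALLOCATED_SIZE FROM information_schema.INNODB_TABLESPACES WHERE NAME like 'test_compress%';

- FS_BLOCK_SIZE: the file system block size, which is the unit used for hole punching

- FILE_SIZE: the apparent size of the file, which represents the maximum (uncompressed) size of the file

- ALLOCATED_SIZE: the actual size of the file, that is, the amount of space allocated on disk

Compression ratio (ALLOCATED_SIZE / FILE_SIZE):

- zlib: 1320636416/3489660928 = 37.8%

- lz4: 1566949376/3489660928 = 44.9%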
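The ratios can be reproduced, and turned into space saved, with a few lines of Python. The figures are the byte counts quoted above; the sketch assumes that 3489660928 is the FILE_SIZE and that the smaller numbers are the ALLOCATED_SIZE of each compressed table.

```python
FILE_SIZE = 3_489_660_928          # apparent size reported for the tables

ALLOCATED_SIZE = {                 # space actually allocated on disk
    "zlib": 1_320_636_416,
    "lz4": 1_566_949_376,
}


def compression_ratio(allocated: int, apparent: int) -> float:
    """Fraction of the apparent size that is actually stored on disk."""
    return allocated / apparent


if __name__ == "__main__":
    for algo, allocated in ALLOCATED_SIZE.items():
        ratio = compression_ratio(allocated, FILE_SIZE)
        print(f"{algo}: ratio {ratio:.1%}, space saved {1 - ratio:.1%}")
```

A lower ratio means better compression: in this test zlib stores a little over a third of the apparent size, while lz4 stores a little under half.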

Elapsed time

- Inserting 100,000 records in a loop

  - Original table: 918275 ms

  - zlib: 878540 ms

  - lz4: 875259 ms

- Querying 100,000 records in a loop

  - Original table: 332519 ms

  - zlib: 373387 ms

  - lz4: 343501 ms
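To make the timings easier to compare, the Python sketch below expresses each measurement as a percentage change relative to the uncompressed table. It uses only the numbers reported above; since they come from a single run each, small differences may be within measurement noise.

```python
TIMINGS_MS = {
    "insert": {"origin": 918_275, "zlib": 878_540, "lz4": 875_259},
    "query": {"origin": 332_519, "zlib": 373_387, "lz4": 343_501},
}


def relative_change(baseline_ms: int, measured_ms: int) -> float:
    """Percentage change versus the baseline (negative means faster)."""
    return (measured_ms - baseline_ms) / baseline_ms * 100


if __name__ == "__main__":
    for operation, results in TIMINGS_MS.items():
        baseline = results["origin"]
        for algo in ("zlib", "lz4"):
            change = relative_change(baseline, results[algo])
            print(f"{operation:6s} {algo:4s} {change:+.1f}%")
```

In this test, inserts into the compressed tables were slightly faster than into the original table (about 4% to 5%), while reads were slower, by roughly 12% for zlib and 3% for lz4.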
