What is process number 0 in linux?

In Linux, process No. 0 refers to the idle process, which is the first process started by Linux; the comm field of its task_struct is "swapper", so it is also called the swpper process. Process No. 0 is the only process that is not generated through fork or kernel_thread, because init_task is a static variable (initialized global variable), and the PCBs of other processes are created by fork or kernel_thread dynamically applying for memory.

#The operating environment of this tutorial: linux7.3 system, Dell G3 computer.

1. Process No. 0

# Process No. 0 is usually also called the idle process or the swapper process.

Each process has a process control block PCB (Process Control Block). The data structure type of PCB is struct task_struct. The PCB corresponding to the idle process is struct task_struct init_task.

The idle process is the only process that is not generated through fork or kernel_thread, because init_task is a static variable (initialized global variable), and the PCBs of other processes are created by fork or kernel_thread dynamically applying for memory.

Each process has a corresponding function. The function of the idle process is start_kernel(), because before entering this function, the stack pointer SP has pointed to the top of the stack of init_task. Which process you are in depends on which SP points to Process stack.

Process No. 0 is the first process started by Linux. The comm field of its task_struct is "swapper", so it is also called the swpper process.

#define INIT_TASK_COMM "swapper"

When all the processes in the system are up, process No. 0 will degenerate into an idle process. When there are no tasks to run on a core, the idle process will be run. Once the idle process is running, the core can enter a low-power mode, which is WFI on ARM.

Our focus in this section is how process No. 0 is started. In the Linux kernel, a static task_struct structure is specially defined for process No. 0, called init_task.

/*

* Set up the first task table, touch at your own risk!. Base=0,

* limit=0x1fffff (=2MB)

*/

struct task_struct init_task

= {

#ifdef CONFIG_THREAD_INFO_IN_TASK

.thread_info = INIT_THREAD_INFO(init_task),

.stack_refcount = ATOMIC_INIT(1),

#endif

.state = 0,

.stack = init_stack,

.usage = ATOMIC_INIT(2),

.flags = PF_KTHREAD,

.prio = MAX_PRIO - 20,

.static_prio = MAX_PRIO - 20,

.normal_prio = MAX_PRIO - 20,

.policy = SCHED_NORMAL,

.cpus_allowed = CPU_MASK_ALL,

.nr_cpus_allowed= NR_CPUS,

.mm = NULL,

.active_mm = &init_mm,

.tasks = LIST_HEAD_INIT(init_task.tasks),

.ptraced = LIST_HEAD_INIT(init_task.ptraced),

.ptrace_entry = LIST_HEAD_INIT(init_task.ptrace_entry),

.real_parent = &init_task,

.parent = &init_task,

.children = LIST_HEAD_INIT(init_task.children),

.sibling = LIST_HEAD_INIT(init_task.sibling),

.group_leader = &init_task,

RCU_POINTER_INITIALIZER(real_cred, &init_cred),

RCU_POINTER_INITIALIZER(cred, &init_cred),

.comm = INIT_TASK_COMM,

.thread = INIT_THREAD,

.fs = &init_fs,

.files = &init_files,

.signal = &init_signals,

.sighand = &init_sighand,

.blocked = {{0}},

.alloc_lock = __SPIN_LOCK_UNLOCKED(init_task.alloc_lock),

.journal_info = NULL,

INIT_CPU_TIMERS(init_task)

.pi_lock = __RAW_SPIN_LOCK_UNLOCKED(init_task.pi_lock),

.timer_slack_ns = 50000, /* 50 usec default slack */

.thread_pid = &init_struct_pid,

.thread_group = LIST_HEAD_INIT(init_task.thread_group),

.thread_node = LIST_HEAD_INIT(init_signals.thread_head),

};

EXPORT_SYMBOL(init_task);The members in this structure are all statically defined. For simple explanation, this structure has been simply deleted. At the same time, we only pay attention to the following fields in this structure, and do not pay attention to others.

.thread_info = INIT_THREAD_INFO(init_task), this structure is described in detail in the relationship between thread_info and the kernel stack

.stack = init_stack , init_stack is the static definition of the kernel stack

.comm = INIT_TASK_COMM, the name of process No. 0.

Both thread_info and stack involve Init_stack, so first look at where init_stack is set.

Finally found that init_task is defined in the link script.

#define INIT_TASK_DATA(align) \

. = ALIGN(align); \

__start_init_task = .; \

init_thread_union = .; \

init_stack = .; \

KEEP(*(.data..init_task)) \

KEEP(*(.data..init_thread_info)) \

. = __start_init_task + THREAD_SIZE; \

__end_init_task = .;An INIT_TASK_DATA macro is defined in the link script.

Among them, __start_init_task is the base address of the kernel stack of process No. 0. Of course, init_thread_union=init_task=__start_init_task.

The end address of the kernel stack of process 0 is equal to __start_init_task THREAD_SIZE. The size of THREAD_SIZE is generally 16K or 32K in ARM64. Then __end_init_task is the end address of the kernel stack of process No. 0.

The idle process is automatically created by the system and runs in the kernel state. The idle process has pid=0. Its predecessor is the first process created by the system and the only process that is not generated through fork or kernel_thread. After loading the system, it evolves into process scheduling and exchange.

2. Starting the Linux kernel

Friends who are familiar with the Linux kernel know that the bootloader is usually used to complete the loading of the Linux kernel. The bootloader will do Some hardware initialization will then jump to the running address of the Linux kernel.

If you are familiar with the ARM architecture, you also know that the ARM64 architecture is divided into EL0, EL1, EL2, and EL3. Normal startup usually starts from high privilege mode to low privilege mode. Generally speaking, ARM64 runs EL3 first, then EL2, and then traps from EL2 to EL1, which is our Linux kernel.

Let’s take a look at the code for Linux kernel startup.

Code path: arch/arm64/kernel/head.S file

/*

* Kernel startup entry point.

* ---------------------------

*

* The requirements are:

* MMU = off, D-cache = off, I-cache = on or off,

* x0 = physical address to the FDT blob.

*

* This code is mostly position independent so you call this at

* __pa(PAGE_OFFSET + TEXT_OFFSET).

*

* Note that the callee-saved registers are used for storing variables

* that are useful before the MMU is enabled. The allocations are described

* in the entry routines.

*/

/*

* The following callee saved general purpose registers are used on the

* primary lowlevel boot path:

*

* Register Scope Purpose

* x21 stext() .. start_kernel() FDT pointer passed at boot in x0

* x23 stext() .. start_kernel() physical misalignment/KASLR offset

* x28 __create_page_tables() callee preserved temp register

* x19/x20 __primary_switch() callee preserved temp registers

*/

ENTRY(stext)

bl preserve_boot_args

bl el2_setup // Drop to EL1, w0=cpu_boot_mode

adrp x23, __PHYS_OFFSET

and x23, x23, MIN_KIMG_ALIGN - 1 // KASLR offset, defaults to 0

bl set_cpu_boot_mode_flag

bl __create_page_tables

/*

* The following calls CPU setup code, see arch/arm64/mm/proc.S for

* details.

* On return, the CPU will be ready for the MMU to be turned on and

* the TCR will have been set.

*/

bl __cpu_setup // initialise processor

b __primary_switch

ENDPROC(stext)The above is the main work done by the kernel before calling start_kernel.

preserve_boot_args is used to preserve the parameters passed by the bootloader, such as the address of the usual dtb on ARM

el2_setup: From the comments, it is used to trap to EL1, indicating that we are running this command before Still in EL2

__create_page_tables: Used to create page tables. Only Linux has pages to manage physical memory. Before using the virtual address, you need to set the page and then open the MMU. Currently it is still running on the physical address

__primary_switch: The main task is to complete the opening of the MMU

__primary_switch:

adrp x1, init_pg_dir

bl __enable_mmu

ldr x8, =__primary_switched

adrp x0, __PHYS_OFFSET

br x8

ENDPROC(__primary_switch)主要是调用__enable_mmu来打开mmu,之后我们访问的就是虚拟地址了

调用__primary_switched来设置0号进程的运行内核栈,然后调用start_kernel函数

/*

* The following fragment of code is executed with the MMU enabled.

*

* x0 = __PHYS_OFFSET

*/

__primary_switched:

adrp x4, init_thread_union

add sp, x4, #THREAD_SIZE

adr_l x5, init_task

msr sp_el0, x5 // Save thread_info

adr_l x8, vectors // load VBAR_EL1 with virtual

msr vbar_el1, x8 // vector table address

isb

stp xzr, x30, [sp, #-16]!

mov x29, sp

str_l x21, __fdt_pointer, x5 // Save FDT pointer

ldr_l x4, kimage_vaddr // Save the offset between

sub x4, x4, x0 // the kernel virtual and

str_l x4, kimage_voffset, x5 // physical mappings

// Clear BSS

adr_l x0, __bss_start

mov x1, xzr

adr_l x2, __bss_stop

sub x2, x2, x0

bl __pi_memset

dsb ishst // Make zero page visible to PTW

add sp, sp, #16

mov x29, #0

mov x30, #0

b start_kernel

ENDPROC(__primary_switched)init_thread_union就是我们在链接脚本中定义的,也就是0号进程的内核栈的栈底

add sp, x4, #THREAD_SIZE: 设置堆栈指针SP的值,就是内核栈的栈底+THREAD_SIZE的大小。现在SP指到了内核栈的顶端

最终通过b start_kernel就跳转到我们熟悉的linux内核入口处了。 至此0号进程就已经运行起来了。

三、1号进程

3.1 1号进程的创建

当一条b start_kernel指令运行后,内核就开始的内核的全面初始化操作。

asmlinkage __visible void __init start_kernel(void)

{

char *command_line;

char *after_dashes;

set_task_stack_end_magic(&init_task);

smp_setup_processor_id();

debug_objects_early_init();

cgroup_init_early();

local_irq_disable();

early_boot_irqs_disabled = true;

/*

* Interrupts are still disabled. Do necessary setups, then

* enable them.

*/

boot_cpu_init();

page_address_init();

pr_notice("%s", linux_banner);

setup_arch(&command_line);

/*

* Set up the the initial canary and entropy after arch

* and after adding latent and command line entropy.

*/

add_latent_entropy();

add_device_randomness(command_line, strlen(command_line));

boot_init_stack_canary();

mm_init_cpumask(&init_mm);

setup_command_line(command_line);

setup_nr_cpu_ids();

setup_per_cpu_areas();

smp_prepare_boot_cpu(); /* arch-specific boot-cpu hooks */

boot_cpu_hotplug_init();

build_all_zonelists(NULL);

page_alloc_init();

。。。。。。。

acpi_subsystem_init();

arch_post_acpi_subsys_init();

sfi_init_late();

/* Do the rest non-__init'ed, we're now alive */

arch_call_rest_init();

}

void __init __weak arch_call_rest_init(void)

{

rest_init();

}start_kernel函数就是内核各个重要子系统的初始化,比如mm, cpu, sched, irq等等。最后会调用一个rest_init剩余部分初始化,start_kernel在其最后一个函数rest_init的调用中,会通过kernel_thread来生成一个内核进程,后者则会在新进程环境下调 用kernel_init函数,kernel_init一个让人感兴趣的地方在于它会调用run_init_process来执行根文件系统下的 /sbin/init等程序。

noinline void __ref rest_init(void)

{

struct task_struct *tsk;

int pid;

rcu_scheduler_starting();

/*

* We need to spawn init first so that it obtains pid 1, however

* the init task will end up wanting to create kthreads, which, if

* we schedule it before we create kthreadd, will OOPS.

*/

pid = kernel_thread(kernel_init, NULL, CLONE_FS);

/*

* Pin init on the boot CPU. Task migration is not properly working

* until sched_init_smp() has been run. It will set the allowed

* CPUs for init to the non isolated CPUs.

*/

rcu_read_lock();

tsk = find_task_by_pid_ns(pid, &init_pid_ns);

set_cpus_allowed_ptr(tsk, cpumask_of(smp_processor_id()));

rcu_read_unlock();

numa_default_policy();

pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

rcu_read_lock();

kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);

rcu_read_unlock();

/*

* Enable might_sleep() and smp_processor_id() checks.

* They cannot be enabled earlier because with CONFIG_PREEMPT=y

* kernel_thread() would trigger might_sleep() splats. With

* CONFIG_PREEMPT_VOLUNTARY=y the init task might have scheduled

* already, but it's stuck on the kthreadd_done completion.

*/

system_state = SYSTEM_SCHEDULING;

complete(&kthreadd_done);

}在这个rest_init函数中我们只关系两点:

pid = kernel_thread(kernel_init, NULL, CLONE_FS);

pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

/*

* Create a kernel thread.

*/

pid_t kernel_thread(int (*fn)(void *), void *arg, unsigned long flags)

{

return _do_fork(flags|CLONE_VM|CLONE_UNTRACED, (unsigned long)fn,

(unsigned long)arg, NULL, NULL, 0);

}很明显这是创建了两个内核线程,而kernel_thread最终会调用do_fork根据参数的不同来创建一个进程或者内核线程。关系do_fork的实现我们在后面会做详细的介绍。当内核线程创建成功后就会调用设置的回调函数。

当kernel_thread(kernel_init)成功返回后,就会调用kernel_init内核线程,其实这时候1号进程已经产生了。1号进程的执行函数就是kernel_init, 这个函数被定义init/main.c中,接下来看下kernel_init主要做什么事情。

static int __ref kernel_init(void *unused)

{

int ret;

kernel_init_freeable();

/* need to finish all async __init code before freeing the memory */

async_synchronize_full();

ftrace_free_init_mem();

free_initmem();

mark_readonly();

/*

* Kernel mappings are now finalized - update the userspace page-table

* to finalize PTI.

*/

pti_finalize();

system_state = SYSTEM_RUNNING;

numa_default_policy();

rcu_end_inkernel_boot();

if (ramdisk_execute_command) {

ret = run_init_process(ramdisk_execute_command);

if (!ret)

return 0;

pr_err("Failed to execute %s (error %d)\n",

ramdisk_execute_command, ret);

}

/*

* We try each of these until one succeeds.

*

* The Bourne shell can be used instead of init if we are

* trying to recover a really broken machine.

*/

if (execute_command) {

ret = run_init_process(execute_command);

if (!ret)

return 0;

panic("Requested init %s failed (error %d).",

execute_command, ret);

}

if (!try_to_run_init_process("/sbin/init") ||

!try_to_run_init_process("/etc/init") ||

!try_to_run_init_process("/bin/init") ||

!try_to_run_init_process("/bin/sh"))

return 0;

panic("No working init found. Try passing init= option to kernel. "

"See Linux Documentation/admin-guide/init.rst for guidance.");

}kernel_init_freeable函数中就会做各种外设驱动的初始化。

最主要的工作就是通过execve执行/init可以执行文件。它按照配置文件/etc/initab的要求,完成系统启动工作,创建编号为1号、2号...的若干终端注册进程getty。每个getty进程设置其进程组标识号,并监视配置到系统终端的接口线路。当检测到来自终端的连接信号时,getty进程将通过函数execve()执行注册程序login,此时用户就可输入注册名和密码进入登录过程,如果成功,由login程序再通过函数execv()执行shell,该shell进程接收getty进程的pid,取代原来的getty进程。再由shell直接或间接地产生其他进程。

我们通常将init称为1号进程,其实在刚才kernel_init的时候1号线程已经创建成功,也可以理解kernel_init是1号进程的内核态,而我们所熟知的init进程是用户态的,调用execve函数之前属于内核态,调用之后就属于用户态了,执行的代码段与0号进程不在一样。

1号内核线程负责执行内核的部分初始化工作及进行系统配置,并创建若干个用于高速缓存和虚拟主存管理的内核线程。

至此1号进程就完美的创建成功了,而且也成功执行了init可执行文件。

3.2 init进程

随后,1号进程调用do_execve运行可执行程序init,并演变成用户态1号进程,即init进程。

init进程是linux内核启动的第一个用户级进程。init有许多很重要的任务,比如像启动getty(用于用户登录)、实现运行级别、以及处理孤立进程。

它按照配置文件/etc/initab的要求,完成系统启动工作,创建编号为1号、2号…的若干终端注册进程getty。

每个getty进程设置其进程组标识号,并监视配置到系统终端的接口线路。当检测到来自终端的连接信号时,getty进程将通过函数do_execve()执行注册程序login,此时用户就可输入注册名和密码进入登录过程,如果成功,由login程序再通过函数execv()执行shell,该shell进程接收getty进程的pid,取代原来的getty进程。再由shell直接或间接地产生其他进程。

上述过程可描述为:0号进程->1号内核进程->1号用户进程(init进程)->getty进程->shell进程

注意,上述过程描述中提到:1号内核进程调用执行init函数并演变成1号用户态进程(init进程),这里前者是init是函数,后者是进程。两者容易混淆,区别如下:

kernel_init函数在内核态运行,是内核代码

init进程是内核启动并运行的第一个用户进程,运行在用户态下。

一号内核进程调用execve()从文件/etc/inittab中加载可执行程序init并执行,这个过程并没有使用调用do_fork(),因此两个进程都是1号进程。

当内核启动了自己之后(已被装入内存、已经开始运行、已经初始化了所有的设备驱动程序和数据结构等等),通过启动用户级程序init来完成引导进程的内核部分。因此,init总是第一个进程(它的进程号总是1)。

当init开始运行,它通过执行一些管理任务来结束引导进程,例如检查文件系统、清理/tmp、启动各种服务以及为每个终端和虚拟控制台启动getty,在这些地方用户将登录系统。

After the system is fully up, init restarts getty for each terminal that the user has exited (so that the next user can log in). init also collects orphaned processes: when a process starts a child process and terminates before the child process, the child process immediately becomes a child process of init. This is important for various technical reasons, but it is also beneficial to know this because it makes it easier to understand process lists and process tree diagrams. There are very few variations of init. Most Linux distributions use sysinit (written by Miguel van Smoorenburg), which is based on System V's init design. The BSD version of UNIX has a different init. The main difference is the runlevel: System V has it and BSD doesn't (at least traditionally). This distinction is not primary. Here we only discuss sysvinit. Configure init to start getty:/etc/inittab file.

3.3 init program

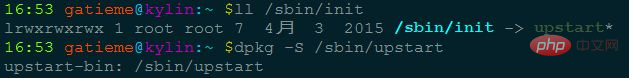

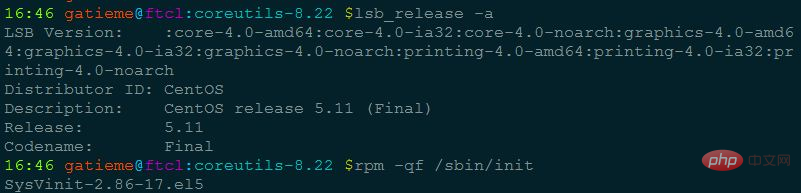

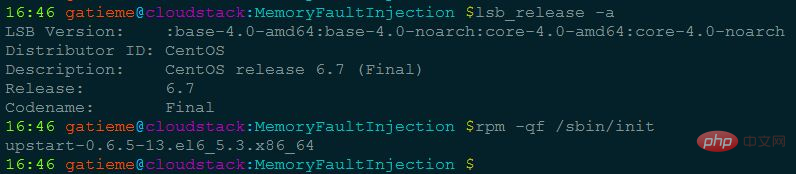

Process No. 1 executes the init program through execve to enter the user space and become the init process. So where is this init?

The kernel looks for init in several locations that were commonly used to place init in the past, but the most appropriate location for init (on Linux systems) is /sbin/init. If the kernel does not find init, it will try to run /bin/sh. If it still fails, the system startup will fail.

Therefore, the init program is a process that can be written by the user. If you want to see the source code of the init program, you can refer to it.

| init package | Description |

| sysvinit | Used by some earlier versions The initialization process tool is gradually fading out of the Linux history stage. sysvinit is a system V-style init system. As the name suggests, it originates from the System V series UNIX. It provides greater flexibility than BSD-style init systems. It is a UNIX init system that has been popular for decades and has been used by various Linux distributions. |

| initdaemon used by debian, Ubuntu and other systems | |

| Systemd It is the latest initialization system (init) in the Linux system. Its main design goal is to overcome the inherent shortcomings of sysvinit and improve the startup speed of the system |