Reinforcement learning is on the cover of Nature again, and the new paradigm of autonomous driving safety verification significantly reduces test mileage


Introducing dense deep reinforcement learning: using AI to verify AI.

Rapid advances in autonomous vehicle (AV) technology have us on the cusp of a transportation revolution on a scale not seen since the advent of the automobile a century ago. Autonomous driving technology has the potential to significantly improve traffic safety, mobility, and sustainability, and therefore has attracted the attention of industry, government agencies, professional organizations, and academic institutions.

The development of autonomous vehicles has come a long way over the past 20 years, especially with the advent of deep learning. By 2015, companies were starting to announce that they would be mass-producing AVs by 2020. But so far, no level 4 AV is available on the market.

There are many reasons for this, but the most important is that the safety performance of self-driving cars is still significantly lower than that of human drivers. For the average driver in the United States, the probability of a crash in the naturalistic driving environment (NDE) is approximately 1.9 × 10^−6 per mile. By comparison, the disengagement rate for state-of-the-art autonomous vehicles is about 2.0 × 10^−5 per mile, according to California's 2021 Disengagement Reports.

Note: The disengagement rate is an important indicator of the reliability of an autonomous driving system. It describes how many times the system requires the driver to take over per 1,000 miles of operation. The lower the disengagement rate, the more reliable the system. A disengagement rate of 0 means that the system has, to some extent, reached the driverless level.

Although the disengagement rate can be criticized for being biased, it has been widely used to evaluate the safety performance of autonomous vehicles.
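To put the two per-mile rates quoted above on a common footing, the following sketch converts each one to "miles between events" and to events per 1,000 miles (the unit used in the note). It is only arithmetic on the figures quoted in this article; the function and variable names are illustrative. A crash and a disengagement are different kinds of event, so the ratio is a rough indication rather than a like-for-like comparison.

```python
# Rates quoted in the article, in events per mile.
human_crash_rate = 1.9e-6        # average US driver, crashes per mile in the NDE
av_disengagement_rate = 2.0e-5   # state-of-the-art AVs, disengagements per mile


def miles_between_events(rate_per_mile: float) -> float:
    """Average distance, in miles, driven between two consecutive events."""
    return 1.0 / rate_per_mile


def per_thousand_miles(rate_per_mile: float) -> float:
    """Convert a per-mile rate into events per 1,000 miles."""
    return rate_per_mile * 1000.0


# How many times more frequent AV disengagements are than human crashes.
ratio = av_disengagement_rate / human_crash_rate

print(f"Human crash: one every {miles_between_events(human_crash_rate):,.0f} miles")
print(f"AV disengagement: one every {miles_between_events(av_disengagement_rate):,.0f} miles")
print(f"AV disengagements per 1,000 miles: {per_thousand_miles(av_disengagement_rate):.3f}")
print(f"Ratio of the two rates: {ratio:.1f}")
```

In other words, the quoted figures correspond to roughly one crash per 526,000 miles for human drivers against one disengagement per 50,000 miles for AVs, a gap of about one order of magnitude.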

A key bottleneck in improving the safety performance of autonomous vehicles is the low efficiency of safety verification. The prevailing approach is to test autonomous vehicles in the NDE through a combination of software simulation, closed test tracks and on-road testing. As a result, AV developers incur significant economic and time costs for evaluation, which hinders the progress of AV deployment.

Verifying AV safety performance in the NDE is very complex. For example, driving environments are complex in both space and time, so the variables required to define them are high-dimensional. As the dimensionality of the variables increases, the computational complexity grows exponentially. In this setting, deep learning models struggle to learn even when given large amounts of data.

In this paper, researchers from the University of Michigan, Ann Arbor, Tsinghua University and other institutions propose a dense deep reinforcement learning (D2RL) method to address this challenge.

The study appears on the cover of Nature.

- Paper address: https://www.nature.com/articles/s41586-023-05732-2

- Project address: https://github.com/michigan-traffic-lab/Dense-Deep-Reinforcement-Learning

The paper's first author, Shuo Feng, is currently a tenure-track Assistant Professor in the Department of Automation, Tsinghua University. He is also an Assistant Research Scientist at the University of Michigan Transportation Research Institute (UMTRI). He received his bachelor's and doctoral degrees from the Department of Automation, Tsinghua University, in 2014 and 2019 respectively, under the supervision of Professor Zhang Yi. From 2017 to 2019, he was a visiting doctoral student in Civil and Environmental Engineering at the University of Michigan, studying under Professor Henry X. Liu (the corresponding author of the paper).

Research Introduction

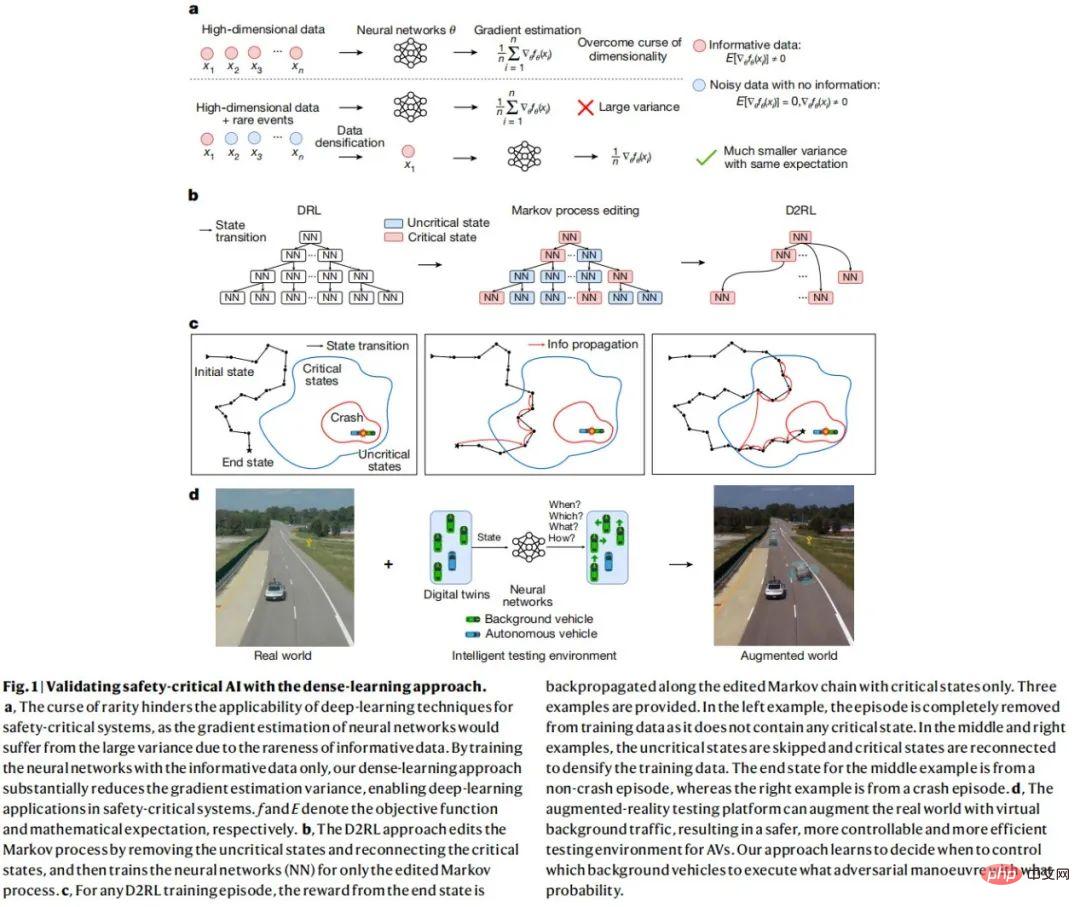

The basic idea of the D2RL method is to identify and remove non-safety-critical data and to train the neural network on the safety-critical data only. Since only a small portion of the data is safety-critical, the information in the remaining data is greatly densified.

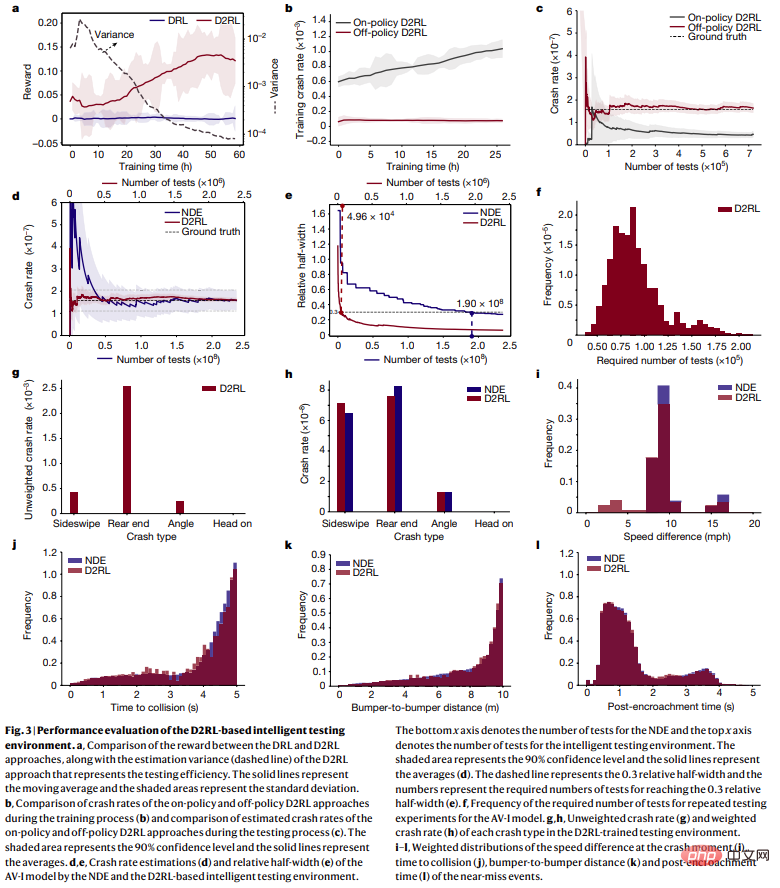

Compared with the DRL method, the D2RL method can significantly reduce the variance of the policy gradient estimate by multiple orders of magnitude without losing unbiasedness. This significant variance reduction can enable neural networks to learn and complete tasks that are intractable for DRL methods.
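The variance claim can be illustrated with a toy statistical model. The sketch below is not the paper's estimator: it assumes, purely for illustration, that every step of an episode contributes one noisy term to a gradient estimate, that non-critical steps contribute zero-mean noise, and that only the rare critical steps carry signal. All parameter values (`n_steps`, `p_critical`, `signal`, `noise_std`) are hypothetical.

```python
import random
import statistics


def sample_episode(rng, n_steps=500, p_critical=0.02, signal=1.0, noise_std=1.0):
    """Simulate one episode and return (full_estimate, dense_estimate).

    full_estimate sums the terms of every step; dense_estimate sums only
    the terms of critical steps, mimicking the removal of non-critical data.
    """
    full = 0.0
    dense = 0.0
    for _ in range(n_steps):
        if rng.random() < p_critical:
            # Critical step: the term carries signal plus noise.
            term = rng.gauss(signal, noise_std)
            dense += term
        else:
            # Non-critical step: zero-mean noise only (toy assumption).
            term = rng.gauss(0.0, noise_std)
        full += term
    return full, dense


rng = random.Random(0)
samples = [sample_episode(rng) for _ in range(1000)]
full_estimates = [full for full, _ in samples]
dense_estimates = [dense for _, dense in samples]

full_mean = statistics.mean(full_estimates)
dense_mean = statistics.mean(dense_estimates)
full_var = statistics.variance(full_estimates)
dense_var = statistics.variance(dense_estimates)

print(f"mean:     full={full_mean:.2f}  dense={dense_mean:.2f}")
print(f"variance: full={full_var:.1f}  dense={dense_var:.1f}")
```

Under these assumptions both estimators share the expectation `n_steps * p_critical * signal`, but the dense one drops the noise accumulated over the non-critical steps, so its variance is far smaller. The toy only shows the direction of the effect; the size of the reduction reported in the paper depends on the real problem.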

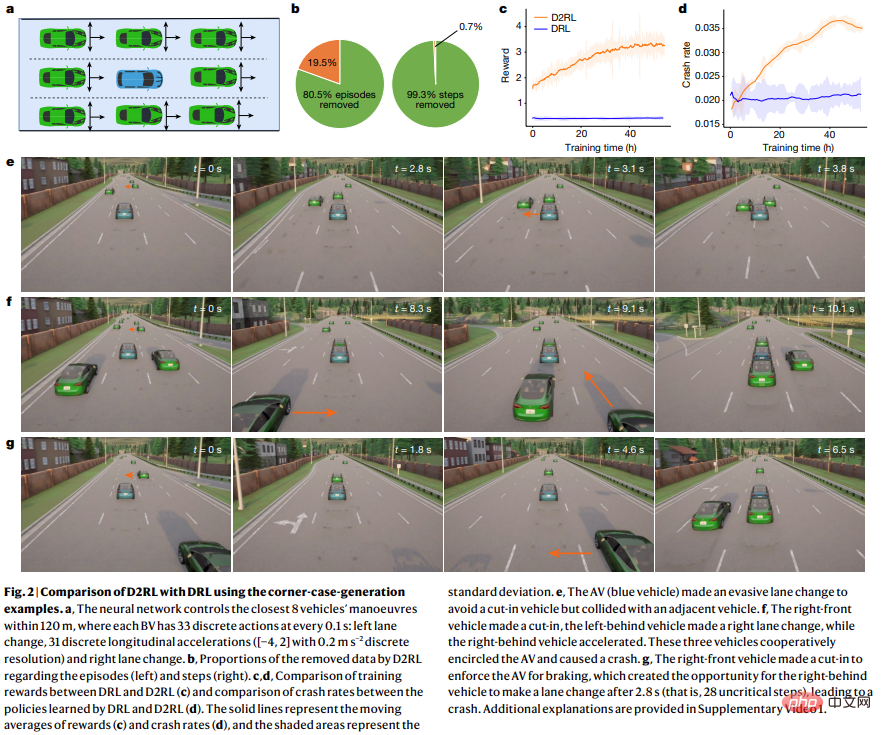

For AV testing, this research uses the D2RL method to train neural-network-controlled background vehicles (BVs) to learn which adversarial maneuver to execute and when, with the aim of improving testing efficiency. In this AI-based adversarial testing environment, D2RL can reduce the test mileage required for AVs by multiple orders of magnitude while keeping the testing unbiased.
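This article does not spell out how an adversarial test can stay unbiased. One standard technique for that purpose is importance sampling: adversarial maneuvers are sampled more often than they occur naturally, and each outcome is reweighted by the likelihood ratio. The sketch below assumes that technique and uses a deliberately simple model in which a crash can only follow an adversarial maneuver; every probability in it is hypothetical.

```python
import random


def estimate_crash_rate(rng, n_tests, p_sample, p_natural=1e-3, p_crash_given_adv=0.1):
    """Estimate the crash rate per test when adversarial maneuvers are
    sampled with probability p_sample instead of p_natural.

    Each crash is weighted by the likelihood ratio p_natural / p_sample,
    which keeps the estimate unbiased. With p_sample == p_natural the
    weight is 1 and this reduces to plain naturalistic testing.
    """
    total = 0.0
    for _ in range(n_tests):
        adversarial = rng.random() < p_sample
        crash = adversarial and rng.random() < p_crash_given_adv
        if crash:
            total += p_natural / p_sample
    return total / n_tests


true_rate = 1e-3 * 0.1  # p_natural * p_crash_given_adv in this toy model

rng = random.Random(0)
naturalistic_estimate = estimate_crash_rate(rng, n_tests=20_000, p_sample=1e-3)
adversarial_estimate = estimate_crash_rate(rng, n_tests=20_000, p_sample=0.5)

print(f"true rate:             {true_rate:.2e}")
print(f"naturalistic estimate: {naturalistic_estimate:.2e}")
print(f"adversarial estimate:  {adversarial_estimate:.2e}")
```

With the same number of tests, the naturalistic run observes only a handful of crashes and its estimate is very noisy, whereas the reweighted adversarial run observes many crashes and lands close to the true rate. This is the sense in which adversarial testing can cut the required mileage without biasing the result.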

The D2RL method can be applied to complex driving environments, including highways, intersections and roundabouts, which was not possible with previous scenario-based methods. Moreover, the method proposed in this study can create intelligent testing environments that use AI to verify AI. This is a paradigm shift that opens the door to accelerated testing and training of other safety-critical systems.

To demonstrate that the AI-based testing method is effective, this study trained the BVs on a large-scale naturalistic driving dataset and conducted both simulation experiments and field experiments on physical test tracks. The experimental results are shown in Figure 1.

Dense Deep Reinforcement Learning

To take advantage of AI technology, this study formulates the AV testing problem as a Markov Decision Process (MDP), in which the maneuvers of the BVs are decided based on the current state information. The study aims to train a policy (a DRL agent), modeled by a neural network, that controls the actions of the BVs interacting with the AV so as to maximize evaluation efficiency while ensuring unbiasedness. However, as mentioned above, because of the high dimensionality and the computational complexity, it is difficult or even impossible to learn an effective policy if the DRL method is applied directly.

Since most states are non-critical and provide no information about safety-critical events, D2RL focuses on removing the data from these non-critical states. For AV testing problems, many safety metrics can be used to identify critical states, with varying efficiency and effectiveness. The criticality metric used in this study is an outer approximation of the AV crash rate within a specific time horizon from the current state (e.g., 1 second). The study then edits the Markov chain by discarding the data for non-critical states and uses the remaining data for policy gradient estimation and bootstrapping in DRL training.
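The filtering step described above can be sketched in a few lines. This is a minimal illustration rather than the project's code: the `Step` record, its `criticality` field, the threshold and the sample episode are all hypothetical, and the criticality score is simply assumed to have been computed beforehand.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Step:
    t: int              # time index within the episode
    criticality: float  # hypothetical score, e.g. approximate crash rate within ~1 s
    reward: float


def densify(episode: List[Step], threshold: float = 0.0) -> List[Step]:
    """Keep only the steps whose criticality exceeds the threshold."""
    return [step for step in episode if step.criticality > threshold]


def edited_transitions(dense: List[Step]) -> List[Tuple[Step, Step]]:
    """Link each kept step directly to the next kept step, skipping the
    discarded non-critical steps in between."""
    return list(zip(dense, dense[1:]))


episode = [
    Step(t=0, criticality=0.0, reward=0.0),
    Step(t=1, criticality=0.0, reward=0.0),
    Step(t=2, criticality=0.3, reward=0.0),
    Step(t=3, criticality=0.0, reward=0.0),
    Step(t=4, criticality=0.8, reward=1.0),
]

dense = densify(episode)
print([step.t for step in dense])                           # [2, 4]
print([(a.t, b.t) for a, b in edited_transitions(dense)])   # [(2, 4)]
```

Only the kept steps and the transitions between them would then feed the policy gradient and bootstrapping updates; how the real implementation stores and batches them is not covered here.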

As shown in Figure 2, the advantage of D2RL over DRL is that it is able to maximize the reward during training.

AV Simulation Test

To evaluate the accuracy, efficiency, scalability and generality of the D2RL method, this study conducted simulation tests. For each test set, the study simulated traffic over a fixed driving distance and then recorded and analyzed the test results, as shown in Figure 3 below.

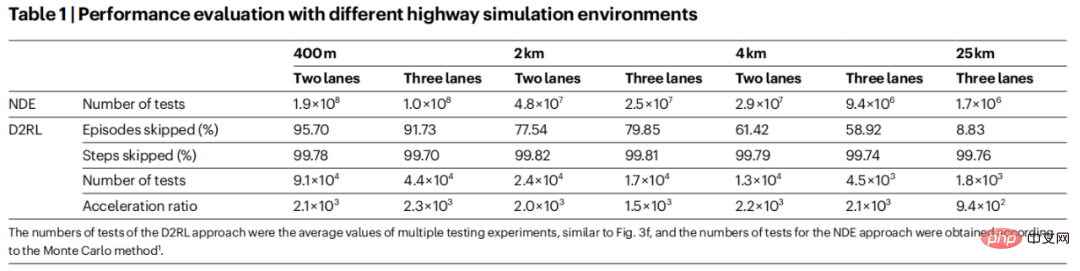

To further study the scalability and generalization of D2RL, this study ran experiments on the AV-I model with different numbers of lanes (2 and 3) and different driving distances (400 m, 2 km, 4 km and 25 km). The 25 km trips were examined because the average commuter in the United States travels approximately 25 kilometers one way. The results are shown in Table 1.
