When GPT-4 learns to read pictures and texts, a productivity revolution is unstoppable

"It's too complicated!"

After the back-to-back bombardment of GPT-4 and Microsoft 365 Copilot, I believe many people share this feeling.

Compared with GPT-3.5, GPT-4 has improved significantly in many respects. For example, on a simulated bar exam it moved from the bottom 10% of test takers to the top 10%. Of course, most people have little feel for such professional examinations. But one picture is enough to show how startling the improvement is:

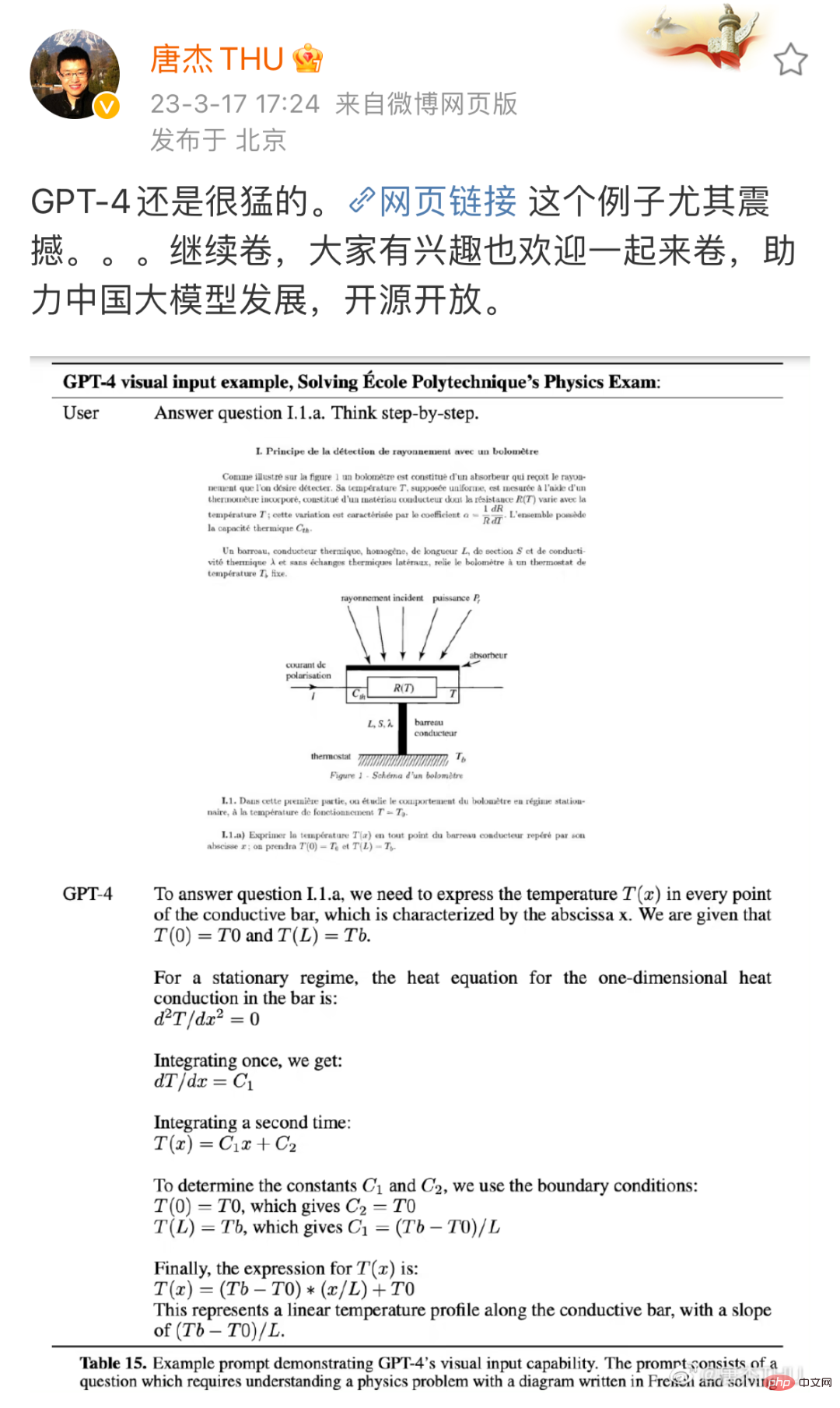

Source: Tang Jie, professor of the Department of Computer Science at Tsinghua University, Weibo. Link: https://m.weibo.cn/detail/4880331053992765

This is a physics question that GPT-4 is asked to solve step by step from the picture and the text. GPT-3.5 (here meaning the model that ChatGPT relied on before the upgrade) could not do this. On the one hand, GPT-3.5 was trained only to understand text, so it cannot read the picture in the question. On the other hand, its problem-solving ability is also weak: even the classic "chickens and rabbits in the same cage" puzzle can stump it. This time, both problems seem to have been solved beautifully.

Just when everyone thought this was already a big deal, Microsoft dropped another bombshell: GPT-4's capabilities have been integrated into a new application called Microsoft 365 Copilot. With its powerful image and text processing capabilities, Microsoft 365 Copilot can not only help you write all kinds of documents, but also easily convert documents into PowerPoint slides and automatically summarize Excel data into charts...

From the technology debut to the product launch, OpenAI and Microsoft only gave the public two days to respond. Seemingly overnight, a new productivity revolution has arrived.

Because the changes are happening so fast, both academia and industry are in a state of confusion and FOMO (fear of missing out). Right now, everyone wants an answer to the same questions: What can we do in this wave? What opportunities are available? The demo released by Microsoft points to a clear opening: intelligent processing of images and text.

In real-life scenarios, many jobs in all walks of life involve image and text processing, such as organizing unstructured data into charts, writing reports based on charts, and extracting useful information from massive amounts of image and text data. Because of this, the impact of this revolution may be far more profound than many people imagine. A widely discussed paper recently released by OpenAI and the Wharton School estimates this impact: about 80% of the U.S. workforce may have at least 10% of their work tasks affected by the introduction of GPT, and about 19% of workers may see at least 50% of their tasks affected. It is foreseeable that a large share of this work involves intelligent image and text processing.

With this as the entry point, what research or engineering efforts are worth exploring? At the recent CSIG Enterprise Tour event, hosted by the Chinese Society of Image and Graphics (CSIG) and jointly organized by Hehe Information and the CSIG Document Image Analysis and Recognition Professional Committee, many researchers from academia and industry held in-depth discussions on "intelligent image and text processing technology and multi-scenario application technology", which may offer some inspiration to researchers and practitioners who follow the field of intelligent image and text processing.

Processing graphics and text, starting from the underlying vision

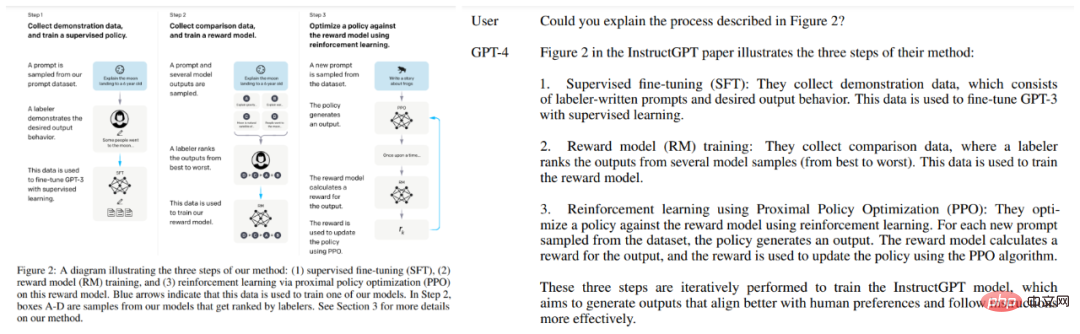

As mentioned earlier, GPT-4's image and text processing capabilities are striking. In addition to the physics question above, OpenAI's technical report cites other examples, such as having GPT-4 read pages of a paper supplied as images.

However, a lot of basic work may still need to be done before this technology can be widely deployed, and the underlying vision is one part of it.

The characteristics of the underlying vision (commonly called low-level vision) are very clear: the input is an image, and the output is also an image. Image preprocessing, filtering, restoration and enhancement all fall into this category.

"The theories and methods of underlying vision are widely used in many fields, such as mobile phones, medical image analysis, and security monitoring. Enterprises and institutions that value the quality of image and video content must pay attention to research in the direction of underlying vision. If the underlying vision is not done well, many high-level vision systems (such as detection, recognition, and understanding) cannot be truly deployed," said Guo Fengjun, Image Algorithm R&D Director at Hehe Information, in his talk at the CSIG Enterprise Tour event.

How to understand this sentence? We can look at some examples:

Unlike the ideal situations shown in the OpenAI and Microsoft demos, real-world images and text often come in challenging forms, with deformation, shadows, and moiré patterns, all of which make subsequent recognition and understanding harder. The goal of Guo Fengjun's team is to solve these problems at the initial stage.

To this end, they divided the task into several modules, including region of interest (RoI) extraction, deformation correction, image restoration (such as shadow and moiré removal), and quality enhancement (such as sharpening and clarity enhancement).

The combination of these technologies can create some very interesting applications. After years of exploration, these modules have achieved quite good results, and the related technology has been applied to the company's intelligent text recognition product "Scanner".
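The modular, image-in and image-out structure described above can be sketched as a chain of functions. This is only a toy illustration under stated assumptions, not the team's implementation: the stage functions `extract_roi` and `sharpen`, the `background` threshold, and the `run_pipeline` helper are hypothetical names, and simple classical operations (bounding-box cropping, unsharp masking) stand in for the learned modules.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image, pixel values in [0, 255]


def extract_roi(img: Image, background: float = 250.0) -> Image:
    """Toy RoI extraction: crop to the bounding box of non-background pixels."""
    rows = [r for r, row in enumerate(img) if any(p < background for p in row)]
    cols = [c for c in range(len(img[0])) if any(row[c] < background for row in img)]
    if not rows or not cols:
        return img  # only background found; leave the image unchanged
    return [row[cols[0]:cols[-1] + 1] for row in img[rows[0]:rows[-1] + 1]]


def box_blur(img: Image) -> Image:
    """3x3 mean filter; border pixels are replicated."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        out_row = []
        for x in range(w):
            total = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out_row.append(total / 9.0)
        out.append(out_row)
    return out


def sharpen(img: Image, amount: float = 1.0) -> Image:
    """Toy quality enhancement via unsharp masking, clipped to [0, 255]."""
    blurred = box_blur(img)
    return [
        [min(255.0, max(0.0, p + amount * (p - b))) for p, b in zip(row, blur_row)]
        for row, blur_row in zip(img, blurred)
    ]


def run_pipeline(img: Image, stages: List[Callable[[Image], Image]]) -> Image:
    """Apply each stage in order; every stage maps an image to an image."""
    for stage in stages:
        img = stage(img)
    return img


# A white 4x4 page with a dark 2x2 block in the middle.
page = [[255.0] * 4 for _ in range(4)]
for y in (1, 2):
    for x in (1, 2):
        page[y][x] = 100.0

result = run_pipeline(page, [extract_roi, sharpen])
```

Because every stage has the same signature, stages such as deformation correction or shadow removal could be slotted into the list without changing the rest of the chain.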

From characters to tables, and then to whole documents, reading pictures and text step by step

After the image has been processed, the next step is to recognize the text and graphics it contains. This is also very fine-grained work, and may even be done character by character.

In many real-life scenarios, characters may not necessarily appear in standardized print form, which brings challenges to character recognition.

Take education as an example. If you were a teacher, you would certainly want AI to grade all of your students' homework for you and, at the same time, summarize how well the students have mastered each part of the material. Ideally it would also list the incorrect answers and miswritten characters, along with suggested corrections. Associate Professor Du Jun of the National Engineering Laboratory of Speech and Language Information Processing at the University of Science and Technology of China is working in this area.

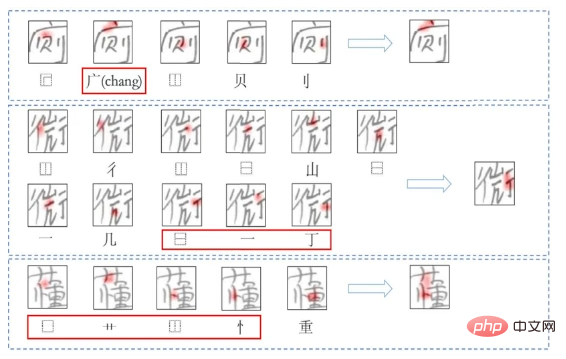

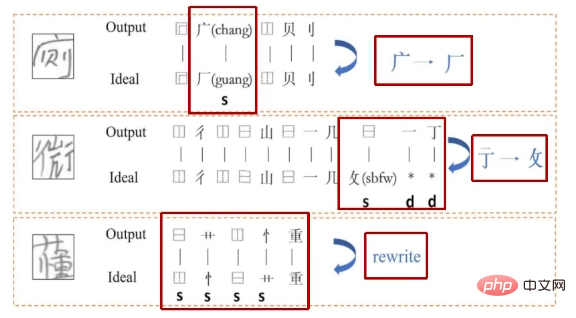

Specifically, they created a radical-based system for recognizing, generating and evaluating Chinese characters, because there are far fewer radicals to model than there are whole characters. Recognition and generation are optimized jointly, which is a bit like the way reading and writing reinforce each other when students learn. In the past, most evaluation work focused on the grammatical level, but Du Jun's team designed a method that can find miswritten characters directly from the image and explain the errors in detail. This method should be very useful in scenarios such as automated grading.
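The point about radicals can be made concrete with a small sketch. It is a hand-built toy, not the team's system: the `DECOMPOSITION` table covers only nine characters, ignores spatial structure (left-right versus enclosing), and the `diagnose` helper is a hypothetical stand-in for the image-based error explanation described above. It only shows that radicals form a smaller vocabulary than whole characters and that a radical-level comparison can say which part of a character is wrong.

```python
from typing import Dict, List, Tuple

# Hand-written toy table: character -> radical sequence (structure ignored).
DECOMPOSITION: Dict[str, Tuple[str, ...]] = {
    "好": ("女", "子"),
    "妈": ("女", "马"),
    "如": ("女", "口"),
    "吗": ("口", "马"),
    "叶": ("口", "十"),
    "什": ("亻", "十"),
    "仔": ("亻", "子"),
    "们": ("亻", "门"),
    "问": ("门", "口"),
}

# The radical vocabulary is smaller than the character vocabulary.
radical_vocab = sorted({r for parts in DECOMPOSITION.values() for r in parts})


def diagnose(expected_char: str, observed: Tuple[str, ...]) -> List[Tuple[int, str, str]]:
    """Return (position, expected radical, observed radical) for each mismatch."""
    expected = DECOMPOSITION[expected_char]
    return [
        (i, e, o)
        for i, (e, o) in enumerate(zip(expected, observed))
        if e != o
    ]


print(len(DECOMPOSITION), "characters built from", len(radical_vocab), "radicals")
# A student meant to write 好 but the right-hand part was recognized as 马.
print(diagnose("好", ("女", "马")))
```

In this toy table nine characters already share seven radicals, and the gap widens quickly as the character set grows.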

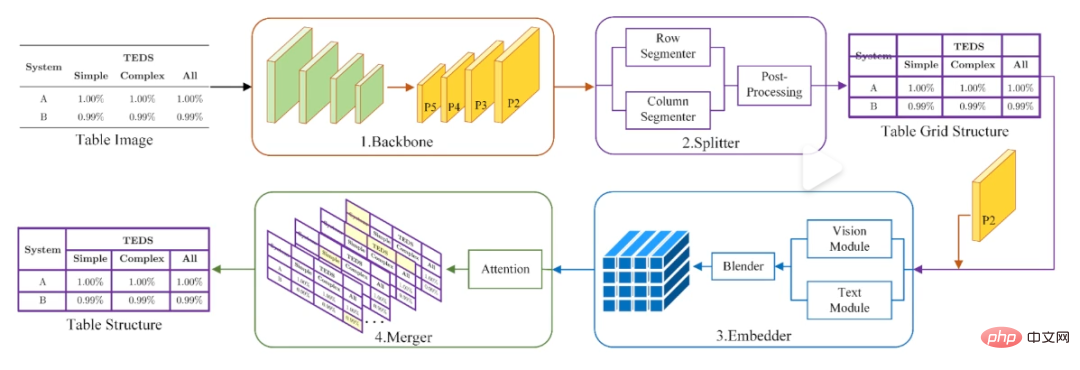

Beyond text, recognizing and processing tables is a major difficulty, because you not only have to recognize the content inside, but also work out the structural relationships among the pieces of content, and some tables do not even have ruling lines. To this end, Du Jun's team designed a "first segment, then merge" method: first split the table image into a series of basic grid cells, and then refine the result by merging cells.

Du Jun's team's "first segment, then merge" table recognition method.

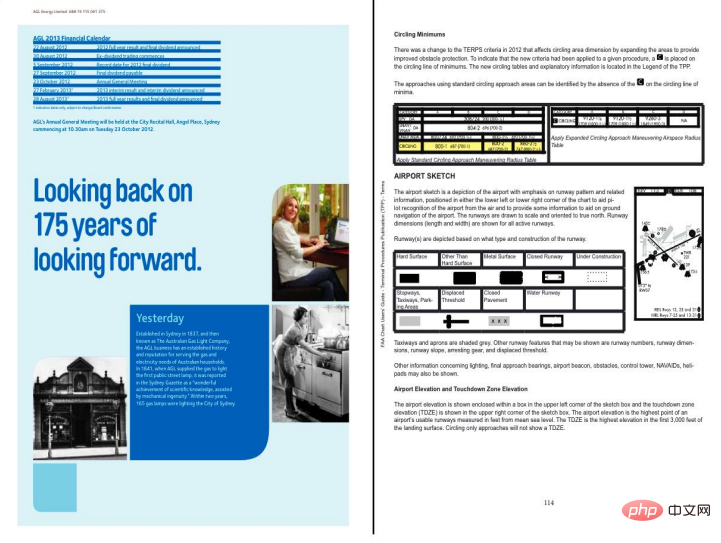
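The "first segment, then merge" idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the team's model: in the described method the separator positions and merge decisions would be predicted from the table image, whereas here they are simply passed in as inputs, and the names `split_into_grid` and `merge_cells` are hypothetical.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]           # (row, col) index in the basic grid
Box = Tuple[int, int, int, int]  # (top, left, bottom, right) in pixels


def split_into_grid(row_lines: List[int], col_lines: List[int]) -> Dict[Cell, Box]:
    """Segment step: sorted separator positions define the basic grid cells."""
    grid: Dict[Cell, Box] = {}
    for r in range(len(row_lines) - 1):
        for c in range(len(col_lines) - 1):
            grid[(r, c)] = (row_lines[r], col_lines[c], row_lines[r + 1], col_lines[c + 1])
    return grid


def merge_cells(grid: Dict[Cell, Box], merges: List[Tuple[Cell, Cell]]) -> List[Box]:
    """Merge step: union the listed cell pairs and return one box per final cell."""
    parent = {cell: cell for cell in grid}

    def find(x: Cell) -> Cell:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in merges:
        parent[find(a)] = find(b)

    boxes: Dict[Cell, Box] = {}
    for cell, (t, l, b, r) in grid.items():
        root = find(cell)
        if root in boxes:
            bt, bl, bb, br = boxes[root]
            boxes[root] = (min(bt, t), min(bl, l), max(bb, b), max(br, r))
        else:
            boxes[root] = (t, l, b, r)
    return sorted(boxes.values())


# A 2x3 basic grid whose first two header cells form one spanning cell.
grid = split_into_grid(row_lines=[0, 20, 40], col_lines=[0, 50, 100, 150])
cells = merge_cells(grid, merges=[((0, 0), (0, 1))])
```

Splitting first gives a regular grid even for tables without ruling lines, and the merge step then recovers spanning cells by joining neighbors.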

Of course, all of this work ultimately feeds into structuring and understanding documents as a whole. In real-life settings, most documents a model faces run to more than one page (a paper, for example). In this direction, the work of Du Jun's team focuses on classifying document elements across pages and restoring document structure across pages. However, these methods still have limitations in scenarios with multiple layouts.

Large model, multi-modality, world model... Where is the future?

Once we reach document-level image and text processing and understanding, we are in fact not far from GPT-4. "After the multi-modal GPT-4 came out, we were also thinking about whether we could do something in these areas," Du Jun said at the event. I believe many researchers and practitioners in the field of image and text processing have had the same thought.

The goal of the GPT series of models has always been to improve generality and ultimately achieve artificial general intelligence (AGI). The powerful image and text understanding demonstrated by GPT-4 this time is an important part of that general capability. For those who want to build a model with similar capabilities, OpenAI has offered some points of reference, but it has also left many mysteries and unsolved problems.

First of all, the success of GPT-4 shows that the multi-modal approach to large models is feasible. However, which problems should be studied in large models, and how to cope with the enormous computing power that multi-modal models require, are both challenges facing researchers.

On the first question, Qiu Xipeng, a professor at the School of Computer Science at Fudan University, offered some directions worth considering. From information previously disclosed by OpenAI, we know that ChatGPT depends on several key techniques, including in-context learning, chain of thought, and learning from instructions. Qiu Xipeng pointed out in his talk that many questions in these directions remain open, such as where these abilities come from, how to keep improving them, and how to use them to transform existing learning paradigms. He also discussed the capabilities that should be considered when building conversational large language models and the research directions that could help align these models with the real world.

On the second question, Ji Rongrong, Nanqiang Distinguished Professor at Xiamen University, contributed an important idea. He believes that there is a natural connection between language and vision, and that joint learning of the two is the general trend. But in the face of this wave, the resources of any single university or laboratory are insignificant. So, starting from Xiamen University where he works, he is now trying to persuade researchers to pool their computing power and form a network for building large multi-modal models. In fact, at an event some time ago, Academician E Weinan, who focuses on AI for Science, expressed similar views, hoping that all sectors would "dare to pool resources in original innovation directions."

However, will the path taken by GPT-4 definitely lead to general artificial intelligence? Some researchers are skeptical about this, and Turing Award winner Yann LeCun is one of them. He believes that these current large models have staggering demands for data and computing power, but their learning efficiency is very low (such as self-driving cars). Therefore, he created a theory called "world model" (that is, an internal model of how the world works), believing that learning a world model (which can be understood as running a simulation for the real world) may be the key to achieving AGI. At the event, Professor Yang Xiaokang of Shanghai Jiao Tong University shared their work in this direction. Specifically, his team focused on the world model of visual intuition (because visual intuition has a large amount of information), trying to model vision, intuition, and the perception of time and space. Finally, he also emphasized the importance of the intersection of mathematics, physics, information cognition and computer disciplines for this type of research.

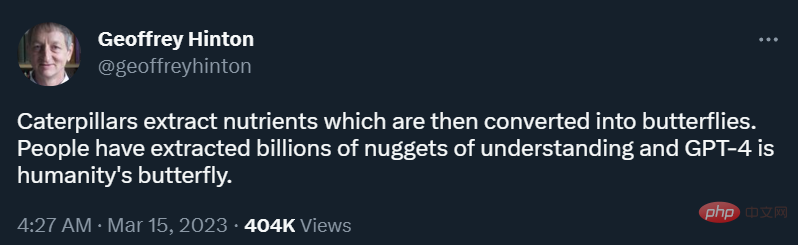

"Caterpillars extract nutrients from food and then turn into butterflies. People have extracted billions of clues to understand that GPT-4 is the butterfly for humans." The day after GPT-4 was released , Geoffrey Hinton, the father of deep learning, tweeted.

Currently, no one can determine how big a hurricane this butterfly will set off. But to be sure, this is not a perfect butterfly yet, and the entire AGI world puzzle is not yet complete. Every researcher and practitioner still has opportunities.

The above is the detailed content of When GPT-4 learns to read pictures and texts, a productivity revolution is unstoppable. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

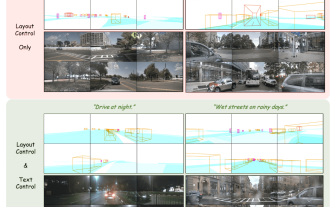

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

How to edit photos on iPhone using iOS 17

Nov 30, 2023 pm 11:39 PM

How to edit photos on iPhone using iOS 17

Nov 30, 2023 pm 11:39 PM

Mobile photography has fundamentally changed the way we capture and share life’s moments. The advent of smartphones, especially the iPhone, played a key role in this shift. Known for its advanced camera technology and user-friendly editing features, iPhone has become the first choice for amateur and experienced photographers alike. The launch of iOS 17 marks an important milestone in this journey. Apple's latest update brings an enhanced set of photo editing features, giving users a more powerful toolkit to turn their everyday snapshots into visually engaging and artistically rich images. This technological development not only simplifies the photography process but also opens up new avenues for creative expression, allowing users to effortlessly inject a professional touch into their photos

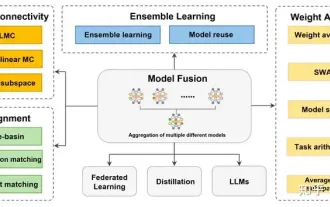

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion

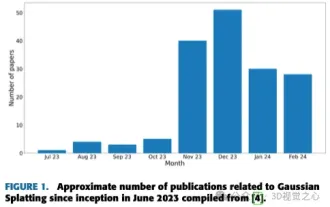

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up