How does GPT-4 improve on ChatGPT? Jensen Huang holds a 'fireside chat' with an OpenAI co-founder

The most important difference between ChatGPT and GPT-4 is that the base GPT-4 builds on predicts the next word with higher accuracy. The better a neural network can predict the next word in a text, the better it understands that text.

Produced by Big Data Digest

Author: Caleb

What kind of sparks will Nvidia create when it encounters OpenAI?

Just now, Nvidia founder and CEO Jensen Huang had an in-depth conversation with OpenAI co-founder Ilya Sutskever in a GTC fireside chat.

Video link:

https://www.nvidia.cn/gtc-global/session-catalog/?tab.catalogallsessinotallow=16566177511100015Kus #/session/1669748941314001t6Nv

Two days ago, OpenAI launched GPT-4, its most powerful artificial intelligence model to date. On its official website, OpenAI calls GPT-4 "OpenAI's most advanced system," one that "can produce safer and more useful responses."

Sutskever also said during the talk that GPT-4 marks "considerable improvements" in many aspects compared to ChatGPT, noting that the new model can read images and text. "In some future version, [users] may get a chart" in response to questions and inquiries, he said.

With ChatGPT and GPT-4 popular around the world, they were naturally the focus of the conversation. Beyond GPT-4 and its predecessors, including ChatGPT, Jensen Huang and Sutskever also discussed the capabilities, limitations, and inner workings of deep neural networks, as well as predictions for the future of AI.

Let's take a closer look at the conversation with Big Data Digest.

Starting out when no one cared about network scale or compute scale

When many people hear Sutskever's name, the first thing that comes to mind is OpenAI and its AI products. But his resume goes back further: a postdoc with Andrew Ng, a research scientist at Google Brain, and a co-developer of the Seq2Seq model.

It can be said that from the beginning, deep learning has been bound to Sutskever.

Asked about his view of deep learning, Sutskever said that, looking back from today, deep learning has indeed changed the world. His personal starting point, however, was his intuition about the huge potential impact of AI, his strong interest in consciousness and human experience, and his belief that progress in AI would help answer those questions.

In 2002-03, people generally believed that learning was something only humans could do and that computers could not learn. Giving computers the ability to learn would therefore be a major breakthrough for AI.

This also became an opportunity for Sutskever to officially enter the AI field.

So Sutskever sought out Geoffrey Hinton, who was at the same university. In his view, the neural networks Hinton was working on were the breakthrough, because a neural network is in effect a parallel computer that learns and programs itself automatically.

At that time, no one cared about network scale or compute scale. People trained networks with only 50 or 100 neurons; a few hundred neurons already counted as large, and one million parameters was considered huge.

In addition, they could only run unoptimized CPU code, because no one understood BLAS. They used optimized Matlab for experiments, such as working out which questions were worth asking and comparing.

The problem was that these were scattered experiments that could not really drive technical progress.

Building a neural network for computer vision

At that time, Sutskever realized that supervised learning was the way forward.

To him this was not just an intuition but something beyond dispute: if a neural network is deep enough and large enough, it can solve hard tasks. But people had not yet focused on deep, large neural networks, or even on neural networks at all.

Finding a good solution requires a suitably large data set and a lot of computation.

ImageNet was that data set. At the time, ImageNet was a very difficult data set, and training a large convolutional neural network on it required matching computing power.

This is where the GPU came in. At Geoffrey Hinton's suggestion, they turned to GPUs, and with the arrival of the ImageNet data set they found that the convolutional neural network was a very good fit for the GPU, so training could be made very fast and the networks could grow larger and larger.

The result directly and significantly broke the record in computer vision. It was not a continuation of previous methods; the key lay in the difficulty and scope of the data set itself.

OpenAI: From 100 People to ChatGPT

Sutskever admitted that in the early days of OpenAI, they were not entirely sure how to move the project forward.

At the beginning of 2016, neural networks were far less developed and there were far fewer researchers than there are now. Sutskever recalled that only about 100 people were working in the field at the time, most of them at Google or DeepMind.

But they had two big ideas at the time.

One of them was unsupervised learning through compression. In 2016, unsupervised learning was an unsolved problem in machine learning, and no one knew how to make it work. Compression was not something people usually talked about, but recently everyone suddenly realized that GPT actually compresses its training data.

Mathematically speaking, training these autoregressive generative models compresses the data, and intuitively you can see why it works: if the data is compressed well enough, you must have extracted all the hidden information in it. This led directly to OpenAI's research on the sentiment neuron.
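The link between prediction and compression can be illustrated with a small sketch. It is not OpenAI's method: it swaps in a character-level bigram count model for the neural network, and the function names and toy text are hypothetical. The idea it shows is standard, though: a model that assigns probability p to the next character can encode that character in about -log2(p) bits, so better prediction means fewer bits.

```python
import math
from collections import Counter, defaultdict


def uniform_bits_per_char(text):
    """Bits per character when every symbol in the alphabet is equally likely."""
    return math.log2(len(set(text)))


def bigram_bits_per_char(train, test):
    """Average -log2 p(next char | previous char) under a smoothed bigram model.

    The bigram model is only a stand-in for a learned predictor.
    """
    alphabet = set(train) | set(test)
    counts = defaultdict(Counter)
    for prev, nxt in zip(train, train[1:]):
        counts[prev][nxt] += 1

    total_bits = 0.0
    steps = 0
    for prev, nxt in zip(test, test[1:]):
        row = counts[prev]
        # Add-one smoothing keeps unseen pairs at a non-zero probability.
        p = (row[nxt] + 1) / (sum(row.values()) + len(alphabet))
        total_bits += -math.log2(p)
        steps += 1
    return total_bits / steps


# Toy text, chosen only for illustration.
text = "the cat sat on the mat and the cat ate the rat " * 20
print("uniform code:", round(uniform_bits_per_char(text), 2), "bits/char")
print("bigram model:", round(bigram_bits_per_char(text, text), 2), "bits/char")
```

On this toy text the bigram predictor needs fewer bits per character than the uniform code, which is the sense in which a better next-character predictor is a better compressor.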

When they trained an LSTM to predict the next character of Amazon reviews, they found that if the next character is predicted well enough, a neuron emerges inside the LSTM that corresponds to the review's sentiment. This was a good demonstration of unsupervised learning and also validated the idea of next-character prediction.

But where does the data for unsupervised learning come from? The hard part of unsupervised learning, Sutskever said, is less about the data and more about why you are doing it: realizing that training a neural network to predict the next character is worth pursuing and exploring, because from that task the network learns an understandable representation.

Another big idea is reinforcement learning. Sutskever has always believed that bigger is better. At OpenAI, one of their goals is to figure out the right way to scale.

The first really big project OpenAI completed was getting an agent to play the strategy game Dota 2. OpenAI trained a reinforcement learning agent to play against itself, with the goal of reaching a level at which it could compete with human players.

The shift from reinforcement learning on Dota to reinforcement learning from human feedback, combined with the GPT base model, produced today's ChatGPT.

How OpenAI trains a large neural network

When OpenAI trains a large neural network to accurately predict the next word across many different texts from the Internet, what it is doing is learning a world model.

It may look as if the network is only learning statistical correlations in text, but learning those correlations well is what compresses the knowledge so effectively. What the neural network learns is a representation of the process that generated the text. The text is in effect a projection of the world, so the network learns more and more about the world, about people, and about society. That is what the neural network really learns from the task of accurately predicting the next word.

At the same time, the more accurate the prediction of the next word, the higher the fidelity and the higher the resolution of the world obtained in the process. This is the role of the pre-training phase, but pre-training alone does not make the neural network behave the way we want it to.

What a language model really tries to do is answer this question: given some random text from the Internet that starts with some prefix or prompt, how would it continue?

Of course, it can fill in text of the kind found on the Internet, but that is not what we actually want, so additional training is required: fine-tuning, reinforcement learning from human teachers, and other forms of AI assistance.
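The "complete a prefix" behavior can be sketched with a toy model. This is only an illustration: a word-level bigram table stands in for the large neural network, decoding is greedy, and the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.split()
    table = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def complete(table, prefix, max_new_words=5):
    """Greedily extend a non-empty prefix with the most frequent next word."""
    words = prefix.split()
    for _ in range(max_new_words):
        followers = table.get(words[-1])
        if not followers:
            break  # the last word was never seen with a follower
        # Highest count wins; ties are broken alphabetically for determinism.
        best = min(followers, key=lambda w: (-followers[w], w))
        words.append(best)
    return " ".join(words)


# Toy corpus, chosen only for illustration.
corpus = (
    "the detective gathers the clues . "
    "the detective names the culprit . "
    "the detective gathers everyone ."
)
table = train_bigram(corpus)
print(complete(table, "the detective", max_new_words=2))
# prints: the detective gathers everyone
```

The model simply continues the prefix with whatever most often followed in its training text, which is why pre-training alone does not yield an assistant that behaves as intended.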

But this is not about teaching new knowledge, but about communicating with it and conveying to it what we want it to be, which also includes boundaries. The better this process is done, the more useful and reliable the neural network will be, and the higher the fidelity of the boundaries will be.

Let’s talk about GPT-4 again

Not long after ChatGPT became the application with the fastest-growing user base, GPT-4 was officially released.

When talking about the differences between the two, Sutskever said that GPT-4 has achieved considerable improvements in many dimensions compared to ChatGPT.

The most important difference between ChatGPT and GPT-4 is that the base GPT-4 builds on predicts the next word with higher accuracy. The better a neural network can predict the next word in a text, the better it understands that text.

Suppose you are reading a detective novel. The plot is complex, with many storylines and characters interwoven and many mysterious clues buried along the way. In the last chapter, the detective gathers all the clues, calls everyone together, and announces that he will now reveal the culprit, and that person is...

This is what GPT-4 can predict.

People say that deep learning cannot reason logically. But whether it is this example or some of the things that GPT can do, it shows a certain degree of reasoning ability.

Sutskever responded that logical reasoning can be defined this way: if, when making the next decision, you can think about it in a certain way, you may get a better answer. It remains to be seen how far neural networks can go, and OpenAI has not yet fully tapped their potential.

Some neural networks actually already have this kind of ability, but most of them are not reliable enough. Reliability is the biggest obstacle to making these models useful, and it is also a major bottleneck of current models. It’s not about whether the model has a specific capability, but how much capability it has.

Sutskever also said that GPT-4 did not have built-in search when it was released. It is simply a very good next-word predictor, but he said it is fully capable of using search and would be better with it.

Another significant improvement in GPT-4 is its ability to respond to and process images, in which multimodal learning plays an important role. Sutskever said multimodality matters in two ways. First, multimodality, especially vision, is useful for neural networks; second, beyond learning from text, knowledge about the world can also be learned from images.

The future of artificial intelligence

When it comes to using AI to train AI, Sutskever said this part of the data should not be ignored.

It is difficult to predict the development of language models in the future, but in Sutskever’s view, there is good reason to believe that this field will continue to progress, and AI will continue to shock mankind with its strength at the boundaries of its capabilities. The reliability of AI is determined by whether it can be trusted, and it will definitely reach a point where it can be completely trusted in the future.

If it doesn’t fully understand, it will also ask questions to figure it out, or tell you that it doesn’t know. These are the areas where AI usability has the greatest impact and will see the greatest progress in the future.

We now face a challenge: when you ask a neural network to summarize a long document, how do you ensure that no important detail has been overlooked? If the summary covers every point that is important enough that any reader would agree on it, then the summary can be accepted as reliable.

The same applies to whether the neural network clearly follows the user's intent.

We will see more and more of this work over the next two years, making the technology more and more reliable.

Related report: https://blogs.nvidia.com/blog/2023/03/22/sutskever-openai-gtc/

The above is the detailed content of What improvement does GPT-4 have over ChatGPT? Jen-Hsun Huang held a 'fireside chat' with OpenAI co-founder. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

ChatGPT now allows free users to generate images by using DALL-E 3 with a daily limit

Aug 09, 2024 pm 09:37 PM

ChatGPT now allows free users to generate images by using DALL-E 3 with a daily limit

Aug 09, 2024 pm 09:37 PM

DALL-E 3 was officially introduced in September of 2023 as a vastly improved model than its predecessor. It is considered one of the best AI image generators to date, capable of creating images with intricate detail. However, at launch, it was exclus

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

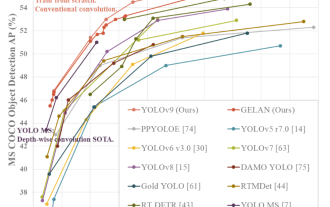

YOLO is immortal! YOLOv9 is released: performance and speed SOTA~

Feb 26, 2024 am 11:31 AM

YOLO is immortal! YOLOv9 is released: performance and speed SOTA~

Feb 26, 2024 am 11:31 AM

Today's deep learning methods focus on designing the most suitable objective function so that the model's prediction results are closest to the actual situation. At the same time, a suitable architecture must be designed to obtain sufficient information for prediction. Existing methods ignore the fact that when the input data undergoes layer-by-layer feature extraction and spatial transformation, a large amount of information will be lost. This article will delve into important issues when transmitting data through deep networks, namely information bottlenecks and reversible functions. Based on this, the concept of programmable gradient information (PGI) is proposed to cope with the various changes required by deep networks to achieve multi-objectives. PGI can provide complete input information for the target task to calculate the objective function, thereby obtaining reliable gradient information to update network weights. In addition, a new lightweight network framework is designed

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The second generation Ameca is here! He can communicate with the audience fluently, his facial expressions are more realistic, and he can speak dozens of languages.

Mar 04, 2024 am 09:10 AM

The humanoid robot Ameca has been upgraded to the second generation! Recently, at the World Mobile Communications Conference MWC2024, the world's most advanced robot Ameca appeared again. Around the venue, Ameca attracted a large number of spectators. With the blessing of GPT-4, Ameca can respond to various problems in real time. "Let's have a dance." When asked if she had emotions, Ameca responded with a series of facial expressions that looked very lifelike. Just a few days ago, EngineeredArts, the British robotics company behind Ameca, just demonstrated the team’s latest development results. In the video, the robot Ameca has visual capabilities and can see and describe the entire room and specific objects. The most amazing thing is that she can also

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

750,000 rounds of one-on-one battle between large models, GPT-4 won the championship, and Llama 3 ranked fifth

Apr 23, 2024 pm 03:28 PM

Regarding Llama3, new test results have been released - the large model evaluation community LMSYS released a large model ranking list. Llama3 ranked fifth, and tied for first place with GPT-4 in the English category. The picture is different from other benchmarks. This list is based on one-on-one battles between models, and the evaluators from all over the network make their own propositions and scores. In the end, Llama3 ranked fifth on the list, followed by three different versions of GPT-4 and Claude3 Super Cup Opus. In the English single list, Llama3 overtook Claude and tied with GPT-4. Regarding this result, Meta’s chief scientist LeCun was very happy and forwarded the tweet and

How to install chatgpt on mobile phone

Mar 05, 2024 pm 02:31 PM

How to install chatgpt on mobile phone

Mar 05, 2024 pm 02:31 PM

Installation steps: 1. Download the ChatGTP software from the ChatGTP official website or mobile store; 2. After opening it, in the settings interface, select the language as Chinese; 3. In the game interface, select human-machine game and set the Chinese spectrum; 4 . After starting, enter commands in the chat window to interact with the software.

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The world's most powerful model changed hands overnight, marking the end of the GPT-4 era! Claude 3 sniped GPT-5 in advance, and read a 10,000-word paper in 3 seconds. His understanding is close to that of humans.

Mar 06, 2024 pm 12:58 PM

The volume is crazy, the volume is crazy, and the big model has changed again. Just now, the world's most powerful AI model changed hands overnight, and GPT-4 was pulled from the altar. Anthropic released the latest Claude3 series of models. One sentence evaluation: It really crushes GPT-4! In terms of multi-modal and language ability indicators, Claude3 wins. In Anthropic’s words, the Claude3 series models have set new industry benchmarks in reasoning, mathematics, coding, multi-language understanding and vision! Anthropic is a startup company formed by employees who "defected" from OpenAI due to different security concepts. Their products have repeatedly hit OpenAI hard. This time, Claude3 even had a big surgery.

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

Paper address: https://arxiv.org/abs/2307.09283 Code address: https://github.com/THU-MIG/RepViTRepViT performs well in the mobile ViT architecture and shows significant advantages. Next, we explore the contributions of this study. It is mentioned in the article that lightweight ViTs generally perform better than lightweight CNNs on visual tasks, mainly due to their multi-head self-attention module (MSHA) that allows the model to learn global representations. However, the architectural differences between lightweight ViTs and lightweight CNNs have not been fully studied. In this study, the authors integrated lightweight ViTs into the effective