To make up for the shortcomings of Stanford's 7-billion-parameter 'Alpaca', a large model proficient in Chinese is here and has been open-sourced

BELLE is based on Stanford Alpaca and optimized for Chinese. Model tuning uses only data produced by ChatGPT, with no other data included.

It has been almost four months since the initial release of ChatGPT. When GPT-4 was released last week, ChatGPT was upgraded to the new version right away. But it is an open secret that neither ChatGPT nor GPT-4 is likely to be open-sourced. Coupled with the huge investment in computing power and the massive training data required, the research community faces many hurdles in replicating how they were built.

Faced with the onslaught of large models such as ChatGPT, an open-source alternative is a good choice. At the beginning of this month, Meta "open sourced" a new large model series, LLaMA (Large Language Model Meta AI), with parameter sizes ranging from 7 billion to 65 billion. The 13-billion-parameter LLaMA model outperforms the 175-billion-parameter GPT-3 "on most benchmarks" and can run on a single V100 GPU.

A few days later, Stanford fine-tuned LLaMA 7B into a new 7-billion-parameter model, Alpaca. They used the technique introduced in the Self-Instruct paper, with some modifications, to generate 52K instruction-following examples. In preliminary human evaluations, the Alpaca 7B model performed similarly to the text-davinci-003 (GPT-3.5) model on the Self-Instruct evaluation set.

Unfortunately, Alpaca’s seed tasks are all in English, and the data collected are also in English, so the trained model is not optimized for Chinese. In order to improve the effectiveness of the dialogue model in Chinese, is there a better way? Don’t worry, the project introduced next can solve this problem very well.

BELLE (Bloom-Enhanced Large Language model Engine), an open-source Chinese dialogue large model with 7 billion parameters, is here. It is based on Stanford's Alpaca, but is optimized for Chinese and makes some modifications to the data generation code. In addition, model tuning uses only data produced by ChatGPT, with no other data included.

In terms of data, the project open-sourced its data collection code, which is based on Alpaca. Using this code, about 1 million Chinese examples were generated. Combined with 50,000 English examples from Alpaca, they were used to train the BLOOMZ-7B model. The checkpoint has been uploaded to Hugging Face.

Hugging Face Address: https://huggingface.co/BelleGroup

The project author stated: This project aims to promote the development of the Chinese dialogue large model open source community.

Project address: https://github.com/LianjiaTech/BELLE

Project introduction

The project mainly includes the following four parts:

- 175 Chinese seed tasks

- Code to generate data

- 0.5M generated data

- The final model, optimized from BLOOMZ-7B1-mt

Data release

1. zh_seed_tasks.jsonl: Contains 175 seed tasks. A sample looks like this:

{"id": "seed_task_20", "name": "horror_movie_opening", "instruction": "You need to write a creative opening scene for a horror movie.", "instances": [{"input": "", "output": "The sun had set, leaving behind a dark town. A gentle breeze blew through the empty streets, sending a chill through anyone who ventured outside. The only sound was the slight rustling of leaves in the wind. Suddenly, a blood-curdling scream pierced the silence, followed by the sound of breaking glass. A house turned on its lights, and a figure could be seen running towards the center of the town. As the figure drew closer, it became clear that it was a young woman, covered in blood."}], "is_classification": false}

2. prompt_cn.txt: The prompt used to generate the data

3. 0.5M generated data
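As a rough illustration of how a seed-task record like the sample in item 1 could be read, here is a minimal Python sketch. It is not the project's own loader: the helper names `parse_seed_task` and `load_seed_tasks` are hypothetical, and the set of required fields is assumed only from the single sample shown above.

```python
import json

# Fields observed in the sample record; assumed (not confirmed) to be present in every task.
REQUIRED_KEYS = {"id", "name", "instruction", "instances", "is_classification"}


def parse_seed_task(line: str) -> dict:
    """Parse one JSONL line and check that it has the fields seen in the sample."""
    task = json.loads(line)
    missing = REQUIRED_KEYS - task.keys()
    if missing:
        raise ValueError(f"seed task is missing fields: {sorted(missing)}")
    for instance in task["instances"]:
        if "input" not in instance or "output" not in instance:
            raise ValueError("each instance needs 'input' and 'output'")
    return task


def load_seed_tasks(lines) -> list:
    """Parse every non-empty line of a JSONL source (a file object or a list of strings)."""
    return [parse_seed_task(line) for line in lines if line.strip()]


# In-memory stand-in for one line of zh_seed_tasks.jsonl (output text shortened).
sample_line = json.dumps({
    "id": "seed_task_20",
    "name": "horror_movie_opening",
    "instruction": "You need to write a creative opening scene for a horror movie.",
    "instances": [{"input": "", "output": "The sun had set, leaving behind a dark town."}],
    "is_classification": False,
})

tasks = load_seed_tasks([sample_line, ""])
print(tasks[0]["name"], len(tasks[0]["instances"]))
```

With the real file, the same helper could be called as `load_seed_tasks(open("zh_seed_tasks.jsonl", encoding="utf-8"))`, assuming the file is UTF-8 encoded.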

Data generation

Follow Alpaca’s method:

pip install -r requirements.txt

export OPENAI_API_KEY=YOUR_API_KEY

python generate_instruction.py generate_instruction_following_data

By default, the script uses the Completion API with the text-davinci-003 model. To use the Chat API with the gpt-3.5-turbo model instead, pass the following parameters:

python generate_instruction.py generate_instruction_following_data --api=chat --model_name=gpt-3.5-turbo
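The practical difference between the two modes is the shape of the request: the Completion API takes a single `prompt` string, while the Chat API takes a list of role-tagged `messages`. The sketch below only builds the request bodies and makes no network call. The function `build_request` is hypothetical and is not taken from generate_instruction.py, and the value `"completion"` for the default mode is an assumed name.

```python
def build_request(api: str, model_name: str, prompt: str, max_tokens: int = 1024) -> dict:
    """Build an illustrative request body for the chosen API mode (no network call)."""
    if api == "completion":  # assumed name for the default mode
        # Completion-style request: the whole prompt is one string.
        return {"model": model_name, "prompt": prompt, "max_tokens": max_tokens}
    if api == "chat":
        # Chat-style request: the prompt is wrapped in a single user message.
        return {
            "model": model_name,
            "messages": [{"role": "user", "content": prompt}],
            "max_tokens": max_tokens,
        }
    raise ValueError(f"unknown api mode: {api!r}")


completion_body = build_request("completion", "text-davinci-003", "Generate 20 diverse tasks.")
chat_body = build_request("chat", "gpt-3.5-turbo", "Generate 20 diverse tasks.")
print(sorted(completion_body))
print(sorted(chat_body))
```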

The output is written to Belle.train.json and can be manually filtered before use.
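Part of that filtering can be automated before a manual pass. The following is a minimal sketch, not the project's procedure. It assumes each record is a dict with Alpaca-style `instruction`, `input`, and `output` keys, which the article does not confirm for Belle.train.json, and the helper `filter_records` is hypothetical. It drops records with an empty instruction or output and removes exact duplicates.

```python
def filter_records(records, min_output_chars: int = 1) -> list:
    """Drop records with empty fields and exact (instruction, input) duplicates."""
    seen = set()
    kept = []
    for record in records:
        # Key names are an assumption (Alpaca-style schema).
        instruction = record.get("instruction", "").strip()
        output = record.get("output", "").strip()
        if not instruction or len(output) < min_output_chars:
            continue
        key = (instruction, record.get("input", "").strip())
        if key in seen:
            continue
        seen.add(key)
        kept.append(record)
    return kept


# In-memory stand-in for the generated data.
generated = [
    {"instruction": "Translate to English", "input": "你好", "output": "Hello"},
    {"instruction": "Translate to English", "input": "你好", "output": "Hi"},  # duplicate prompt
    {"instruction": "Write a poem", "input": "", "output": "   "},  # empty output
    {"instruction": "Name a fruit", "input": "", "output": "Apple"},
]

kept = filter_records(generated)
print(len(kept), [r["instruction"] for r in kept])
```

Keeping the first occurrence of a duplicate is an arbitrary choice here; a manual review would still be needed for quality issues that simple rules cannot catch.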

Model tuning

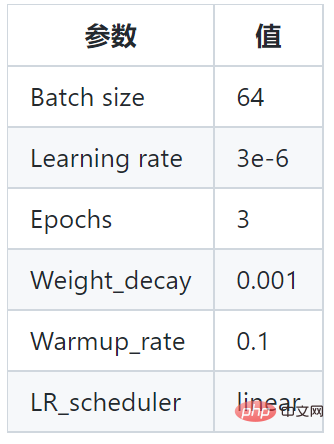

This project is based on the BLOOMZ-7B1-mt model and the Belle.train.json training model. The specific parameters are as follows:

In addition, the project trains the model on instruction datasets of different sizes (200,000, 600,000, 1 million and 2 million samples). The resulting model versions are as follows:

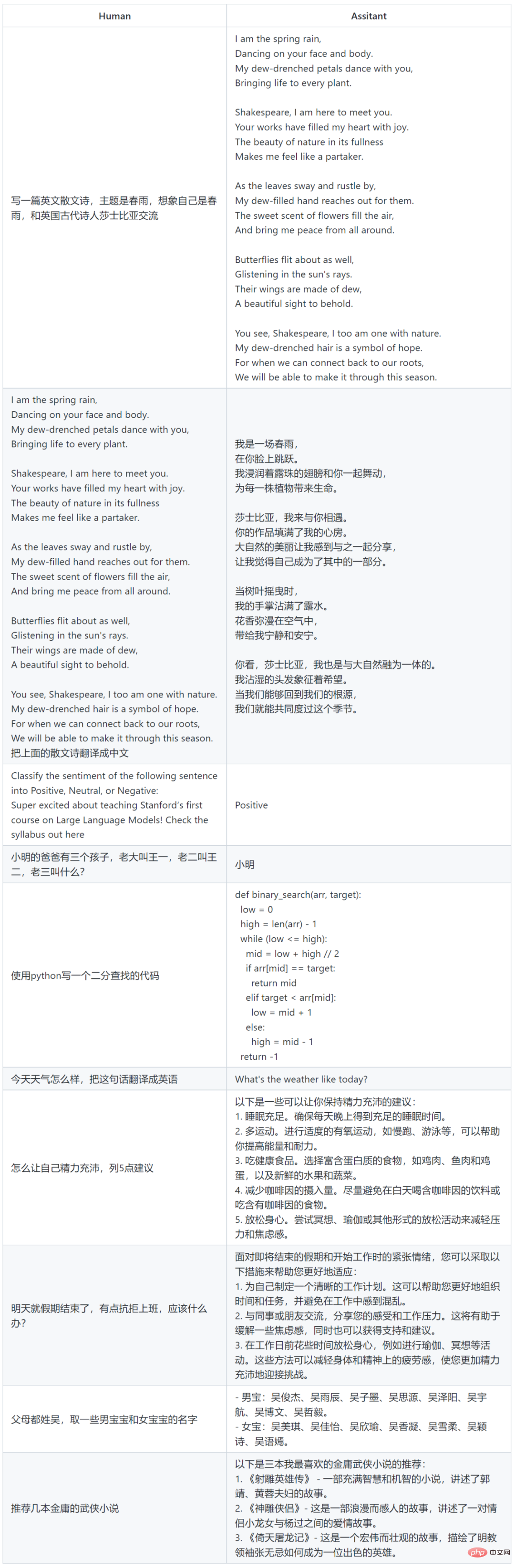

Model usage examples

Limitations and usage restrictions

The SFT model trained on the current data and base model still has the following problems:

- Instructions involving factual knowledge may produce answers that contradict the facts.

- Hazardous instructions are not reliably identified, which can result in harmful output.

- The model's capabilities still need improvement in some scenarios involving reasoning, coding, and so on.

Given the above limitations, this project requires developers to use the open-source code, data, models, and any derivatives generated by this project for research purposes only, and not for commercial use or other purposes that would harm society.
