Li Zhifei: Eight observations on GPT-4, the multi-modal large model competition begins

GPT-4 outperforms previous models on standardized tests and other benchmarks, works across dozens of languages, and accepts images as input, meaning it can understand the intent and logic of a photo or diagram in the context of a chat.

Since releasing its own multi-modal model Kosmos-1 in early March, Microsoft has been testing and tuning OpenAI's multi-modal model and making it more compatible with Microsoft's own products.

Sure enough, with the release of GPT-4, Microsoft officially showed its hand: New Bing is already running on GPT-4.

The language model behind ChatGPT is GPT-3.5. Explaining how GPT-4 is more powerful than the previous version, OpenAI said that although the two versions seem similar in casual conversation, "differences emerge when the complexity of the task reaches a sufficient threshold," with GPT-4 being more reliable, more creative, and able to handle more nuanced instructions.

The king is crowned? Eight observations about GPT-4

1. Stunning again, better than humans

If the GPT-3 series proved that a single AI model can perform many tasks and pointed out a path toward AGI, GPT-4 has reached human level on many tasks and even outperforms humans on some. On many professional and academic exams, GPT-4 scores higher than about 90% of human test takers. On a simulated bar exam, for example, its score is in the top 10%. How should primary and secondary schools, universities and professional education respond to this?

2. "Scientific" Alchemy

Although OpenAI did not disclose the parameter count this time, one can guess that GPT-4 is far from small. A model that large means high training costs. Training a model is also a lot like "refining an elixir": it takes a great many experiments. If every experiment were run at full scale, few could bear the cost.

To address this, OpenAI developed what it calls "predictable scaling." In short, it predicts the outcome of each experiment (loss and HumanEval) at one ten-thousandth of the cost. This upgrades the old large-scale, luck-driven alchemy into "semi-scientific" alchemy.
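The following is a minimal sketch of the general idea behind predictable scaling. It fits a power law with an irreducible loss term, L(C) = a·C^b + c, to cheap small runs and then extrapolates to a run with 10,000x more compute.

Several parts of the sketch are assumptions:
- The irreducible loss c is taken as known.
- All data points are synthetic, generated from a made-up law rather than any real GPT-4 numbers.
- The function names are hypothetical.

```python
import math

def fit_power_law(computes, losses, irreducible):
    """Fit L(C) = a * C**b + irreducible by least squares in log-log space.

    Assumes the irreducible loss is known, so log(L - irreducible) is linear
    in log(C) with slope b and intercept log(a).
    """
    xs = [math.log(c) for c in computes]
    ys = [math.log(l - irreducible) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    b = cov / var
    a = math.exp(mean_y - b * mean_x)
    return a, b

def predict_loss(a, b, irreducible, compute):
    """Evaluate the fitted scaling law at a given compute budget."""
    return a * compute ** b + irreducible

# Synthetic "small runs" from a made-up law (a=10, b=-0.1, c=1.5).
TRUE_A, TRUE_B, IRR = 10.0, -0.1, 1.5
small_computes = [1e0, 1e1, 1e2, 1e3]
small_losses = [predict_loss(TRUE_A, TRUE_B, IRR, c) for c in small_computes]

a, b = fit_power_law(small_computes, small_losses, IRR)
# Extrapolate to a "big" run with 10,000x the largest small-run compute.
big_compute = small_computes[-1] * 10_000
print(f"a={a:.3f}, b={b:.3f}, predicted loss={predict_loss(a, b, IRR, big_compute):.4f}")
```

Real runs are noisy, so in practice the fit would not recover the law exactly. The point is only that a few cheap runs can pin down the curve before committing to the expensive one.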

3. Crowdsourcing evaluation, killing two birds with one stone

This time OpenAI open-sourced OpenAI Evals, a very "smart" move. Through crowdsourcing, it invites developers and enthusiasts to use Evals to test models, which also engages the developer ecosystem. This not only gives everyone a sense of participation but also lets everyone help evaluate and improve the system for free. Meanwhile, OpenAI directly obtains test questions and feedback, killing two birds with one stone.
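To make the crowdsourcing idea concrete, here is a hypothetical, minimal evaluation harness. Contributors supply samples that each pair a prompt with an ideal answer. The harness then scores a model by exact match.

This is only an illustration of the concept:
- It is not the OpenAI Evals API or its registry format.
- The sample fields, `run_match_eval` and `toy_model` are all assumptions.

```python
import json
from typing import Callable

# Hypothetical samples in JSON Lines form: one prompt and one ideal answer per line.
SAMPLES_JSONL = """\
{"input": "What is 2 + 2?", "ideal": "4"}
{"input": "Capital of France?", "ideal": "Paris"}
{"input": "Opposite of hot?", "ideal": "cold"}
"""

def run_match_eval(model: Callable[[str], str], jsonl: str) -> float:
    """Return the fraction of samples whose model output matches the ideal answer."""
    samples = [json.loads(line) for line in jsonl.splitlines() if line.strip()]
    correct = 0
    for sample in samples:
        output = model(sample["input"]).strip()
        # Case-insensitive exact match; real graders are often fuzzier.
        if output.lower() == sample["ideal"].lower():
            correct += 1
    return correct / len(samples)

def toy_model(prompt: str) -> str:
    """A stand-in "model" that only knows two answers."""
    answers = {"What is 2 + 2?": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")

print(run_match_eval(toy_model, SAMPLES_JSONL))
```

Every new sample a volunteer contributes is another free test case, which is exactly the "two birds" the observation describes.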

4. Engineering patches

OpenAI also released a System Card, which works as an open "patching" tool: it can uncover vulnerabilities and reduce the "nonsense" (hallucination) problem of language models. Various patches have been applied to the system in pre-processing and post-processing. The code will be opened later so the patching work can be crowdsourced, and in the future OpenAI may have everyone helping with it. This marks the point where LLMs moved from an elegant, simple next-token prediction task into all kinds of messy engineering hacks.
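As a rough illustration of what "pre-processing and post-processing patches" around a model can look like, here is a hypothetical sketch. It wraps a raw model call with an input filter and an output filter.

Everything in it is an assumption for illustration:
- The blocklist, the email-redaction rule and all function names are invented.
- Production systems rely on trained classifiers and policies, not keyword lists.

```python
import re
from typing import Callable, Optional

# Hypothetical blocklist and refusal text, for illustration only.
BLOCKED_PHRASES = ("build a weapon", "steal a password")
REFUSAL = "Sorry, I can't help with that."
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")

def preprocess(prompt: str) -> Optional[str]:
    """Normalize the prompt; return None if it should be refused before reaching the model."""
    cleaned = prompt.strip()
    if any(phrase in cleaned.lower() for phrase in BLOCKED_PHRASES):
        return None
    return cleaned

def postprocess(output: str) -> str:
    """Redact anything that looks like an email address in the model output."""
    return EMAIL.sub("[redacted]", output)

def patched_generate(model: Callable[[str], str], prompt: str) -> str:
    """Wrap a raw model call with a pre-processing and a post-processing patch."""
    cleaned = preprocess(prompt)
    if cleaned is None:
        return REFUSAL
    return postprocess(model(cleaned))

def leaky_model(prompt: str) -> str:
    """Stand-in model that leaks an email address in its reply."""
    return f"You asked '{prompt}'. Contact admin@example.com for more."

print(patched_generate(leaky_model, "  What is a system card?  "))
print(patched_generate(leaky_model, "How do I steal a password?"))
```

Each rule is a small, independent patch, which is why this kind of work is easy to crowdsource but hard to keep elegant.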

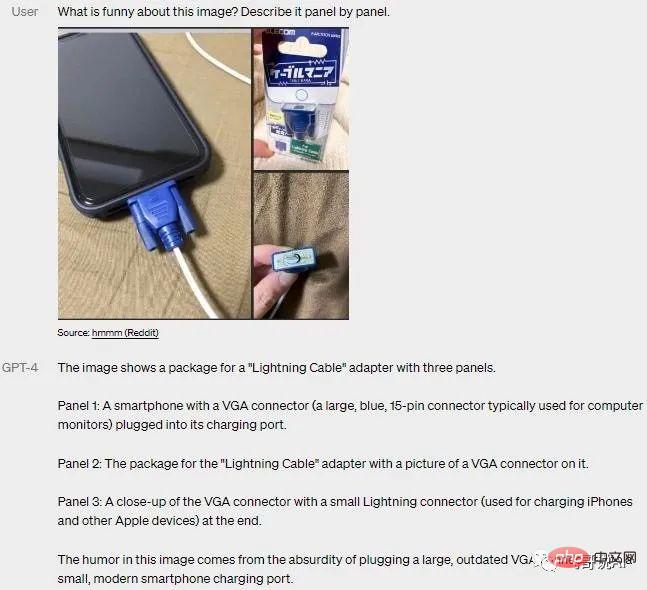

5. Multi-modal

Since Microsoft Germany revealed last week that GPT-4 would be multi-modal, public anticipation has been high.

After a long wait, GPT-4 has arrived. Its multi-modality, billed as "comparable to the human brain," is actually not much different from the multi-modal capabilities described in many current papers. The main difference is that it combines the text model's few-shot ability with chain-of-thought (CoT) reasoning. The prerequisite is a text LLM with strong base capabilities plus multi-modality; only that combination produces good results.
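To show what "few-shot plus chain of thought" means for a multi-modal prompt, here is a hypothetical sketch of prompt construction. Worked examples with written-out reasoning come first, followed by the real question with a cue to reason step by step.

The sketch rests on a few assumptions:
- The `<image:...>` marker is an invented placeholder for where an image would be fed in.
- The names `Example` and `build_few_shot_cot_prompt` are made up for illustration.
- It does not reproduce GPT-4's actual input format.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Example:
    """One worked demonstration shown to the model before the real question."""
    image_ref: str   # hypothetical placeholder for an image input
    question: str
    reasoning: str   # the chain of thought, written out step by step
    answer: str

def build_few_shot_cot_prompt(examples: List[Example], image_ref: str, question: str) -> str:
    """Interleave image placeholders with few-shot CoT demonstrations, then the query."""
    blocks = []
    for ex in examples:
        blocks.append(
            f"<image:{ex.image_ref}>\nQ: {ex.question}\n"
            f"A: {ex.reasoning} So the answer is {ex.answer}."
        )
    # The final block leaves the answer open and cues step-by-step reasoning.
    blocks.append(f"<image:{image_ref}>\nQ: {question}\nA: Let's think step by step.")
    return "\n\n".join(blocks)

demo = [
    Example("receipt.png", "How much was spent in total?",
            "The receipt lists 3 dollars and 4 dollars; 3 + 4 = 7.", "7 dollars"),
]
prompt = build_few_shot_cot_prompt(demo, "chart.png", "Which bar is tallest?")
print(prompt)
```

The prompt only helps if the underlying text model can actually follow the reasoning pattern. That is why a strong base text LLM is the prerequisite.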

6. Playing the "king bomb" (the unbeatable trump card) on schedule

According to OpenAI's GPT-4 demo video, GPT-4 finished training as early as August last year but was only released today. The intervening months went into extensive testing and bug fixes, and most importantly into work that stops it from generating dangerous content.

While everyone is still marveling at ChatGPT's generation capabilities, OpenAI has already shipped GPT-4. Google's engineers will probably have to pull all-nighters to catch up again.

7. OpenAI is no longer Open

OpenAI's public paper mentions neither the parameter count nor the data size (rumors online put GPT-4 at 100 trillion parameters), and it gives no technical details. Its explanation is that this serves the public interest: it fears that once people learn how to build GPT-4, they will use it for harm and trigger something uncontrollable. Personally, I do not agree with this at all. It sounds like the proverbial sign reading "no silver buried here": an excuse that gives away more than it hides.

8. Pooling efforts to accomplish big things

Beyond all the "showing off," the paper also devotes three pages to listing everyone who contributed to GPT-4's various systems. By a rough estimate there are more than a hundred people, which again reflects the unity and close collaboration within OpenAI's team. By comparison, do other companies' teams fall somewhat short in this kind of concerted effort?

Multi-modal large models have become the trend and a key direction for the development of large AI models as a whole. In this AI "arms race," tech giants such as Google, Microsoft and DeepMind are all actively launching multi-modal large language models (MLLMs).

A new round of the arms race: multi-modal large models

Microsoft: Kosmos-1

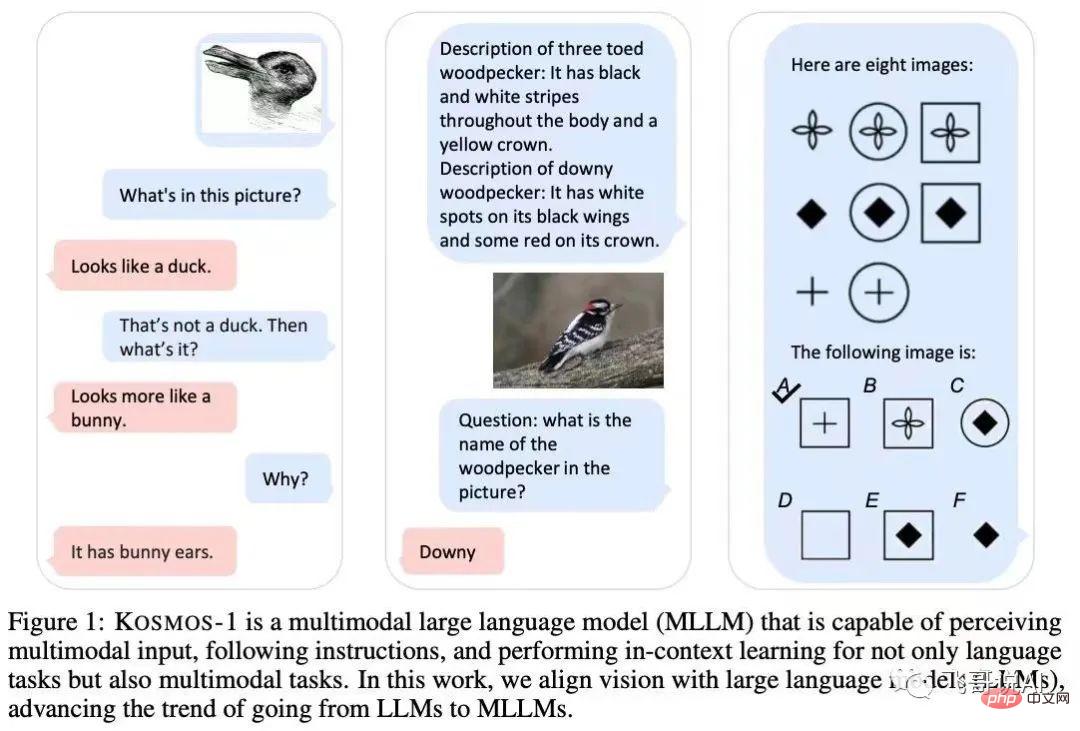

In early March, Microsoft released Kosmos-1, a multi-modal model with 1.6 billion parameters. Its architecture is a Transformer-based causal language model, in which the Transformer decoder serves as a general-purpose interface for multi-modal input.
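To illustrate "a decoder as a universal interface," here is a hypothetical sketch of how text and images can be laid out as one sequence for a causal decoder. Each image is replaced by a fixed number of encoder embeddings, bracketed by boundary tokens. A causal mask then lets each position see only itself and earlier positions.

Several details are illustrative rather than taken from Kosmos-1:
- The token names, the whitespace "tokenizer" and the per-image embedding count are assumptions.
- `ImageInput` and `flatten_interleaved` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Union

@dataclass
class ImageInput:
    """An image that an assumed vision encoder turns into a fixed number of embeddings."""
    name: str
    num_embeddings: int

def flatten_interleaved(segments: List[Union[str, ImageInput]]) -> List[str]:
    """Lay out text and images as one sequence for a causal Transformer decoder."""
    seq = ["<s>"]
    for seg in segments:
        if isinstance(seg, ImageInput):
            seq.append("<image>")
            seq.extend(f"{seg.name}[{i}]" for i in range(seg.num_embeddings))
            seq.append("</image>")
        else:
            seq.extend(seg.split())  # whitespace split stands in for a real tokenizer
    seq.append("</s>")
    return seq

def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

seq = flatten_interleaved(["a photo of", ImageInput("img", 2), "a cat"])
mask = causal_mask(len(seq))
print(seq)
```

Because images become ordinary positions in the sequence, the same next-token objective covers text-only, image-text and interleaved data.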

Beyond a wide range of natural language tasks, Kosmos-1 can natively handle many perception-intensive tasks, such as visual dialogue, visual explanation, visual question answering, image captioning, simple math equations, OCR, and zero-shot image classification with descriptions.

Google: PaLM-E

In early March, a research team from Google and the Technical University of Berlin released PaLM-E, the largest visual language model to date, with up to 562 billion parameters (PaLM-540B plus ViT-22B).

PaLM-E is a large decoder-only model that generates text completions autoregressively given a prefix or prompt. An encoder added to the model maps images or other sensor data into a sequence of vectors with the same size as the language token embeddings. These vectors serve as input for next-token prediction, and the whole model is trained end to end.
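Here is a minimal sketch of the "same size as the language tokens" step. Encoder outputs of one dimension are projected into the language model's embedding dimension and spliced into the text embeddings at the image's position. The decoder can then consume everything as one sequence.

The sketch makes several assumptions:
- The projection is a plain affine map with random weights.
- The sizes are toy values.
- `embed_multimodal` is a hypothetical name, not PaLM-E's code.

```python
import random
from typing import List

Vector = List[float]

def linear(x: Vector, W: List[Vector], b: Vector) -> Vector:
    """Affine map y = W x + b, with W stored as d_out rows of length d_in."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def embed_multimodal(text_emb: List[Vector], image_feats: List[Vector],
                     W: List[Vector], b: Vector, insert_at: int) -> List[Vector]:
    """Project encoder outputs to the token-embedding size and splice them into the text."""
    projected = [linear(f, W, b) for f in image_feats]
    return text_emb[:insert_at] + projected + text_emb[insert_at:]

random.seed(0)
D_ENC, D_MODEL = 4, 3  # toy sizes; real models use thousands of dimensions
W = [[random.gauss(0.0, 0.1) for _ in range(D_ENC)] for _ in range(D_MODEL)]
b = [0.0] * D_MODEL
text_emb = [[0.1] * D_MODEL for _ in range(5)]   # 5 text-token embeddings
image_feats = [[1.0] * D_ENC for _ in range(2)]  # 2 encoder output vectors
seq = embed_multimodal(text_emb, image_feats, W, b, insert_at=2)
print(len(seq), [len(v) for v in seq])
```

In end-to-end training, gradients from the next-token loss would flow back into `W` (and optionally the encoder). That is what lets the projected vectors behave like "words" the decoder understands.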

DeepMind: Flamingo

DeepMind launched the Flamingo visual language model in April last year. The model takes images, videos and text as prompts and outputs relevant text. With only a handful of task-specific examples, it can solve many problems without additional training.

The model is trained on interleaved images (or videos) and text, which gives it few-shot multi-modal sequence reasoning capabilities for tasks such as text description completion and VQA/Text-VQA.
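One way interleaved training can be wired up is with a cross-attention mask in which each text token looks only at the most recent image before it. Text before the first image sees no image. Here is a minimal sketch of computing such a mask.

The sketch is an illustration under stated assumptions:
- The "<image>" marker and the function names are hypothetical.
- It is not DeepMind's implementation.

```python
from typing import List, Optional, Tuple

def image_for_each_token(sequence: List[str]) -> List[Tuple[str, Optional[int]]]:
    """Pair each text token with the index of the most recent preceding image (or None)."""
    current: Optional[int] = None
    count = 0
    result = []
    for tok in sequence:
        if tok == "<image>":
            current = count
            count += 1
        else:
            result.append((tok, current))
    return result

def cross_attention_mask(sequence: List[str]) -> List[List[bool]]:
    """Row per text token, column per image: True where the token may attend to that image."""
    assignments = image_for_each_token(sequence)
    num_images = sequence.count("<image>")
    return [[img == i for i in range(num_images)] for _, img in assignments]

tokens = ["hi", "<image>", "a", "dog", "<image>", "a", "cat"]
for row in cross_attention_mask(tokens):
    print(row)
```

Tying each caption to its own image is what lets a few interleaved (image, text) demonstrations act as an in-context prompt for the next image.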

Multi-modal large models are already opening up more applications. Beyond relatively mature text-to-image generation, applications such as human-computer interaction, robot control, image search and speech generation keep emerging.

Taken together, GPT-4 is not AGI, but multi-modal large models are clearly the direction of development. Building unified, cross-scenario, multi-task multi-modal foundation models will become one of the mainstream trends in artificial intelligence.

Hugo said, "Science encounters imagination when it reaches its final stage." The future of multi-modal large models may be beyond human imagination.
