Wenxin Yiyan's "You Draw, I Guess" Showcase: Baidu Responds to the Rumors!

Wenxin Yiyan is constantly learning and growing as everyone uses it. Please give self-developed technologies and products some confidence and time, and do not spread or believe rumors. We also hope Wenxin Yiyan can bring everyone more joy.

Compiled by | Yunzhao

Recently, users noticed problems with the text-to-image feature of Wenxin Yiyan (ERNIE Bot).

For example, it drew "mouse" (the computer peripheral) as the animal, and "bus" (the computer bus) as a passenger bus. It also appeared to have serious trouble understanding Chinese, which set off a small storm of "you draw, I guess" posts. For example, it drew "Texas Braised Chicken" as "a plate of rooster".

Baidu responded as follows:

We have noticed the feedback on Wenxin Yiyan's text-to-image feature. Our response is as follows:

1. Wenxin Yiyan is a large language model developed entirely in-house by Baidu. Its text-to-image capability comes from Wenxin's cross-modal large model ERNIE-ViLG.

2. In training our large models, we use publicly available data from the global Internet, which is in line with industry practice. Everyone will also see the strength of Baidu's in-house research in how quickly the text-to-image capability is tuned and iterated.

Wenxin Yiyan is constantly learning and growing as everyone uses it. Please give self-developed technology and products some confidence and time, and do not spread or believe rumors. We also hope Wenxin Yiyan can bring you more joy.

A few usage examples are attached below. I hope Wenxin Yiyan can iterate and improve quickly! Everyone, please give self-developed models some time!

![Wenxin Yiyan text-to-image example](https://img.php.cn/upload/article/000/000/000/168032087928272.png)

![Wenxin Yiyan text-to-image example](https://img.php.cn/upload/article/000/000/000/168032087973175.png)

![Wenxin Yiyan text-to-image example](https://img.php.cn/upload/article/000/000/000/168032087953304.png)

![Wenxin Yiyan text-to-image example](https://img.php.cn/upload/article/000/000/000/168032088056837.png)

![Wenxin Yiyan text-to-image example](https://img.php.cn/upload/article/000/000/000/168032088017818.png)

In fact, the problems are not limited to text-to-image: even simple conversations produce answers that confuse right and wrong:

![Wenxin Yiyan conversation example](https://img.php.cn/upload/article/000/000/000/168032088026143.png)

![Wenxin Yiyan conversation example](https://img.php.cn/upload/article/000/000/000/168032088117947.png)


No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

No OpenAI data required, join the list of large code models! UIUC releases StarCoder-15B-Instruct

Jun 13, 2024 pm 01:59 PM

At the forefront of software technology, UIUC Zhang Lingming's group, together with researchers from the BigCode organization, recently announced the StarCoder2-15B-Instruct large code model. This innovative achievement achieved a significant breakthrough in code generation tasks, successfully surpassing CodeLlama-70B-Instruct and reaching the top of the code generation performance list. The unique feature of StarCoder2-15B-Instruct is its pure self-alignment strategy. The entire training process is open, transparent, and completely autonomous and controllable. The model generates thousands of instructions via StarCoder2-15B in response to fine-tuning the StarCoder-15B base model without relying on expensive manual annotation.

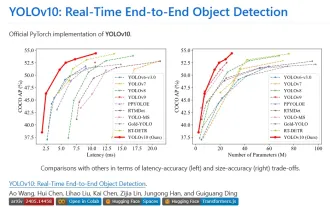

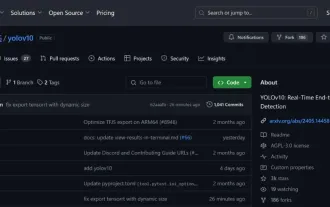

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

Yolov10: Detailed explanation, deployment and application all in one place!

Jun 07, 2024 pm 12:05 PM

1. Introduction Over the past few years, YOLOs have become the dominant paradigm in the field of real-time object detection due to its effective balance between computational cost and detection performance. Researchers have explored YOLO's architectural design, optimization goals, data expansion strategies, etc., and have made significant progress. At the same time, relying on non-maximum suppression (NMS) for post-processing hinders end-to-end deployment of YOLO and adversely affects inference latency. In YOLOs, the design of various components lacks comprehensive and thorough inspection, resulting in significant computational redundancy and limiting the capabilities of the model. It offers suboptimal efficiency, and relatively large potential for performance improvement. In this work, the goal is to further improve the performance efficiency boundary of YOLO from both post-processing and model architecture. to this end

deepseek web version entrance deepseek official website entrance

Feb 19, 2025 pm 04:54 PM

deepseek web version entrance deepseek official website entrance

Feb 19, 2025 pm 04:54 PM

DeepSeek is a powerful intelligent search and analysis tool that provides two access methods: web version and official website. The web version is convenient and efficient, and can be used without installation; the official website provides comprehensive product information, download resources and support services. Whether individuals or corporate users, they can easily obtain and analyze massive data through DeepSeek to improve work efficiency, assist decision-making and promote innovation.

Tsinghua University took over and YOLOv10 came out: the performance was greatly improved and it was on the GitHub hot list

Jun 06, 2024 pm 12:20 PM

Tsinghua University took over and YOLOv10 came out: the performance was greatly improved and it was on the GitHub hot list

Jun 06, 2024 pm 12:20 PM

The benchmark YOLO series of target detection systems has once again received a major upgrade. Since the release of YOLOv9 in February this year, the baton of the YOLO (YouOnlyLookOnce) series has been passed to the hands of researchers at Tsinghua University. Last weekend, the news of the launch of YOLOv10 attracted the attention of the AI community. It is considered a breakthrough framework in the field of computer vision and is known for its real-time end-to-end object detection capabilities, continuing the legacy of the YOLO series by providing a powerful solution that combines efficiency and accuracy. Paper address: https://arxiv.org/pdf/2405.14458 Project address: https://github.com/THU-MIG/yo

Google Gemini 1.5 technical report: Easily prove Mathematical Olympiad questions, the Flash version is 5 times faster than GPT-4 Turbo

Jun 13, 2024 pm 01:52 PM

Google Gemini 1.5 technical report: Easily prove Mathematical Olympiad questions, the Flash version is 5 times faster than GPT-4 Turbo

Jun 13, 2024 pm 01:52 PM

In February this year, Google launched the multi-modal large model Gemini 1.5, which greatly improved performance and speed through engineering and infrastructure optimization, MoE architecture and other strategies. With longer context, stronger reasoning capabilities, and better handling of cross-modal content. This Friday, Google DeepMind officially released the technical report of Gemini 1.5, which covers the Flash version and other recent upgrades. The document is 153 pages long. Technical report link: https://storage.googleapis.com/deepmind-media/gemini/gemini_v1_5_report.pdf In this report, Google introduces Gemini1

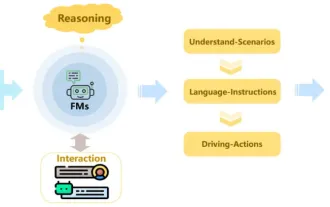

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

Jun 11, 2024 pm 05:29 PM

Review! Comprehensively summarize the important role of basic models in promoting autonomous driving

Jun 11, 2024 pm 05:29 PM

Written above & the author’s personal understanding: Recently, with the development and breakthroughs of deep learning technology, large-scale foundation models (Foundation Models) have achieved significant results in the fields of natural language processing and computer vision. The application of basic models in autonomous driving also has great development prospects, which can improve the understanding and reasoning of scenarios. Through pre-training on rich language and visual data, the basic model can understand and interpret various elements in autonomous driving scenarios and perform reasoning, providing language and action commands for driving decision-making and planning. The base model can be data augmented with an understanding of the driving scenario to provide those rare feasible features in long-tail distributions that are unlikely to be encountered during routine driving and data collection.

Do different data sets have different scaling laws? And you can predict it with a compression algorithm

Jun 07, 2024 pm 05:51 PM

Do different data sets have different scaling laws? And you can predict it with a compression algorithm

Jun 07, 2024 pm 05:51 PM

Generally speaking, the more calculations it takes to train a neural network, the better its performance. When scaling up a calculation, a decision must be made: increase the number of model parameters or increase the size of the data set—two factors that must be weighed within a fixed computational budget. The advantage of increasing the number of model parameters is that it can improve the complexity and expression ability of the model, thereby better fitting the training data. However, too many parameters can lead to overfitting, making the model perform poorly on unseen data. On the other hand, expanding the data set size can improve the generalization ability of the model and reduce overfitting problems. Let us tell you: As long as you allocate parameters and data appropriately, you can maximize performance within a fixed computing budget. Many previous studies have explored Scalingl of neural language models.

Modularly reconstruct LLaVA. To replace components, just add 1-2 files. The open source TinyLLaVA Factory is here.

Jun 08, 2024 pm 09:21 PM

Modularly reconstruct LLaVA. To replace components, just add 1-2 files. The open source TinyLLaVA Factory is here.

Jun 08, 2024 pm 09:21 PM

The TinyLLaVA+ project was jointly created by Professor Wu Ji’s team from the Multimedia Signal and Intelligent Information Processing Laboratory (MSIIP) of the Department of Electronics, Tsinghua University, and Professor Huang Lei’s team from the School of Artificial Intelligence, Beihang University. The MSIIP Laboratory of Tsinghua University has long been committed to research fields such as intelligent medical care, natural language processing and knowledge discovery, and multi-modality. The Beijing Airlines team has long been committed to research fields such as deep learning, multi-modality, and computer vision. The goal of the TinyLLaVA+ project is to develop a small cross-language intelligent assistant with multi-modal capabilities such as language understanding, question and answer, and dialogue. The project team will give full play to their respective advantages, jointly overcome technical problems, and realize the design and development of intelligent assistants. This will provide opportunities for intelligent medical care, natural language processing and knowledge