'Mathematical noob' ChatGPT understands human preferences very well! Generating random numbers online is the ultimate answer to the universe

When it comes to generating random numbers, ChatGPT picks up human habits too.

ChatGPT may be a bullshit artist and a spreader of misinformation, but it is no "mathematician"!

Recently, Colin Fraser, a data scientist at Meta, found that ChatGPT cannot generate truly random numbers; what it produces looks more like the "random" numbers a human would pick.

From his experiments, Fraser concluded: "ChatGPT likes the numbers 42 and 7 very much."

Netizens pointed out that this really means humans like these numbers very much.

ChatGPT also loves "The Ultimate Answer to the Universe"

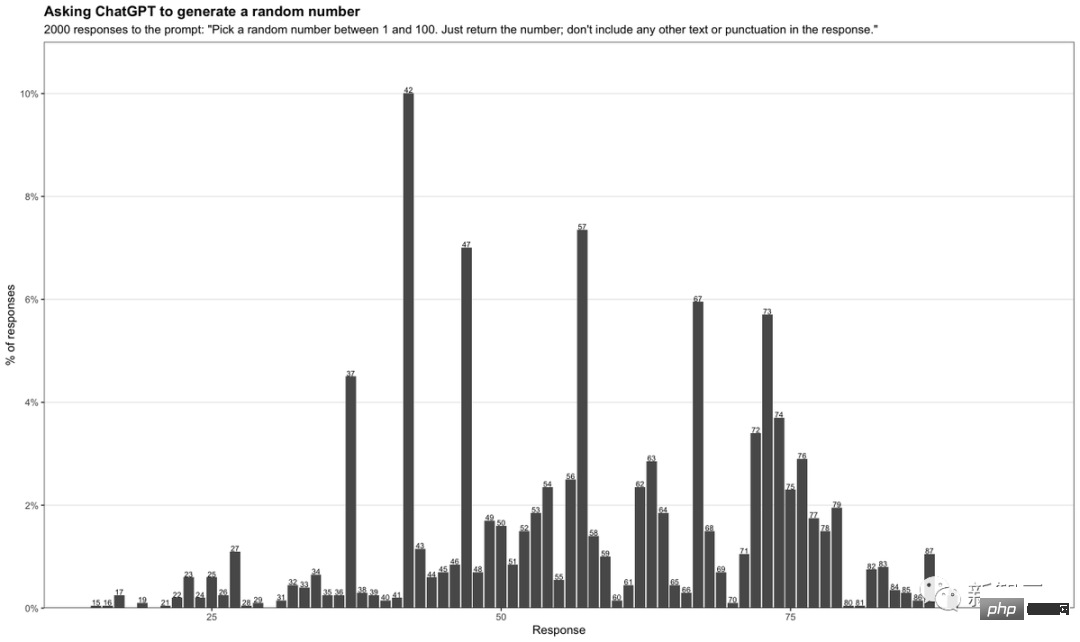

In his test, the prompt entered by Fraser was as follows:

"Pick a random number between 1 and 100. Just return the number; Don't include any other text or punctuation in the response."

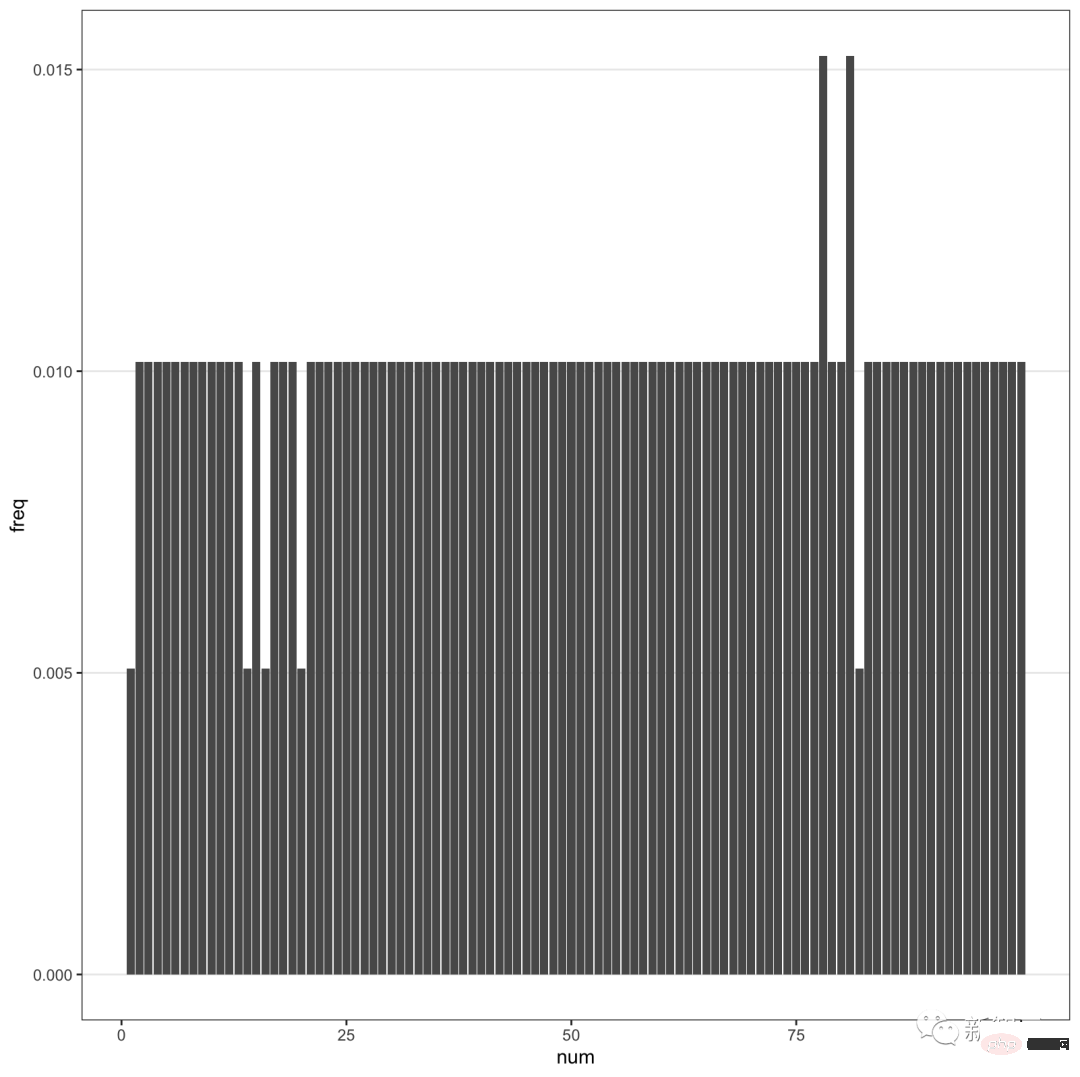

Fraser asked ChatGPT for a random number between 1 and 100 over and over, collected 2,000 responses, and compiled them into a table.

As you can see, 42 appears most often, accounting for up to 10% of the responses. Numbers containing a 7 also appear very frequently.

Numbers in the 71-79 range are especially common, and outside that range 7 often shows up as the second digit.
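The kind of tally described here can be sketched in a few lines of Python. This is only an illustration of how such an analysis might be done, not Fraser's actual code: `tally` and `chi_square_uniform` are hypothetical helper names, and the sample replies are synthetic, invented purely to exercise the functions.

```python
from collections import Counter


def tally(responses, lo=1, hi=100):
    """Count how often each integer in [lo, hi] appears in the replies."""
    counts = Counter()
    for text in responses:
        text = text.strip()
        if text.isdigit() and lo <= int(text) <= hi:
            counts[int(text)] += 1
    return counts


def chi_square_uniform(counts, lo=1, hi=100):
    """Chi-square statistic against a uniform distribution over [lo, hi]."""
    total = sum(counts.values())
    expected = total / (hi - lo + 1)
    return sum((counts.get(n, 0) - expected) ** 2 / expected
               for n in range(lo, hi + 1))


# Synthetic replies (NOT Fraser's data): 42 is deliberately over-represented.
sample = (["42"] * 10
          + ["7", "73", "77", "37", "57"] * 2
          + [str(n) for n in range(1, 81)])

counts = tally(sample)
top_number, top_count = counts.most_common(1)[0]
share = top_count / sum(counts.values())
stat = chi_square_uniform(counts)
print(top_number, share, stat)
```

With a truly uniform generator, each number would be expected in about 1% of replies (roughly 20 out of 2,000), so a single number near 10% stands out sharply. With 100 possible values the statistic has 99 degrees of freedom: a value far above that points to favored numbers, while a value far below it would indicate output that is suspiciously even.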

What does 42 mean?

Everyone who has read Douglas Adams's blockbuster science fiction novel "The Hitchhiker's Guide to the Galaxy" knows that 42 is "the ultimate answer to life, the universe, and everything."

Put simply, 42 and 69 are meme numbers on the Internet. This shows that ChatGPT is not really a random number generator; it simply picks numbers that are popular in the huge data sets collected online.

The frequent appearance of 7 likewise reflects ChatGPT catering to human preferences.

In Western culture, 7 is widely regarded as a lucky number, hence the phrase "Lucky 7", much as 8 is a favored number in Chinese culture.

Interestingly, Fraser also found that GPT-4 seems to overcompensate for this.

When GPT-4 is asked for more numbers, the random numbers it returns are too evenly distributed.

In short, ChatGPT produces its responses by prediction rather than by actually "thinking" its way to an answer.

Evidently, a chatbot touted as almost omnipotent is still a bit silly.

Ask it to plan a road trip and it may have you stop in a town that doesn't even exist. Ask it for a random number and it will most likely pick one based on a popular meme.

Some netizens tried it themselves and found that GPT-4 does like 42.

If ChatGPT ends up just repeating online clichés, what’s the point?

GPT-4, violating machine learning rules

The birth of GPT-4 is exciting, but also disappointing.

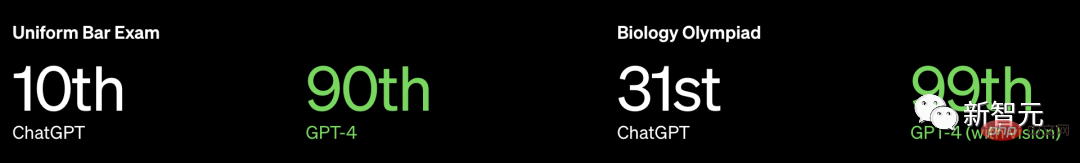

OpenAI released little information about GPT-4 and did not even reveal the size of the model, yet it highlighted how the model outperforms humans on many professional and standardized tests.

Take the US bar exam as an example: GPT-3.5 scores around the 10th percentile, while GPT-4 reaches around the 90th percentile.

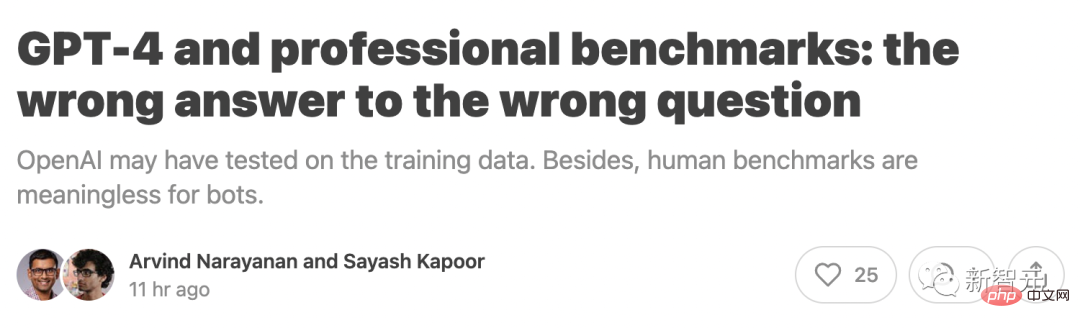

However, Arvind Narayanan, a computer science professor at Princeton University, and Sayash Kapoor, a doctoral student, wrote that OpenAI may have tested on its training data, and that human benchmarks are meaningless for chatbots in any case.

Specifically, OpenAI may be violating a cardinal rule of machine learning: don't test on training data. Test data and training data must be kept separate; otherwise overfitting goes undetected and the scores are inflated.

Even setting this problem aside, there is a bigger one.

Language models solve problems differently than humans do, so these results say little about how well a bot will perform on the real-world problems professionals face. A lawyer's job is not to answer bar exam questions all day long.

Problem 1: Training data contamination

To evaluate GPT-4’s programming ability, OpenAI tested it on problems from Codeforces, a Russian competitive programming website.

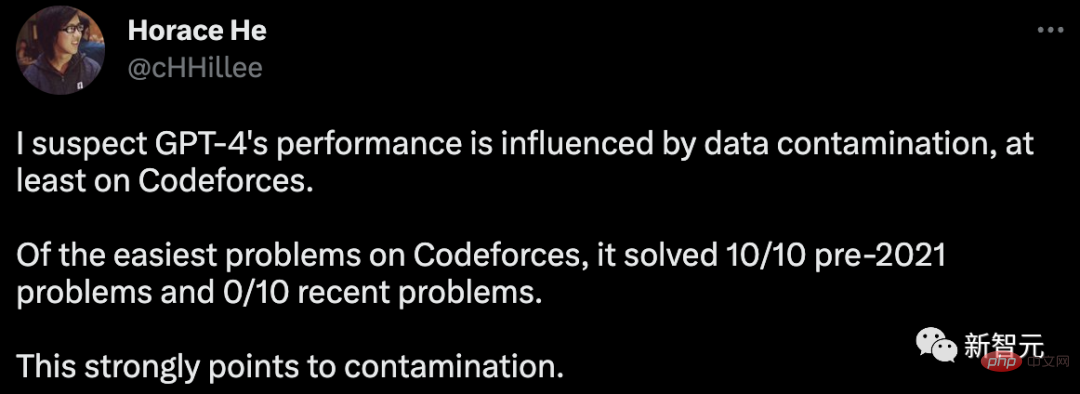

Surprisingly, Horace He pointed out online that, in the easy category, GPT-4 solved 10 problems from before 2021 but none of the 10 most recent problems.

GPT-4's training data cutoff is September 2021.

This strongly suggests that the model has memorized the solutions in its training set, or at least remembers them partially, well enough to fill in what it cannot recall.

To gather further evidence for this hypothesis, Arvind Narayanan tested GPT-4 on Codeforces problems from different dates in 2021.

He found that GPT-4 could solve easy-category problems posted before September 5 but none of those posted after September 12.
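A date-split check of this kind can be sketched as follows. The records below are hypothetical placeholders rather than the actual Codeforces results, `solve_rates` is an illustrative helper name, and the exact split date (September 10, 2021) is an assumption chosen only because it falls between the two dates mentioned above.

```python
from datetime import date


def solve_rates(results, cutoff):
    """Return (rate_before, rate_after) for problems split at the cutoff date."""
    before = [solved for day, solved in results if day < cutoff]
    after = [solved for day, solved in results if day >= cutoff]

    def rate(group):
        # None signals "no problems in this group" rather than a 0% solve rate.
        return sum(group) / len(group) if group else None

    return rate(before), rate(after)


# Hypothetical records: (contest date, whether the model solved the problem).
results = [
    (date(2021, 8, 20), True),
    (date(2021, 9, 5), True),
    (date(2021, 9, 12), False),
    (date(2021, 10, 3), False),
]

# Assumed split date, for illustration only.
rate_before, rate_after = solve_rates(results, cutoff=date(2021, 9, 10))
print(rate_before, rate_after)
```

A sharp drop in the solve rate right at the training cutoff, on problems of comparable difficulty, is what one would expect if the model is recalling solutions rather than deriving them.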

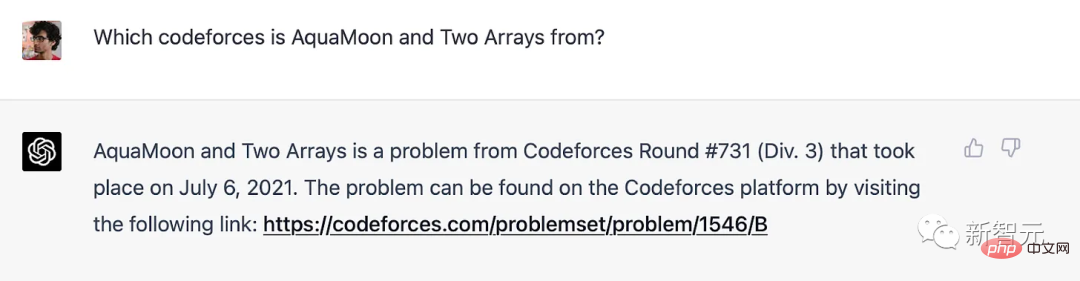

In fact, we can show definitively that it has memorized problems in the training set: when GPT-4 is prompted with the title of a Codeforces problem, it includes a link to the exact contest in which the problem appeared. It is worth noting that GPT-4 has no Internet access, so memorization is the only explanation.

GPT-4 memorizes Codeforces problems from before its training cutoff

For benchmarks other than programming, Professor Narayanan said: "We don't know how to separate the problems by time period in a clear way, so we think it is difficult for OpenAI to avoid data contamination. For the same reason, we cannot run experiments to test how performance changes with date."

However, the question can be approached from the other side: if the model is relying on memorization, then GPT must be highly sensitive to how a question is worded.

In February, Melanie Mitchell, a professor at the Santa Fe Institute, gave an example using an MBA exam question. Slightly changing a few details was enough to fool ChatGPT (GPT-3.5), whereas the same change would not fool a person.

More detailed experiments like this would be valuable.

Because of OpenAI’s lack of transparency, Professor Narayanan cannot say with certainty that data contamination is the problem. What is certain is that OpenAI’s method of detecting contamination is sloppy:

“We use a substring matching method to measure cross-contamination between the evaluation data set and the pre-training data. Both the evaluation and training data are processed by removing all spaces and symbols, leaving only characters (including numbers). For each evaluation example, we randomly select three substrings of 50 characters (if the example is shorter than 50 characters, the entire example is used). A match is identified if any of the three sampled evaluation substrings is a substring of a processed training example. This yields a list of contaminated examples. We discard these examples and rerun to obtain the uncontaminated score.”
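The procedure quoted above can be approximated in a short Python sketch. This is a reading of the description, not OpenAI's code: the function names are hypothetical, "characters (including numbers)" is interpreted here as anything for which `str.isalnum()` is true, and the toy problems are invented for illustration.

```python
import random


def normalize(text):
    """Drop spaces and symbols, keeping only letters and digits."""
    return "".join(ch for ch in text if ch.isalnum())


def sample_substrings(example, length=50, k=3, rng=random):
    """Pick k random substrings of the given length from a normalized example."""
    if len(example) <= length:
        return [example]  # short examples are used whole
    starts = [rng.randrange(len(example) - length + 1) for _ in range(k)]
    return [example[s:s + length] for s in starts]


def is_contaminated(eval_example, training_docs, length=50, k=3, rng=random):
    """True if any sampled substring occurs inside any normalized training doc."""
    probes = [p for p in sample_substrings(normalize(eval_example), length, k, rng) if p]
    processed = [normalize(doc) for doc in training_docs]
    return any(probe in doc for probe in probes for doc in processed)


# Invented toy data, for illustration only.
training_docs = [
    "Alice has 3 apples and gives 2 to Bob. "
    "How many apples does Alice have left after the trade?"
]
verbatim = ("Alice has 3 apples, and gives 2 to Bob!  "
            "How many apples does Alice have left after the trade??")
reworded = ("Carol has 5 apples and gives 1 to Dave. "
            "How many apples does Carol have left after the trade?")

hit_verbatim = is_contaminated(verbatim, training_docs)
hit_reworded = is_contaminated(reworded, training_docs)
print(hit_verbatim, hit_reworded)
```

In this toy case the copy that differs only in spacing and punctuation is flagged, while the version with different names and numbers is not, because no 50-character window of it survives unchanged in the training text.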

This method does not hold up to scrutiny.

If a test problem appears in the training set with only the names and numbers changed, it goes undetected. More reliable methods are available, such as measuring embedding distance.

But if OpenAI were to use embedding distance, how much similarity counts as too similar? There is no objective answer to this question.
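The threshold problem can be made concrete with a small sketch. To stay self-contained it does not use a learned embedding model; character-trigram counts stand in for the embedding (a deliberate simplification, since a real pipeline would presumably use a sentence-embedding model), and the problems and threshold values are invented for illustration.

```python
import math
from collections import Counter


def trigram_vector(text):
    """Toy stand-in for an embedding: counts of character trigrams."""
    cleaned = "".join(ch.lower() for ch in text if ch.isalnum())
    return Counter(cleaned[i:i + 3] for i in range(len(cleaned) - 2))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Invented toy problems, for illustration only.
train = "Alice has 3 apples and gives 2 to Bob. How many apples does Alice have left?"
reworded = "Carol has 5 apples and gives 1 to Dave. How many apples does Carol have left?"
unrelated = "Compute the shortest path between two nodes in a weighted graph."

sim_reworded = cosine_similarity(trigram_vector(train), trigram_vector(reworded))
sim_unrelated = cosine_similarity(trigram_vector(train), trigram_vector(unrelated))

# Whether the reworded problem counts as contaminated depends on the threshold.
flags = {threshold: sim_reworded >= threshold for threshold in (0.4, 0.8)}
print(round(sim_reworded, 3), round(sim_unrelated, 3), flags)
```

The reworded problem scores well above the unrelated one, yet it is flagged under the looser threshold and passes under the stricter one, so the contamination verdict ends up hinging on a cutoff that someone has to choose.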

So even something that seems as simple as measuring performance on a multiple-choice standardized test involves a lot of subjectivity.

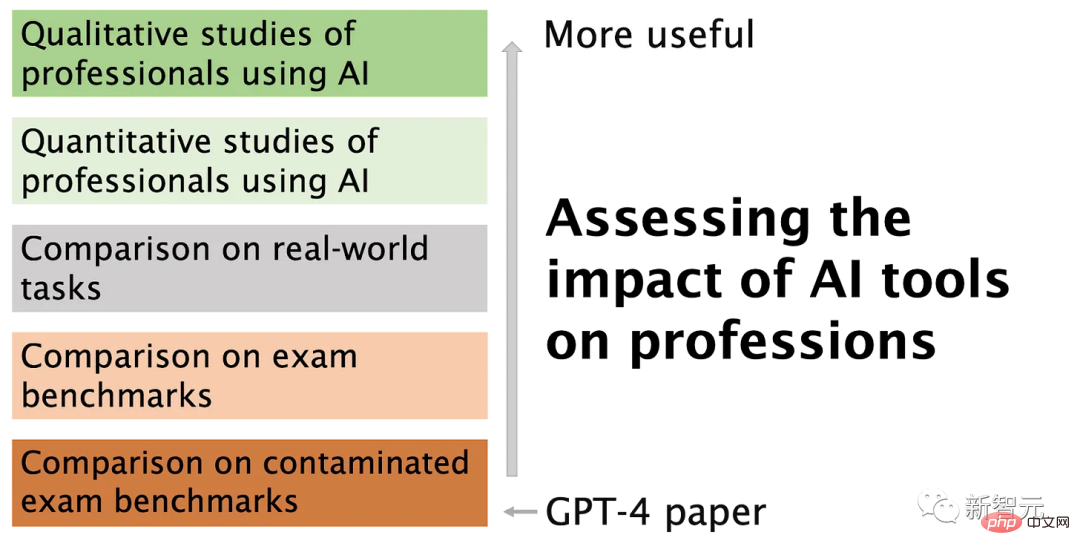

Problem 2: Professional exams are not a valid way to compare human and robot abilities

Memorization is a spectrum: even if a language model has not seen the exact problem in its training set, its training corpus is so large that it has inevitably seen many very similar examples.

This means it can get by without deeper reasoning. The benchmark results therefore give us no evidence that language models are acquiring the deep reasoning skills that human test takers need.

For some practical tasks, GPT-4's shallow reasoning may be enough, but that is not always the case.

Benchmarks are widely used to compare large models, and many have criticized them for reducing a multidimensional evaluation to a single number.

Unfortunately, OpenAI chose to rely heavily on such tests in its evaluation of GPT-4, while taking inadequate measures against data contamination.
