Guide to using the ChatGPT plug-in to unlock a new Internet experience

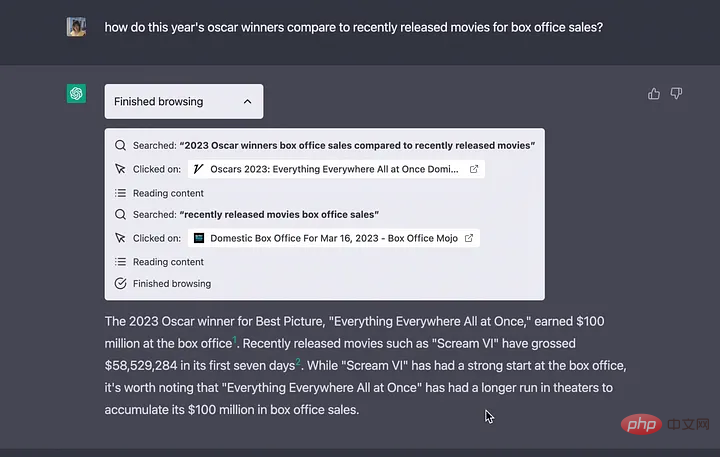

Recently, OpenAI released a new feature for ChatGPT: a plug-in system. ChatGPT can now be extended with new functionality and perform new tasks, such as:

- Retrieve real-time information: for example, sports scores, stock prices, the latest news, etc.

- Retrieve knowledge base information: for example, company documents, personal notes, etc.

- Perform actions on behalf of the user: for example, booking a flight, ordering food, etc.

ChatGPT’s knowledge base was trained on data up to September 2021. With these plugins, ChatGPT can now search the web for the latest answers, which removes the limitation of relying solely on its knowledge base.

Create custom plugins

OpenAI also enables any developer to create their own plugins. Although developers currently need to join a waiting list (https://openai.com/waitlist/plugins), the files to create plugins are already available.

More information about the plug-in process can be found on this page (https://platform.openai.com/docs/plugins/introduction).

Example code can be found on this page (https://platform.openai.com/docs/plugins/examples).
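As a rough illustration of what those files look like, the sketch below builds a minimal plugin manifest (the `ai-plugin.json` file described in the OpenAI plugin documentation) as a Python dictionary and serializes it to JSON. The plugin name, descriptions, URLs, and e-mail address are hypothetical placeholders, and the field list reflects the documentation as it stood when plugins were announced, so it should be checked against the current docs before use.

```python
import json

# Hypothetical manifest for an imaginary to-do list plugin.
# Every name and URL below is a placeholder, not a real service.
manifest = {
    "schema_version": "v1",
    "name_for_human": "TODO Plugin",
    "name_for_model": "todo",
    "description_for_human": "Manage your to-do list.",
    # The model reads this description to decide when to call the plugin.
    "description_for_model": "Plugin for adding, listing, and deleting items in the user's to-do list.",
    "auth": {"type": "none"},
    # Points at an OpenAPI specification describing the third-party API.
    "api": {"type": "openapi", "url": "https://example.com/openapi.yaml"},
    "logo_url": "https://example.com/logo.png",
    "contact_email": "support@example.com",
    "legal_info_url": "https://example.com/legal",
}

manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

The `description_for_model` field matters most for the discussion that follows, because it is the text the language model sees when deciding whether the plugin is relevant.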

The documentation only shows how the integration between a third-party API and ChatGPT works. This article explores the inner workings of that integration by asking:

"How do large language models perform operations without receiving relevant training?"

LangChain Introduction

LangChain is a framework for creating chatbots, generative question answering, summaries, and more.

LangChain is a tool developed by Harrison Chase (hwchase17) in 2022 to help developers integrate third-party applications with large language models (LLMs).

The following example illustrates how it works:

import os

os.environ["SERPAPI_API_KEY"] = "

os. environ["OPENAI_API_KEY"] = "

from langchain.agents import load_tools

from langchain.agents import initialize_agent

from langchain.llms import OpenAI

# First, load the language model you want to use to control the agent

llm = OpenAI(temperature=0)

# Next, load some tools to use. Note that the llm-math tool uses LLM, so you need to pass it in

tools = load_tools(["serpapi", "llm-math"], llm=llm)

# Finally, use the tools , language model and the agent type you want to use to initialize the agent

agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

# Test now

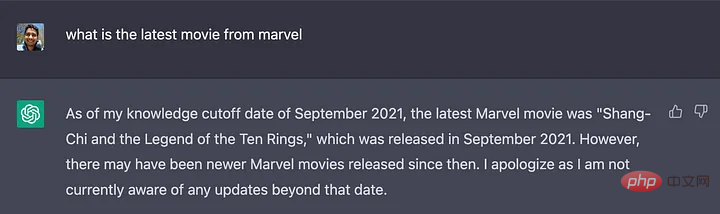

agent.run("Who is Olivia Wilde's boyfriend? What is his current age raised to the 0.23 power?")

This example has three main parts:

- LLM: The LLM is the core component of LangChain; it helps the agent understand natural language. This example uses OpenAI’s default model. According to the source code (https://github.com/hwchase17/langchain/blob/master/langchain/llms/openai.py#L133), the default model is text-davinci-003.

- Agent: The agent uses the LLM to decide which actions to take and in what order. An action can either use a tool and observe its output, or return a response to the user.

  This example uses the zero-shot-react-description agent. Its documentation states that "this agent uses the ReAct framework and decides which tool to use based entirely on the tool's description." This information will be used later.

- Tools: Functions that agents can use to interact with the world. This example uses two tools:

  - serpapi: a wrapper around the https://serpapi.com/ API, used for browsing the web.

  - llm-math: enables the agent to answer math-related questions in prompts, such as "What is his current age raised to the 0.23 power?".
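To make the interplay of these three parts concrete, here is a minimal sketch of a ReAct-style loop. It is not LangChain's implementation: the tools are offline stand-ins for serpapi and llm-math, the prompt wording is invented for illustration, and the "LLM" is a scripted function that replays canned responses so the example runs without API keys.

```python
import re
from typing import Callable, Dict, List


def toy_search(query: str) -> str:
    # Stand-in for serpapi: always returns the same canned snippet.
    return "Harry Styles is 29 years old."


def toy_calculator(expression: str) -> str:
    # Stand-in for llm-math: only understands "base**exponent".
    base, exponent = expression.split("**")
    return str(round(float(base) ** float(exponent), 2))


TOOLS: Dict[str, dict] = {
    "Search": {"description": "useful for questions about current events", "func": toy_search},
    "Calculator": {"description": "useful for math questions", "func": toy_calculator},
}


def build_prompt(question: str, scratchpad: str) -> str:
    # The agent only knows the tools through their descriptions.
    tool_lines = "\n".join(f"{name}: {tool['description']}" for name, tool in TOOLS.items())
    return (
        f"Answer the question using these tools:\n{tool_lines}\n\n"
        f"Question: {question}\nThought:{scratchpad}"
    )


def make_scripted_llm(responses: List[str]) -> Callable[[str], str]:
    # Fake LLM: ignores the prompt and replays the responses in order.
    remaining = iter(responses)
    return lambda prompt: next(remaining)


def run_agent(question: str, llm: Callable[[str], str], trace: List[str], max_steps: int = 5) -> str:
    scratchpad = ""
    for _ in range(max_steps):
        output = llm(build_prompt(question, scratchpad))
        if "Final Answer:" in output:
            return output.split("Final Answer:")[-1].strip()
        match = re.search(r"Action: (.*)\nAction Input: (.*)", output)
        if match is None:
            raise ValueError(f"Could not parse LLM output: {output!r}")
        tool_name, tool_input = match.group(1).strip(), match.group(2).strip()
        observation = TOOLS[tool_name]["func"](tool_input)
        trace.append(f"{tool_name}({tool_input}) -> {observation}")
        # Feed the observation back so the next LLM call can build on it.
        scratchpad += f" {output}\nObservation: {observation}\nThought:"
    raise RuntimeError("Agent did not finish within max_steps")


llm = make_scripted_llm([
    "I need to find his age.\nAction: Search\nAction Input: Harry Styles age",
    "I need to raise 29 to the 0.23 power.\nAction: Calculator\nAction Input: 29**0.23",
    "I now know the final answer.\nFinal Answer: Harry Styles; 29^0.23 is about 2.17",
])
trace: List[str] = []
answer = run_agent("Who is Olivia Wilde's boyfriend? What is his age raised to the 0.23 power?", llm, trace)
print(answer)
print(trace)
```

The loop alternates between asking the model for the next step and executing the tool it names, which is the essence of the ReAct pattern. A real agent replaces the scripted function with an actual model call.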

When the script is run, the agent performs several steps: it searches for who Olivia Wilde's boyfriend is, extracts his name, searches for Harry Styles's age, and uses the llm-math tool to calculate 29^0.23, which is approximately 2.17.
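The final arithmetic step is easy to verify by hand. The short check below assumes the age of 29 returned by the search step in this run:

```python
age = 29  # age found by the search step in the run described above
result = age ** 0.23
print(round(result, 2))  # prints 2.17
```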

The biggest advantage of LangChain is that it does not rely on a single provider, as documented (https://python.langchain.com/en/latest/modules/llms/integrations.html).

Why can LangChain provide powerful functions for the ChatGPT plug-in system?

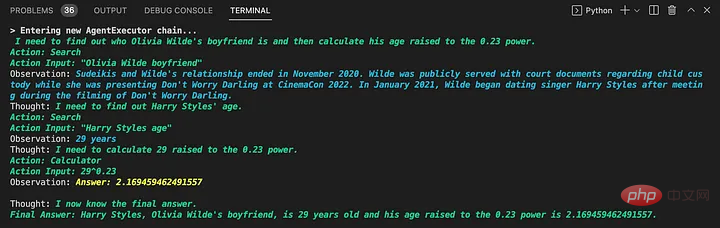

On March 21, Microsoft, OpenAI’s strongest partner, released MM-REACT, which demonstrates multi-modal reasoning and action with ChatGPT (https://github.com/microsoft/MM-REACT).

When looking at the capabilities of this "system paradigm", you can see that each example involves an interaction between the language model and some external application.

The sample code provided (https://github.com/microsoft/MM-REACT/blob/main/sample.py) shows that the interaction between the model and the tools is implemented with LangChain. The README.md file (https://github.com/microsoft/MM-REACT/blob/main/README.md) also states that "MM-REACT's code is based on langchain".

Combining this evidence with the fact that the ChatGPT plug-in documentation mentions that “plug-in descriptions, API requests, and API responses are all inserted into conversations with ChatGPT,” it can be assumed that the plug-in system adds the different plug-ins as tools for the agent, which in this case is ChatGPT.

It is also possible that OpenAI turned ChatGPT into an agent of type zero-shot-react-description to support these plug-ins (the same type used in the previous example). Because the description of the API is inserted into the conversation, this matches what the agent expects, as described in the LangChain documentation for this agent type.
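The hypothesis above can be sketched in a few lines. The code below is purely illustrative and is not how OpenAI implements plug-ins: the plug-in names, descriptions, and system message wording are all hypothetical. It only shows how plug-in descriptions could be rendered into the conversation so that a description-driven agent can choose among them.

```python
from typing import Dict, List

# Hypothetical plug-in descriptions, similar in spirit to the text a plug-in
# author would supply for the model to read.
plugins = [
    {"name": "todo", "description": "Add, list, and delete items in the user's to-do list."},
    {"name": "weather", "description": "Look up the current weather for a city."},
]


def build_messages(plugins: List[Dict[str, str]], user_message: str) -> List[Dict[str, str]]:
    # Render each plug-in as one line: the model sees only the name and description.
    tool_lines = "\n".join(f"- {p['name']}: {p['description']}" for p in plugins)
    system = (
        "You can call the following plug-ins when they help answer the user:\n"
        + tool_lines
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_message},
    ]


messages = build_messages(plugins, "What's on my to-do list today?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Under this assumption, adding a plug-in requires no retraining: the model learns that the tool exists from the description placed in its context.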

Conclusion

Although the plug-in system is not yet open to all users, the published documentation and MM-REACT already offer a preview of the powerful capabilities of the ChatGPT plug-in system.

The above is the detailed content of Guide to using the ChatGPT plug-in to unlock a new Internet experience. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1393

1393

52

52

1207

1207

24

24

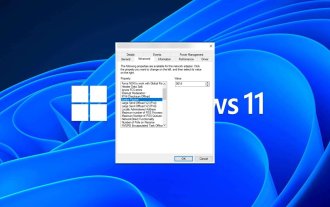

How to adjust MTU size on Windows 11

Aug 25, 2023 am 11:21 AM

How to adjust MTU size on Windows 11

Aug 25, 2023 am 11:21 AM

If you're suddenly experiencing a slow internet connection on Windows 11 and you've tried every trick in the book, it might have nothing to do with your network and everything to do with your maximum transmission unit (MTU). Problems may occur if your system sends or receives data with the wrong MTU size. In this post, we will learn how to change MTU size on Windows 11 for smooth and uninterrupted internet connection. What is the default MTU size in Windows 11? The default MTU size in Windows 11 is 1500, which is the maximum allowed. MTU stands for maximum transmission unit. This is the maximum packet size that can be sent or received on the network. every support network

![WLAN expansion module has stopped [fix]](https://img.php.cn/upload/article/000/465/014/170832352052603.gif?x-oss-process=image/resize,m_fill,h_207,w_330) WLAN expansion module has stopped [fix]

Feb 19, 2024 pm 02:18 PM

WLAN expansion module has stopped [fix]

Feb 19, 2024 pm 02:18 PM

If there is a problem with the WLAN expansion module on your Windows computer, it may cause you to be disconnected from the Internet. This situation is often frustrating, but fortunately, this article provides some simple suggestions that can help you solve this problem and get your wireless connection working properly again. Fix WLAN Extensibility Module Has Stopped If the WLAN Extensibility Module has stopped working on your Windows computer, follow these suggestions to fix it: Run the Network and Internet Troubleshooter to disable and re-enable wireless network connections Restart the WLAN Autoconfiguration Service Modify Power Options Modify Advanced Power Settings Reinstall Network Adapter Driver Run Some Network Commands Now, let’s look at it in detail

How to solve win11 DNS server error

Jan 10, 2024 pm 09:02 PM

How to solve win11 DNS server error

Jan 10, 2024 pm 09:02 PM

We need to use the correct DNS when connecting to the Internet to access the Internet. In the same way, if we use the wrong dns settings, it will prompt a dns server error. At this time, we can try to solve the problem by selecting to automatically obtain dns in the network settings. Let’s take a look at the specific solutions. How to solve win11 network dns server error. Method 1: Reset DNS 1. First, click Start in the taskbar to enter, find and click the "Settings" icon button. 2. Then click the "Network & Internet" option command in the left column. 3. Then find the "Ethernet" option on the right and click to enter. 4. After that, click "Edit" in the DNS server assignment, and finally set DNS to "Automatic (D

Fix 'Failed Network Error' downloads on Chrome, Google Drive and Photos!

Oct 27, 2023 pm 11:13 PM

Fix 'Failed Network Error' downloads on Chrome, Google Drive and Photos!

Oct 27, 2023 pm 11:13 PM

What is the "Network error download failed" issue? Before we delve into the solutions, let’s first understand what the “Network Error Download Failed” issue means. This error usually occurs when the network connection is interrupted during downloading. It can happen due to various reasons such as weak internet connection, network congestion or server issues. When this error occurs, the download will stop and an error message will be displayed. How to fix failed download with network error? Facing “Network Error Download Failed” can become a hindrance while accessing or downloading necessary files. Whether you are using browsers like Chrome or platforms like Google Drive and Google Photos, this error will pop up causing inconvenience. Below are points to help you navigate and resolve this issue

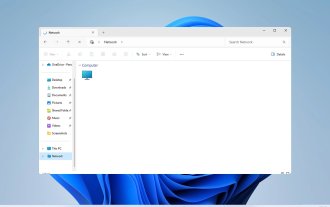

Fix: WD My Cloud doesn't show up on the network in Windows 11

Oct 02, 2023 pm 11:21 PM

Fix: WD My Cloud doesn't show up on the network in Windows 11

Oct 02, 2023 pm 11:21 PM

If WDMyCloud is not showing up on the network in Windows 11, this can be a big problem, especially if you store backups or other important files in it. This can be a big problem for users who frequently need to access network storage, so in today's guide, we'll show you how to fix this problem permanently. Why doesn't WDMyCloud show up on Windows 11 network? Your MyCloud device, network adapter, or internet connection is not configured correctly. The SMB function is not installed on the computer. A temporary glitch in Winsock can sometimes cause this problem. What should I do if my cloud doesn't show up on the network? Before we start fixing the problem, you can perform some preliminary checks:

What should I do if the earth is displayed in the lower right corner of Windows 10 when I cannot access the Internet? Various solutions to the problem that the Earth cannot access the Internet in Win10

Feb 29, 2024 am 09:52 AM

What should I do if the earth is displayed in the lower right corner of Windows 10 when I cannot access the Internet? Various solutions to the problem that the Earth cannot access the Internet in Win10

Feb 29, 2024 am 09:52 AM

This article will introduce the solution to the problem that the globe symbol is displayed on the Win10 system network but cannot access the Internet. The article will provide detailed steps to help readers solve the problem of Win10 network showing that the earth cannot access the Internet. Method 1: Restart directly. First check whether the network cable is not plugged in properly and whether the broadband is in arrears. The router or optical modem may be stuck. In this case, you need to restart the router or optical modem. If there are no important things being done on the computer, you can restart the computer directly. Most minor problems can be quickly solved by restarting the computer. If it is determined that the broadband is not in arrears and the network is normal, that is another matter. Method 2: 1. Press the [Win] key, or click [Start Menu] in the lower left corner. In the menu item that opens, click the gear icon above the power button. This is [Settings].

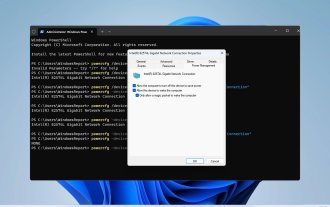

How to enable/disable Wake on LAN in Windows 11

Sep 06, 2023 pm 02:49 PM

How to enable/disable Wake on LAN in Windows 11

Sep 06, 2023 pm 02:49 PM

Wake on LAN is a network feature on Windows 11 that allows you to remotely wake your computer from hibernation or sleep mode. While casual users don't use it often, this feature is useful for network administrators and power users using wired networks, and today we'll show you how to set it up. How do I know if my computer supports Wake on LAN? To use this feature, your computer needs the following: The PC needs to be connected to an ATX power supply so that you can wake it from sleep mode remotely. Access control lists need to be created and added to all routers in the network. The network card needs to support the wake-up-on-LAN function. For this feature to work, both computers need to be on the same network. Although most Ethernet adapters use

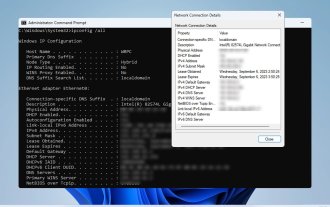

How to check network connection details and status on Windows 11

Sep 11, 2023 pm 02:17 PM

How to check network connection details and status on Windows 11

Sep 11, 2023 pm 02:17 PM

In order to make sure your network connection is working properly or to fix the problem, sometimes you need to check the network connection details on Windows 11. By doing this, you can view a variety of information including your IP address, MAC address, link speed, driver version, and more, and in this guide, we'll show you how to do that. How to find network connection details on Windows 11? 1. Use the "Settings" app and press the + key to open Windows Settings. WindowsI Next, navigate to Network & Internet in the left pane and select your network type. In our case, this is Ethernet. If you are using a wireless network, select a Wi-Fi network instead. At the bottom of the screen you should see