Breaking NAS bottlenecks, new method AIO-P predicts architecture performance across tasks


Huawei HiSilicon Canada Research Institute and the University of Alberta jointly launched a neural network performance prediction framework based on pre-training and knowledge injection.

Evaluating the performance of a neural network (precision, recall, PSNR, etc.) requires substantial time and compute, and is the main bottleneck of neural architecture search (NAS). Early NAS methods had to train every candidate architecture from scratch. In recent years, neural performance predictors have attracted growing attention as an efficient alternative for performance evaluation.

However, current predictors are limited in scope: they can only model architectures from a specific search space and can only predict the performance of new architectures on a specific task. For example, if the training samples contain only classification networks and their accuracies, the trained predictor can only evaluate new architectures on image classification.

To break this boundary and give the predictor cross-task and cross-dataset generalization, so that it can predict the performance of a given architecture on multiple tasks, Huawei HiSilicon Canada Research Institute and the University of Alberta jointly introduced a neural network performance prediction framework based on pre-training and knowledge injection. For neural architecture search, the framework can quickly evaluate networks of different structures and types on many kinds of CV tasks, such as classification, detection, and segmentation. The paper has been accepted by AAAI 2023.

- Paper link: https://arxiv.org/abs/2211.17228

- Code link: https://github.com/Ascend-Research/AIO-P

The AIO-P (All-in-One Predictors) approach aims to extend the scope of neural predictors to computer vision tasks beyond classification. AIO-P uses K-Adapters to inject task-related knowledge into the predictor model, and designs a label scaling mechanism based on FLOPs (floating-point operations) to adapt to different performance metrics and distributions. AIO-P trains K-Adapters with a pseudo-labeling scheme that generates new training samples in just minutes. Experimental results show that AIO-P has strong performance prediction capabilities and achieves excellent MAE and SRCC results on several computer vision tasks. In addition, AIO-P can transfer directly to never-before-seen network structures and predict their performance, and it can work with NAS to reduce the computational cost of existing networks without reducing performance.

Method Introduction

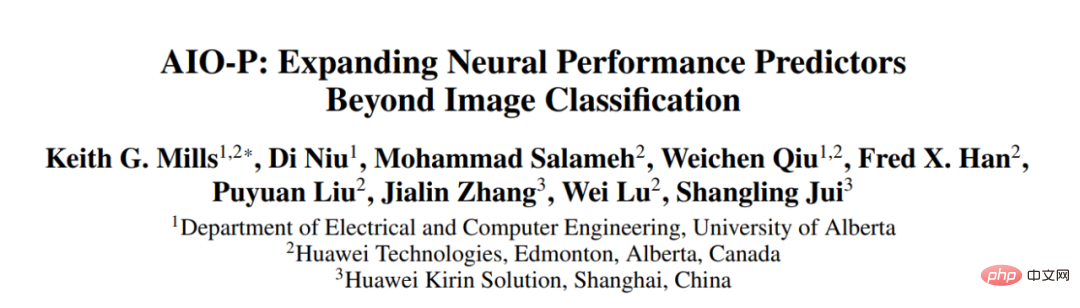

AIO-P is a general network performance predictor that generalizes to multiple tasks. It achieves performance prediction across tasks and search spaces through predictor pre-training and domain-specific knowledge injection. AIO-P uses K-Adapters to inject task-related knowledge into the predictor, and relies on a common computational graph (CG) format to represent a network structure, which lets it support networks from different search spaces and tasks, as shown in Figure 1.

Figure 1. How AIO-P represents the network structure used for different tasks

In addition, AIO-P uses a pseudo-labeling mechanism to quickly generate new training samples for the K-Adapters. To bridge the gap between the ranges of performance metrics on different tasks, AIO-P proposes a label scaling method based on FLOPs to achieve cross-task performance modeling. Extensive experimental results show that AIO-P makes accurate performance predictions on a variety of CV tasks, such as pose estimation and segmentation, with no training samples from the target task or with only a small amount of fine-tuning. AIO-P can also correctly rank the performance of never-before-seen network structures. Combined with a search algorithm, it was used to optimize Huawei's facial recognition network, keeping its performance unchanged while reducing FLOPs by more than 13.5%. The paper has been accepted by AAAI-23 and the code has been open sourced on GitHub.

Computer vision networks usually consist of a "backbone" that performs feature extraction and a "head" that uses the extracted features to make predictions. The backbone is usually based on a known network structure (ResNet, Inception, MobileNet, ViT, UNet), while the head is designed for a given task, such as classification, pose estimation, or segmentation. Traditional NAS solutions manually customize the search space based on the structure of the backbone. For example, if the backbone is MobileNetV3, the search space may include the number of MBConv blocks, the parameters of each MBConv (kernel size, expansion), the number of channels, and so on. However, such a customized search space is not universal. A backbone based on ResNet cannot be optimized with the existing NAS framework; the search space has to be redesigned.

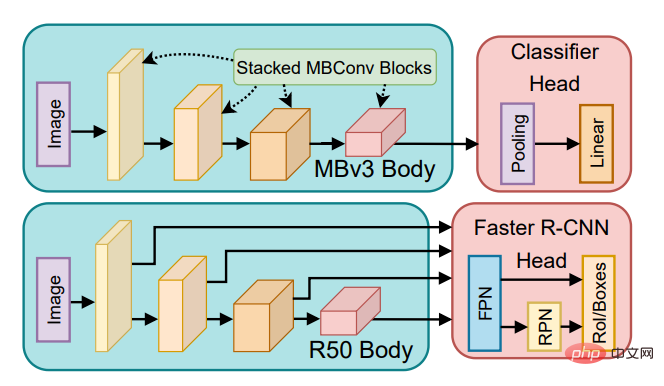

To solve this problem, AIO-P represents network structures at the computational graph level, which gives a unified representation of any network structure. As shown in Figure 2, the computational graph format allows AIO-P to encode the head and backbone together to represent the entire network. This also allows AIO-P to predict the performance of networks from different search spaces (such as MobileNets and ResNets) on various tasks.

Figure 2. Representation of the Squeeze-and-Excite module in MobileNetV3 at the computational graph level
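To make the idea of a computational-graph-level representation concrete, here is a minimal sketch in Python. It is not AIO-P's actual CG format: the `ComputeGraph` class, the node ids, and the operation names are all hypothetical, chosen only to show how a Squeeze-and-Excite block can be written as primitive operations connected by edges.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeGraph:
    """Hypothetical minimal graph: nodes are primitive ops, edges are tensor flows."""
    ops: dict = field(default_factory=dict)    # node id -> operation name
    edges: list = field(default_factory=list)  # (source id, destination id)

    def add_op(self, node_id, op, inputs=()):
        """Register a node and connect it to the nodes that feed it."""
        self.ops[node_id] = op
        for src in inputs:
            self.edges.append((src, node_id))
        return node_id

    def topological_order(self):
        """Return node ids so that every node comes after its inputs (Kahn's algorithm)."""
        indegree = {node: 0 for node in self.ops}
        for _, dst in self.edges:
            indegree[dst] += 1
        ready = [node for node, deg in indegree.items() if deg == 0]
        order = []
        while ready:
            node = ready.pop(0)
            order.append(node)
            for src, dst in self.edges:
                if src == node:
                    indegree[dst] -= 1
                    if indegree[dst] == 0:
                        ready.append(dst)
        return order


def squeeze_excite_graph():
    """Squeeze-and-Excite written as primitive ops (op names are illustrative)."""
    g = ComputeGraph()
    g.add_op("x", "input")
    g.add_op("pool", "global_avg_pool", ["x"])  # squeeze spatial dimensions
    g.add_op("fc1", "conv1x1", ["pool"])        # reduce channels
    g.add_op("relu", "relu", ["fc1"])
    g.add_op("fc2", "conv1x1", ["relu"])        # restore channels
    g.add_op("gate", "hard_sigmoid", ["fc2"])   # per-channel gate
    g.add_op("scale", "multiply", ["x", "gate"])  # reweight the input features
    return g


graph = squeeze_excite_graph()
print(graph.topological_order())
```

Because a head and a backbone are both just nodes and edges in such a format, any architecture that can be traced into primitive operations can in principle be fed to the same graph-based predictor.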

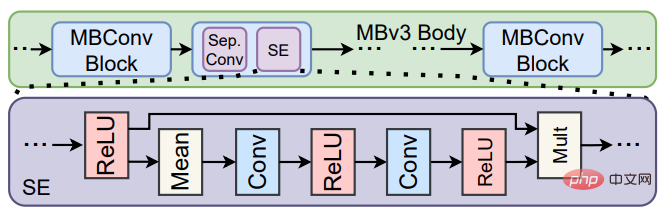

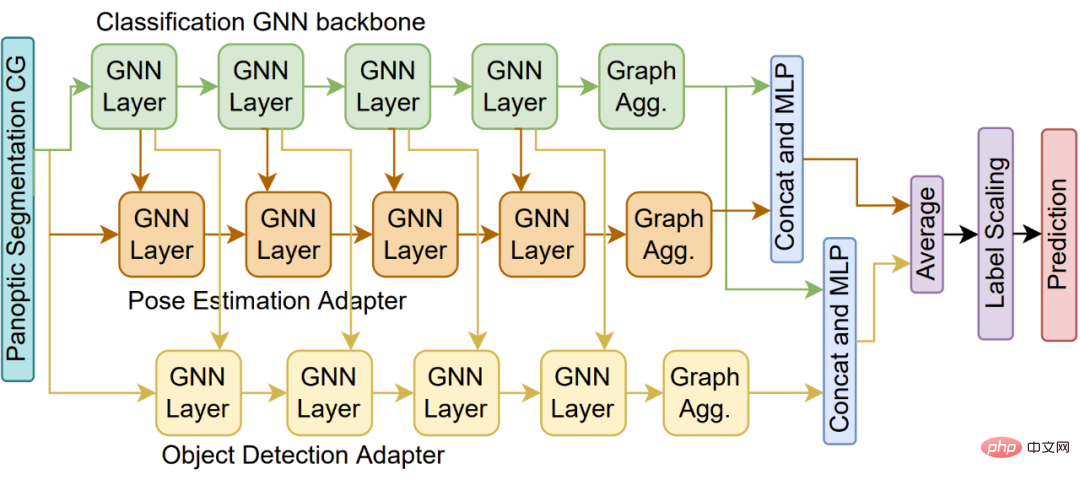

The predictor structure proposed in AIO-P starts from a single GNN regression model (Figure 3, green block), which predicts the performance of image classification networks. To add knowledge of other CV tasks, such as detection or segmentation, the study attaches a K-Adapter (Figure 3, orange block) to the original regression model. The K-Adapter is trained on samples from the new task while the original model weights are frozen. The study therefore trains multiple K-Adapters separately (Figure 4) to add knowledge from multiple tasks.

Figure 3. AIO-P predictor with a K-Adapter

Figure 4. AIO-P predictor with multiple K-Adapters
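The frozen-base-plus-adapter idea can be illustrated with a deliberately simplified sketch. It assumes a linear stand-in for the pre-trained GNN and a linear adapter that reads the base model's intermediate features; `FrozenBase`, `Adapter`, and `train_adapter` are hypothetical names, and the real K-Adapter in AIO-P is a neural module rather than this toy regression.

```python
import random


class FrozenBase:
    """Stand-in for the pre-trained predictor; its weights are never updated."""

    def __init__(self, weights, bias):
        self.weights = tuple(weights)  # tuple makes accidental updates impossible
        self.bias = bias

    def hidden(self, x):
        """Intermediate features that the adapter is allowed to read."""
        return [w * xi for w, xi in zip(self.weights, x)]

    def predict(self, x):
        return sum(self.hidden(x)) + self.bias


class Adapter:
    """Small trainable module that adds a task-specific correction."""

    def __init__(self, dim):
        self.weights = [0.0] * dim
        self.bias = 0.0

    def predict(self, h):
        return sum(w * hi for w, hi in zip(self.weights, h)) + self.bias

    def sgd_step(self, h, error, lr):
        """One gradient step on squared error with respect to adapter parameters only."""
        for i, hi in enumerate(h):
            self.weights[i] -= lr * error * hi
        self.bias -= lr * error


def predict_with_adapter(base, adapter, x):
    """Base prediction plus the adapter's correction computed from base features."""
    return base.predict(x) + adapter.predict(base.hidden(x))


def train_adapter(base, adapter, samples, epochs=200, lr=0.05):
    """Fit the adapter on new-task samples; the base model stays frozen."""
    for _ in range(epochs):
        for x, y in samples:
            error = predict_with_adapter(base, adapter, x) - y
            adapter.sgd_step(base.hidden(x), error, lr)
```

Training one such adapter per task, each against the same frozen base, mirrors the multi-adapter arrangement in Figure 4: adding a task never disturbs what the base model or the other adapters have learned.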

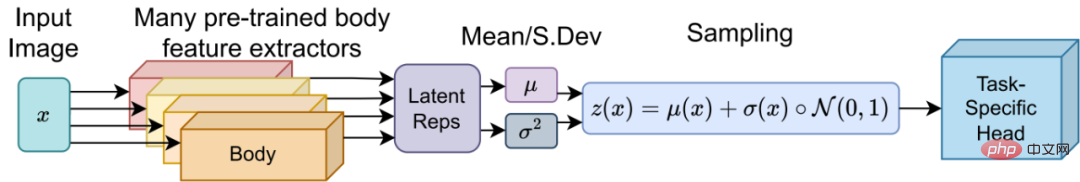

To further reduce the cost of training each K-Adapter, the study proposes a pseudo-labeling technique. It uses a latent sampling scheme to train a "head" model that can be shared between different tasks. The shared head can then be paired with any network backbone in the search space and fine-tuned to generate pseudo-labels in 10-15 minutes (Figure 5).

Figure 5. Training a "head" model that can be shared between different tasks

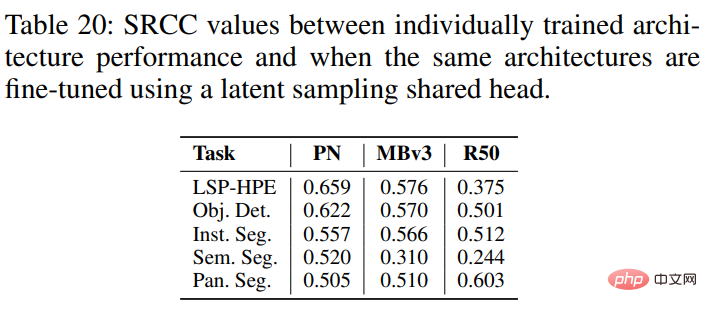

Experiments show that the pseudo-labels obtained with shared heads are positively correlated with the actual performance obtained by training a network from scratch for a day or more, sometimes with a Spearman rank correlation coefficient exceeding 0.5.

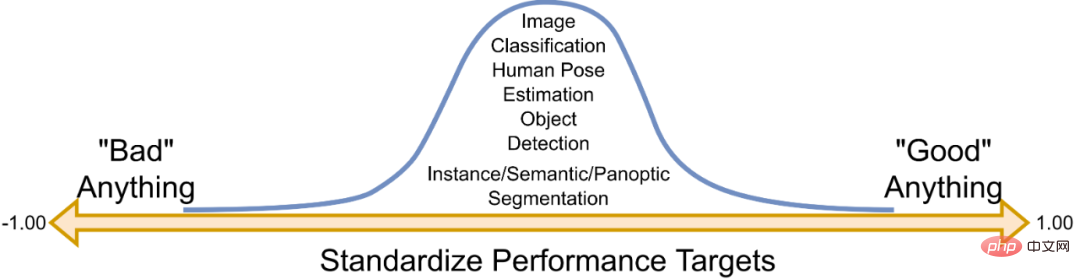

In addition, different tasks have different performance metrics, and each metric usually has its own range. For example, a classification network using a specific backbone may reach a classification accuracy of about 75% on ImageNet, while its mAP on the MS-COCO object detection task may be 30-35%. To account for these different ranges, the study normalizes performance so that it can be interpreted against a normal distribution. In layman's terms, if the predicted value is 0, the network performance is average; if it is above 0, it is a better-than-average network; if it is below 0, it is a worse-than-average network.

Figure 6. How to normalize network performance
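The normalization described above can be sketched as a per-task z-score. This is a minimal illustration that assumes the mean and standard deviation are computed separately for each task; the sample accuracy and mAP values below are made up for the example and are not results from the paper.

```python
from statistics import mean, pstdev


def standardize(scores):
    """Map raw task scores to z-scores: 0 is average, above 0 is better than average."""
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        raise ValueError("all scores are identical; z-scores are undefined")
    return [(s - mu) / sigma for s in scores]


# Hypothetical labels for three architectures on two tasks.
imagenet_accuracy = [73.0, 75.0, 77.0]  # percent top-1 accuracy
coco_map = [30.0, 32.5, 35.0]           # percent mAP

print(standardize(imagenet_accuracy))
print(standardize(coco_map))
```

Both lists map to roughly [-1.22, 0.0, 1.22], so after standardization a single regressor can treat "average on ImageNet" and "average on MS-COCO" as the same target value even though the raw metrics live in very different ranges.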

The FLOPs of a network depend on the model size and the input data, and generally trend upward with performance. This study uses FLOPs-based transformations to enhance the labels that AIO-P learns from.
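The article does not give the formula for the FLOPs transformation, so the sketch below is an assumption made purely for illustration: it divides each raw score by the base-10 logarithm of the network's FLOPs and then standardizes the result. The function name `flops_scaled_labels` and the choice of `log10` are hypothetical; consult the paper for the transformations actually used.

```python
import math
from statistics import mean, pstdev


def flops_scaled_labels(scores, flops):
    """Hypothetical FLOPs-aware label: score per log10(FLOPs), then z-scored."""
    if len(scores) != len(flops):
        raise ValueError("scores and flops must have the same length")
    if any(f <= 1 for f in flops):
        raise ValueError("FLOPs must be greater than 1 so that log10 is positive")
    # Assumed transform: reward networks that reach a score with less compute.
    scaled = [s / math.log10(f) for s, f in zip(scores, flops)]
    mu, sigma = mean(scaled), pstdev(scaled)
    if sigma == 0:
        raise ValueError("scaled labels are identical; z-scores are undefined")
    return [(v - mu) / sigma for v in scaled]
```

Whatever the exact form, the point of such a transformation is that the label carries information about compute cost as well as raw accuracy, which gives the predictor a signal that is comparable across tasks.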

Experiments and Results

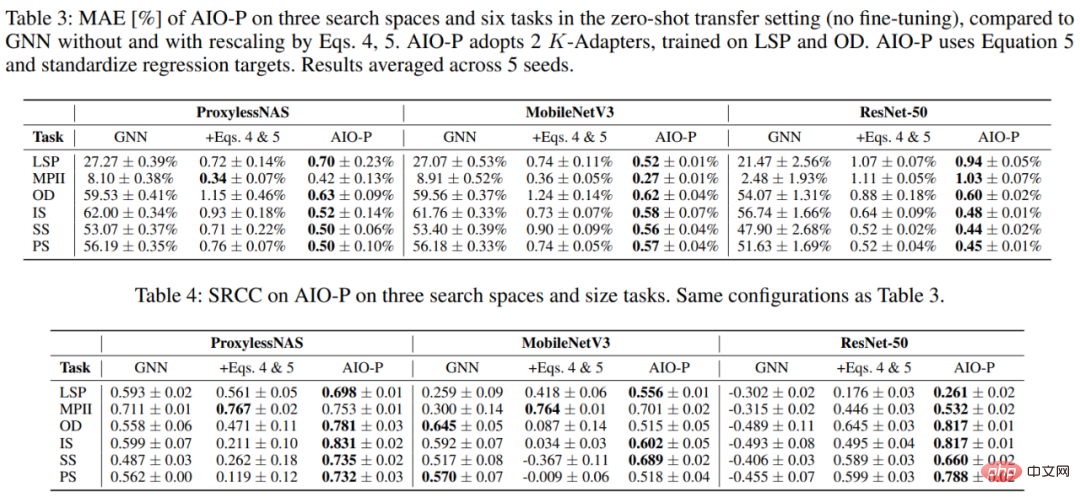

The study first trained AIO-P on human pose estimation and object detection, and then used it to predict the performance of network structures on multiple tasks, including pose estimation (LSP and MPII), object detection (OD), instance segmentation (IS), semantic segmentation (SS), and panoptic segmentation (PS). Even with zero-shot direct transfer, AIO-P predicted the performance of networks from the Once-for-All (OFA) search spaces (ProxylessNAS, MobileNetV3, and ResNet-50) on these tasks with an MAE of less than 1.0% and a rank correlation of over 0.5.
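For readers unfamiliar with the two metrics, the following sketch computes mean absolute error (MAE) and Spearman's rank correlation coefficient (SRCC) in plain Python. It is a generic implementation of the standard definitions, not the evaluation code from the AIO-P repository; ties are handled by assigning average ranks.

```python
import math


def mean_absolute_error(predicted, actual):
    """Average absolute gap between predicted and measured performance."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman_rank_correlation(predicted, actual):
    """Pearson correlation of the two rank vectors (SRCC)."""
    rp, ra = average_ranks(predicted), average_ranks(actual)
    mp, ma = sum(rp) / len(rp), sum(ra) / len(ra)
    cov = sum((p - mp) * (a - ma) for p, a in zip(rp, ra))
    var_p = sum((p - mp) ** 2 for p in rp)
    var_a = sum((a - ma) ** 2 for a in ra)
    if var_p == 0 or var_a == 0:
        raise ValueError("rank correlation is undefined for constant input")
    return cov / math.sqrt(var_p * var_a)
```

MAE measures how close the predicted numbers are to the measured ones, while SRCC only measures whether architectures are ordered correctly. For NAS the ordering often matters more, since the search only needs to know which candidate is better.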

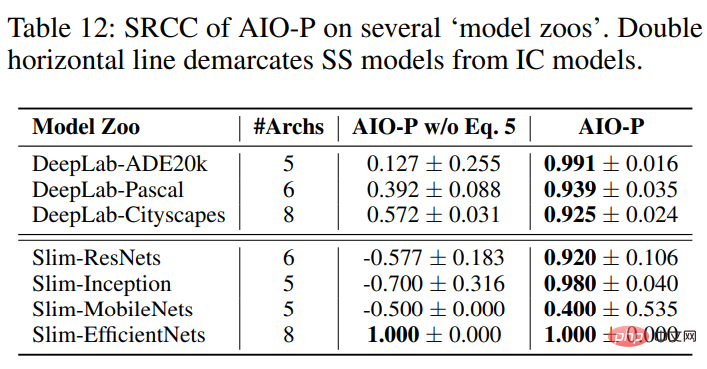

The study also used AIO-P to predict the performance of networks in the TensorFlow-Slim open source model library, such as DeepLab semantic segmentation models, ResNets, Inception nets, MobileNets, and EfficientNets. These network structures may not have appeared in AIO-P's training samples.

AIO-P achieves near-perfect SRCC on the 3 DeepLab semantic segmentation model libraries, positive SRCC on all 4 classification model libraries, and SRCC = 1.0 on the EfficientNet models when using the FLOPs transformation.

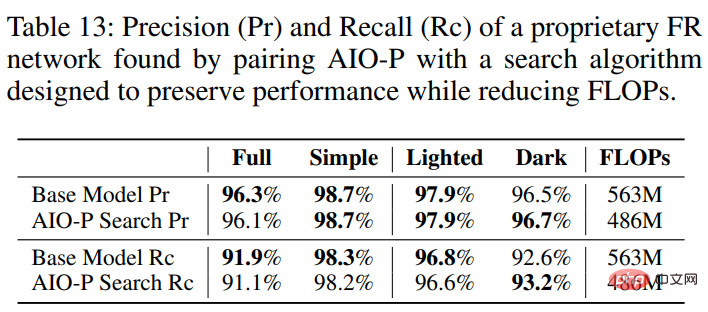

Finally, the core motivation of AIO-P is to pair it with a search algorithm and use it to optimize arbitrary network structures. These can be standalone structures that do not belong to any search space or known model library, or even structures for a task that the predictor has never been trained on. The study uses AIO-P with a random mutation search algorithm to optimize the face recognition (FR) model used on Huawei mobile phones. The results show that AIO-P can reduce the model's FLOPs by more than 13.5% while maintaining performance (precision (Pr) and recall (Rc)).
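The pairing of a predictor with random mutation search can be sketched as follows. Everything here is a toy stand-in: the architecture is encoded as a list of channel widths, `toy_flops` and `toy_predictor` are hypothetical functions invented for the example, and the acceptance rule (never drop below the starting predicted performance, always lower FLOPs) is an assumption about how such a search might be constrained, not the procedure from the paper.

```python
import random


def toy_flops(arch):
    """Toy cost model: product of adjacent layer widths, summed over layers."""
    return sum(a * b for a, b in zip(arch, arch[1:]))


def toy_predictor(arch):
    """Hypothetical predictor: performance saturates once a layer has 32 channels."""
    return sum(min(width, 32) for width in arch) / (32 * len(arch))


def mutate(arch, rng, choices):
    """Copy the architecture and replace one randomly chosen layer width."""
    child = list(arch)
    child[rng.randrange(len(child))] = rng.choice(choices)
    return child


def random_mutation_search(start, predictor, flops_fn, steps=200, seed=0,
                           choices=(16, 24, 32, 48, 64)):
    """Keep a mutation only if predicted performance holds and FLOPs drop."""
    rng = random.Random(seed)
    baseline = predictor(start)
    best = list(start)
    for _ in range(steps):
        candidate = mutate(best, rng, choices)
        keeps_performance = predictor(candidate) >= baseline
        is_cheaper = flops_fn(candidate) < flops_fn(best)
        if keeps_performance and is_cheaper:
            best = candidate
    return best


start = [64, 64, 64, 64]
found = random_mutation_search(start, toy_predictor, toy_flops)
print(found, toy_flops(start), toy_flops(found))
```

The predictor replaces the expensive train-and-evaluate step inside the loop, which is what makes it practical to evaluate hundreds of mutations of a production network.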

Interested readers can read the original text of the paper to learn more research details.
