30 page paper! New work by Yu Shilun's team: AIGC comprehensive survey, development history from GAN to ChatGPT

2022 can be called the first year of generative AI. Recently, the team of Philip S. Yu (Yu Shilun) published a comprehensive survey of AIGC, tracing its development from GAN to ChatGPT.

The year 2022, which has just ended, was undoubtedly the singularity at which generative AI exploded.

Since 2021, generative AI has appeared in Gartner's "Hype Cycle for Artificial Intelligence" for two consecutive years and is regarded as an important AI technology trend for the future.

Paper address: https://arxiv.org/pdf/2303.04226.pdf

This article presents excerpts from the paper.

The singularity has arrived?

In recent years, artificial intelligence-generated content (AIGC, also known as generative AI) has attracted widespread attention outside the computer science community.

The entire society has begun to take great interest in various content generation products developed by large technology companies, such as ChatGPT and DALL-E-2.

AIGC refers to the use of generative artificial intelligence (GAI) technology to generate content and can automatically create a large amount of content in a short time.

ChatGPT is a conversational AI system developed by OpenAI. It can understand human language and respond to it in a meaningful way.

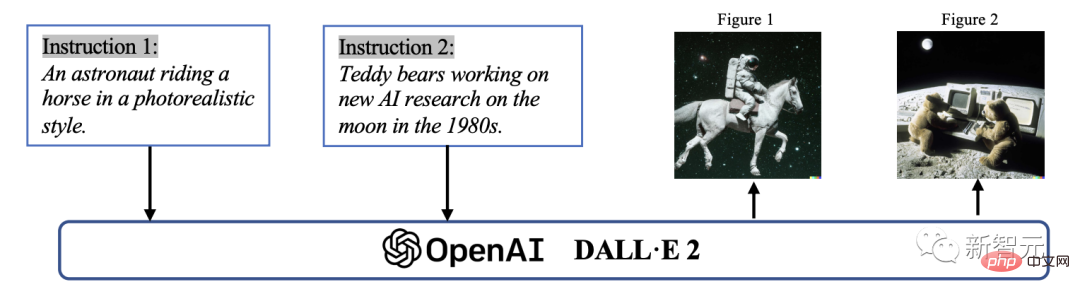

In addition, DALL-E-2 is another state-of-the-art GAI model developed by OpenAI, capable of creating unique high-quality images from text descriptions in minutes.

Example of AIGC in image generation

Technically speaking, AIGC refers to using GAI to generate content that satisfies a given instruction, where the instruction guides the model in completing the task. This generation process usually consists of two steps: extracting intent information from the instruction, and generating content based on the extracted intent.

However, as previous research has shown, this two-step paradigm for GAI models is not entirely new.

Compared with previous work, the core of recent AIGC advances lies in training more sophisticated generative models on larger datasets, using larger foundation model architectures, and having access to extensive computing resources.

For example, the main framework of GPT-3 is the same as that of GPT-2, but the pre-training data grows from WebText (38GB) to CommonCrawl (570GB after filtering), and the foundation model size grows from 1.5B to 175B parameters.

Therefore, GPT-3 has better generalization ability than GPT-2 on various tasks.
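To make the scale-up concrete, the growth ratios implied by these figures can be computed directly. This is only arithmetic on the numbers quoted above; the variable names are illustrative.

```python
# Figures quoted in the text (GPT-2 vs. GPT-3).
gpt2_data_gb, gpt3_data_gb = 38, 570        # pre-training data size in GB
gpt2_params_b, gpt3_params_b = 1.5, 175     # model size in billions of parameters

data_ratio = gpt3_data_gb / gpt2_data_gb
param_ratio = gpt3_params_b / gpt2_params_b

print(f"data grew {data_ratio:.1f}x, parameters grew {param_ratio:.1f}x")
```

The data grows by about 15 times while the parameter count grows by more than 100 times.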

In addition to the benefits of increased data volumes and computing power, researchers are also exploring ways to combine new technologies with GAI algorithms.

For example, ChatGPT uses reinforcement learning from human feedback (RLHF) to determine the most appropriate response to a given instruction, thereby improving the model's reliability and accuracy over time. This approach enables ChatGPT to better understand human preferences in long conversations.

At the same time, in CV, Stable Diffusion proposed by Stability AI in 2022 has also achieved great success in image generation.

Unlike previous methods, generative diffusion models can help generate high-resolution images by controlling the balance between exploration and exploitation, thereby achieving a harmonious combination of diversity in the generated images and similarity to the training data.

By combining these advances, models have made significant progress on AIGC tasks and have been adopted in industries as diverse as art, advertising, and education.

In the near future, AIGC will remain an important area of machine learning research.

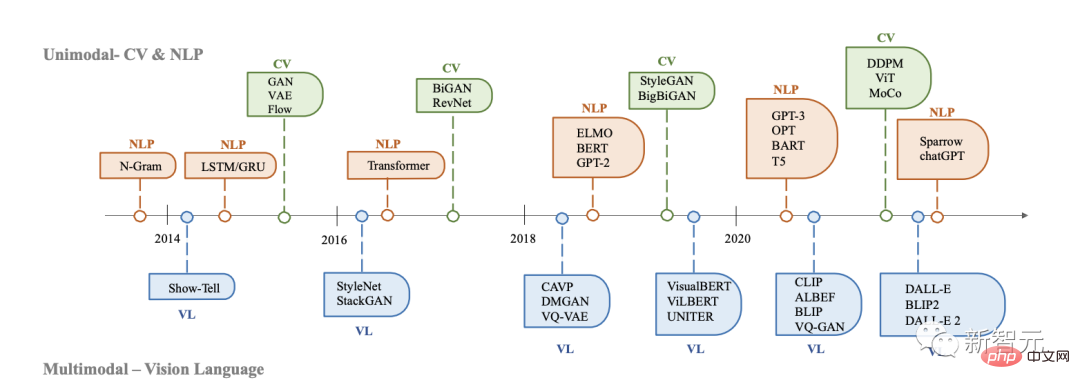

Generally speaking, GAI models can be divided into two types: unimodal models and multimodal models.

Therefore, conducting a comprehensive review of past research and identifying the open problems in this field is crucial. This is the first comprehensive survey of AIGC that summarizes GAI in terms of both core technologies and applications.

Previous surveys mainly introduced GAI from different perspectives, including natural language generation, image generation, and multi-modal machine learning generation. However, these previous works only focused on specific parts of AIGC.

The survey first reviews the basic technologies commonly used in AIGC. It then provides a comprehensive summary of advanced GAI algorithms, covering both unimodal and multimodal generation. It also examines the applications and potential challenges of AIGC.

Finally, the future directions of this field are highlighted. In summary, the main contributions of the paper are as follows:

- To the best of our knowledge, we are the first to provide a formal definition and comprehensive survey of AIGC and AI-augmented generative processes.

- We review the history and basic technologies of AIGC, and comprehensively analyze the latest progress in GAI tasks and models from the perspectives of unimodal and multimodal generation.

- We discuss the main challenges facing AIGC and future research trends.

Generative AI History

Generative models have a long history in artificial intelligence, dating back to the development of Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs) in the 1950s.

These models generated sequential data such as speech and time series. However, it was not until the advent of deep learning that the performance of generative models improved significantly.

In early deep generative models, different domains usually did not overlap much.

The development history of generative AI in CV, NLP and VL

In NLP, the traditional way to generate sentences is to learn the distribution of words with an N-gram language model and then search for the best sequence. However, this method cannot adapt effectively to long sentences.
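As a rough illustration of this idea, the following sketch trains a bigram (N = 2) model on a toy corpus and generates a sentence greedily. The corpus, the `<s>`/`</s>` boundary markers, and the function names are assumptions made for the example, and greedy selection stands in for a more thorough search.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def greedy_generate(counts, max_len=10):
    """Pick the most frequent next word at every step (greedy search)."""
    word, output = "<s>", []
    for _ in range(max_len):
        if not counts[word]:
            break
        word = counts[word].most_common(1)[0][0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

corpus = ["the cat sat", "the cat sat", "the dog ran"]
model = train_bigram(corpus)
print(greedy_generate(model))
```

Because each prediction depends only on the single preceding word, the model has no way to use earlier context, which is one reason such models struggle with long sentences.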

To solve this problem, Recurrent Neural Networks (RNNs) were later introduced to language modeling tasks, allowing relatively long dependencies to be modeled.

These were followed by long short-term memory (LSTM) and gated recurrent units (GRU), which use gating mechanisms to control memory during training. These methods can attend to approximately 200 tokens in a sample, a significant improvement over N-gram language models.

At the same time, in CV, before the emergence of deep learning-based methods, traditional image generation algorithms used techniques such as texture synthesis (PTS) and texture mapping.

These algorithms are based on hand-designed features and have limited capabilities in generating complex and diverse images.

In 2014, Generative Adversarial Networks (GANs) were first proposed and became a milestone in the field of artificial intelligence because of their impressive results in various applications.

Variational autoencoders (VAEs) and other methods, such as generative diffusion models, have also been developed to provide more fine-grained control over the image generation process and enable the generation of high-quality images.

The development of generative models in different fields has followed different paths, but eventually an intersection emerged: the Transformer architecture.

In 2017, the Transformer was introduced for NLP tasks by Vaswani et al. It was later applied to CV and then became the dominant architecture for many generative models in various fields.

In NLP, many well-known large language models, such as BERT and GPT, adopt the Transformer architecture as their main building block, which offers advantages over the previous building blocks, namely LSTM and GRU.

In CV, Vision Transformer (ViT) and Swin Transformer later developed this concept further, combining the Transformer architecture with visual components so that it can be applied to image-based downstream tasks.

In addition to the improvements brought by Transformer to a single modality, this crossover also enables models from different fields to be fused together to perform multi-modal tasks.

An example of a multimodal model is CLIP. CLIP is a joint visual language model. It combines the Transformer architecture with a visual component, allowing training on large amounts of text and image data.

Because it combines visual and linguistic knowledge during pre-training, CLIP can also be used as an image encoder in multimodal prompt-based generation. In short, the emergence of Transformer-based models has revolutionized generative AI and made large-scale training possible.

In recent years, researchers have also begun to introduce new technologies based on these models.

For example, in NLP, to help the model better understand task requirements, people sometimes prefer few-shot prompting, which means including in the prompt a few examples selected from the dataset.
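A minimal sketch of how such a prompt might be assembled is shown below. The `Input:`/`Output:` template, the function name, and the sentiment examples are assumptions chosen for illustration, not a format prescribed by the survey.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Concatenate (input, output) demonstrations ahead of the new query."""
    parts = [instruction] if instruction else []
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # The final block leaves the output empty for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

demos = [("great movie", "positive"), ("boring plot", "negative")]
prompt = build_few_shot_prompt(demos, "lovely soundtrack",
                               instruction="Classify the sentiment.")
print(prompt)
```

The demonstrations show the model the expected input and output pattern without any change to its weights.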

In vision-language research, modality-specific models are combined with self-supervised contrastive learning objectives to provide more powerful representations.

In the future, as AIGC becomes more and more important, more and more technologies will be introduced, which will give this field great vitality.

AIGC Basics

This section introduces the foundation models commonly used in AIGC.

Foundation Model

Transformer

Transformer is the backbone architecture of many state-of-the-art models, such as GPT-3, DALL-E-2, Codex and Gopher.

It was first proposed to solve the limitations of traditional models, such as RNNs, in processing variable-length sequences and context awareness.

The architecture of Transformer is mainly based on a self-attention mechanism, which enables the model to pay attention to different parts of the input sequence.

Transformer consists of an encoder and a decoder. The encoder receives an input sequence and generates a hidden representation, while the decoder receives a hidden representation and generates an output sequence.

Each layer of the encoder and decoder consists of a multi-head attention module and a feed-forward neural network. Multi-head attention is the core component of the Transformer; it learns to assign different weights to tokens according to their relevance.

This information routing approach enables the model to better handle long-term dependencies and, therefore, improves performance in a wide range of NLP tasks.
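The weighting described above can be sketched as scaled dot-product attention for a single head. This is a simplified illustration in plain Python: a real Transformer applies learned query, key, and value projections and runs several heads in parallel, both of which are omitted here, and the toy token vectors are assumptions.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head.

    Each argument is a list of vectors, one per token.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every token to the current one.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
                  for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token embeddings, used directly as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
print(weights[0])
```

Every token can draw on every other token in a single step, regardless of distance, which is what allows long-range dependencies to be handled.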

Another advantage of the Transformer is that its architecture is highly parallelizable and lets the data, rather than built-in inductive biases, shape what the model learns. This makes the Transformer well suited to large-scale pre-training, allowing Transformer-based models to adapt to different downstream tasks.

Pre-trained language model

Since the introduction of the Transformer architecture, it has become a mainstream choice for natural language processing due to its parallelism and learning capabilities.

Generally speaking, these Transformer-based pre-trained language models can be divided into two categories according to their training tasks: autoregressive language models and masked language models.

Given a sentence consisting of multiple tokens, the goal of masked language models, such as BERT and RoBERTa, is to predict the probability of a masked token given its context.

The most notable example of a masked language model is BERT, which is trained with masked language modeling and next sentence prediction tasks. RoBERTa uses the same architecture as BERT and improves on its performance by increasing the amount of pre-training data and incorporating more challenging pre-training objectives.

XLNet, which is also based on BERT, incorporates permutation operations that change the prediction order in each training iteration, enabling the model to learn more information across tokens.

Autoregressive language models, such as GPT-3 and OPT, model the probability of the next token given the previous tokens, and are therefore left-to-right language models. Unlike masked language models, autoregressive language models are better suited to generative tasks.
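The difference between the two objectives can be seen in how training examples are built from one sentence. The sketch below is illustrative only: the `[MASK]` placeholder, the function names, and the fixed mask position are assumptions, and real masked language models mask a random subset of tokens rather than a single chosen one.

```python
def masked_lm_example(tokens, mask_index):
    """Hide one token; the model sees context on both sides of it."""
    inputs = list(tokens)
    target = inputs[mask_index]
    inputs[mask_index] = "[MASK]"
    return inputs, target

def autoregressive_examples(tokens):
    """Pair every left-hand prefix with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

sentence = ["the", "model", "generates", "text"]
print(masked_lm_example(sentence, 2))
for prefix, nxt in autoregressive_examples(sentence):
    print(prefix, "->", nxt)
```

The autoregressive examples only ever condition on what has already been produced, which matches how text is generated one token at a time.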

Reinforcement Learning from Human Feedback

Despite being trained on large-scale data, AIGC may not always output content consistent with user intent.

To make AIGC output better match human preferences, reinforcement learning from human feedback (RLHF) has been applied to model fine-tuning in various applications, such as Sparrow, InstructGPT, and ChatGPT.

Typically, the RLHF pipeline consists of three steps: pre-training, reward learning, and fine-tuning with reinforcement learning.
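For the reward learning step, one commonly used formulation trains a reward model on pairs of responses ranked by humans, using a pairwise preference loss. The text above does not specify the loss, so the following sketch is an assumption for illustration; the reward values are hypothetical scores, not outputs of any real model.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred
    response already receives the higher reward."""
    margin = reward_chosen - reward_rejected
    return math.log(1.0 + math.exp(-margin))

# Hypothetical reward-model scores for two responses to one prompt.
print(pairwise_preference_loss(2.0, 0.5))   # preferred response scored higher
print(pairwise_preference_loss(0.5, 2.0))   # preferred response scored lower
```

Minimizing this loss pushes the reward model to score human-preferred responses higher, and the resulting scores can then serve as the reward signal in the reinforcement learning step.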

Computing

Hardware

In recent years, hardware technology has made significant progress, facilitating the training of large models.

In the past, training a large neural network using a CPU could take days or even weeks. However, with the increase in computing power, this process has been accelerated by several orders of magnitude.

For example, NVIDIA's A100 GPU is 7 times faster than the V100 and 11 times faster than the T4 on BERT-Large inference.

In addition, Google’s Tensor Processing Unit (TPU) is designed for deep learning and provides higher computing performance compared to the A100 GPU.

The accelerated advancement of computing power has significantly improved the efficiency of artificial intelligence model training, providing new possibilities for the development of large and complex models.

Distributed training

Another major improvement is distributed training.

In traditional machine learning, training is usually performed on a machine using a single processor. This approach works well for small datasets and models, but becomes impractical when dealing with large datasets and complex models.

In distributed training, the training tasks are distributed to multiple processors or machines, which greatly improves the training speed of the model.
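One common way to distribute training is data parallelism, where each worker computes gradients on its own shard of the data and the gradients are averaged before every update. The sketch below simulates this sequentially on one machine with a one-parameter model; the model, data, learning rate, and function names are all assumptions made for illustration.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_step(w, shards, lr=0.01):
    """One synchronous step: each worker computes a gradient on its own
    shard, then the gradients are averaged (an all-reduce in practice)."""
    # On real hardware these per-shard gradients are computed in parallel.
    local_grads = [gradient(w, shard) for shard in shards]
    avg_grad = sum(local_grads) / len(local_grads)
    return w - lr * avg_grad

# Toy data for y = 3x, split evenly across two simulated workers.
data = [(x, 3.0 * x) for x in range(1, 9)]
shards = [data[:4], data[4:]]

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards)
print(round(w, 3))
```

With equally sized shards, the averaged gradient equals the gradient over the full dataset, so the result matches single-machine training while the per-step work is split across workers.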

Some companies have also released frameworks that simplify the distributed training process of deep learning stacks. These frameworks provide tools and APIs that allow developers to easily distribute training tasks across multiple processors or machines without having to manage the underlying infrastructure.

Cloud computing

Cloud computing also plays a vital role in training large models. Previously, models were often trained locally. Now, with cloud computing services such as AWS and Azure providing access to powerful computing resources, deep learning researchers and practitioners can create large GPU or TPU clusters required for large model training on demand.

Collectively, these advances make it possible to develop more complex and accurate models, opening up new possibilities in various areas of artificial intelligence research and applications.

About the author

Philip S. Yu is a scholar in the field of computer science, an ACM/IEEE Fellow, and a distinguished professor in the Department of Computer Science at the University of Illinois at Chicago (UIC).

He is world-renowned for his work on the theory and technology of big data mining and management. In response to the challenges that big data poses in scale, speed, and diversity, he has proposed effective, cutting-edge methods and technologies for data mining and management. In particular, he has made groundbreaking contributions to fusing diverse data and to mining data streams, frequent patterns, subspaces, and graphs.

He also made pioneering contributions to parallel and distributed database processing technology, which was applied in the IBM S/390 Parallel Sysplex system, successfully transitioning the traditional IBM mainframe to a parallel microprocessor architecture.
